NoSQL (Not Only SQL) database, departing from relational model, is a hot term nowadays although the name is kind of misleading. The data model (e.g., key-value, document, or graph) is surely very different from the tabular relations in the RDBMS. However, these non-relational data models are actually not new. For example, BerkeleyDB, a key-value store, was initially released in 1994 (20 years ago). In the web and social network era, the motivations of (distributed) NoSQL movement are mainly towards to horizontal scaling and high availability. By playing with the CAP theorem, many NoSQL stores compromise consistency in favor of availability and partition tolerance, which also brings the simplicity of design. Note that a distributed database system doesn’t has to drop consistency. For instance, TeraData and Google’s F1 are ACID-compliant (Atomicity, Consistency, Isolation, Durability). However, it makes systems much more complicated and also imposes high performance overhead.

In this series, I will look into several popular open source NoSQL solutions. This post will cover Apache HBase and Apache Accumulo. Both of them are modeled after Google’s BigTable, implemented in Java, and run on top of Apache Hadoop. Overall, HBase and Accumulo are very similar in architecture and features (especially now HBase 0.98 supports cell-level security that was a unique offer from Accumulo). In what follows, we will mainly discuss the design of HBase and also talk about the differences of Accumulo.

Data Model

In BigTable-like stores, data are stored in tables, which are made of rows and columns. Columns are grouped into column families. A column name is made of its column family prefix and a qualifier. The column family prefix must be composed of printable characters. The column qualifiers can be made of any arbitrary bytes. In HBase, column families must be declared up front at schema definition time whereas new columns can bed added to any column family without pre-announcing them. In contrast, column family are not static in Accumulo and can be created on the fly. The only way to get a complete set of columns that exist for a column family is to process all the rows.

Table row keys are uninterrpreted byte arrays. Rows are lexicographically sorted by row keys. In HBase, the empty byte array is used to denote both the start and end of a table’s namespace while null is used for this purpose in Accumulo.

A cell’s content is an uninterpreted array of bytes. And table cells are versioned. A (row, column, version) tuple exactly specifies a cell. The version is specified using a long integer. Typically this long contains time instances. By default, when doing a get, the cell whose version has the largest value is returned. It is possible to return more than one version with Get.setMaxVersions() or to return versions other than the latest by Get.setTimeRange() (here we use HBase Get class as an example). Without specifiying the version, Put always creates a new version of a cell with the server’s currentTimeMillis. But the user may specify the version on a per-column level. The user-provided version may be a time in the past or the future, or a non-time purpose long value. To overwrite an existing value, an exact version should be provided. Delete can happen on a specific version of a cell or all versions. To save space, HBase also cleans up old or expired versions. To declare how much data to retain, one may define the number of versions or the time to live (TTL).

Deletes work by creating tombstone markers. Once a tombstone marker is set, the “deleted” cells become effectively invisible for Get and Scan operations but are not immediately removed from store files. There is a snag with the tombstone approach, namely “deletes mask puts”. Once a tombstone marker is set, even puts after the delete will be masked by the delete tombstone. Performing the put will not fail. However when you do a get, the put has no effect but will start working after the major compaction, which will really remove deletes and tombstone markers (see below for details).

Storage

Physically, both HBase and Accumulo use HDFS to store data. Empty cells are not stored as tables usually have a large number of columns and are very sparse. In HBase, tables are stored on a per-column family basis. All column family members are stored together on HDFS. Accumulo also supports storing sets of column families separately on disk to avoid scanning over column families that are not requested. But tables place all column families into the same “default” locality group by default. Additional locality groups can be configured at any time.

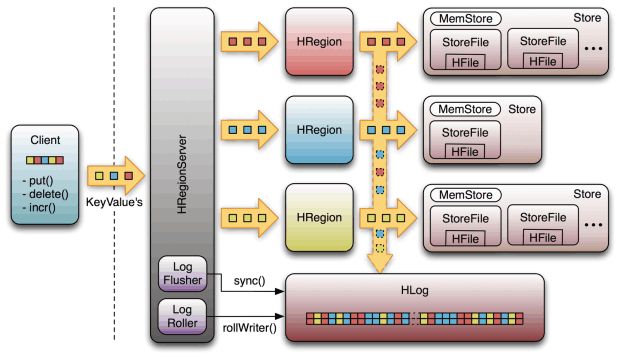

Recall that HDFS (modeled after Google’s GFS) is a write-once (and/or appending-only since 0.20) file system. It is very efficient for reading a large portion of big files but not designed for random access. So how does HBase provide random, realtime read/write access on top HDFS (which is actually the exact reason to build HBase)? Here we come to the concept of Store. In HBase, a Store corresponds to a column family in a Region (see next section for details). A Store hosts a MemStore and a set of zero or more StoreFiles. The MemStore holds in-memory modifications to the Store. When the MemStore reaches a certain size or the total size of all MemStores reaches the upper limit (both are configureable), the sorted key-value pairs in MemStore will flushed into a HDFS file called StoreFile in HFile format (based on SSTable file in the BigTable).

Because HDFS is write-once, a Store may have multiple StoreFiles that are created for each flush. In order to reduce the number of StoreFiles per Store, a process called compaction can be executed. There are two types of compactions: Minor and Major. Minor compactions pick up a couple of smaller adjacent StoreFiles and rewrite them as one. Minor compactions do not drop deletes and expired cells. In contrast, Major compactions pick up all the StoreFiles in the Store and generate a single StoreFile per Store that removes deletes and expired cells.

Sharding

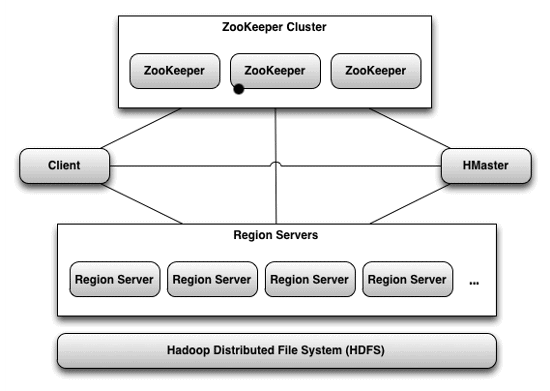

Any serious distributed database needs a sharding strategy. HBase and Accumulo supports auto-sharding, which means that tables are dynamically partitioned by rows and distributed by the system. The basic unit of sharding is called a Region in HBase (or Tablet in Accumulo). A region is a contiguous and sorted range of rows of a table stored together on disk. Initially, there is only one region for a table. However, when regions become two large, a region is split into two at the middle key (recall that rows are lexicographically sorted by row keys). Regions are served by RegionServer (TabletServer in Accumulo). Each RegionServer is responsible a set of regions but one region can be served only by one RegionServer. Because of this design, it is easy for HBase/Accumulo to provide row level consistency.

Here we have an assignment problem: given a region, which RegionServer should be assigned to it? This coordination work (and other administrative operations) is done by HMaster and recorded in ZooKeeper. Each region is assigned to a RegionServer on startup. However, the Master may decide to move a region from one RegionServer to another for load balance. Besides, the Master also handles RegionServer failures by assigning the regions to another RegionServer.

In general, HBase is designed to run with a small (20-200) number of relatively large (5-20Gb) regions per RegionServer. A large number of regions per RegionServer will cause a lot memory overhead and possibly too many flushes and compactions. A large number of regions also take a lot of time for the Master to assign and move them because of the heavy usage of ZooKeeper. When there are too many regions, one can consolidate them with the utility Merge.

As we are talking about RegionServer and Master, let’s dig into the architecture of HBase.

Architecture

Typically, HBase setups a RegionServer co-located with an HDFS DataNode on the same physical node. When StoreFiles are written into HDFS, one copy is written locally and two are written to other nodes. As long as the regions are not moved, there is good data locality. When the regions are reassigned to a new RegionServer, the data locality is lost and the RegionServer needs to read the data over the network from remote DataNodes until the data is rewritten locally.

Fault Tolerance

One may think that the Master is a SPOF (single point of failure). Actually, we can set up multiple HMasters although only one is active. HMasters use heartbeats to monitor each other. If the active Master shuts down or loses its lease in ZooKeeper, the remaining Masters jostle to take over the Master role. Because the clients talk directly to the RegionServers, the HBase cluster can still function in a steady state in short period during the Master failover. Note that Accumulo doesn’t support multiple Masters currently and thus the Master is a SPOF.

So how about RegionServers? It looks like that we are safe since there are multiple instances. However, recall that a region is managed by a single RegionServer at a time. If a RegionServer fails, the corresponding regions are not available until the detection and recovery steps have happened. It is actually a SPOF although there are no global failures in HBase.

To be resilient to node failures, all StoreFiles are written into HDFS, which replicates the blocks of these files (3 times by default). Besides, HBase, just like any other durable databases, uses a write-ahead-log (WAL), which is also written into HDFS. To detect the silent death of RegionServers, HBase uses ZooKeeper. Each RegionServer is connected to ZooKeeper and the Master watches these connections. ZooKeeper itself employs heartbeats. On a timeout, the Master declares the RegionServer as dead and starts the recovery process. During the recovery, the regions are reassigned to random RegionServers and each RegionServer reads the WAL to recover the correct region state. This is a complicated process and the mean time to recovery (MTTR) of HBase is often around 10 minutes if a DataNode crash with default settings. But we may reduce the MTTR to less than 2 minutes with careful settings.

Replication

The replication feature in HBase copies data between HBase deployments, which usually sit in different geographically distant data centers and thus provides a way of disaster recovery. The approach of HBase replication is master-push, just like MySQL’s master/slave replication. The replication is done asynchronously and each RegionServer replicates their own stream of WAL edits. Although there is similar work in progress in Accumulo, it is not available yet.

Cell-Level Security

The cell-level security was a unique feature of Accumulo because of its NSA root. When mutations (i.e. puts in HBase) are applied, users can specify a security label for each cell by passing a ColumnVisibility object. Security labels consist of a set of user-defined tokens that are required to read the associated cell. The security label expression syntax supports boolean logic operations. When a client attempts to read data, any security labels present are examined against the set of authorizations passed with Scanner. If the authorizations are determined to be insufficient to satisfy the security label, the cell is suppressed from the results. Each user has a set of associated security labels, which can be manipulated in the shell.

HBase 0.98 also provides the cell-level security feature now, which requires HFile v3.

HBase Coprocessor And Accumulo Iterator

Both HBase and Accumulo provide the modular approaches (called coprocessor and iterator respectively) to add custom functionalities. This allows users to efficiently summarize, filter, and aggregate data directly on RegionServers/TabletServers. Compared to MapReduce, it gives a dramatic performance improvement by removing communication overheads. In fact, cell-level security and column fetching are implemented using iterators in Accumulo.

Summary

As BigTable clones, HBase and Accumulo provide a wide-column data model and random real-time CRUD operations on top of HDFS. They can horizontally scale out to efficiently serve billions of rows and millions of columns by auto-sharding. Because each region is served by only one RegionServer at a time, they also support strong consistency for reads and writes. Automatic failover of RegionServer/TableServer are supported although efforts are needed to reduce MTTR. With replications across multi data centers, HBase adds more supports of disaster recovery. In the next post, I will cover Riak, which follows a very different system design.

ALSO IN THIS SERIES:

Distributed NoSQL: MongoDB

Distributed NoSQL: MongoDB

In this installment of his Understanding NoSQL series, guest contributor Haifeng Li delves deeper into MongoDB, the fifth most popular database in the world. Examining the data model, storage and cluster architecture, Li aims to give us an in-depth understanding of MongoDB’s database technology.

Haifeng Li is the Chief Data Scientist at ADP. He has a proven history in delivering end-to-end solutions, having previously worked for Bloomberg and Motorola. He is a technical strategist with deep understanding of computer theory and emerging technologies. He also has a diverse academic background, researching in fields including machine learning, data mining, computer vision, pattern recognition, NLP, and big data. His personal blog can be found here.

Haifeng Li is the Chief Data Scientist at ADP. He has a proven history in delivering end-to-end solutions, having previously worked for Bloomberg and Motorola. He is a technical strategist with deep understanding of computer theory and emerging technologies. He also has a diverse academic background, researching in fields including machine learning, data mining, computer vision, pattern recognition, NLP, and big data. His personal blog can be found here.

(Featured Image: Data Science Labs)