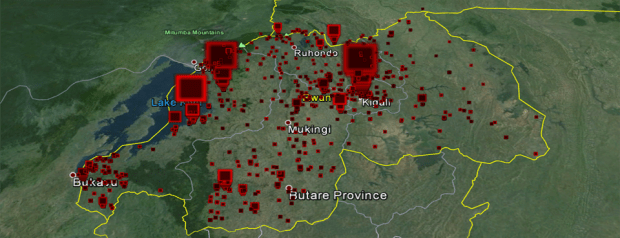

(Conflict (red) and cooperation (green) in Nigeria Jan-March 2014; source)

For centuries, society has been captivated by the idea of the eternal return. From Indian philosophy and ancient Egyptian thought, from Pythagoras through to Nietzsche, the idea that history is cyclical and events will recur again and again infinitely has always captured the human imagination.

This idea was most recently put to the test by the team behind the Global Database of Events, Language, and Tone (GDELT), a quarter-billion-record database of events in human history. They wanted to see if they could find correlations between current world events and historical events, and so map the likely outcomes of current political unrest. Their experiment was likened to Asimov’s Psychohistory, in which history, sociology, and mathematical statistics are combined to make general predictions about the future behavior of very large groups of people. Although the experiment worked astoundingly well, GDELT’s founder Kalev Leetaru was keen to point out to Dataconomy that “to me the story here is not about forecasting, but rather about the ‘big data’ world having reached a point where we can now run an analysis of this magnitude with just a single line of code and get back the results in just a few minutes. We’ve reached a point where we can truly begin to interactively explore our world at scale.”

Leetaru took some time out whilst working on this project to discuss his work with Dataconomy: what such a database means for our understanding of history, sociology, and the capabilities of technology.

Talk us through the genesis of GDELT.

I did a very famous paper in 2011 called Culturomics 2.0, where I used tone (the sentiment of global media coverage) to show that you can build models that allow you to forecast the collapse of a whole country. There was a huge amount of potential for that project, but the problem was that the only thing I could look at was whole-country collapse. There simply weren’t datasets, from today or from that time period, that gave you riots, protests and military attacks down to the sub-national level, over the entire world, going back over time.

Now we have a quarter of a billion records in there, which is about 400 gigabytes on disk. You might say that’s nothing compared to some of the massive datasets being discussed today: Facebook talks about 500 terabytes of new data added to its servers every day. But in these instances, you’re really accessing the data through a key-value store; it’s simply fetching an object. Basically, it’s a filing system. Whereas with GDELT, what you have is a fully relational dataset where all of these fields are interrelated, and it’s really the number of columns times the number of rows that makes it so complex to work with. Before BigQuery, there was no real way of interacting with this, because traditional database systems called for indexing, and even a lot of these newer, more powerful database platforms still require you to basically give them advice as to how people are going to interact with the data.

What we are finding is that essentially almost every query is different from the one before. BigQuery really allows you to set aside the indexing, and that has transformed our capabilities. It allows us to look at this dataset holistically.

In terms of use, the holy grail from the beginning was the ability to take something, say data from the last three months in Ukraine, and find the periods in time, from any country, that are most similar to what Ukraine is going through right now. You pick the five most similar periods, and you will be able to say what happened after each of those situations and see if that gives us any insight as to what might happen to Ukraine in the future. We’re digging into this now, and the results are absolutely fascinating: the combinations and correlations and connections are astounding.

I wouldn’t go to this length, but many people liken the GDELT project to Isaac Asimov’s Psychohistory from the Foundation series. And I will agree that there are certain parallels, in that psychohistory was this notion of gathering up all the world’s open public data sets. Not the datasets that the NSA are dealing with, but public information, like news media. Stripping that up and then processing that by computer to understand these big, broad patterns in society. And that’s where we’re up to at this second.
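
To make the "most similar periods" idea above concrete, here is a minimal Python sketch. It is an illustration only, not GDELT's actual analysis: the interview does not say which similarity measure the team used, so Pearson correlation over sliding windows is an assumption, and the function names (`pearson`, `most_similar_periods`), the `horizon` parameter, and the event counts are all hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (None if either is flat)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None  # correlation is undefined for a constant series
    return cov / sqrt(var_x * var_y)


def most_similar_periods(target, history, k=5, horizon=2):
    """Slide a window over every country's series and rank windows by correlation.

    Returns up to k tuples of (correlation, country, start_index, aftermath),
    where aftermath holds the values that followed the matching window.
    """
    width = len(target)
    matches = []
    for country, series in history.items():
        for start in range(len(series) - width + 1):
            r = pearson(target, series[start:start + width])
            if r is not None:
                aftermath = series[start + width:start + width + horizon]
                matches.append((r, country, start, aftermath))
    matches.sort(key=lambda m: m[0], reverse=True)
    return matches[:k]


# Made-up weekly event counts, for illustration only (not GDELT data).
history = {
    "Country A": [1, 2, 4, 8, 16, 9, 3, 1],
    "Country B": [5, 5, 6, 5, 4, 5, 6, 5],
}
target = [2, 4, 8, 16]  # hypothetical "recent weeks" for the country of interest

top = most_similar_periods(target, history, k=3)
for r, country, start, aftermath in top:
    print(f"{country} from week {start}: r={r:.3f}, then {aftermath}")
```

In a real analysis the target country's own recent window would be excluded from the candidates, and the series would come from a query against the event table rather than a hand-written dictionary.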

GDELT is a database of events, but also language and tone. Could you delve a little deeper into how it records these elements?

GDELT really is two different parallel datasets. One, obviously, is the event database, which is a quarter of a billion records in over 300 categories of physical activity, from riots and protests to peace pledges and diplomatic exchanges.

But the missing link, where we are today, is forecasting. When you think about Egypt, it wasn’t that people were happy and then one day everyone woke up and decided they should protest. What you are looking for are essentially these deeper, latent dimensions to language, so things like the semantic and emotional undercurrents.

I did a study maybe a month or two ago where I looked at global media coverage of Assad, the president of Syria. When I looked at how negative or positive that was, I found that he was actually in free fall towards negativity; the world was darkening about him prior to the Qusair attack. When the US failed to react to that, the entire world essentially said, “Wow, if he can do that, if he can kill 1000 people with no reaction, he’s won now.” They weren’t positive about him, but what we saw was what’s called military superiority language: language that suggested he was now invulnerable, and that the rebels were now going to lose. And sure enough, you saw the news cover that. So that’s actually what I’m most interested in: how do we measure all of this?

That is a very interesting and nuanced dimension. Let’s think about Iran, when Rouhani was elected President. CNN set up a TV crew in the middle of the square in Tehran and asked passers-by, “What do you think of the new president?” You saw these two interesting-looking characters just off screen, who were sitting there just close enough to listen to everything that was said. Obviously they were his security people, and so when people were interviewed on TV, they weren’t giving their real opinion. They all realised that if someone stares at the camera and says “I think he’s an idiot,” that’s not going to be very good for their health.

But this is the interesting part: there are two dimensions to tone. There is explicit tone, when someone says “I love my iPhone” or “I hate eating vegetables”. There are also whole undercurrents of semantic tone embedded in what people say. Take, for example, someone tweeting “It is a beautiful day outside, I am doing the laundry.” Oftentimes that would be discarded as a noise tweet, but it actually tells us quite a lot about the person’s situation, because they’re not posturing; they’re not realizing the emotions that they are expressing. This tweet suggests security and safety. If someone has just lost their job, has a huge mortgage and doesn’t know where they are going to get food tomorrow, they probably wouldn’t be cheerfully tweeting about their laundry. So the ability to really get all these dimensions and really understand them gives us a much broader picture.

GDELT is available to download. Have you had any particularly interesting public use cases yet, or are there any particular applications you’d like to see in the future?

There’s a ton of things being done with it right now, and it’s being used by many NGOs around the world. I’ve heard of some successful applications, but they’re not being made public as much as I would like. One thing I’m working on this year is building tools to help organisations leverage this dataset. But it’s already becoming very widely used: in the first week that it was available on the cloud server, it had over 30,000 downloads.

There’s some phenomenal interest in it, and I think, again, with any dataset it takes a while before it starts growing and expanding out there. Obviously it has huge implications for forecasting, and so the moment someone comes up with a good forecasting algorithm, if it works, they’re likely to put it out there. But this is the general aim for GDELT: an open platform for computing on the entire world.