We hear the term “machine learning” a lot these days (usually in the context of predictive analysis and artificial intelligence), but machine learning has actually been a field of its own for several decades. Only recently have we been able to really take advantage of machine learning on a broad scale thanks to modern advancements in computing power. But how does machine learning actually work? The answer is simple: algorithms.

Machine learning is a type of artificial intelligence (AI) in which computers learn patterns from data instead of being explicitly programmed with a rule for every case. Machine learning systems are computer programs that change their “thinking” (or output) when exposed to new data. To make this learning happen, we need algorithms: they give the computer the rules to follow when analysing data and adjusting what it has learned.

Machine learning algorithms are often used in predictive analysis. In business, predictive analysis can be used to tell the business what is most likely to happen in the future. For example, with predictive algorithms, an online T-shirt retailer can use present-day data to predict how many T-shirts they will sell next month.

Regression or Classification

While machine learning algorithms can be used for other purposes, we are going to focus on prediction in this guide. Prediction is a process where output variables can be estimated based on input variables. For example, if we input characteristics of a certain house, we can predict the sale price.

Prediction problems are divided into two main categories:

- Regression Problems: The variable we are trying to predict is numerical (e.g., the price of a house)

- Classification Problems: The variable we are trying to predict is a category, often a “Yes/No” answer (e.g., whether a certain piece of equipment will experience a mechanical failure)

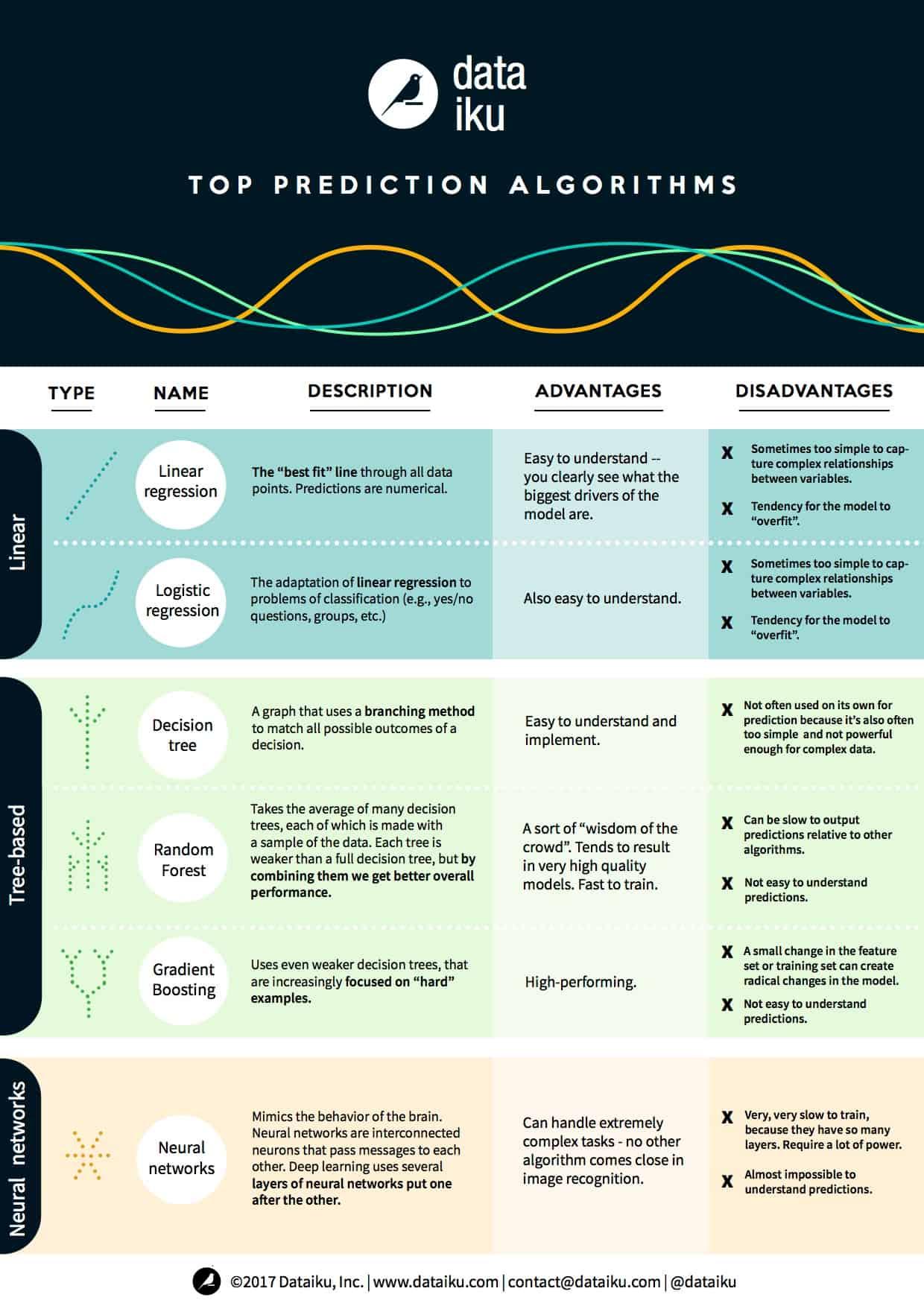

Now that we’ve covered what machine learning can do in terms of prediction, we can discuss the algorithms themselves. In this guide, we group them into three families: linear models, tree-based models, and neural networks.

What Are Linear Model Algorithms

A linear model uses a simple formula to find a “best fit” line through a set of data points. The variable you want to predict (for example, how long it will take to bake a cake) is expressed as a weighted sum of variables you already know (for example, the ingredients). To get a prediction, we simply plug in the known values: to find how long the cake will take to bake, we input the ingredients.

For example, suppose the analysis of our past bakes gives us this equation: t = 0.5x + 0.25y, where t is the time it takes to bake the cake (in hours), x is the weight of the cake batter (in kg), and y = 1 for a chocolate cake and 0 for a non-chocolate cake. So if we have 1 kg of cake batter and want a chocolate cake, we input our numbers: t = 0.5(1) + 0.25(1) = 0.75 hours, or 45 minutes.
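The cake equation is small enough to check by hand, but writing it as a function makes the idea of a linear model concrete: a prediction is just a weighted sum of the inputs. The function name `predict_bake_time` is our own, for illustration only; the coefficients 0.5 and 0.25 come from the example equation.

```python
def predict_bake_time(batter_kg: float, chocolate: bool) -> float:
    """Predicted baking time in hours from the example model t = 0.5x + 0.25y."""
    x = batter_kg
    y = 1 if chocolate else 0  # indicator variable: 1 for chocolate cake, 0 otherwise
    return 0.5 * x + 0.25 * y

hours = predict_bake_time(1.0, chocolate=True)
print(hours, hours * 60)  # prints 0.75 45.0
```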

There are different forms of linear model algorithms, and we’re going to discuss linear regression and logistic regression.

Linear Regression

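Linear regression, also known as “least squares regression,” is the most standard form of linear model. It chooses the line whose predictions minimise the sum of the squared differences from the actual values, which is where the name comes from. For regression problems (the variable we are trying to predict is numerical), linear regression is the simplest linear model.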
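As a minimal sketch of least squares with a single input variable, the function below computes the slope and intercept in closed form. The batter weights and baking times are hypothetical numbers made up for illustration, and `fit_least_squares` is our own helper name, not a library function.

```python
def fit_least_squares(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares with one input variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # The slope that minimises the sum of squared errors is the covariance of x and y
    # divided by the variance of x; the best line always passes through (mean_x, mean_y).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical past bakes: batter weight (kg) and baking time (hours).
weights_kg = [0.5, 1.0, 1.5, 2.0]
bake_hours = [0.3, 0.45, 0.8, 0.95]
slope, intercept = fit_least_squares(weights_kg, bake_hours)
print(slope, intercept)  # slope ≈ 0.46, intercept ≈ 0.05
```

With more than one input variable, the same idea is usually solved with linear algebra (or a library), but the principle of minimising squared error is unchanged.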

Logistic Regression

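Logistic regression is the adaptation of linear regression to classification problems (the variable we are trying to predict is a “Yes/No” answer). It passes the output of a linear equation through the logistic (sigmoid) function, an S-shaped curve that squashes any number into the range 0 to 1. That shape is what makes it work well for classification: the output can be read as the probability of a “Yes”, and we predict “Yes” when it is above a threshold such as 0.5.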
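The sketch below shows the S-shaped logistic function and a bare-bones way to fit logistic regression by gradient descent on the log loss. The sensor readings and failure labels are hypothetical, echoing the equipment-failure example earlier, and the learning rate and step count are arbitrary choices; in practice you would normally use a library implementation rather than this hand-written loop.

```python
import math

def sigmoid(z: float) -> float:
    """The S-shaped logistic function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, learning_rate=0.1, steps=2000):
    """Fit P(yes | x) = sigmoid(w * x + b) by gradient descent on the average log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # For the log loss, the gradient is mean((p - y) * x) for w and mean(p - y) for b.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * sum(errors) / n
    return w, b

# Hypothetical data: a standardised sensor reading, and 1 if the equipment later failed.
readings = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
failed = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(readings, failed)
print(sigmoid(w * -2.5 + b), sigmoid(w * 2.5 + b))  # a low probability, then a high one
```

To turn the probability into a “Yes/No” answer, compare it with a threshold such as 0.5.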

Drawbacks of Linear Regression and Logistic Regression

Linear regression and logistic regression share the same drawbacks. Both can “overfit,” especially when there are many input variables relative to the number of data points: the model adapts too closely to the training data at the expense of its ability to generalise to previously unseen data. Because of that, both models are often “regularised,” which means a penalty on large coefficients is added during training to prevent overfitting. Another drawback of linear models is that, since they’re so simple, they tend to have trouble predicting more complex, non-linear behaviours.
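One common penalty is ridge (L2) regularisation, which adds the squared size of the coefficients to the error being minimised. The sketch below applies it to a one-variable linear regression; the data and the `penalty` values are hypothetical, chosen only to show the effect, and `fit_ridge` is our own helper name.

```python
def fit_ridge(xs, ys, penalty):
    """One-variable ridge regression: minimise squared error + penalty * slope**2.

    The intercept is not penalised, so only the slope is shrunk toward zero."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / (sxx + penalty)  # the penalty enlarges the denominator, shrinking the slope
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: batter weight (kg) and baking time (hours).
weights_kg = [0.5, 1.0, 1.5, 2.0]
bake_hours = [0.3, 0.45, 0.8, 0.95]
print(fit_ridge(weights_kg, bake_hours, penalty=0.0))   # plain least squares: slope ≈ 0.46
print(fit_ridge(weights_kg, bake_hours, penalty=1.25))  # regularised: slope ≈ 0.23
```

With a penalty of 0 this reduces to ordinary least squares; raising the penalty pulls the slope toward zero, trading a little fit on the training data for a simpler model. Lasso (L1) regularisation is another common choice, penalising the absolute size of the coefficients instead.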

What Are Tree-Based Models

Tree-based models help explore a data set and visualise the decision rules used for prediction. When you hear about tree-based models, picture decision trees, or a sequence of branching operations. Tree-based models are often highly accurate, and individual trees are relatively easy to interpret. Unlike linear models, they can capture non-linear relationships.

Decision Tree

A decision tree is a graph that uses branching to show each possible outcome of a decision. For example, if you want to order a salad that includes lettuce, toppings, and dressing, a decision tree can map all the possible outcomes (or varieties of salads you could end up with).

To create (or train) a decision tree, we take the training data and find the attribute that best splits it with regard to the target, meaning the split that leaves each resulting group as uniform as possible in the value we are trying to predict.

For example, a decision tree can be used in credit card fraud detection. Suppose we find that the attribute that best predicts the risk of fraud is the purchase amount (for example, whether someone with the card has made a very large purchase). This becomes the first split (or branch): cards with unusually large purchases and those without. Within each branch, we then use the next best attribute (for example, how often the card is used) to create the next split. We continue until we have enough attributes to satisfy our needs, or until a stopping rule is met, such as a maximum tree depth.
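The splitting procedure can be sketched in a few dozen lines. This version scores candidate splits with Gini impurity (one common measure of how mixed a group's labels are; 0 means every label is the same) and stops when a branch is pure or reaches a maximum depth. The transactions, the two attributes (purchase amount and card uses per day) and all function names are hypothetical, chosen to mirror the fraud example.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 when every label in the group is the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(rows, labels):
    """Try every attribute and threshold; return (impurity, attribute, threshold) with the lowest weighted impurity."""
    best = None
    for f in range(len(rows[0])):
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            if not left or not right:
                continue  # a split must send at least one row each way
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return best

def build_tree(rows, labels, depth=0, max_depth=3):
    """Split recursively until a branch is pure, cannot be split, or reaches max_depth."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth >= max_depth or gini(labels) == 0.0:
        return majority  # leaf: predict the most common label
    split = best_split(rows, labels)
    if split is None:
        return majority
    _, f, threshold = split
    goes_left = [row[f] <= threshold for row in rows]
    return {
        "attribute": f,
        "threshold": threshold,
        "left": build_tree([r for r, g in zip(rows, goes_left) if g],
                           [y for y, g in zip(labels, goes_left) if g], depth + 1, max_depth),
        "right": build_tree([r for r, g in zip(rows, goes_left) if not g],
                            [y for y, g in zip(labels, goes_left) if not g], depth + 1, max_depth),
    }

def predict(tree, row):
    """Follow the branches from the root until reaching a leaf label."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["attribute"]] <= tree["threshold"] else tree["right"]
    return tree

# Hypothetical transactions: [purchase amount, card uses per day]; label 1 = fraud.
rows = [[20, 1], [35, 2], [50, 1], [40, 9], [30, 12], [900, 1], [1200, 2], [1500, 8], [2000, 1]]
labels = [0, 0, 0, 1, 1, 1, 1, 1, 1]
tree = build_tree(rows, labels)
print(predict(tree, [25, 1]), predict(tree, [45, 10]), predict(tree, [3000, 1]))  # prints 0 1 1
```

On this toy data the first split is on purchase amount (at 50), and the low-amount branch is then split on how often the card is used, matching the order described above.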

Random Forest

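A random forest combines many decision trees, each trained on a random sample of the data (and, typically, a random subset of the attributes). For regression, the forest averages the trees’ predictions; for classification, the trees vote. Each single tree in the forest is weaker than a full decision tree trained on all the data, but by putting them all together, we get better overall performance thanks to their diversity.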
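A minimal sketch of the idea, under simplifying assumptions: each tree here is a one-split “stump” (real random forests usually grow much deeper trees), each is trained on a bootstrap sample (rows drawn at random with replacement) using one randomly chosen attribute, and the forest classifies by majority vote. The data, the number of trees and the function names are hypothetical.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a non-empty group of labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def train_stump(rows, labels, attribute):
    """A one-split tree on a single attribute: returns (attribute, threshold, left_label, right_label)."""
    majority = Counter(labels).most_common(1)[0][0]
    best = None
    for threshold in sorted({row[attribute] for row in rows}):
        left = [y for row, y in zip(rows, labels) if row[attribute] <= threshold]
        right = [y for row, y in zip(rows, labels) if row[attribute] > threshold]
        if not left or not right:
            continue
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
        if best is None or score < best[0]:
            best = (score, threshold, Counter(left).most_common(1)[0][0], Counter(right).most_common(1)[0][0])
    if best is None:  # all rows share one value: no split possible, always predict the majority
        return (attribute, float("inf"), majority, majority)
    _, threshold, left_label, right_label = best
    return (attribute, threshold, left_label, right_label)

def train_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        sample = [rng.randrange(n) for _ in range(n)]  # bootstrap: n row indices drawn with replacement
        attribute = rng.randrange(len(rows[0]))  # each tree sees one randomly chosen attribute
        forest.append(train_stump([rows[i] for i in sample], [labels[i] for i in sample], attribute))
    return forest

def predict_forest(forest, row):
    """Each stump votes; the most common vote wins."""
    votes = [left if row[a] <= t else right for a, t, left, right in forest]
    return Counter(votes).most_common(1)[0][0]

# Hypothetical two-attribute data with two well-separated classes.
rows = [[1, 2], [2, 1], [3, 3], [2, 2], [8, 9], [9, 8], [7, 7], [8, 8]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = train_forest(rows, labels)
print(predict_forest(forest, [0.5, 0.5]), predict_forest(forest, [10, 10]))  # prints 0 1
```

For a regression forest, `predict_forest` would average the trees’ numeric outputs instead of taking a vote.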

Random forest is a very popular algorithm in machine learning today. It is very easy to train (or create), and it tends to perform well. Its downside is that it can be slow to output predictions relative to other algorithms, so you might not use it when you need lightning-fast predictions.

Gradient Boosting

Gradient boosting, like random forest, is built from “weak” decision trees. The big difference is that in gradient boosting, the trees are trained one after another, and each new tree is trained to correct the errors that the previous trees still make (for regression with squared error, it is fitted to the residuals: the differences between the actual values and the current predictions). This allows gradient boosting to focus less on the easy-to-predict cases and more on difficult cases.
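The sketch below shows gradient boosting for a regression problem with squared error, where “the errors the previous trees still make” are simply the residuals. Each weak learner is a one-split regression stump, and a learning rate shrinks every tree’s contribution so that later trees keep refining the fit. The data, the learning rate of 0.1, the 50 trees and the function names are hypothetical choices for illustration.

```python
def fit_stump(xs, residuals):
    """Depth-1 regression tree: the threshold whose two sides' mean residuals fit best."""
    best = None
    for t in sorted(set(xs))[:-1]:  # splitting at the largest x would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return t, left_mean, right_mean

def gradient_boost(xs, ys, n_trees=50, learning_rate=0.1):
    base = sum(ys) / len(ys)  # start by predicting the mean for every example
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        # For squared error, the errors left to fix are the residuals y - prediction.
        residuals = [y - p for y, p in zip(ys, preds)]
        t, left_value, right_value = fit_stump(xs, residuals)
        stumps.append((t, left_value, right_value))
        # Add a shrunken copy of the new tree's output to the running predictions.
        preds = [p + learning_rate * (left_value if x <= t else right_value) for x, p in zip(xs, preds)]
    return base, learning_rate, stumps

def predict_boosted(model, x):
    base, learning_rate, stumps = model
    return base + sum(learning_rate * (lv if x <= t else rv) for t, lv, rv in stumps)

# Hypothetical one-variable data.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = gradient_boost(xs, ys)
print(predict_boosted(model, 2), predict_boosted(model, 5))
```

Because each tree adds only 10% of its correction, the first tree leaves most of the error in place and every later tree fits what remains; after 50 trees the predictions here are within about 0.01 of the targets.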

Gradient boosting is also pretty fast to train and performs very well. However, small changes in the training data set can create radical changes in the model, so it may not produce the most explainable results.

What Are Neural Networks

In biology, neural networks are interconnected neurons that exchange messages with each other. This idea has been adapted to machine learning as artificial neural networks (ANNs). Deep learning, a term that pops up often, simply refers to artificial neural networks with several layers of neurons stacked one after the other.
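To make “layers” concrete, here is a tiny two-layer network computing XOR (output 1 when exactly one input is 1), a pattern that a single linear model cannot capture. The weights are hand-picked for illustration rather than learned; training would search for such weights automatically, and that search is what makes training expensive. All names here are our own.

```python
def relu(z: float) -> float:
    """A common activation function: pass positive values through, clip negatives to 0."""
    return max(0.0, z)

def layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b) for row, b in zip(weights, biases)]

# Hand-picked (not learned) weights: hidden neuron 1 computes x1 + x2, neuron 2 computes x1 + x2 - 1.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]

def xor_network(x1: float, x2: float) -> float:
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)           # layer 1
    (output,) = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, lambda z: z)  # layer 2 (no activation)
    return output

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```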

ANNs are a family of models loosely inspired by the human brain, which learn to perform tasks that would otherwise require human cognitive skills. On extremely complex tasks such as image recognition, neural networks currently outperform other algorithms. However, just like the human brain, they take a very long time to train and require a lot of power (just think about how much we eat to keep our brains working).
