Neuromorphic computing is a growing approach in computer engineering that models computing devices on the human brain. Neuromorphic engineering uses biology-inspired algorithms to design semiconductor chips whose components behave like the brain’s neurons, and then develops ways to work within this new architecture.

What is neuromorphic computing?

Neuromorphic computing aims to give machines the ability to think creatively, recognize things they have never seen, and react accordingly. Unlike today’s AI systems, the human brain excels at understanding cause and effect and adapts to change swiftly. By contrast, even a slight change in the environment can render AI models trained with traditional machine learning methods inoperable. Neuromorphic computing aims to overcome these challenges with brain-inspired computing methods.

Gartner believes that traditional computing technology based on legacy semiconductor architecture will hit a digital barrier by 2025, necessitating a paradigm shift such as neuromorphic computing. Meanwhile, Emergen Research predicts the worldwide market for neuromorphic processing to reach $11.29 billion in 2027.

How does neuromorphic computing work?

Neuromorphic computing builds spiking neural networks. Spikes from individual electronic neurons activate other neurons down a cascading chain, mimicking the physics of the human brain and nervous system, in which signals passed between neurons drive computation. Neuromorphic chips compute more flexibly and broadly than conventional systems, which orchestrate computations in binary. Spiking neurons do not follow a predetermined firing pattern.
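The cascade of spikes from neuron to neuron can be sketched with a leaky integrate-and-fire (LIF) model, a common simplification of a spiking neuron. This is a minimal illustration, not how any particular chip works: the class `LIFNeuron`, the function `run_chain`, and every parameter value (threshold, leak, weight) are assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (all parameter values are illustrative)."""
    threshold: float = 1.0   # membrane potential at which the neuron fires
    leak: float = 0.9        # fraction of potential kept per time step
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run_chain(inputs, n_neurons=3, weight=0.6):
    """Feed `inputs` into the first neuron; each spike drives the next neuron."""
    chain = [LIFNeuron() for _ in range(n_neurons)]
    spike_trains = [[] for _ in range(n_neurons)]
    for current in inputs:
        signal = current
        for i, neuron in enumerate(chain):
            fired = neuron.step(signal)
            spike_trains[i].append(int(fired))
            # A spike is passed downstream as a weighted input; silence passes nothing.
            signal = weight if fired else 0.0
    return spike_trains


if __name__ == "__main__":
    trains = run_chain([0.5] * 20)
    for i, train in enumerate(trains):
        print(f"neuron {i}: {''.join(map(str, train))}")
```

Each neuron further down the chain fires less often than the one before it, because it only accumulates charge when its upstream neighbor spikes and leaks charge in between.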

Neuromorphic computing achieves this brain-like performance and efficiency by constructing artificial neural networks out of “neurons” and “synapses.” Analog circuitry connects these artificial neurons and synapses. These circuits can modulate the amount of electricity flowing between the nodes, replicating the varying intensity of natural brain signals.

Neuromorphic chips solve complex issues and adapt to new settings swiftly

Spiking neural networks (SNNs) can detect these distinct analog signal changes, much as networks of neurons in the brain do. This capability is absent from conventional neural networks, which employ less nuanced digital signals.

Neuromorphic technology also envisions a new chip architecture that mixes memory and processing on each neuron rather than having distinct areas for one or the other.

Traditional chip designs based on the von Neumann architecture usually include a distinct memory unit, central processing unit (CPU), and data paths. Information must be transferred between these components as the computer completes a task, so data travels back and forth many times. The resulting limit on speed and energy efficiency is known as the von Neumann bottleneck.

Neuromorphic computing provides a better way to handle massive amounts of data. It enables chips to be simultaneously very powerful and efficient. Depending on the situation, each neuron can perform processing or memory tasks.

Researchers are also working on alternate ways to model the brain’s synapse by utilizing quantum dots and graphene and memristive technologies, such as phase-change memory, resistive RAM, spin-transfer torque magnetic RAM, and conductive bridge RAM.

Advantages of neuromorphic computing

Conventional hardware handles today’s neural network and machine learning algorithms well, but it tends to prioritize either performance or power, frequently achieving one at the expense of the other. In contrast, neuromorphic systems aim to deliver both rapid computing and low energy consumption.

Neuromorphic computing has two primary goals. The first is to construct a cognition machine that learns, retains data, and draws logical conclusions as humans do. The second is to discover more about how the human brain works.

Neuromorphic chips can perform many tasks simultaneously. Their event-driven operation enables them to adapt to changing conditions. These systems, which have computing capabilities such as generalization, are very flexible and robust. They are also highly fault-tolerant: data is stored redundantly, so minor failures do not stop the system from working. They can also solve complex problems and adapt to new settings swiftly.
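The event-driven principle can be illustrated with a small discrete-event loop in which work happens only when a spike arrives, rather than on every clock tick. This is a simplified sketch under stated assumptions: the function `run_event_driven`, the fixed `delay`, and the rule that every incoming spike is forwarded unconditionally (no threshold) are all hypothetical simplifications.

```python
import heapq


def run_event_driven(events, fanout, delay=1, max_time=10):
    """Process spike events in time order and return the events handled.

    events: list of (time, neuron_id) input spikes
    fanout: dict mapping a neuron_id to the neurons it forwards spikes to
    """
    queue = list(events)
    heapq.heapify(queue)  # earliest event is always popped first
    processed = []
    while queue:
        time, neuron = heapq.heappop(queue)
        if time > max_time:
            break  # all remaining events are later than the time limit
        processed.append((time, neuron))
        # Forward the spike to downstream neurons after a fixed delay.
        for target in fanout.get(neuron, []):
            heapq.heappush(queue, (time + delay, target))
    return processed


if __name__ == "__main__":
    fanout = {"a": ["b"], "b": ["c"]}
    handled = run_event_driven([(0, "a"), (4, "a")], fanout)
    print(handled)
    # A clock-driven loop would update all 3 neurons on each of 11 ticks.
    print(f"{len(handled)} event updates vs {3 * 11} clock-driven updates")
```

When input is sparse, the number of updates tracks the number of spikes rather than the number of neurons multiplied by the number of time steps, which is the intuition behind the energy savings claimed for event-driven designs.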

Neuromorphic computing use cases

Edge computing devices like smartphones currently have to hand off compute-intensive tasks to a cloud-based system, which processes the query and transmits the answer back to the device. With neuromorphic systems, that query would not have to be shunted back and forth; it could be handled right on the device. The most important motivator of neuromorphic computing, however, is the hope it provides for the future of AI.

AI systems are, by nature, highly rule-based; they’re trained on data until they can generate a particular outcome. The human mind, on the other hand, is far more at ease with ambiguity and far more adaptable.

Researchers aim to make the next generation of AI systems capable of dealing with more brain-like challenges. One of them is constraint satisfaction, in which a machine must find the best solution to a problem subject to many restrictions.
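To make the term concrete, here is a tiny constraint satisfaction problem solved with conventional backtracking search. It shows only what kind of problem is meant; it is not a neuromorphic algorithm, and the function `solve` and the map-coloring example are illustrative assumptions.

```python
def solve(variables, domains, conflicts, assignment=None):
    """Backtracking search: give every variable a value that differs
    from the values of all variables it conflicts with."""
    assignment = {} if assignment is None else assignment
    if len(assignment) == len(variables):
        return assignment  # every variable has a consistent value
    var = next(v for v in variables if v not in assignment)
    for value in domains[var]:
        # Unassigned neighbors return None from .get(), which never clashes.
        if all(assignment.get(n) != value for n in conflicts.get(var, ())):
            result = solve(variables, domains, conflicts,
                           {**assignment, var: value})
            if result is not None:
                return result
    return None  # no value works here; the caller tries its next option


if __name__ == "__main__":
    regions = ["A", "B", "C"]
    # Every region borders the other two, so all three must differ.
    borders = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B"]}
    colors = {r: ["red", "green", "blue"] for r in regions}
    print(solve(regions, colors, borders))
```

With only two colors available the same problem has no solution and `solve` returns `None`, which shows how quickly added restrictions shrink the space of valid answers.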

Neuromorphic chips are also better suited to problems like probabilistic computing, where machines handle noisy and uncertain data. Other concepts, such as causality and non-linear thinking, are still in their infancy in neuromorphic computing systems but are expected to mature as the systems become more widespread.

Neuromorphic computer systems available today

Many neuromorphic systems have been developed and utilized by academics, startups, and the most prominent players in the technology world.

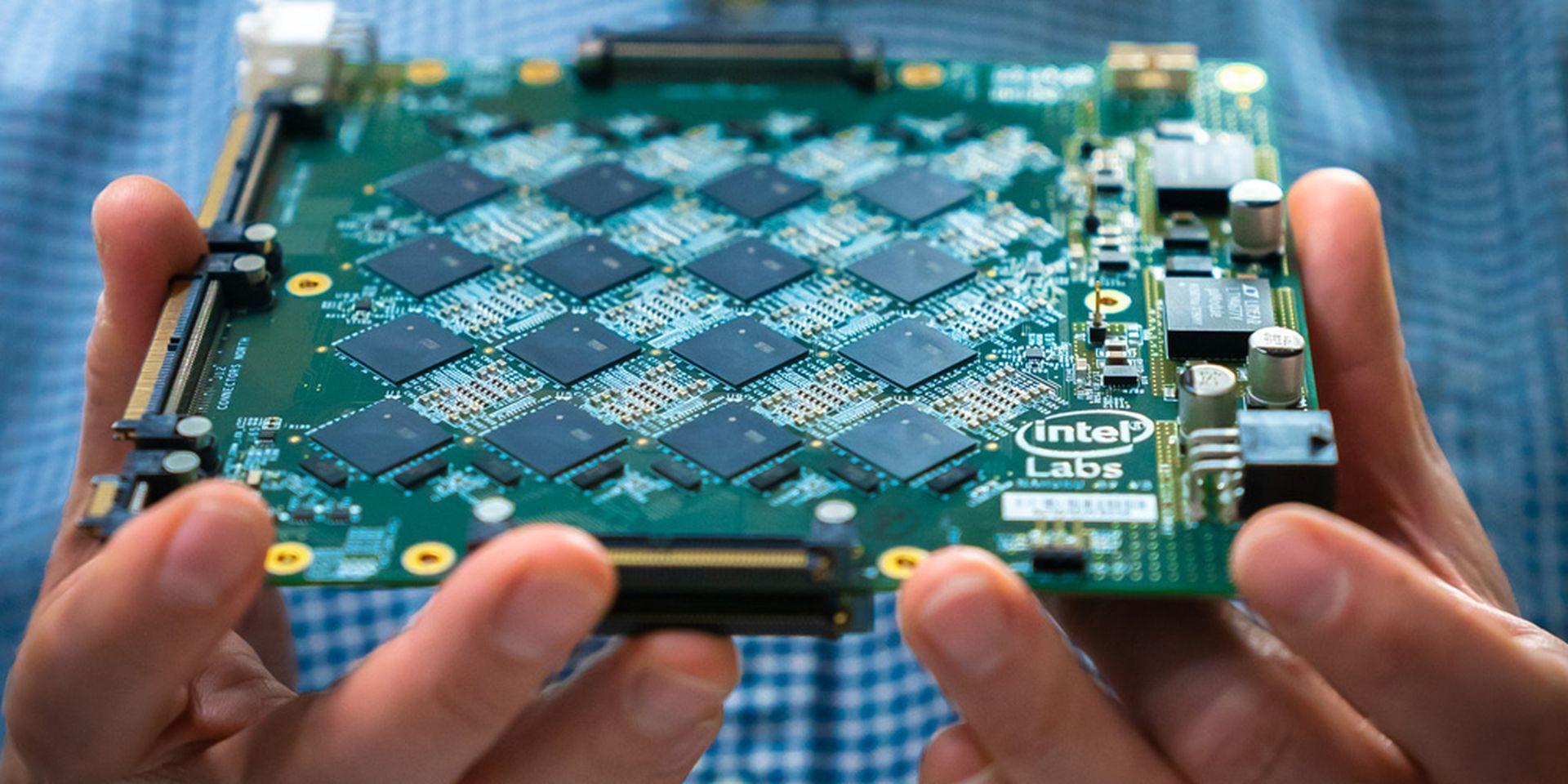

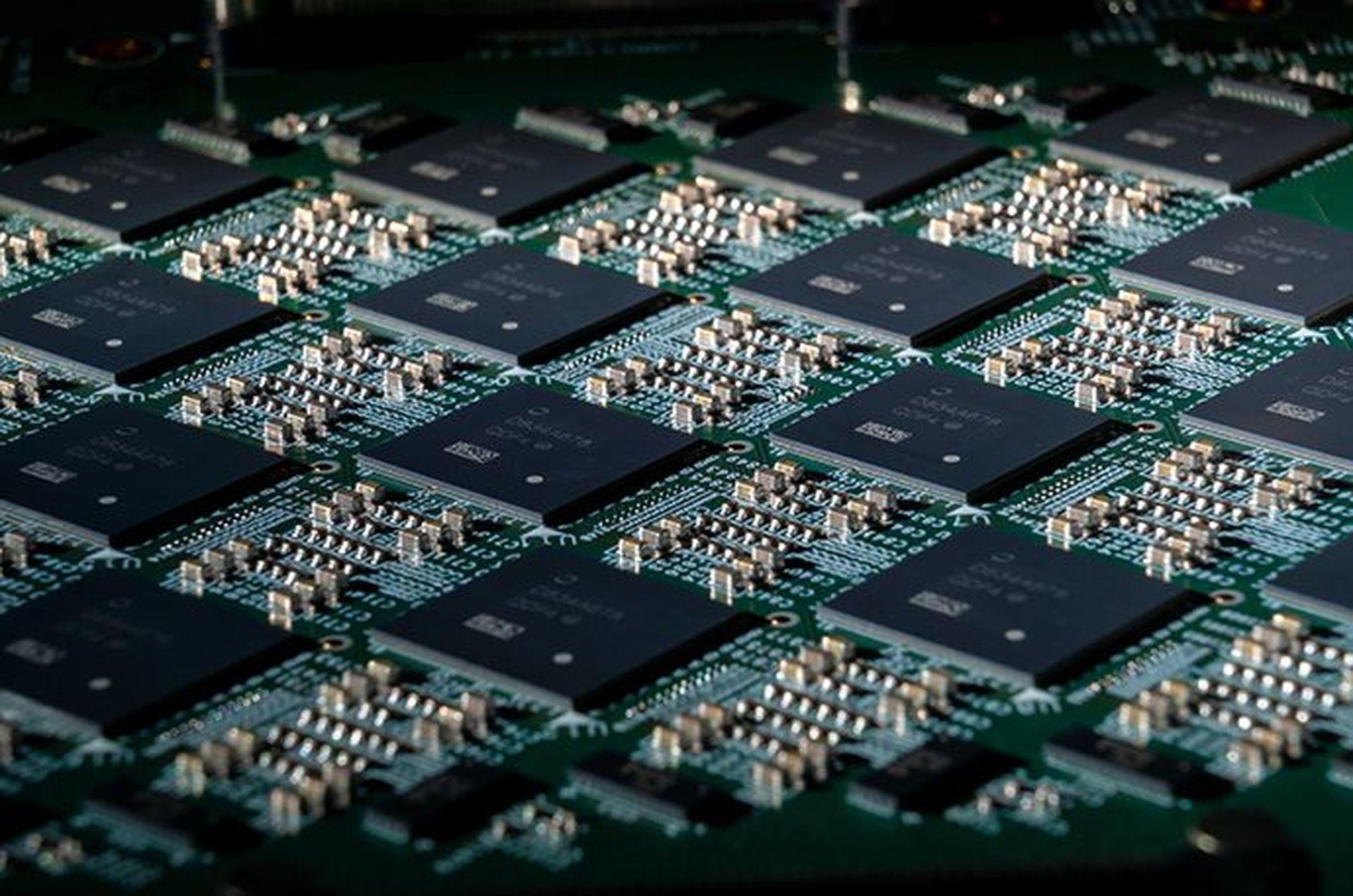

Intel’s neuromorphic chip Loihi, designed for spiking neural networks, has 130 million synapses and 131,000 neurons. Scientists have used Loihi chips to develop artificial skin and powered prosthetic limbs. Intel Labs has also announced its second-generation neuromorphic research chip, codenamed Loihi 2, and Lava, an open-source software framework.

IBM’s neuromorphic chip TrueNorth was unveiled in 2014 with 1 million neurons and 256 million synapses. IBM later announced a collaboration with the US Air Force Research Laboratory to develop a “neuromorphic supercomputer” known as Blue Raven, a TrueNorth-based system with 64 million neurons and 16 billion synapses. While the technology is still being developed, one use may be to build smarter, lighter, and less energy-demanding drones.

The Human Brain Project (HBP), a 10-year project that began in 2013 and is funded by the European Union, was established to further understand the brain through six areas of study, including neuromorphic computing. The HBP has supported two major neuromorphic projects from universities, SpiNNaker and BrainScaleS. In 2018, a million-core SpiNNaker system was introduced, becoming the world’s largest neuromorphic supercomputer at the time; the University of Manchester aims to scale it up to model one billion neurons in the future.

The examples from IBM and Intel focus on computational performance. In contrast, the examples from the universities use neuromorphic computers as a tool for learning about the human brain. Both methods are necessary for neuromorphic computing since both types of information are required to advance AI.

Intel Loihi neuromorphic chip architecture

Behind the Loihi chip architecture lies the idea of bringing computing power from the cloud to the edge. The second-generation Loihi neuromorphic research chip was introduced in September 2021, along with Lava, an open-source software framework for applications inspired by the human brain. Neuromorphic computing adapts key features of neural architectures found in nature to create a new computer architecture model. Loihi was developed as a guide to the chips that would power these computers, and Lava as a guide to the applications that would run on them.

According to the research paper “Advancing Neuromorphic Computing with Loihi,” the most striking feature of neuromorphic technology is its ability to mimic how the biological brain evolved to solve the challenges of interacting with dynamic and often unpredictable real-world environments. Neuromorphic chips that mimic advanced biological systems include neurons, synapses for connections between neurons, and dendrites, which allow a neuron to receive messages from more than one other neuron.

A Loihi 2 chip consists of microprocessor cores and 128 fully asynchronous neuron cores interconnected by a network-on-chip (NoC). The neuron cores are optimized for neuromorphic workloads, and all communication between them takes the form of spike messages, mimicking neural networks in a biological brain.

Rather than copying the human brain directly, neuromorphic designs take inspiration from it and diverge in different ways. In the Loihi chip, for example, part of the chip functions as a neuron core that models biological neuron behavior; in this model, a piece of code describes the neuron. Other neuromorphic systems instead implement synapses and dendrites in asynchronous digital complementary metal-oxide-semiconductor (CMOS) circuits.

What is a memristor, and why is it important for neuromorphic computing?

The memristor, a core electronic component in many proposed neuromorphic chips, has been demonstrated to function similarly to brain synapses because it has a comparable plasticity. It is used to build artificial structures that mimic the brain’s ability to process and memorize data. For a long time, the only three fundamental passive electrical components were capacitors, resistors, and inductors. The memristor created a stir when it was proposed because it was described as a fourth fundamental passive component alongside these three.

A memristor is a passive two-terminal component that maintains a relationship between the time integrals of current and voltage, that is, between charge and magnetic flux. As a result, its resistance changes according to the device’s memristance function, and the stored state can be accessed with small read charges. The component remembers its past states even when it is not powered and no current flows through it. Memristors can therefore serve as memory and processing units at the same time.
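The relationship described above is usually written in the following form. The symbols are the conventional ones and do not appear in the article itself: $q$ is charge, $\varphi$ is flux linkage, $M$ is the memristance, and $i$ and $v$ are the current through and voltage across the device.

```latex
\begin{aligned}
q(t) &= \int_{-\infty}^{t} i(\tau)\,d\tau, &
\varphi(t) &= \int_{-\infty}^{t} v(\tau)\,d\tau, \\
M(q) &= \frac{d\varphi}{dq}, &
v(t) &= M\bigl(q(t)\bigr)\, i(t).
\end{aligned}
```

The last equation has the form of Ohm’s law with a resistance that depends on the charge that has already passed through the device. That history dependence is what lets the component act as memory.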

Moving computation to the memory eliminates the von Neumann bottleneck

Leon Chua first described the memristor theoretically in 1971. In 2008, a research team at HP Labs built the first working memristor from a thin film of titanium dioxide. Since then, various companies have tested many other materials for building memristors.

The von Neumann bottleneck is the delay incurred while data travels from a device’s memory to its processing unit; the processing unit must wait for the data it needs before it can compute. Neuromorphic chips avoid this because computation takes place in the memory itself. Much as in the brain, memristors, which form the basis of such chips, can serve both memory and calculation functions. They are also the building blocks of the first inorganic neurons.
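One way computation can happen inside memory is a crossbar array, in which each stored conductance multiplies the voltage applied to its row and each column wire sums the resulting currents. The article does not name this arrangement, so the sketch below is an assumption about how in-memory computing is commonly illustrated; `crossbar_currents` and the example values are hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Column currents of an idealized crossbar: I[j] = sum_i G[i][j] * V[i].

    conductances: rows x cols grid of stored conductance values (the memory)
    voltages: one input voltage per row (the data being processed)
    """
    if len(conductances) != len(voltages):
        raise ValueError("need exactly one voltage per crossbar row")
    n_cols = len(conductances[0])
    return [
        # Ohm's law gives each cell's current; the column wire adds them up.
        sum(row[j] * v for row, v in zip(conductances, voltages))
        for j in range(n_cols)
    ]


if __name__ == "__main__":
    stored = [[1, 2],
              [3, 4]]
    print(crossbar_currents(stored, [1, 1]))
```

The stored values never leave the array: the multiply-and-sum happens where the data sits, so no weights have to be moved to a separate processor.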

Spiking neural networks (SNN) and artificial intelligence

The first generation of artificial intelligence was rule-based and emulated classical logic to arrive at conclusions within a specific, restricted context. Such systems are well suited to monitoring or optimizing a process. The focus then shifted to perception and sensing abilities. Deep neural networks (DNNs) have already been deployed in applications using conventional memory technologies such as SRAM or Flash. They mimic the parallelism and efficiency of the brain, and innovative DNN solutions may help lower energy consumption for edge applications.

The next generation of artificial intelligence, which is already arriving, extends these capabilities toward aspects of human cognition such as autonomous adaptation and comprehension. This shift is critical to overcoming the limitations of current AI built on conventional machine learning training and inference, whose judgments rest on deterministic, literal interpretations of events and frequently lack context.

Teaching machines to think in a new way

For the next generation of AI to automate common human activities, it must be able to respond to new circumstances and abstractions. Spiking neural networks (SNNs) also aim to replicate the temporal behavior of neurons and synapses, which allows for greater energy efficiency and flexibility.

The ability to adapt to a rapidly changing environment is one of the hardest aspects of human intelligence to reproduce. One of neuromorphic computing’s most intriguing challenges is learning from unstructured stimuli while being as energy-efficient as the human brain. The computational building blocks of neuromorphic systems are logically comparable to human neurons, and a spiking neural network (SNN) is a new way of arranging these components to mimic human neural networks.

A spiking neural network (SNN) is a type of artificial neural network that produces outputs based on the responses of its individual neurons. Every neuron in the network can be triggered separately. Each neuron transmits pulsed signals to other neurons in the system, directly changing their electrical state. By encoding information in the signals themselves and in their timing, SNNs mimic natural learning processes, dynamically remapping the synapses between artificial neurons in response to stimuli.
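One widely used way to adjust synapses from spike timing is spike-timing-dependent plasticity (STDP). The article does not name a specific learning rule, so treat this as an assumed example: a synapse is strengthened when the sending neuron fires shortly before the receiving one and weakened when the order is reversed. The functions `stdp_delta` and `apply_stdp` and all constants below are illustrative.

```python
import math


def stdp_delta(dt, a_plus=0.05, a_minus=0.055, tau=20.0):
    """Weight change for a spike pair, with dt = t_post - t_pre in ms.

    Pre-before-post (dt > 0) strengthens the synapse; post-before-pre
    (dt < 0) weakens it. The effect decays exponentially with |dt|.
    """
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the STDP change over all spike pairs, then clip the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta(t_post - t_pre)
    return min(w_max, max(w_min, weight))


if __name__ == "__main__":
    # Pre fires at 10 ms, post at 15 ms: the synapse should strengthen.
    print(apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0]))
    # Reversed order: the synapse should weaken.
    print(apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0]))
```

Because the update depends only on the relative timing of two spikes, each synapse can learn locally without a global training signal.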