Contrary to popular belief, the history of machine learning, which enables machines to learn tasks for which they are not specifically programmed, and train themselves in unfamiliar environments, goes back to the 17th century.

Machine learning is a powerful tool for implementing artificial intelligence technologies. Because of its ability to learn and make decisions, machine learning is frequently referred to as AI, even though it is technically a subfield of AI. Until the late 1970s, machine learning was just one component of AI’s progress. It then diverged and evolved on its own, and it has since emerged as an important function in cloud computing and e-commerce. ML is a vital enabler in many cutting-edge technology areas of our times. Scientists are currently working on Quantum Machine Learning approaches.

Remembering the basics

Before embarking on our historical adventure that will span several centuries, let’s briefly go over what we know about Machine Learning (ML).

Today, machine learning is an essential component of business and research for many organizations. It employs algorithms and neural network models to help computers get better at performing tasks. Machine learning algorithms create a mathematical model from data – also known as training data – without being specifically programmed.

The brain cell interaction model that underpins modern machine learning is derived from neuroscience. In 1949, psychologist Donald Hebb published The Organization of Behavior, in which he proposed the idea of “endogenous” or “self-generated” learning. However, it took centuries and crazy inventions like the data-storing weaving loom for us to have such a deep understanding of machine learning as Hebb had in ’49. After this date, other developments in the field were also astonishing and even jaw-dropping on some occasions.

The history of Machine Learning

For ages, we, the people, have been attempting to make sense of data, process it to obtain insights, and automate this process as much as possible. And this is why the technology we now call “machine learning” emerged. Now buckle up, and let’s take an intriguing journey through the history of machine learning to discover how it all began, how it evolved into what it is today, and what the future may hold for this technology.

· 1642 – The invention of the mechanical adder

Blaise Pascal created one of the first mechanical adding machines as an attempt to automate data processing. It employed a mechanism of cogs and wheels, similar to those in odometers and other counting devices.

Pascal was inspired to build a calculator to assist his father, the superintendent of taxes in Rouen, with the time-consuming arithmetic computations he had to do. He designed the device to add and subtract two numbers directly, and to multiply and divide through repeated addition or subtraction.

The calculator had articulated metal wheel dials with the digits 0 through 9 displayed around the circumference of each wheel. To input a digit, the user inserted a stylus into the corresponding space between the spokes and turned the dial until a metal stop at the bottom was reached, similar to how the rotary dial on an old phone works. The number was displayed in the top left window of the calculator. The user then simply dialed in the second number to be added, and the accumulator displayed the total. Another feature of this machine was the carry mechanism: when a dial passed from nine to zero, it advanced the next dial by one.
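The carry mechanism described above can be sketched in a few lines of Python. This is only an illustration of the idea, not a model of Pascal’s actual gearing; the function names `dial_in` and `read_display` and the six-wheel accumulator are assumptions made for the example.

```python
def dial_in(wheels, number):
    """Add `number` to the accumulator, one decimal wheel at a time.

    `wheels` holds one digit (0-9) per wheel, least significant wheel first.
    """
    result = list(wheels)
    carry = 0
    for i in range(len(result)):
        digit = number % 10           # digit dialed on this wheel
        number //= 10
        total = result[i] + digit + carry
        result[i] = total % 10        # where the wheel comes to rest
        carry = total // 10           # passing nine advances the next wheel by one
    return result                     # a carry out of the last wheel is lost


def read_display(wheels):
    """Read the wheels as an ordinary integer."""
    return int("".join(str(d) for d in reversed(wheels)))


accumulator = [0] * 6                 # hypothetical six-wheel machine
accumulator = dial_in(accumulator, 278)
accumulator = dial_in(accumulator, 945)
print(read_display(accumulator))      # 1223
```

Each wheel only ever knows its own digit and whether its neighbor just passed nine, which is all the information the addition needs.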

· 1801 – The invention of the data storage device

When looking at the history of machine learning, there are lots of surprises. Our first encounter was a data storage device. Believe it or not, the first data storage device was, in fact, a weaving loom. The first use of data storage was in a loom created by a French inventor named Joseph-Marie Jacquard, which used cards with punched holes to arrange threads. These cards comprised a program to control the loom and allowed a procedure to be repeated with the same outcome every time.

The Jacquard machine used interchangeable punched cards to weave cloth in any pattern without human intervention. Charles Babbage, the famous English inventor, later adopted punched cards as an input-output medium for his proposed Analytical Engine, and Herman Hollerith used them to feed data to his census machine. Punched cards were also used to input data into digital computers, but they have since been superseded by electronic media.

· 1847 – The introduction of Boolean Logic

In Boolean Logic (also known as Boolean Algebra), all values are either True or False. These true and false values are used to check the conditions that selection and iteration rely on. This is how Boolean operators work. George Boole’s logic gave us the AND, OR, and NOT operators, which answer questions in terms of true or false, yes or no, and binary 1s and 0s. These operators are still used in web searches today.
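To make the link between Boolean operators, selection, and iteration concrete, here is a small Python sketch. The sensor readings and the function names are invented for the example.

```python
def is_outlier(x, low, high):
    # OR: true if either condition holds
    return x < low or x > high


def keep_in_range(readings, low, high):
    kept = []
    for x in readings:
        # NOT inverts the test; the `if` is the selection step
        if not is_outlier(x, low, high):
            kept.append(x)
    return kept


def count_leading_small(readings, limit):
    # AND: the loop (iteration) continues only while both conditions are true
    i = 0
    while i < len(readings) and readings[i] < limit:
        i += 1
    return i


readings = [3, 8, 12, 5, 20]
print(keep_in_range(readings, 5, 12))     # [8, 12, 5]
print(count_leading_small(readings, 10))  # 2
```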

Boolean algebra has been introduced into artificial intelligence to address some of the problems associated with machine learning. One of the main disadvantages of the discipline is that many machine-learning algorithms are black boxes, which means we don’t know much about how they reach their decisions. Decision trees and random forests are examples of machine learning algorithms that can describe the functioning of a system, but they don’t always provide excellent results. Boolean algebra has been used to overcome this limitation by producing sets of understandable rules that can still achieve quite good performance.


· 1890 – The Hollerith Machine took on statistical calculations

Herman Hollerith developed the first combined mechanical calculation and punch-card system to compute statistics from millions of individuals efficiently. It was an electromechanical machine built to assist in summarizing data stored on punched cards.

The 1880 census in the United States took eight years to complete. Because the Constitution requires a census every ten years, a larger workforce was necessary to expedite the process. The tabulating machine was created to aid in processing 1890 Census data. Later versions were widely used in commercial accounting and inventory management applications. It gave rise to a class of machines known as unit record equipment and to the data processing industry.

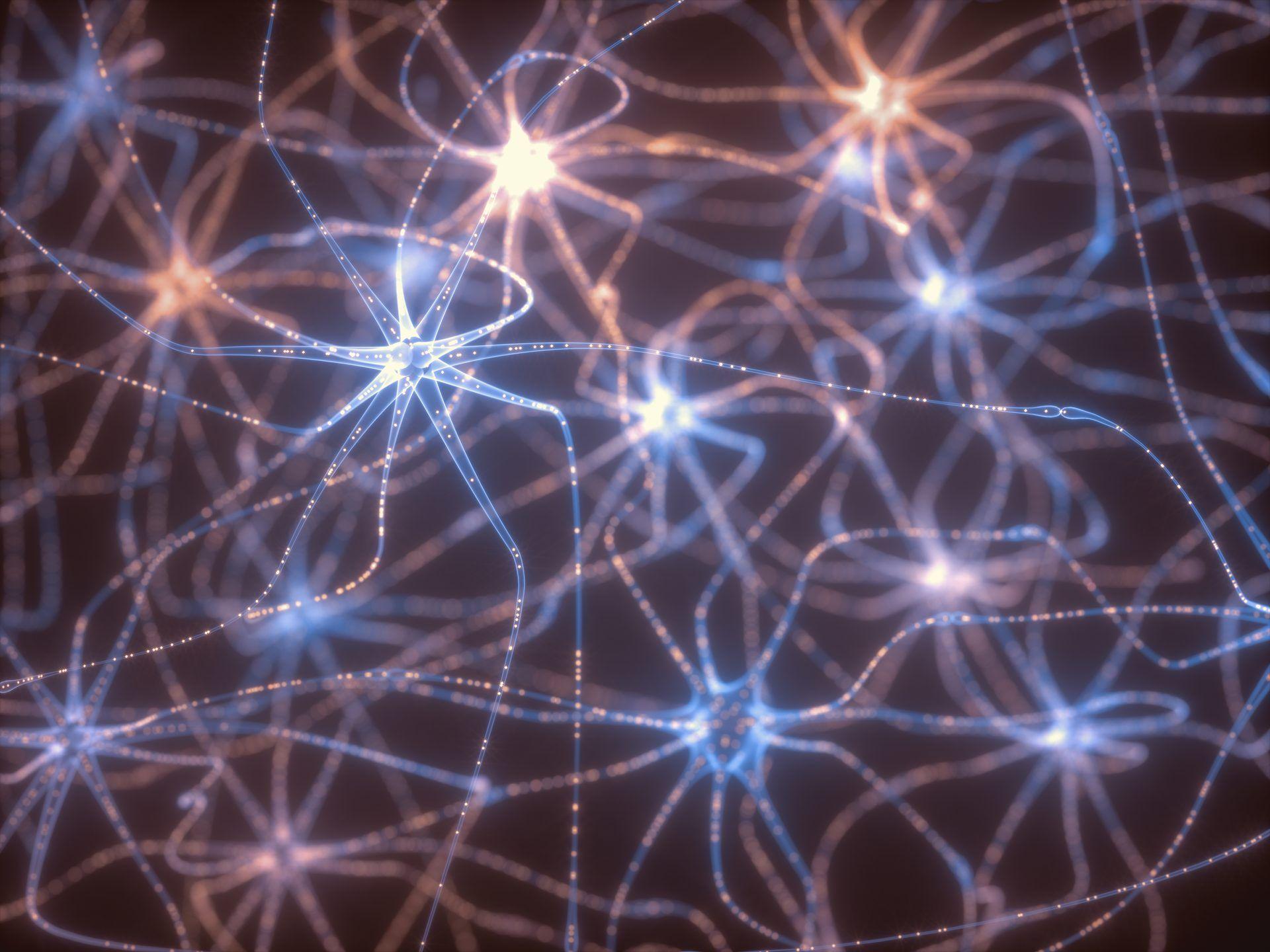

· 1943 – The first mathematical model of a biological neuron presented

The scientific article “A Logical Calculus of the Ideas Immanent in Nervous Activity,” published by Walter Pitts and Warren McCulloch, introduced the first mathematical model of neural networks. For many, that paper was the real starting point for the modern discipline of machine learning, which led the way for deep learning and quantum machine learning.

McCulloch and Pitts’s 1943 paper built on Alan Turing’s “On Computable Numbers” to provide a means for describing brain activities in general terms, demonstrating that basic components linked in a neural network might have enormous computational capability. The paper received little attention until its ideas were applied by John von Neumann, the architect of modern computing, Norbert Wiener, and others.
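A McCulloch-Pitts style neuron is simple enough to sketch directly. The version below is a common textbook simplification rather than the paper’s own notation: inputs are 0 or 1, any active inhibitory input vetoes firing, and the unit fires when enough excitatory inputs are active. The function names are assumptions for the example.

```python
def mcp_neuron(excitatory, inhibitory, threshold):
    """Return 1 (fire) or 0 (stay silent) for binary inputs."""
    if any(inhibitory):
        return 0                      # a single inhibitory input blocks firing
    return 1 if sum(excitatory) >= threshold else 0


# Basic logic gates built from single threshold units
def AND(a, b):
    return mcp_neuron([a, b], [], threshold=2)


def OR(a, b):
    return mcp_neuron([a, b], [], threshold=1)


def NOT(a):
    return mcp_neuron([], [a], threshold=0)


# A small network: XOR composed from the units above
def XOR(a, b):
    return AND(OR(a, b), NOT(AND(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```

Composing a handful of these units already yields a function (XOR) that no single unit can compute, which hints at why linking basic components into a network adds computational power.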

· 1949 – Hebb successfully related behavior to neural networks and brain activity

In 1949, Canadian psychologist Donald O. Hebb, then a lecturer at McGill University, published The Organization of Behavior: A Neuropsychological Theory. This was the first time that a physiological learning rule for synaptic change had been made explicit in print, and the rule became known as the “Hebb synapse.”

Hebb developed cell assembly theory in this book. His model later became known as Hebbian theory, Hebb’s rule, Hebb’s postulate, and cell assembly theory. Models that follow this idea are said to exhibit “Hebbian learning.” As stated in the book: “When an axon of cell A is near enough to excite cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased.”


Hebb referred to combinations of neurons that may be regarded as a single processing unit as “cell assemblies,” and the mix of connections among them determined how the brain changed in response to stimuli.

Hebb’s model for the functioning of the mind has had a significant influence on how psychologists view stimulus processing in the mind. It also paved the way for the development of computational machines that replicated natural neurological processes, such as machine learning. Although chemical transmission has since been recognized as the major form of synaptic transmission in the nervous system, modern artificial neural networks are still built on the foundation of electrical signals traveling through wires that Hebbian theory was created around.
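Hebb’s postulate is often summarized as “cells that fire together wire together” and written as a weight update proportional to the product of the two cells’ activity. The sketch below uses that common simplification; the learning rate and the activity sequence are made up for the example and are not taken from Hebb’s book.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Strengthen the connection only when both cells are active together."""
    return weight + learning_rate * pre * post


weight = 0.0
# (activity of cell A, activity of cell B) at successive moments
activity = [(1, 1), (1, 0), (0, 1), (1, 1)]
for pre, post in activity:
    weight = hebbian_update(weight, pre, post)

print(weight)  # 0.2: only the two moments of joint firing changed the weight
```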

· 1950 – Turing found a way to measure the thinking capabilities of machines

The Turing Test is a test of artificial intelligence (AI) for determining whether or not a computer thinks like a human. The term “Turing Test” derives from Alan Turing, an English computer scientist, cryptanalyst, mathematician, and theoretical biologist who invented the test.

According to Turing, it is impossible to define intelligence in a machine; instead, if a computer can mimic human responses under specific circumstances, it may be said to have artificial intelligence. The original Turing Test requires three terminals, each physically separated from the other two. One terminal is operated by a computer, while humans use the other two.

During the experiment, one of the humans serves as the questioner, with the second human and the computer as respondents. The questioner interrogates the respondents within a specific subject area, using a specified format and context. After a set duration or number of questions, the questioner is asked to decide which respondent was human and which was the computer. The test is repeated many times. If the questioner identifies the computer correctly in half of the test runs or fewer, the computer is considered to have artificial intelligence, since the questioner did no better than chance at telling the two apart.

In 1950, Turing, who pioneered machine learning during the 1940s and 1950s, outlined the test in his paper “Computing Machinery and Intelligence.”

· 1952 – The first computer learning program was developed at IBM

Arthur Samuel’s Checkers program, which was created for play on the IBM 701, was shown to the public for the first time on television on February 24, 1956. Robert Nealey, a self-described checkers master, played the game on an IBM 7094 computer in 1962. The computer won. The Samuel Checkers program lost other games to Nealey. However, it was still regarded as a milestone for artificial intelligence and provided the public with an example of the abilities of an electronic computer in the early 1960s.

The more the program played, the better it performed at the game: it learned which moves made up winning strategies in a ‘supervised learning mode’ and incorporated them into its algorithm.

Samuel’s program was a groundbreaking story for the time. For the first time, a computer could beat a human at checkers. Electronic creations were challenging humanity’s intellectual advantage. To the technology-illiterate public of 1962, this was a significant event. It established the groundwork for machines to do other intelligent tasks better than humans. People started to wonder: will computers surpass humans in intelligence? After all, computers had only been around for a few years back then, and the artificial intelligence field was still in its infancy…


· 1958 – The Perceptron was designed

In July 1958, the United States Office of Naval Research unveiled a remarkable invention: the perceptron. An IBM 704, a 5-ton computer the size of a room, was fed a series of punch cards and, after 50 tries, learned to distinguish cards marked on the left from cards marked on the right.

It was a demonstration of the “perceptron,” which, according to its inventor, Frank Rosenblatt, was “the first machine capable of generating an original thought.”

“Stories about the creation of machines having human qualities have long been a fascinating province in the realm of science fiction,” Rosenblatt observed in 1958. “Yet we are about to witness the birth of such a machine – a machine capable of perceiving, recognizing, and identifying its surroundings without any human training or control.”

He was right about his vision, but it took almost half a century to deliver on it.
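The kind of learning shown in that demonstration can be imitated with the classic perceptron update rule. The sketch below is a toy reconstruction, not Rosenblatt’s original program: each “card” is a hypothetical list of four positions, a 1 marks a punched spot, and the label is 0 for marks on the left and 1 for marks on the right.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum is positive."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron rule: nudge the weights only after a wrong answer."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:             # every card classified correctly
            break
    return weights, bias


cards = [
    ([1, 0, 0, 0], 0), ([0, 1, 0, 0], 0), ([1, 1, 0, 0], 0),  # marked left
    ([0, 0, 1, 0], 1), ([0, 0, 0, 1], 1), ([0, 0, 1, 1], 1),  # marked right
]
weights, bias = train_perceptron(cards)
print([predict(weights, bias, x) for x, _ in cards])
```

Because the left and right cards are linearly separable, the rule is guaranteed to settle on weights that classify all of them; it offers no such guarantee for patterns that a single line cannot separate.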

· The 60s – Bell Labs’ attempt to teach machines how to read

The term “deep learning” was inspired by a report from the late 1960s describing how scientists at Bell Labs were attempting to teach computers to read English text. The invention of artificial intelligence, or “AI,” in the early 1950s began the trend toward what is now known as machine learning.

· 1967 – Machines gained the ability to recognize patterns

The “nearest neighbor” algorithm was created, allowing computers to conduct rudimentary pattern detection. When the program was given a new object, it compared the object to the existing data and assigned it the class of its nearest neighbor, meaning the most similar item in memory.

The invention of the pattern recognition algorithm is credited to Fix and Hodges, who introduced the k-nearest neighbor rule as a non-parametric technique for pattern classification in 1951, in an unpublished US Air Force School of Aviation Medicine report.
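The nearest neighbor idea fits in a few lines of Python. This is a minimal sketch of the one-neighbor case using Euclidean distance; the stored points and their labels are invented for the example.

```python
import math


def nearest_neighbor(memory, query):
    """Return the label of the stored example closest to `query`."""
    _, label = min(memory, key=lambda item: math.dist(item[0], query))
    return label


# (feature vector, label) pairs the program has already seen
memory = [
    ((1.0, 1.0), "circle"),
    ((1.5, 2.0), "circle"),
    ((5.0, 5.0), "square"),
    ((6.0, 4.5), "square"),
]

print(nearest_neighbor(memory, (2.0, 1.5)))  # circle
print(nearest_neighbor(memory, (5.5, 5.5)))  # square
```

There is no training step at all: the “model” is simply the stored examples, and all the work happens when a new object arrives.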

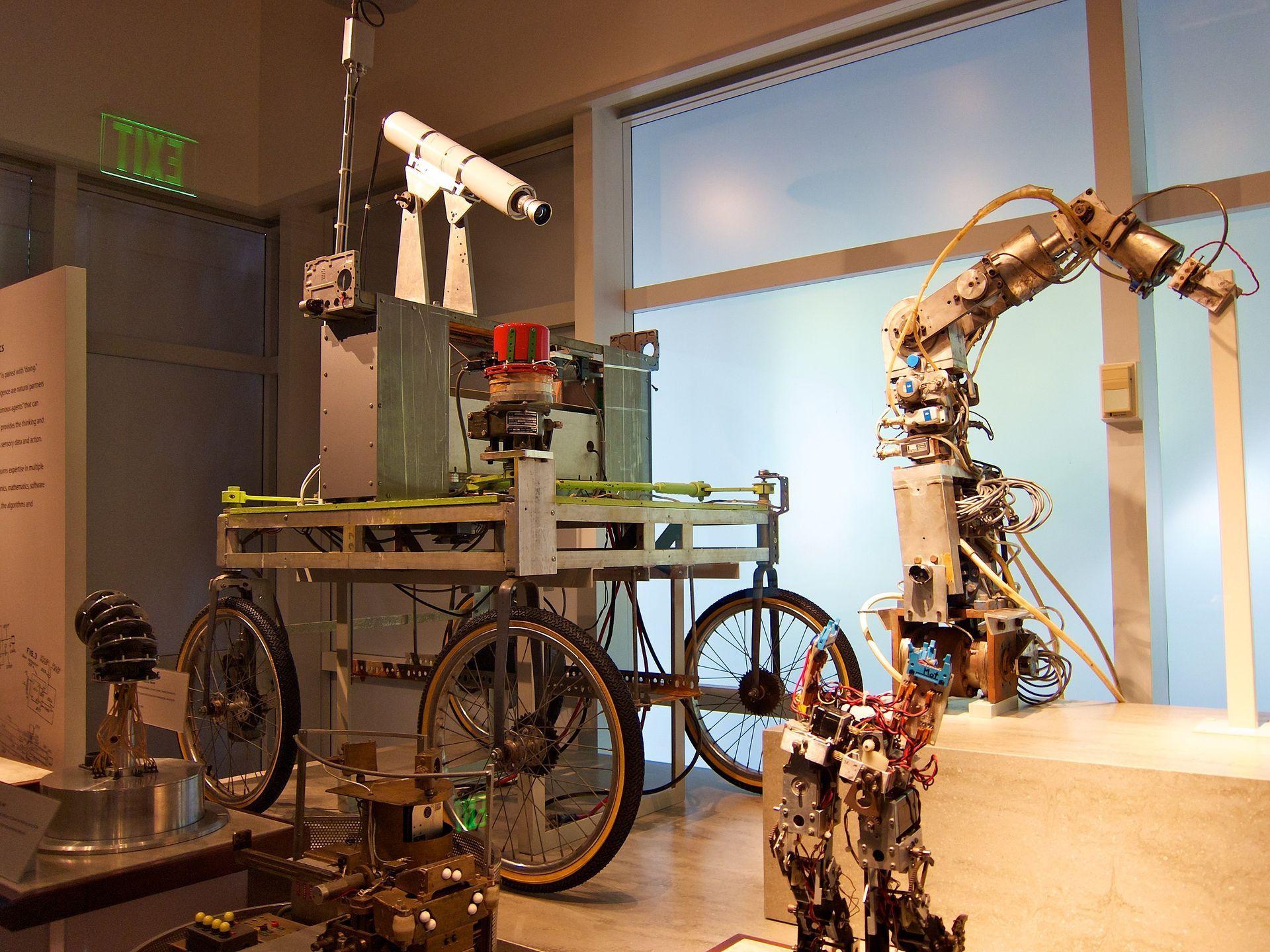

· 1979 – One of the first autonomous vehicles was invented at Stanford

The Stanford Cart was a decades-long endeavor that evolved in various forms from 1960 to 1980. It began as a remote-controlled, television-equipped mobile robot used to study what it would be like to operate a lunar rover from Earth, and it was eventually revitalized as an autonomous vehicle. In that form, the students’ invention could maneuver around obstacles in a room on its own.

A computer program was created to drive the Cart through cluttered spaces, obtaining all of its information about the world from on-board TV images. The Cart used a form of stereo vision to locate objects in three dimensions and determine its own motion. Based on a model created with this data, it planned an obstacle-avoiding route to the target destination. As the Cart encountered new obstacles on its trip, the plan evolved.


· 1981 – Explanation-based learning led to supervised learning

Gerald DeJong pioneered explanation-based learning (EBL) in a journal article published in 1981. EBL laid the foundation of modern supervised learning because training examples supplement prior knowledge of the world. The program analyzes the training data and eliminates unneeded information to create a general rule that can be applied to future instances. For example, if the software is instructed to concentrate on the queen in chess, it will discard all pieces that have no immediate effect.

· The 90s – Emergence of various machine learning applications

Scientists began to apply machine learning in data mining, adaptive software, web applications, text learning, and language learning in the 1990s. They created computer programs that could analyze massive amounts of data and draw conclusions or learn from the findings. The term “machine learning,” which Arthur Samuel had coined in 1959, came into wide use as scientists were finally able to develop software in such a way that it could learn and improve on its own, requiring no human input.

· The Millennium – The rise of adaptive programming

The new millennium saw an unprecedented boom in adaptive programming. Machine learning went hand in hand with adaptive solutions for a long time. These programs can identify patterns, learn from experience, and improve themselves based on the feedback they receive from the environment.

Deep learning is an example of adaptive programming, where algorithms can “see” and distinguish objects in pictures and videos. It is the underlying technology behind Amazon Go stores, where customers are charged as they walk out without having to stand in line.

· Today – Machine learning is a valuable tool for all industries

Machine learning is one of today’s cutting-edge technologies that has aided us in improving not just industrial and professional procedures but also day-to-day life. This branch of artificial intelligence uses statistical methods to create intelligent computer systems capable of learning from the data sources accessible to them.

Machine learning is already being utilized in various areas and sectors. Medical diagnosis, image processing, prediction, classification, learning association, and regression are just a few applications. Machine learning algorithms are capable of learning from previous experiences or historical data. Machine learning programs use the experience to produce outcomes.

Organizations use machine learning to gain insight into consumer trends and operational patterns, and to create new products. Many of today’s top businesses incorporate machine learning into their daily operations. For many businesses, machine learning has become a significant competitive differentiator. In fact, machine learning engineering is a growing field.

· Tomorrow – The future of Machine Learning: Chasing the quantum advantage

Actually, our article was supposed to end here, since we have reached the present day in the history of machine learning, but it doesn’t, because tomorrow holds more…

For example, Quantum Machine Learning (QML) is a young theoretical field investigating the interaction between quantum computing and machine learning methods. Quantum computing has recently been shown to have advantages for machine learning in several experiments. The overall objective of Quantum Machine Learning is to speed up learning tasks by combining what we know about quantum computing with conventional machine learning. The idea of Quantum Machine Learning is derived from classical Machine Learning theory and interpreted in that light.

The application of quantum computers in the real world has advanced rapidly during the last decade, with the potential benefit becoming more apparent. One important area of research is how quantum computers may affect machine learning. It’s recently been demonstrated experimentally that quantum computers can solve problems with complex correlations between inputs that are difficult for traditional systems.

According to Google’s research, quantum computers may be more beneficial in certain applications. Quantum models generated on quantum computing machines might be far more potent for particular tasks, allowing for quicker processing and generalization on fewer data. As a result, it’s crucial to figure out when such a quantum edge can be exploited…