Google AI’s Pathways Language Model can handle many tasks at once. For nearly two years, since OpenAI’s 175 billion-parameter GPT-3 language model revealed the potential of such systems, the world has watched wide-eyed as large language models (LLMs) do a great deal with very little data: answering questions, translating text, and even producing their own creative writing.

Pathways Language Model is the next-gen AI architecture

The success of GPT-3 prompted further models, such as Google’s LaMDA (137 billion parameters) and Microsoft and NVIDIA’s Megatron-Turing NLG (530 billion parameters), all of which performed well at few-shot learning. Now, Google has introduced a new large language model called Pathways Language Model (PaLM), a 540 billion-parameter Transformer model trained on Google’s new Pathways system.

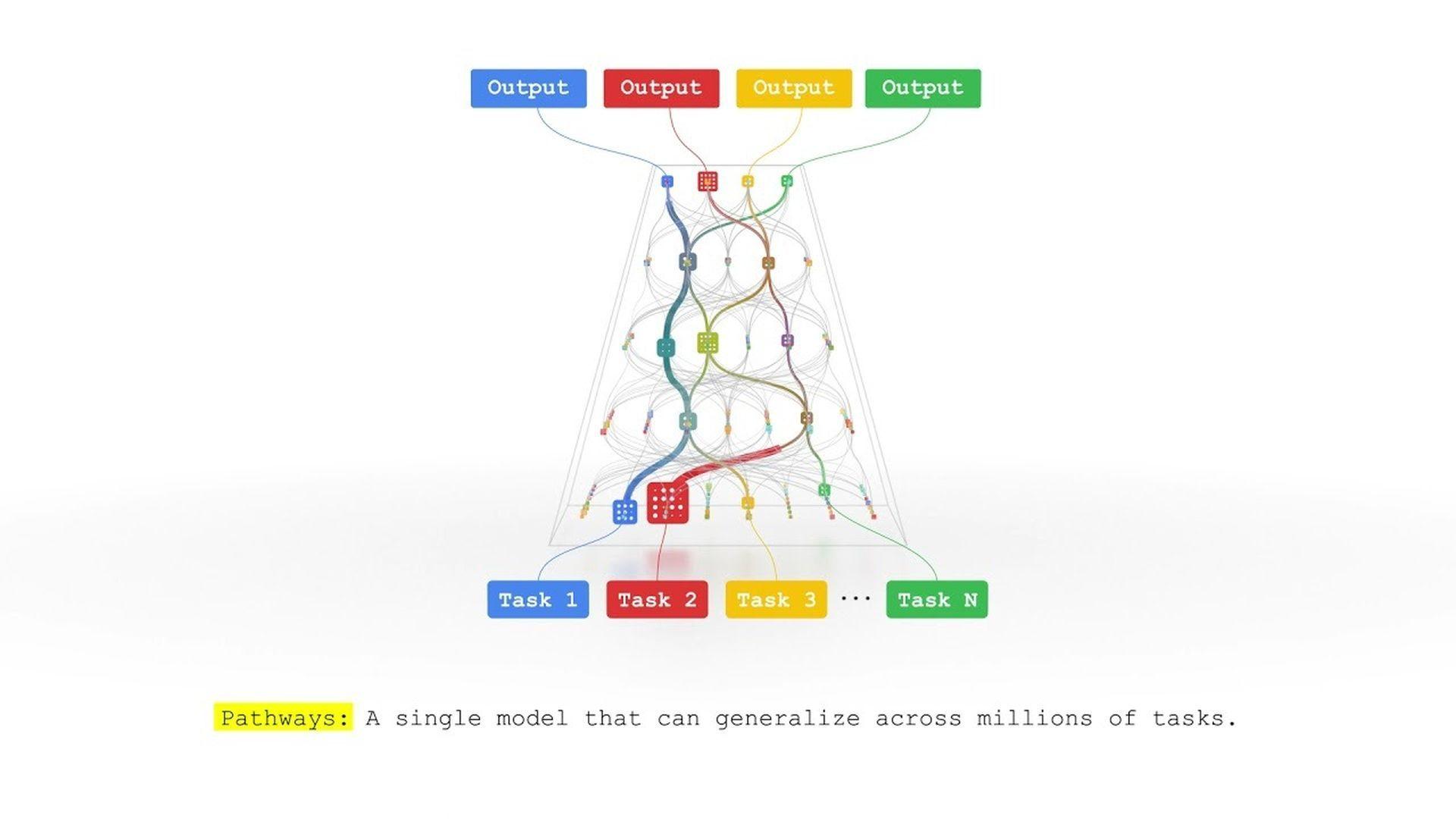

Jeff Dean, senior vice president of Google Research, introduced Pathways in October as a “next-generation AI architecture” that would allow developers to “train a single model to do thousands or millions of things” rather than just one.

“[We’d] like to train one model that can not only handle many separate tasks but also draw upon and combine its existing skills to learn new tasks faster and more effectively. That way what a model learns by training on one task—say, learning how aerial images can predict the elevation of a landscape—could help it learn another task—say, predicting how flood waters will flow through that terrain,” Mr. Dean said.

Pathways has advanced considerably since then, and PaLM appears to be the first major product of that work. “PaLM demonstrates the first large-scale application of the Pathways system to scale training to…the largest TPU-based system configuration used for training to date,” Google said. That training was carried out across two Cloud TPU v4 Pods comprising 6,144 chips in total.

“This is a significant increase in scale compared to most previous LLMs which were either trained on a single TPU v3 Pod (e.g., GLaM, LaMDA), used pipeline parallelism to scale to 2240 A100 GPUs across GPU clusters (Megatron-Turing NLG) or used multiple TPU v3 Pods (Gopher) with a maximum scale of 4096 TPU v3 chips,” Google said. PaLM achieved a hardware FLOPs utilization of 57.8 percent, which Google claims is the highest yet achieved by an LLM at this scale.
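For readers unfamiliar with the metric, hardware FLOPs utilization is usually understood as the ratio of the floating-point throughput actually observed during training to the theoretical peak of the hardware. The short Python sketch below illustrates that ratio. Only the chip count (6,144) and the 57.8 percent figure come from the article; the function name and the per-chip peak value are hypothetical placeholders chosen for illustration, not published TPU v4 specifications.

```python
def hardware_flops_utilization(
    observed_flops_per_sec: float,
    num_chips: int,
    peak_flops_per_chip: float,
) -> float:
    """Return observed throughput as a fraction of theoretical peak throughput."""
    if num_chips <= 0 or peak_flops_per_chip <= 0:
        raise ValueError("num_chips and peak_flops_per_chip must be positive")
    theoretical_peak = num_chips * peak_flops_per_chip
    return observed_flops_per_sec / theoretical_peak


# Chip count reported in the article.
NUM_CHIPS = 6144

# ASSUMPTION: placeholder peak throughput per chip, for illustration only.
ASSUMED_PEAK_FLOPS_PER_CHIP = 1.0e14

# ASSUMPTION: observed throughput back-computed from the reported 57.8 percent,
# so this example simply reproduces the article's figure.
observed = 0.578 * NUM_CHIPS * ASSUMED_PEAK_FLOPS_PER_CHIP

utilization = hardware_flops_utilization(
    observed, NUM_CHIPS, ASSUMED_PEAK_FLOPS_PER_CHIP
)
print(f"Hardware FLOPs utilization: {utilization:.1%}")
```

Under this reading, a utilization of 57.8 percent means that slightly more than half of the cluster's theoretical compute capacity was doing useful arithmetic during training.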

According to Google, PaLM, which was trained on a mix of English and multilingual data, showed breakthrough capabilities on a number of difficult tasks, outperforming GPT-3, LaMDA, and Megatron-Turing NLG on 28 of 29 tasks ranging from question answering to sentence completion.

Google AI: PaLM is able to explain an original joke

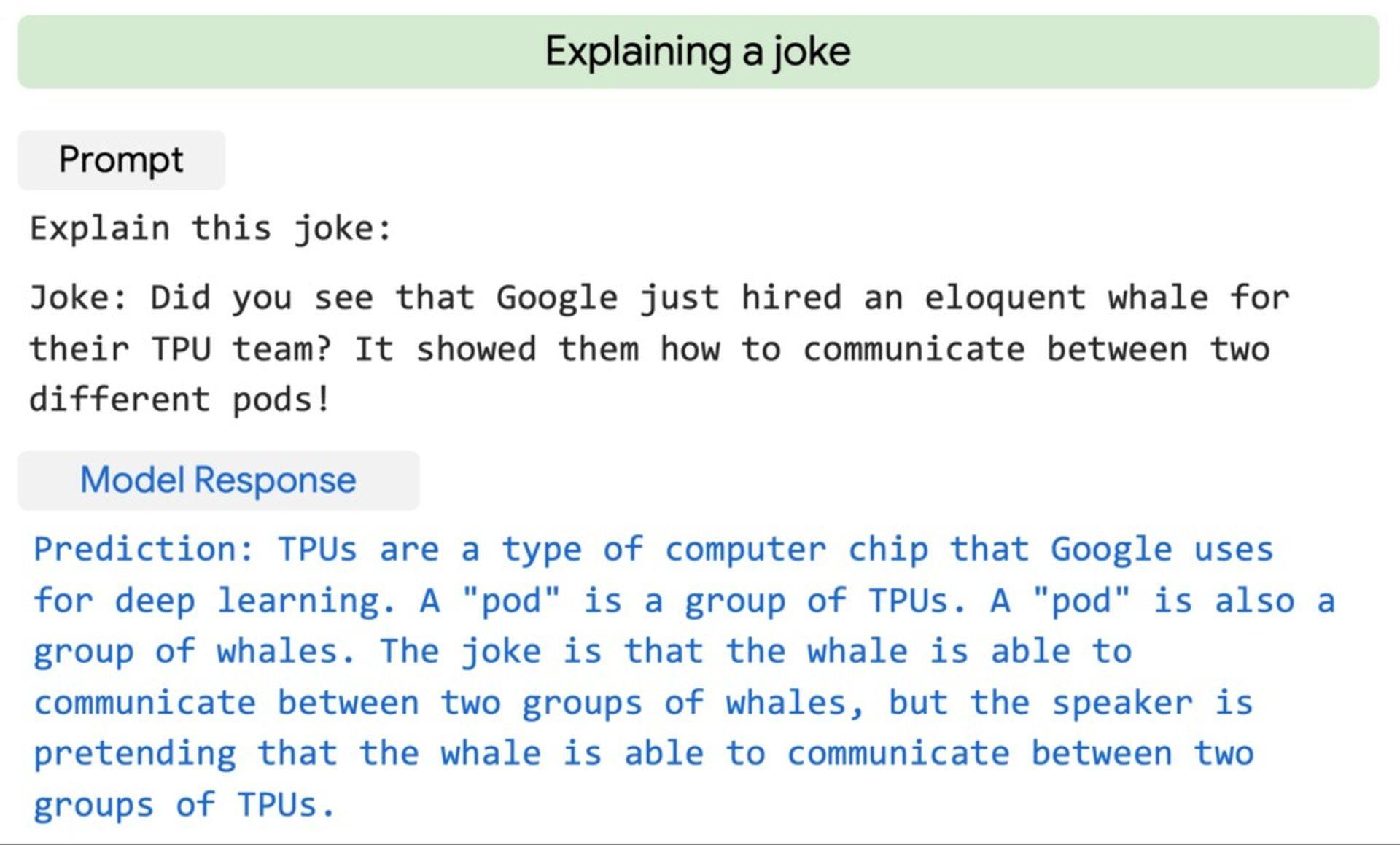

“PaLM can distinguish cause and effect, understand conceptual combinations in appropriate contexts, and even guess the movie from an emoji,” said Google.

Google also showed a striking example of the Pathways Language Model explaining an original joke.

Given the capabilities of PaLM, Google includes a note on ethical considerations for large language models, which are a subject of great interest and concern among AI ethics researchers.

“While the analysis helps outline some potential risks of the model, domain- and task-specific analysis is essential to truly calibrate, contextualize, and mitigate possible harms. Further understanding of risks and benefits of these models is a topic of ongoing research, together with developing scalable solutions that can put guardrails against malicious uses of language models,” researchers noted.

Closing out the session, Google emphasized that PaLM’s progress brings it closer to its Pathways architecture goal of allowing a single artificial intelligence system to generalize across thousands or millions of tasks, understand diverse types of data, and do so with remarkable efficiency.