A recent study from MIT Lincoln Laboratory and Northeastern University has investigated how much energy can be saved by power capping the GPUs used in model training and inference, along with several other methods for reducing AI energy use, amid growing concern over the energy demands of huge machine learning models.

Power capping can significantly reduce energy usage when training ML

The study focuses mainly on power capping (limiting the power available to the GPU training the model). The authors find that power capping yields significant energy savings, especially for Masked Language Modeling (MLM) and models such as BERT and its descendants.

Similar savings can be obtained, as a trade-off between training time and energy usage, for larger-scale models, which have attracted attention in recent years owing to hyperscale data and new models with billions or trillions of parameters.

For larger deployments, the researchers found that lowering the power limit to 150W produced an average 13.7% reduction in energy usage and a modest 6.8% increase in training time compared to the default 250W maximum.

The researchers further contend that, despite the headlines about the cost of model training in recent years, the energy requirements of utilizing those trained models are significantly greater.

“For language modeling with BERT, energy gains through power capping are noticeably greater when performing inference than training. If this is consistent for other AI applications, this could have significant ramifications in energy consumption for large-scale or cloud computing platforms serving inference applications for research and industry.”

The study also suggests scheduling extensive machine learning training for the colder months of the year and for nighttime to save on cooling costs.

“Evidently, heavy NLP workloads are typically much less efficient in the summer than those executed during winter. Given the large seasonal variation, if there are computationally expensive experiments that can be timed to cooler months, this timing can significantly reduce the carbon footprint,” the authors stated.

The study also recognizes the potential for energy savings in optimizing model architecture and processes. However, it leaves further development to other efforts.

Finally, the authors advocate for new scientific papers from the machine learning industry to end with a statement that details the energy usage of the study and the potential energy consequences of adopting technologies documented in it.

The study, titled “Great Power, Great Responsibility: Recommendations for Reducing Energy for Training Language Models,” was conducted by six researchers from MIT Lincoln Laboratory and Northeastern University: Joseph McDonald, Baolin Li, Nathan Frey, Devesh Tiwari, Vijay Gadepally, and Siddharth Samsi.

How to create power-efficient ML?

To achieve the same level of accuracy, machine learning algorithms require increasingly large amounts of data and computing power, yet the current ML culture equates energy usage with improved performance.

According to a 2022 MIT collaboration, achieving a tenfold improvement in model performance would require a 10,000-fold increase in computation, along with a corresponding increase in energy.

As a result, interest in more power-efficient ML training has grown in recent years. According to the researchers, the new paper is the first to focus on the effect of power caps on machine learning training and inference, with particular emphasis on NLP approaches.

“[This] method does not affect the predictions of trained models or consequently their performance accuracy on tasks. That is, if two networks with the same structure, initial values, and batched data are trained for the same number of batches under different power caps, their resulting parameters will be identical, and only the energy required to produce them may differ,” explained the authors.

To evaluate the impact of power capping on training and inference, the researchers used nvidia-smi (the NVIDIA System Management Interface command-line tool) and a HuggingFace MLM library.
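As a rough illustration of how a power cap can be applied with nvidia-smi, the sketch below builds the relevant command lines in Python. The `-i`, `-pl`, `--query-gpu`, and `--format` options are standard nvidia-smi flags; the helper names (`power_cap_command`, `power_query_command`, `parse_power_query`) are hypothetical and are not taken from the paper, which does not publish its scripts in this article.

```python
from typing import List, Tuple


def power_cap_command(gpu_index: int, watts: int) -> List[str]:
    """Build the nvidia-smi call that sets a power limit (in watts) on one GPU.

    Setting a limit normally requires administrator privileges.
    """
    if watts <= 0:
        raise ValueError("power limit must be a positive number of watts")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def power_query_command(gpu_index: int) -> List[str]:
    """Build the nvidia-smi call that reports current draw and current limit."""
    return [
        "nvidia-smi",
        "-i", str(gpu_index),
        "--query-gpu=power.draw,power.limit",
        "--format=csv,noheader,nounits",
    ]


def parse_power_query(line: str) -> Tuple[float, float]:
    """Parse one CSV line such as '43.21, 250.00' into (draw_w, limit_w)."""
    draw, limit = (float(field) for field in line.split(","))
    return draw, limit


# Print the commands for the four caps used in the study (nothing is executed).
for cap in (100, 150, 200, 250):
    print(" ".join(power_cap_command(0, cap)))
```

To actually apply a cap, each command list could be passed to `subprocess.run` on a machine with an NVIDIA driver installed; that step is left out here so the sketch runs anywhere.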

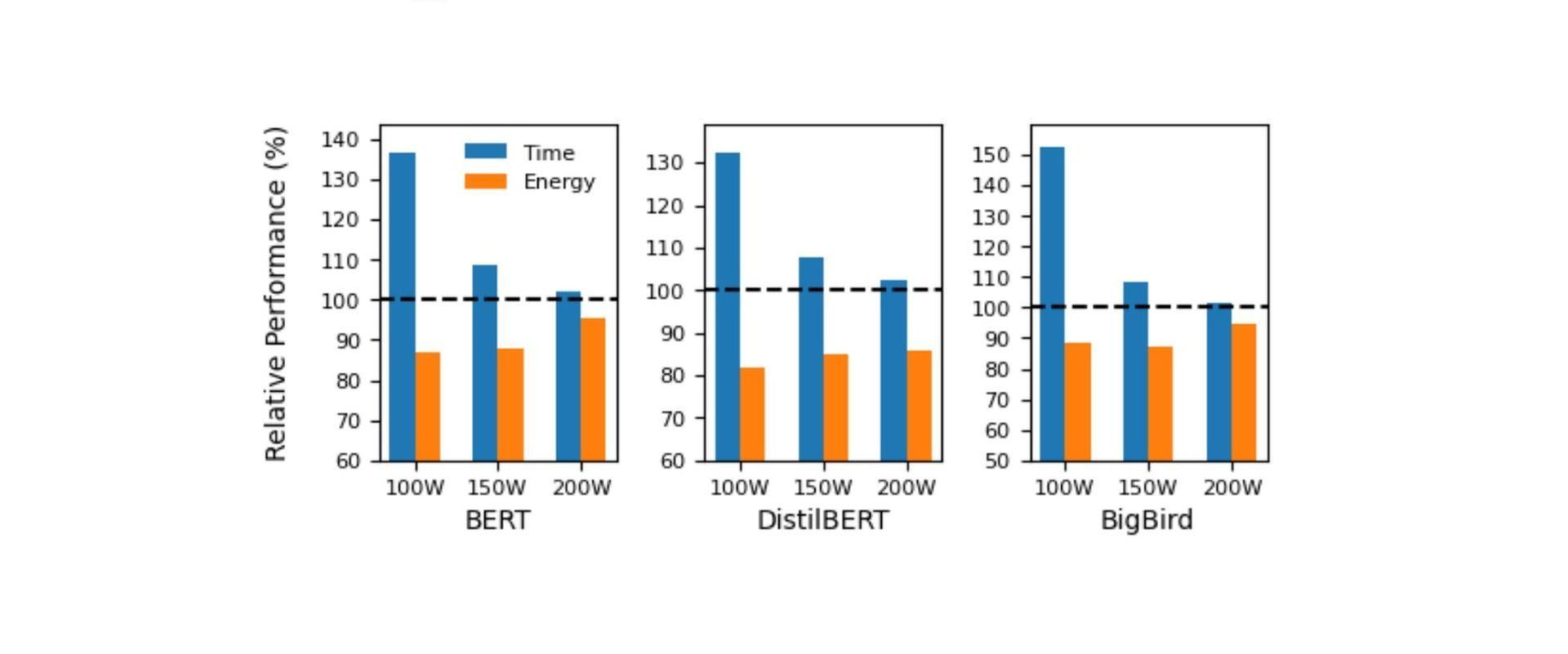

The researchers trained BERT, DistilBERT, and Big Bird using MLM and tracked their energy usage throughout training and deployment.

For the experiment, the WikiText-103 dataset was used for four epochs of training with a batch size of eight on 16 V100 GPUs, under four different power caps: 100W, 150W, 200W, and 250W (the default, or baseline, for an NVIDIA V100 GPU). To guard against bias, the models were trained from scratch with randomly initialized parameters.

As the first graph shows, substantial energy savings can be achieved with a favorable, non-linear change in training time.

“Our experiments indicate that implementing power caps can significantly reduce energy usage at the cost of training time,” said the authors.

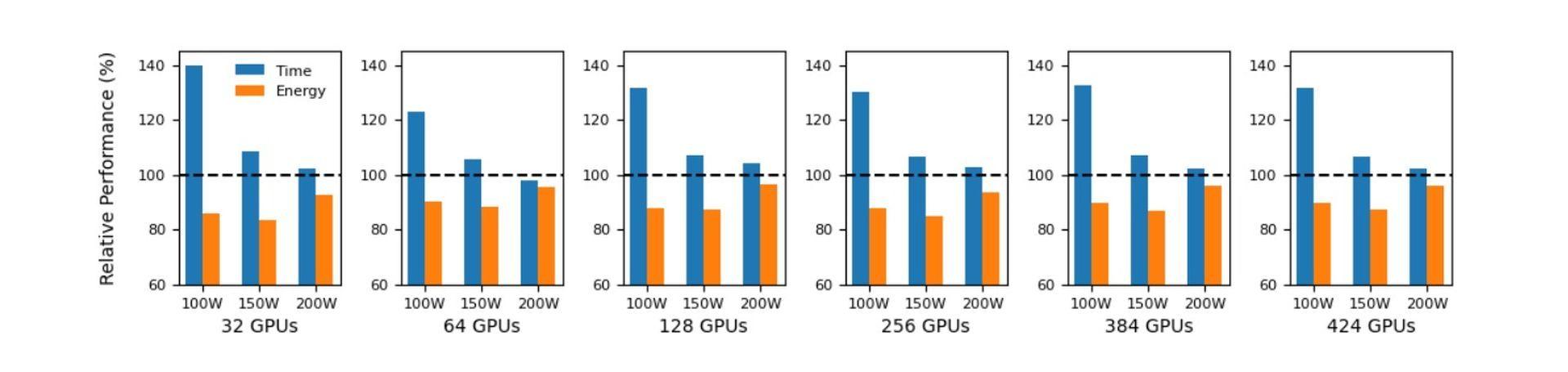

The authors then used the same method to tackle a more challenging problem: training BERT on distributed configurations of multiple GPUs, which is a more typical case for well-funded and well-publicized FAANG NLP models.

The paper states:

“Averaging across each configuration choice, a 150W bound on power utilization led to an average 13.7% decrease in energy usage and 6.8% increase in training time compared to the default maximum. [The] 100W setting has significantly longer training times (31.4% longer on average).”

The authors added that a 200W limit corresponds to almost the same training time as a 250W limit but yields more modest energy savings than a 150W limit.
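One way to read the 150W averages quoted above is to combine them into an implied average power draw, since average power is energy divided by time. The short sketch below does that arithmetic; the function name is hypothetical, and the result is a back-of-the-envelope figure derived from the quoted averages, not a number reported by the paper.

```python
def relative_average_power(energy_change: float, time_change: float) -> float:
    """Return average power draw relative to the baseline run.

    Both arguments are fractional changes versus the baseline,
    e.g. -0.137 for a 13.7% energy decrease and 0.068 for 6.8% more time.
    """
    relative_energy = 1.0 + energy_change
    relative_time = 1.0 + time_change
    if relative_time <= 0:
        raise ValueError("time_change must be greater than -1")
    # Average power = energy / time, so the ratios divide the same way.
    return relative_energy / relative_time


ratio_150w = relative_average_power(energy_change=-0.137, time_change=0.068)
print(f"Implied average draw at the 150W cap: {ratio_150w:.1%} of baseline")
```

Under these assumptions the capped run draws roughly 81% of the baseline's average power while taking 6.8% longer, which is where the net energy saving comes from.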

The researchers concluded that these findings support capping GPUs, and the applications that run on them, at 150W. They also repeated the tests on NVIDIA K80, T4, and A100 GPUs and noted that the energy savings carry over to other hardware platforms.

Inference requires a lot of power

Despite the headlines, prior research indicates that it is inference (i.e., using a completed model, such as an NLP model) rather than training that consumes the most power. This implies that as popular models are commercialized and enter the mainstream, power usage may become more problematic than it is at this early phase of NLP development.

The researchers quantified the influence of inference on power usage, finding that restricting power use has a significant impact on inference latency:

“Compared to 250W, a 100W setting required double the inference time (a 114% increase) and consumed 11.0% less energy, 150W required 22.7% more time and saved 24.2% the energy, and 200W required 8.2% more time with 12.0% less energy,” explained the authors.
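The inference figures quoted above can be treated as a small lookup table for choosing a cap. The sketch below picks the setting with the largest energy saving that stays within a tolerated slowdown. The selection rule and the names `INFERENCE_TRADEOFFS` and `best_cap` are illustrative assumptions, not a procedure from the paper; only the percentages come from the quote.

```python
# Power cap (W) -> (fractional time increase, fractional energy saving),
# both relative to the 250W default, as quoted by the authors.
INFERENCE_TRADEOFFS = {
    250: (0.0, 0.0),
    200: (0.082, 0.120),
    150: (0.227, 0.242),
    100: (1.14, 0.110),
}


def best_cap(max_time_increase: float) -> int:
    """Return the cap with the largest energy saving within the time budget."""
    if max_time_increase < 0:
        raise ValueError("max_time_increase must be non-negative")
    savings = {
        cap: saving
        for cap, (slowdown, saving) in INFERENCE_TRADEOFFS.items()
        if slowdown <= max_time_increase
    }
    return max(savings, key=savings.get)


for budget in (0.05, 0.10, 0.25):
    print(f"Up to {budget:.0%} slower -> cap at {best_cap(budget)}W")
```

Note that the 100W setting is never selected under this rule: according to the quoted figures it is both slower and less energy-efficient than 150W.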

The importance of PUE

The paper’s authors propose that training be scheduled for times when Power Usage Effectiveness (PUE) is lowest, roughly in winter and at night, when the data center is most efficient.

“Significant energy savings can be obtained if workloads can be scheduled at times when a lower PUE is expected. For example, moving a short-running job from daytime to nighttime may provide a roughly 10% reduction, and moving a longer, expensive job (e.g., a language model taking weeks to complete) from summer to winter may see a 33% reduction. While it is difficult to predict the savings that an individual researcher may achieve, the information presented here highlights the importance of environmental factors affecting the overall energy consumed by their workloads,” stated the authors.
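PUE is defined as total facility energy divided by the energy delivered to the IT equipment, so the facility-level cost of a job scales with the PUE at the time it runs. The sketch below shows that relationship. The PUE values of 1.5 and 1.2 are hypothetical numbers chosen only for illustration and are not measurements from the paper; the function names are likewise made up for this example.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy for a job, given its IT energy and the PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def scheduling_saving(pue_before: float, pue_after: float) -> float:
    """Fractional facility-energy saving from moving a job to a lower PUE.

    Assumes the job's own IT energy is unchanged by the move.
    """
    return 1.0 - pue_after / pue_before


# Hypothetical example: a 100 kWh job moved from PUE 1.5 to PUE 1.2.
before = facility_energy_kwh(100.0, 1.5)
after = facility_energy_kwh(100.0, 1.2)
print(f"{before:.0f} kWh -> {after:.0f} kWh "
      f"({scheduling_saving(1.5, 1.2):.0%} saving)")
```

The saving depends only on the ratio of the two PUE values, which is why the same job can cost noticeably less energy overall when run at night or in winter.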

The paper also suggests that, because local computing resources are unlikely to have implemented the same efficiency measures as large data centers and major cloud providers, moving workloads to facilities that have invested heavily in energy efficiency may provide environmental benefits.

“While there is convenience in having private computing resources that are accessible, this convenience comes at a cost. Generally speaking, energy savings and impact are more easily obtained at larger scales. Datacenters and cloud computing providers make significant investments in the efficiency of their facilities,” added the authors.

This is not the only attempt to create power-efficient machine learning and artificial intelligence models. Other recent research suggests that nanomagnets could pave the way for low-energy AI.