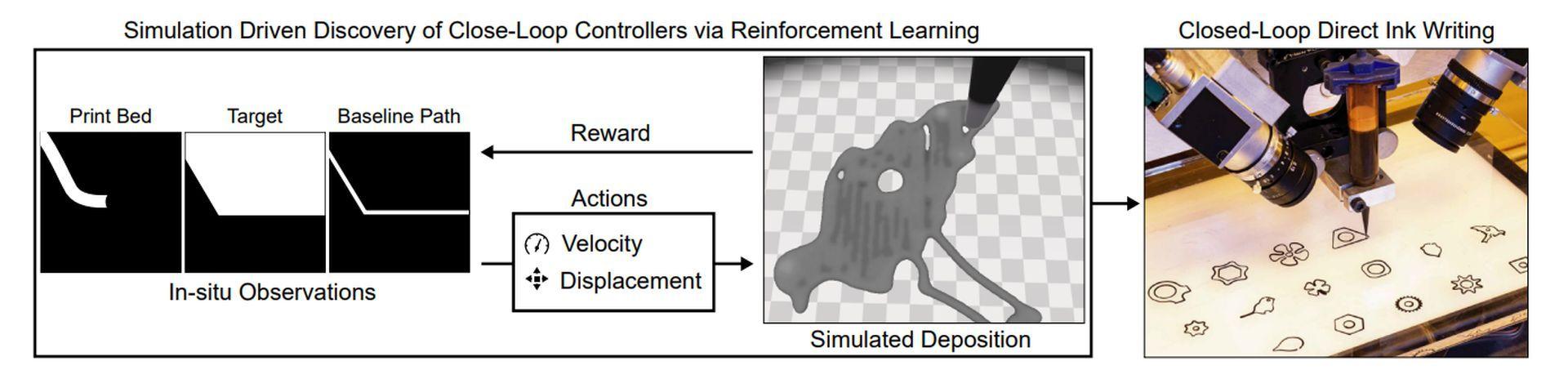

Researchers have trained a new AI system to monitor and adjust digital manufacturing processes in real time. Scientists and engineers are continually developing new materials with special properties that can be used for 3D printing, but figuring out how to print with them can be difficult and expensive.

A simulation teaches the digital manufacturing process to AI

To find the parameters that consistently produce high-quality prints with a new material, an expert operator often has to run manual trial-and-error experiments, sometimes making thousands of prints. These parameters include printing speed and how much material the printer deposits.

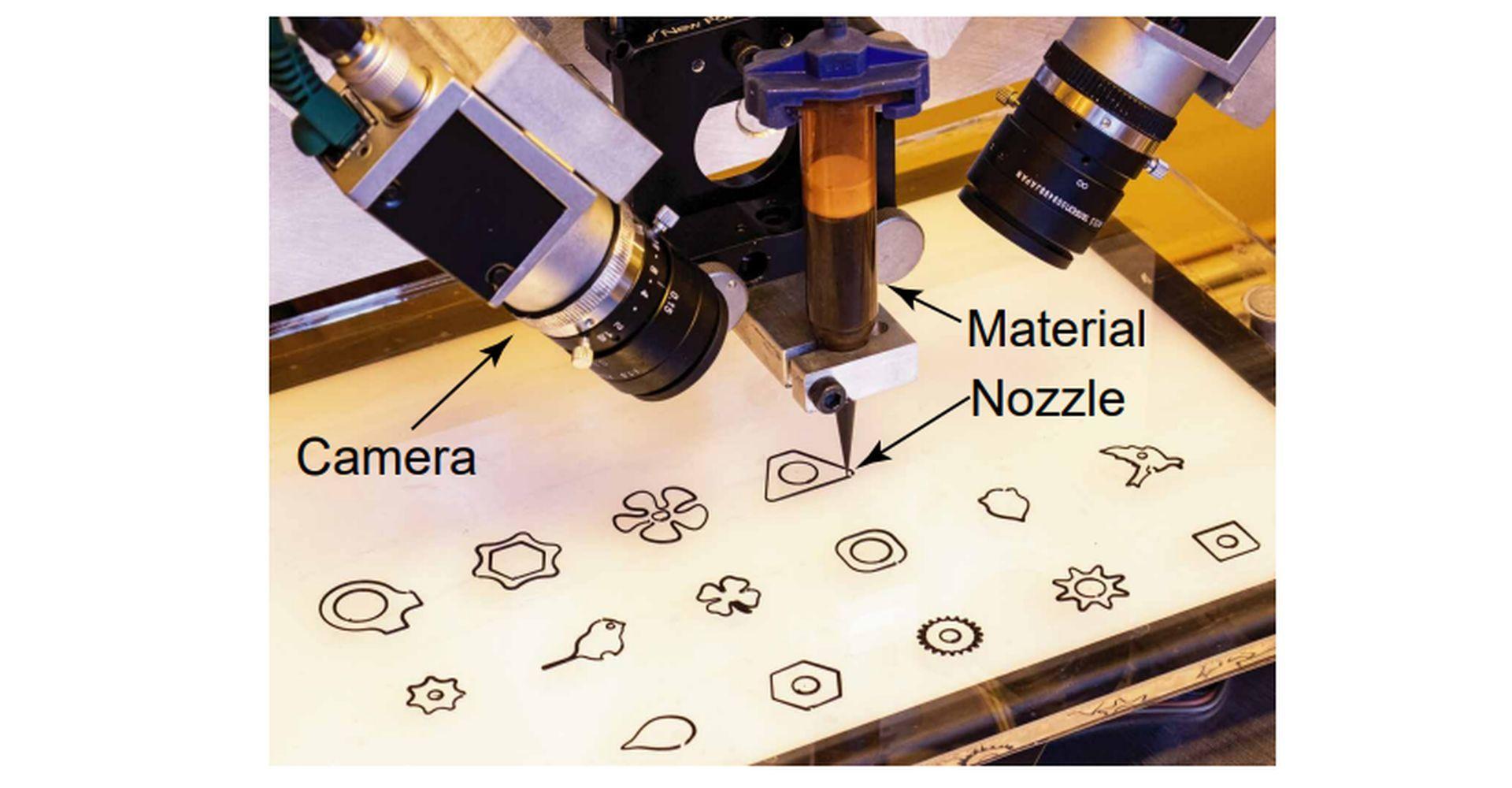

MIT researchers have now used artificial intelligence to streamline this process. They built a machine-learning system that uses computer vision to watch the manufacturing process and correct errors in how the printer handles the material in real time.

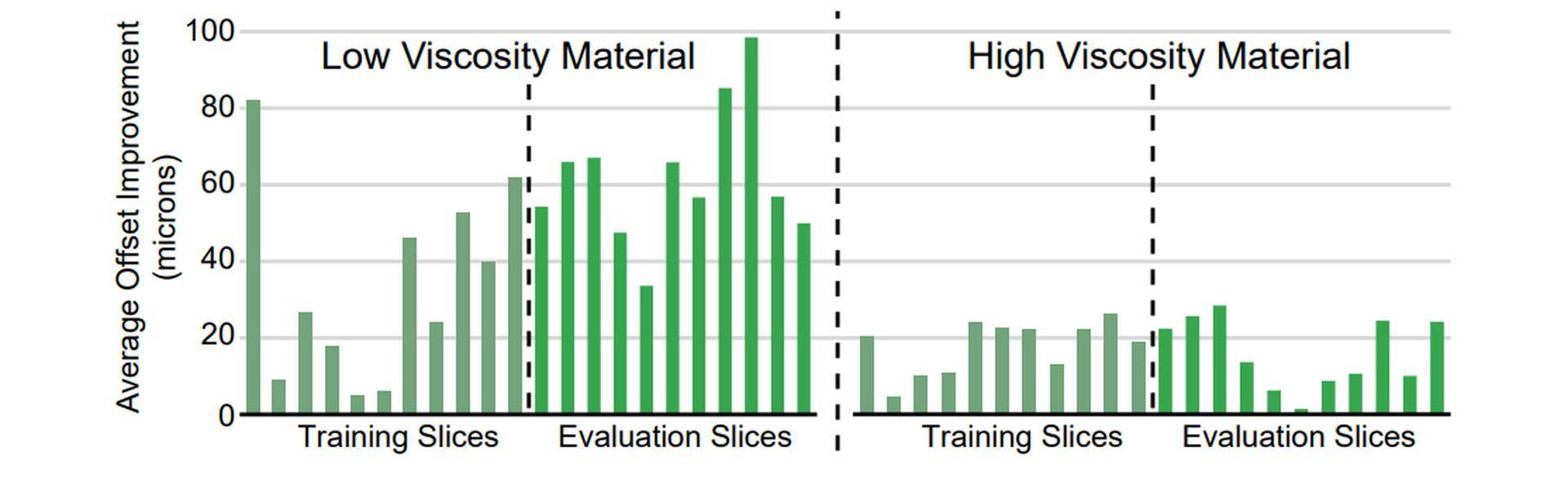

They used simulations to teach a neural network how to adjust printing parameters to minimize error, then applied that controller to a real 3D printer. Their system printed objects more accurately than the other 3D printing controllers they compared it with.

The work avoids the costly process of printing tens of thousands or even hundreds of millions of real objects to train the neural network. It could also make it easier for engineers to incorporate new materials into their designs, enabling them to create products with unique chemical or electrical properties. And if the environment or the printed material changes unexpectedly, it could help technicians adjust the printing process quickly.

“This project is really the first demonstration of building a manufacturing system that uses machine learning to learn a complex control policy. If you have manufacturing machines that are more intelligent, they can adapt to the changing environment in the workplace in real-time to improve the yields or the accuracy of the system. You can squeeze more out of the machine,” says Wojciech Matusik, a senior author and MIT professor of electrical engineering and computer science who directs the Computational Design and Fabrication Group (CDFG) in the Computer Science and Artificial Intelligence Laboratory.

The co-lead authors are Michal Piovarci, a postdoc at the Institute of Science and Technology in Austria, and Mike Foshey, a mechanical engineer and project manager with the CDFG. Other authors include Jie Xu, an electrical engineering and computer science graduate student, and Timothy Erps, a former technical associate with the CDFG. The study will be presented at the SIGGRAPH conference of the Association for Computing Machinery.

The parameters

Because so much trial and error is involved, choosing the optimal parameters for a digital manufacturing process can be one of the most expensive and difficult parts of the job. And once a technician finds a combination that works well, those parameters are only optimal for that one specific situation. The technician has little information about how the material will behave in other environments, on different hardware, or when a new batch has different properties.

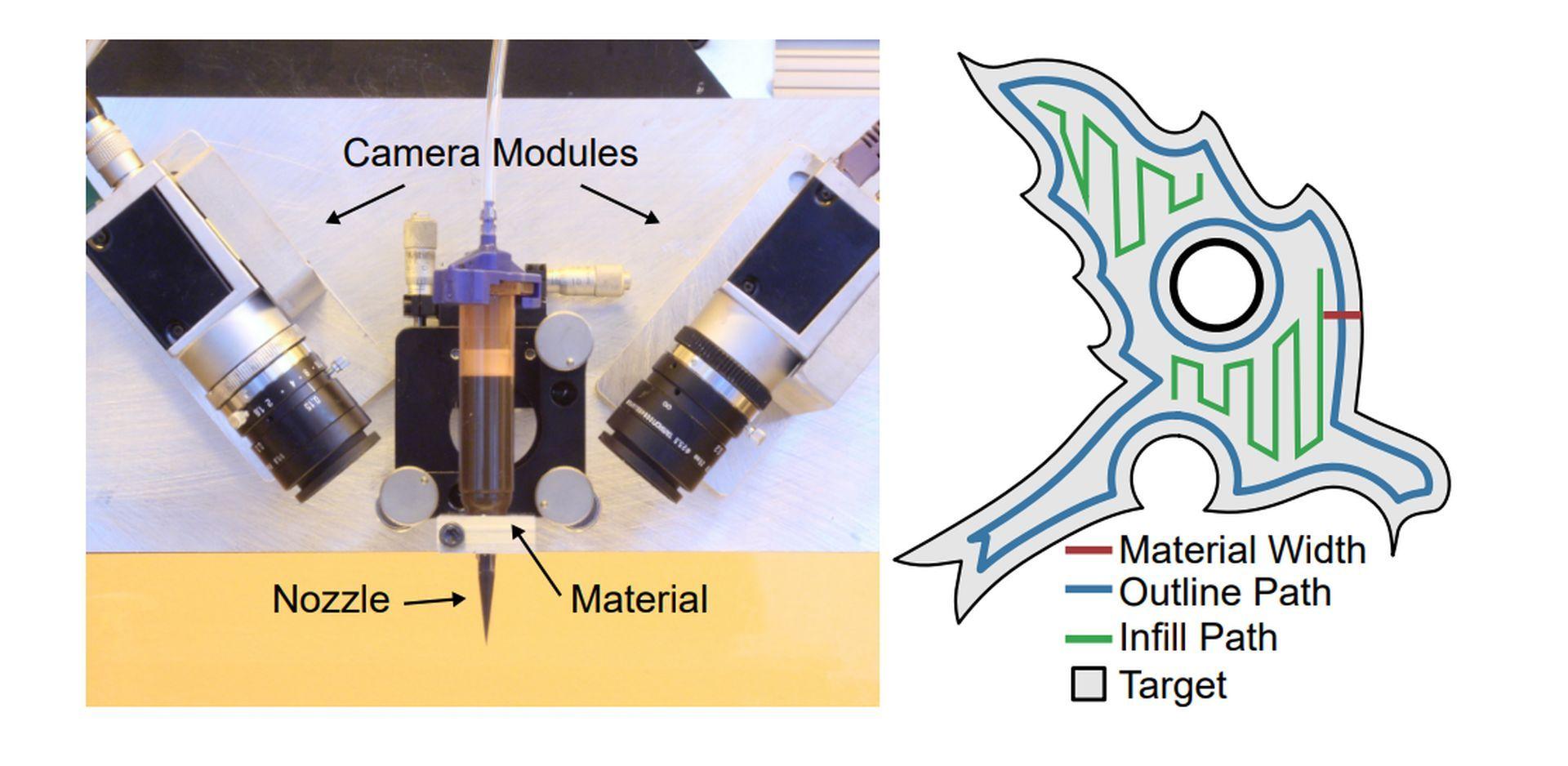

Using a machine-learning system brings challenges of its own. First, the researchers had to measure what was happening on the printer in real time.

To do this, they built a machine-vision system with two cameras aimed at the 3D printer’s nozzle. The system shines light on the material as it is deposited and calculates the material’s thickness from how much light passes through.
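
The article does not say how thickness is computed from transmitted light. One common way to model such a measurement is the Beer-Lambert law, in which transmitted intensity falls off exponentially with thickness. The sketch below assumes that model; the function name, its parameters, and the attenuation coefficient are hypothetical and are not taken from the researchers' system.

```python
import math


def thickness_from_transmission(intensity, incident_intensity, attenuation_coeff):
    """Estimate material thickness from transmitted light.

    Assumes the Beer-Lambert law (an assumption, not the researchers'
    documented method): I = I0 * exp(-mu * d), so d = ln(I0 / I) / mu.
    """
    if attenuation_coeff <= 0.0:
        raise ValueError("attenuation_coeff must be positive")
    if not 0.0 < intensity <= incident_intensity:
        raise ValueError("intensity must be in (0, incident_intensity]")
    return math.log(incident_intensity / intensity) / attenuation_coeff


# The less light gets through, the thicker the estimated deposit.
thin = thickness_from_transmission(0.5, 1.0, attenuation_coeff=2.0)
thick = thickness_from_transmission(0.2, 1.0, attenuation_coeff=2.0)
print(thin < thick)
```

In practice the attenuation coefficient would have to be calibrated for each material, which is one reason a camera-based measurement like this is harder than it looks.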

“You can think of the vision system as a set of eyes watching the process in real-time,” Foshey explains.

The controller then processes the images it receives from the vision system and, based on any errors it detects, adjusts the printer’s feed rate and direction.

Training a neural network-based controller to understand this manufacturing process is data-intensive and would require making millions of prints. So instead, the researchers built a simulator.

Reinforcement learning is used to train the controller

To train their controller, they used reinforcement learning, a technique in which a model learns through trial and error and is rewarded for good outcomes. The model was tasked with choosing printing parameters that would produce a specific object in a simulated environment. It was shown the expected result and rewarded when the parameters it chose minimized the error between its print and that result.

An “error” in this context means that the model either dispensed too much material, filling in spaces that should have remained empty, or not enough material, leaving spaces that needed to be filled in. As it ran more simulated prints, the model updated its control policy to maximize the reward and became steadily more accurate.
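
The two kinds of error described above can be made concrete with a small sketch. It assumes the print and the target are both represented as height maps (2D arrays of material thickness) and that the reward is simply the negative of the total error. Both choices, and the function names, are illustrative assumptions rather than the researchers' actual formulation.

```python
import numpy as np


def deposition_error(printed, target):
    """Return (over, under): total excess and total missing material.

    Assumes both inputs are height maps of the same shape
    (an illustrative representation, not the researchers' own).
    """
    diff = np.asarray(printed, dtype=float) - np.asarray(target, dtype=float)
    over = float(np.clip(diff, 0.0, None).sum())    # material where none belongs
    under = float(np.clip(-diff, 0.0, None).sum())  # gaps that should be filled
    return over, under


def reward(printed, target):
    """Hypothetical reward: higher (closer to zero) means a more accurate print."""
    over, under = deposition_error(printed, target)
    return -(over + under)


target = np.array([[1.0, 1.0, 0.0],
                   [1.0, 1.0, 0.0]])
printed = np.array([[1.0, 0.5, 0.25],
                    [1.0, 1.0, 0.0]])
print(deposition_error(printed, target))  # (0.25, 0.5)
print(reward(printed, target))            # -0.75
```

A reinforcement learning algorithm would then adjust the control policy so that, over many simulated prints, this reward moves toward zero.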

The real world, however, is messier than a simulation. In practice, conditions change because of slight variations or noise in the printing process. So the researchers built a numerical model that approximates the noise of a 3D printer and used it to add noise to the simulation, which made the simulated results more realistic.
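
The article does not describe the form of the noise model. As a rough illustration of the general idea, the toy simulator below perturbs an idealized deposit with zero-mean Gaussian noise. The linear flow-to-width relationship, the Gaussian noise, and every name in the code are assumptions made for this sketch.

```python
import numpy as np


def simulate_bead_widths(flow_rates, noise_std=0.0, seed=0):
    """Toy simulator: deposited bead width per step of a print path.

    Assumes width equals the commanded flow rate plus zero-mean Gaussian
    noise (an illustrative stand-in for the researchers' noise model).
    """
    rng = np.random.default_rng(seed)
    flow = np.asarray(flow_rates, dtype=float)
    noise = rng.normal(0.0, noise_std, size=flow.shape)
    # A deposit cannot have negative width.
    return np.clip(flow + noise, 0.0, None)


commanded = [1.0, 1.0, 1.2, 0.8]
ideal = simulate_bead_widths(commanded)                 # noise-free simulation
noisy = simulate_bead_widths(commanded, noise_std=0.1)  # noisier, more realistic
print(ideal)
print(noisy)
```

A policy trained only on the noise-free output never sees the variation a real printer produces, whereas one trained on the noisy output has to cope with it, which is the intuition behind training with simulated noise.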

“The interesting thing we found was that, by implementing this noise model, we were able to transfer the control policy that was purely trained in simulation onto hardware without training with any physical experimentation. We didn’t need to do any fine-tuning on the actual equipment afterwards,” Foshey says.

When the researchers tested the controller, it printed objects more accurately than any other control method they evaluated. It performed especially well at infill printing, which is printing the interior of an object. Some other controllers deposited so much material that the printed object bulged up, but the researchers’ controller adjusted the printing path so the object stayed level.

Their control policy can even learn how materials spread after they are deposited and adjust the parameters accordingly.

“We were also able to design control policies that could control different types of materials on the fly. So if you had a manufacturing process out in the field and you wanted to change the material, you wouldn’t have to revalidate the manufacturing process. You could just load the new material, and the controller would automatically adjust,” Foshey explains.

Now that they have demonstrated the effectiveness of this method for 3D printing, the researchers intend to develop controllers for other digital manufacturing processes. They would also like to see how the approach can be adapted to situations with several layers of material, or with multiple materials being printed at once. In addition, their method assumed that each material has a constant viscosity (or “syrupiness”), but a later version could use artificial intelligence to detect and account for viscosity in real time.