Machine learning pipeline architectures streamline and automate a machine learning model’s whole workflow. Once laid out within a machine learning pipeline, fundamental components of the machine learning process can be improved or automated. Models are created and applied in an increasing range of contexts as more businesses take advantage of machine learning. This development is standardized through the use of a machine learning pipeline, which also improves model accuracy and efficiency.

Machine learning pipeline architecture explained

Defining each phase as a separate module of the overall process is a crucial step in creating machine learning pipelines. This modular approach lets organizations organize and manage the end-to-end process and understand their machine learning models holistically.

Because individual modules can be scaled up or down inside the machine learning process, the modular approach also offers a solid foundation for scaling models. A machine learning pipeline’s various stages can also be modified and repurposed for use with a new model to achieve even greater efficiency gains.

What is a machine learning pipeline?

The machine learning pipeline architecture facilitates the automation of an ML workflow: it enables a sequence of data to be transformed and correlated into a model for analysis and output. The purpose of an ML pipeline is to turn raw data into useful information.

It also offers a way to build a system of multiple parallel ML pipelines in order to compare the results of various ML techniques. Its goal is to exert control over the machine learning model. A well-planned pipeline makes the implementation more flexible. Much like having an overview of a codebase, it makes it easier to identify a fault and replace it with the appropriate code.

What is an end-to-end machine learning pipeline?

Building a machine learning model can benefit greatly from machine learning pipelines. With clearly documented pipelines, some stages (such as data import and cleaning) can run automatically while data scientists concentrate on the remaining stages. Pipelines can also be executed in parallel to increase efficiency. Because each stage is precisely specified and optimized, pipelines can be reused, repurposed, and modified to fit the needs of new models, which makes the process simple to scale.
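
As a rough illustration of parallel execution, the sketch below runs two stand-in pipelines on the same data and collects their results for comparison. The pipeline functions and the sample data are hypothetical; real pipelines would train and score actual models. Threads are used here for brevity, and CPU-heavy training would more likely use separate processes.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean, median

# Hypothetical stand-ins for full pipelines: each takes the same data and
# returns a named result so the outputs can be compared afterwards.
def mean_pipeline(values):
    return {"name": "mean", "estimate": mean(values)}

def median_pipeline(values):
    return {"name": "median", "estimate": median(values)}

def run_in_parallel(pipelines, values):
    """Run every pipeline on the same input and collect results in input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda pipeline: pipeline(values), pipelines))

results = run_in_parallel(
    [mean_pipeline, median_pipeline],
    [1.0, 2.0, 2.0, 3.0, 12.0],
)
for result in results:
    print(result["name"], result["estimate"])
```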

A greater return on investment, quicker delivery, and more accurate models are all benefits of improved end-to-end machine learning procedures. Less human error results from replacing manual procedures, and delivery times are shortened. A good machine learning pipeline must also have the ability to track different iterations of a model, which requires a lot of resources to execute manually. Additionally, a machine learning pipeline offers a single point of reference for the entire procedure. This is significant because different experts frequently lead several difficult phases in machine learning training and deployment.

What is a pipeline model?

A “machine learning pipeline” is the process of developing, deploying, and monitoring a machine learning model. It maps the entire development, training, deployment, and monitoring process, and it is frequently used to automate that process. In the overall machine learning pipeline architecture, each stage of the process is represented by a separate module, and each module can then be automated or optimized. The orchestration of these many components is a crucial factor to take into account while developing the machine learning pipeline.

Machine learning pipelines are iteratively developed and improved upon, making them cyclical in essence. The workflow is divided into multiple modular stages that can each be upgraded and optimized independently. The machine learning pipeline then unites these separate steps to create a polished, more effective procedure. It can be viewed as a development guide for machine learning models. The machine learning pipeline can be enhanced, scrutinized, and automated after it has been created and developed. An effective method for increasing process efficiency is an automated machine learning pipeline. It is end-to-end, starting with the model’s initial development and training and ending with its final deployment.

The automation of the dataflow into a model can also be thought of as a machine learning pipeline, which is closer to how the term “data pipeline” is commonly used in organizations. This guide, however, focuses on the preceding definition: the modular elements that make up the entire machine learning model lifecycle. It includes all phases of model creation, deployment, and continuous optimization. A machine learning pipeline architecture will also consider static elements like data storage options and the environment surrounding the larger system. Machine learning pipelines are beneficial because they enable top-down understanding and organization of the machine learning process.

Data scientists train the model, and data engineers deploy it within the organization’s systems; these are only two of the numerous teams involved in the development and deployment of a machine learning model. Effective collaboration across the various parts of the process is ensured by a well-designed machine learning pipeline architecture. It is related to the idea of machine learning operations (MLOps), which manages the full machine learning lifecycle by incorporating best practices from the more mature field of DevOps.

The best practice approach to the various components of a machine learning pipeline is what is known as MLOps. The MLOps life cycle includes model deployment, model training, and ongoing model optimization. However, the machine learning pipeline is a standalone product that serves as a structured blueprint for creating machine learning models that can later be automated or repeated.

Why is a pipeline used in ML?

A machine learning pipeline serves as a roadmap for the steps involved in developing a machine learning model, from conception to implementation and beyond. The machine learning process is a complicated one that involves numerous teams with a range of expertise. It takes a lot of time to move a machine learning model from development to deployment manually. By outlining the machine learning pipeline, the strategy can be improved and comprehended from the top down. Elements can be optimized and automated once they are laid out in a pipeline to increase overall process efficiency. This allows the entire machine learning pipeline to be automated, freeing up human resources to concentrate on other factors.

The machine learning pipeline serves as a common language of communication between each team because the machine learning lifecycle spans numerous teams and areas.

To enable expansion and reuse in new pipelines, each stage of a machine learning pipeline needs to be precisely specified. This characteristic of reusability allows for the efficient use of time and resources with new machine learning models by repurposing old machine learning processes. The machine learning pipeline architecture can be optimized to make each individual component as effective as feasible.

For instance, at the beginning of the machine learning lifecycle, a stage of the pipeline often involves the collection and cleaning of data. The movement, flow, and cleaning of the data are all taken into account throughout the stage. Once it has been precisely defined, a process that may have initially been manual can be improved upon and automated. For instance, a certain section of the machine learning pipeline could include triggers that would automatically recognize outliers in data.
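
An automatic outlier trigger of the kind mentioned above could look roughly like the following sketch. It uses the interquartile-range rule, which is one common choice and not necessarily what any given pipeline uses; the function name, the multiplier `k`, and the sample readings are assumptions made for illustration.

```python
from statistics import quantiles

def find_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

readings = [10, 12, 11, 13, 12, 11, 95]
flagged = find_outliers(readings)
if flagged:
    # A real pipeline might raise an alert or quarantine the batch here.
    print("outliers detected:", flagged)
```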

It is possible to add, update, change, or improve specific steps.

How do you build a pipeline for machine learning?

There are several steps in a machine learning pipeline architecture. Each stage receives the processed data from the stage before it; that is, the output of one processing unit is supplied as the input to the next. Pre-processing, Learning, Evaluation, and Prediction are its four primary phases.
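
The idea of chaining stages so that each one consumes the previous stage’s output can be sketched in a few lines. Everything below is a toy under stated assumptions: the stage functions, the dictionary used to carry state, and the threshold "model" are invented for illustration and do not represent any particular framework.

```python
from functools import reduce

def preprocess(state):
    """Drop rows with a missing feature value."""
    rows = [(x, y) for x, y in state["raw"] if x is not None]
    return {**state, "rows": rows}

def learn(state):
    """Fit a toy classifier: a threshold halfway between the two class means."""
    pos = [x for x, y in state["rows"] if y == 1]
    neg = [x for x, y in state["rows"] if y == 0]
    threshold = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return {**state, "threshold": threshold}

def evaluate(state):
    """Score the classifier. For brevity this reuses the training rows;
    a real pipeline would hold out a separate test set."""
    hits = sum(int(x > state["threshold"]) == y for x, y in state["rows"])
    return {**state, "accuracy": hits / len(state["rows"])}

def predict(state):
    """Label new, unseen inputs with the fitted threshold."""
    labels = [int(x > state["threshold"]) for x in state["new"]]
    return {**state, "predictions": labels}

def run_pipeline(stages, state):
    """Pass the output of each stage to the next one, in order."""
    return reduce(lambda acc, stage: stage(acc), stages, state)

result = run_pipeline(
    [preprocess, learn, evaluate, predict],
    {"raw": [(1.0, 0), (2.0, 0), (None, 1), (8.0, 1), (9.0, 1)], "new": [1.5, 7.0]},
)
print(result["accuracy"], result["predictions"])
```

Because every stage has the same shape (state in, state out), a stage can be swapped or reordered without touching the others.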

Pre-processing

Data preprocessing is a data mining technique that converts raw data into a format that can be interpreted. Real-world data is typically incomplete, inconsistent, and lacking in certain behaviors or trends, and it is likely to contain errors. Procedures such as feature extraction and scaling, feature selection, dimensionality reduction, and sampling yield data that is suitable for a machine learning algorithm. The product of data pre-processing is the final dataset used to train and test the model.
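
Two of the simplest pre-processing steps, filling in missing values and scaling a feature, might be sketched as follows. Mean imputation and min-max scaling are chosen here only as familiar examples; the function names and the sample column are hypothetical.

```python
from statistics import mean

def impute_mean(column):
    """Replace missing entries (None) with the mean of the known values."""
    known = [v for v in column if v is not None]
    fill = mean(known)
    return [fill if v is None else v for v in column]

def min_max_scale(column):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]

ages = [20, None, 40, 60]
clean_ages = min_max_scale(impute_mean(ages))
print(clean_ages)
```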

Learning

A learning algorithm processes the data to uncover patterns that can be applied to new situations. The main objective is to use the resulting system for a specific input-output transformation task. To do so, select the top-performing model from a group of models created using various hyperparameter settings, metrics, and cross-validation strategies.
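
Selecting the top-performing model across hyperparameter settings can be illustrated with a small cross-validation loop. The sketch assumes a one-dimensional k-nearest-neighbours classifier and an interleaved fold split, both picked purely for brevity; the data and the candidate values of `k` are made up.

```python
def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    neighbours = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return int(votes * 2 > k)

def cross_val_accuracy(data, k, folds):
    """Average accuracy over interleaved folds (every folds-th row is held out)."""
    scores = []
    for i in range(folds):
        test = data[i::folds]
        train = [row for j, row in enumerate(data) if j % folds != i]
        correct = sum(knn_predict(train, x, k) == y for x, y in test)
        scores.append(correct / len(test))
    return sum(scores) / folds

def select_best_k(data, candidates, folds=3):
    """Return the candidate k with the highest cross-validated accuracy."""
    return max(candidates, key=lambda k: cross_val_accuracy(data, k, folds))

data = [(1, 0), (2, 0), (3, 0), (10, 1), (11, 1), (12, 1)]
best_k = select_best_k(data, [1, 3, 5])
print("best k:", best_k)
```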

Evaluation

To assess the effectiveness of the machine learning model, fit it to the training data and predict the labels of the test set. Then count the number of incorrect predictions on the test dataset to determine the model’s prediction accuracy.
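
Counting incorrect predictions and deriving accuracy from that count is simple enough to show directly. The function name and the label lists below are illustrative only.

```python
def evaluate_predictions(y_true, y_pred):
    """Count wrong predictions on the test set and derive accuracy from the count."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have the same length")
    total = len(y_true)
    errors = sum(t != p for t, p in zip(y_true, y_pred))
    return {"errors": errors, "accuracy": (total - errors) / total}

report = evaluate_predictions([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
print(report)
```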

When it comes to evaluating ML models, AWS’s guidance is instructive:

“You should always evaluate a model to determine if it will do a good job of predicting the target on new and future data. Because future instances have unknown target values, you need to check the accuracy metric of the ML model on data for which you already know the target answer, and use this assessment as a proxy for predictive accuracy on future data.”

AWS

Prediction

Finally, the model is used to predict outcomes. Its performance is judged on the test dataset, which was not used in any training or cross-validation step.

Understanding a machine learning pipeline architecture

Before beginning the build, it is helpful to comprehend the typical machine learning pipeline architecture design. Overall, the actions necessary to train, deploy, and constantly improve the model will make up the machine learning pipeline components. Every single section is a module that is outlined and thoroughly investigated. The architecture of the machine learning pipeline also comprises static components, such as data storage or version control archives.

Each machine learning pipeline will look different depending on the kind of machine learning model that is applied and the final purpose of the model. For instance, an unsupervised machine learning model used to cluster consumer data will have a different pipeline than a regression model used in finance or a predictive model, especially because system architectures and structures vary across organizations.

However, a machine learning pipeline architecture will typically move between correspondingly distinct phases, as the machine learning process does. This includes the initial intake and cleaning of the data, the preprocessing and training of the model, and the final model tuning and deployment. As part of the post-deployment process, it will also incorporate a cyclical approach to machine learning optimization, closely observing the model for problems like machine learning drift before invoking retraining.
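
A post-deployment drift check that triggers retraining might look like the sketch below. It compares the mean of recent feature values with the training baseline, measured in baseline standard deviations. This is a deliberately crude heuristic; the threshold of 2.0 and all names are assumptions, and production systems typically rely on more robust statistical tests.

```python
from statistics import mean, stdev

def drift_score(baseline, recent):
    """Shift of the recent mean from the baseline mean, in baseline standard deviations."""
    return abs(mean(recent) - mean(baseline)) / stdev(baseline)

def needs_retraining(baseline, recent, threshold=2.0):
    """Signal retraining when the recent data has moved too far from the baseline."""
    return drift_score(baseline, recent) > threshold

training_values = [10, 11, 9, 10, 12, 8]
print(needs_retraining(training_values, [10, 11, 9]))   # recent data resembles training data
print(needs_retraining(training_values, [15, 16, 14]))  # recent data has shifted upward
```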

The following are typical components of the machine learning pipeline architecture:

- Data collection and cleaning

- Data validation

- Model training

- Model evaluation and validation

- Optimization and retraining

To understand, optimize, and if at all possible, automate each phase, it must be carefully defined. There should be tests and inspections at every stage of the machine learning process, and these are typically automated as well. In addition to these numerous stages, the machine learning pipeline architecture will also include static elements like the data and feature storage, as well as various model iterations.
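
An automated check for the data validation stage could be as small as the following sketch, which tests each row against an expected type and value range. The schema format, column names, and limits are hypothetical and would differ in any real system.

```python
def validate_rows(rows, schema):
    """Return a list of problems found; an empty list means the batch passed."""
    problems = []
    for i, row in enumerate(rows):
        for column, (expected_type, lo, hi) in schema.items():
            value = row.get(column)
            if not isinstance(value, expected_type):
                problems.append(f"row {i}: {column} has type {type(value).__name__}")
            elif not lo <= value <= hi:
                problems.append(f"row {i}: {column}={value} outside [{lo}, {hi}]")
    return problems

# Hypothetical schema: column name -> (type, minimum, maximum)
schema = {"age": (int, 0, 120), "income": (float, 0.0, 1e7)}
batch = [
    {"age": 34, "income": 52000.0},
    {"age": -5, "income": 41000.0},
    {"age": 29, "income": None},
]
issues = validate_rows(batch, schema)
for issue in issues:
    print(issue)
```

A pipeline could refuse to start training whenever the returned list is not empty.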

The following are some instances of the machine learning pipeline architecture’s more static components:

- Feature storage

- Data and metadata storage and data pools

- Model version archives
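
To make the idea of a model version archive concrete, here is a minimal in-memory sketch. The class names, fields, and methods are invented for illustration; a real archive would persist model artifacts and metadata to durable storage.

```python
from dataclasses import dataclass, field

@dataclass
class ModelVersion:
    version: int
    params: dict
    metrics: dict

@dataclass
class ModelRegistry:
    versions: list = field(default_factory=list)

    def register(self, params, metrics):
        """Store a new model iteration and assign it the next version number."""
        entry = ModelVersion(len(self.versions) + 1, params, metrics)
        self.versions.append(entry)
        return entry

    def get(self, version):
        """Fetch a specific version, for example to roll back."""
        return self.versions[version - 1]

    def best(self, metric):
        """Return the version with the highest value for the given metric."""
        return max(self.versions, key=lambda v: v.metrics[metric])

registry = ModelRegistry()
registry.register({"depth": 3}, {"accuracy": 0.81})
registry.register({"depth": 5}, {"accuracy": 0.86})
print(registry.best("accuracy").version)
```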

Architecting a machine learning pipeline

Traditionally, pipelines rely on overnight batch processing: data is gathered, transferred across an enterprise message bus, and processed to produce pre-calculated results and guidance for the following day’s operations. While this is effective in some fields, it falls short in others, particularly when it comes to machine learning (ML) applications.

Machine learning pipeline diagram

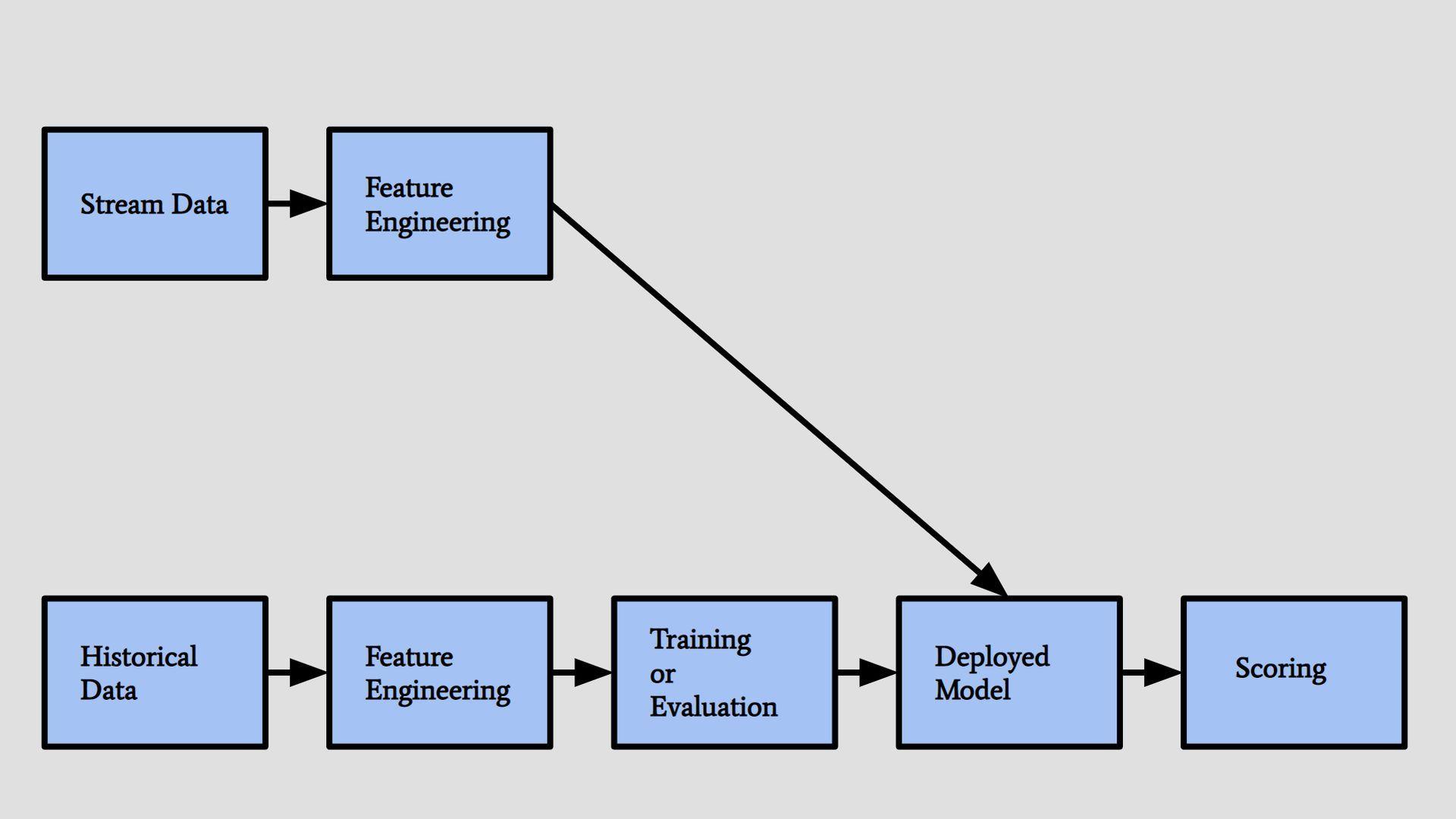

The diagram shows a machine learning pipeline used to solve a real-time business problem with time-sensitive features and predictions (such as Spotify’s recommendation engines, Google Maps’ estimation of arrival time, Twitter’s follower suggestions, Airbnb’s search engines, etc.).

It comprises two clearly defined components:

- Online model analytics: The operational part of the application, or where the model is used for real-time decision-making, is represented by the top row.

- Offline data discovery: The learning component, which is the examination of historical data to build the ML model in batch processing, is represented by the bottom row.
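
The split between the offline and online components can be sketched as a batch training function that emits a model artifact and a lightweight predictor that loads it to answer individual requests. The travel-time "model" below is a toy loosely inspired by the arrival-time example; the names, the JSON artifact format, and the data are all assumptions.

```python
import json

def train_offline(history):
    """Batch job: fit a toy model (average minutes per km) on historical trips."""
    total_km = sum(km for km, _ in history)
    total_minutes = sum(minutes for _, minutes in history)
    # Serialize the fitted parameters so the online side can load them later.
    return json.dumps({"minutes_per_km": total_minutes / total_km})

class OnlinePredictor:
    """Serving side: loads the artifact once, then answers requests in real time."""

    def __init__(self, artifact):
        self.model = json.loads(artifact)

    def predict(self, km):
        return km * self.model["minutes_per_km"]

artifact = train_offline([(2, 6), (5, 15), (10, 30)])
predictor = OnlinePredictor(artifact)
print(predictor.predict(4))
```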

How do you automate a machine learning pipeline architecture?

Every machine learning pipeline architecture will vary to some extent depending on the use case of the model and the organization itself. The same factors must be taken into account when creating any machine learning pipeline, though, as the pipeline often follows a typical machine learning lifecycle. The first step in the process is to think about the many phases of machine learning and separate each phase into distinct modules. A modular approach makes it simpler to focus on the individual components of the machine learning pipeline and enables the gradual improvement of each component.

The more static components, such as data and feature storage, should then be mapped onto the machine learning pipeline architecture. The machine learning pipeline’s flow, or how the process will be managed, must then be established. Setting the order of modules as well as the input and output flow is part of this. The machine learning pipeline’s components should all be carefully examined, optimized, and, whenever possible, automated.

The following four actions should be taken while creating machine learning pipeline architectures:

- Create distinct modules for each distinct stage of the machine learning lifecycle.

- Map the machine learning pipeline architecture’s more static components, such as the metadata store.

- Organize the machine learning pipeline’s orchestration.

- Optimize and automate each step of the machine learning pipeline. Integrate techniques for testing and evaluation to validate and keep an eye on each module.

Create distinct modules for each distinct stage

Working through each stage of the machine learning lifecycle and defining each stage as a separate module is the first step. Data collection and processing come first, followed by model training, deployment, and optimization. It is important to define each step precisely so that modules can be improved one at a time. The scope of each stage should be kept to a minimum so that changes can be made with clarity.

Map the machine learning pipeline architecture’s components

The more static components that each step interacts with should also be included in the overall machine learning pipeline architecture map. The data storage pools or a version control archive of a company could be examples of these static components. To better understand how the machine learning model will fit inside the larger system structure, the system architecture of the organization as a whole can also be taken into account.

Organize the machine learning pipeline’s orchestration

The machine learning pipeline architecture’s various processes should then be staged to see how they interact. This entails determining the order in which the modules should be used as well as the data flow and input and output orientation. The lifecycle can be managed and automated with the aid of machine learning pipeline orchestration technologies and products.
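
Determining module order and wiring outputs to inputs can be sketched with a dependency graph and a topological sort from the Python standard library. The step names and step bodies are hypothetical placeholders; dedicated orchestration tools add scheduling, retries, and monitoring on top of this basic idea.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps whose output it needs.
dependencies = {
    "validate": {"collect"},
    "train": {"validate"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}

# Hypothetical step bodies; each receives a dict of its upstream outputs.
steps = {
    "collect": lambda inputs: [3, 1, None, 2],
    "validate": lambda inputs: [v for v in inputs["collect"] if v is not None],
    "train": lambda inputs: sum(inputs["validate"]) / len(inputs["validate"]),
    "evaluate": lambda inputs: {"model": inputs["train"], "ok": inputs["train"] > 0},
    "deploy": lambda inputs: "deployed" if inputs["evaluate"]["ok"] else "rejected",
}

def run(steps, dependencies):
    """Execute steps in dependency order, passing each one its upstream results."""
    results = {}
    for name in TopologicalSorter(dependencies).static_order():
        inputs = {dep: results[dep] for dep in dependencies.get(name, ())}
        results[name] = steps[name](inputs)
    return results

results = run(steps, dependencies)
print(results["deploy"])
```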

Automate and optimize

The overall objective of this strategy is to automate and optimize the machine learning workflow. The process can be mechanized, and its components can be optimized by breaking it down into simple-to-understand modules. Using the machine learning architecture described above, the actual machine learning pipeline is typically automated and cycles through iterations. Models can be prompted to retrain automatically since testing and validation are automated as part of the process.

What are the benefits of a machine learning pipeline architecture?

The benefits of a machine learning pipeline architecture include:

- Creating a process map that gives a comprehensive view of the entire series of phases in a complex process that incorporates information from various specialties.

- Concentrating on a single stage at a time, allowing for the optimization or automation of that stage.

- Providing the first step toward turning a manual machine learning development process into an automated sequence.

- Providing a template for more machine learning models, with each step’s ability to be improved upon and altered in accordance with the use case.

- Enabling the use of orchestration tools that increase effectiveness and automate processes.

What are the steps in a basic machine learning pipeline architecture?

As we explained above, a basic machine learning architecture looks like this:

- Data preprocessing: This step comprises gathering unprocessed, erratic data that has been chosen by an expert panel. The pipeline transforms the raw data into a format that can be understood. Techniques for processing data include feature selection, dimension reduction, sampling, and feature extraction. The result of data preprocessing is the final sample that is utilized for training and testing the model.

- Model training: In a machine learning pipeline architecture, it is essential to choose the right machine learning algorithm for model training. The algorithm is the mathematical method that describes how a model will find patterns in data.

- Model evaluation: To create predictions and determine which model will perform the best in the following stage, sample models are trained and evaluated using historical data.

- Model deployment: Deploying the machine learning model to production is the last stage. In the end, the end user can obtain forecasts based on real-time data.

Challenges: Updating a machine learning pipeline architecture

While retraining can be automated, coming up with new models and improving existing ones is more difficult. In conventional software development, version control systems handle updates. Development and production branches are kept separate, which makes it possible to roll software back to an earlier, more reliable version if something goes wrong.

Advanced CI/CD pipelines and careful, complete version control are also necessary for updating machine learning models. Upgrading machine learning systems, however, is more complex. If a data scientist creates a new version of a model, it almost always includes a variety of new features and additional parameters.

Changes must be made to the feature store, the operation of data preprocessing, and other aspects of the model for it to operate correctly. In essence, altering a relatively small portion of the code that controls the ML model causes noticeable changes in the other systems that underpin the machine learning process.

Additionally, a new model cannot be released right away. If the model supports some customer-facing features, it may also need to go through A/B testing. It must be compared to the baseline during these tests, and model metrics and KPIs might even be re-evaluated. Finally, the entire retraining pipeline needs to be set up if the model succeeds in going into production.
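
Comparing a candidate model against the baseline in an A/B test can be sketched with a two-proportion z-test. This is one standard way to compare success rates, not necessarily the method any given team uses; the function names, the one-sided 5% significance level, and the sample counts are assumptions.

```python
from math import sqrt

def z_score(base_success, base_total, cand_success, cand_total):
    """Two-proportion z statistic for the candidate rate minus the baseline rate."""
    p_base = base_success / base_total
    p_cand = cand_success / cand_total
    pooled = (base_success + cand_success) / (base_total + cand_total)
    std_err = sqrt(pooled * (1 - pooled) * (1 / base_total + 1 / cand_total))
    return (p_cand - p_base) / std_err

def should_promote(base_success, base_total, cand_success, cand_total, critical=1.645):
    """Promote only if the candidate beats the baseline by a significant margin.
    1.645 is the one-sided 5% critical value of the standard normal distribution."""
    return z_score(base_success, base_total, cand_success, cand_total) > critical

print(should_promote(100, 1000, 130, 1000))  # clear improvement over the baseline
print(should_promote(100, 1000, 105, 1000))  # difference too small to distinguish from noise
```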

Machine learning pipeline architecture creation tools

Typically, a machine learning pipeline is custom-built. However, certain platforms and technologies can lay the groundwork. To get the idea, let’s take a brief look at a few of them.

- Google ML Kit: Integration with the Firebase platform and a close connection with the Google AI platform allow models to be deployed in mobile applications through an API.

- Amazon SageMaker: You may complete the entire cycle of model training on a managed MLaaS platform. ML model preparation, training, deployment, and monitoring are all supported by a range of tools in SageMaker. One of the important aspects is that you may use Amazon Augmented AI to automate the process of providing model prediction feedback.

- TensorFlow: Google originally created it as a machine learning framework. Although it has since expanded into a complete open-source ML platform, you can still use its core library on its own in your pipeline. TensorFlow’s strong integration possibilities via Keras APIs are a clear benefit.

Key takeaways

- Data scientists can be relieved of the maintenance of current models thanks to automated machine learning pipelines.

- Automated pipelines can reduce bugs.

- A data science team’s experience can be improved by standardized machine learning workflows.

- Data scientists can readily join teams or switch teams because of the standardized configurations, which allow them to work in the same development environments.

Using an automated machine learning pipeline architecture is key for a data science team because:

- It provides more time for unique model development.

- It makes updating current models simpler.

- It reduces the time needed to reproduce models.