The machine learning process flow determines which steps are included in a machine learning project. Data gathering, pre-processing, constructing datasets, model training and improvement, evaluation, and deployment to production are examples of typical steps. Some steps in the machine learning process flow, such as the model and feature selection phases, can be automated too.

Although these steps are widely accepted as the norm, they are not set in stone. When establishing a machine learning process flow, first define the project and then choose an approach that suits it. Try not to force the model into a predetermined process flow. Instead, create a flexible process flow that lets you start small and grow into a production-ready solution.

What is machine learning?

Machine learning is the process of building systems that learn and improve on their own through carefully designed algorithms.

The ultimate goal of machine learning is to design algorithms that let a system gather data automatically and use that data to learn more. Such systems are expected to find patterns in the collected data and use those patterns to make important decisions autonomously.

Machine learning is often described as giving systems a brain: a human-like intellect and the ability to think and behave like humans. Machine learning models already in real-world use can perform the following tasks:

- Separating legitimate emails from spam (for example, Gmail)

- Fixing spelling and grammar errors (autocorrect tools)

- Image and object recognition

- Detecting fake news

- Understanding spoken or written language

- Chatbots on websites that converse with people in natural language

- Autonomous vehicles

How does machine learning process data?

Machine learning is a branch of artificial intelligence (AI) that teaches computers to learn from experience. Machine learning algorithms use computational methods to “learn” information directly from data without relying on a predetermined equation as a model. The algorithms adaptively improve their performance as more samples become available for learning. Deep learning is a specialized form of machine learning.

Supervised, unsupervised, and reinforcement learning are the three types of machine learning.

- Supervised learning: Historical input and output data are fed into the learning algorithm. After each input/output pair is processed, the algorithm adjusts the model so that its outputs come as close as possible to the intended result.

- Unsupervised learning: Unlike supervised learning, unsupervised learning does not use labeled training sets, so humans do not need to guide the machine with examples. Instead, the machine scans the data for less obvious patterns. This type of machine learning is particularly useful when you need to discover patterns and use data to make decisions.

- Reinforcement learning: Reinforcement learning is the machine learning method that most closely resembles human learning. The algorithm, or agent, learns by interacting with its environment and receiving positive or negative rewards. Common algorithms include Q-learning, temporal-difference learning, and deep Q-networks.
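To make the reward-driven learning of reinforcement learning more concrete, here is a minimal sketch of a single tabular Q-learning update. The state and action names, the learning rate `alpha`, and the discount factor `gamma` are illustrative assumptions, not values from any particular project.

```python
def q_update(q, state, action, reward, next_state, actions, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q-value.

    q maps (state, action) pairs to estimated values; unseen pairs count as 0.0.
    """
    # Value of the best action available from the next state.
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    # Move the old estimate toward reward + discounted future value.
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    return q[(state, action)]


# Hypothetical two-action environment: the agent is rewarded for moving right.
q_table = {}
actions = ["left", "right"]
first = q_update(q_table, "s0", "right", 1.0, "s1", actions)
second = q_update(q_table, "s0", "right", 1.0, "s1", actions)
```

Each positive reward pulls the estimate for ("s0", "right") upward, so the agent gradually comes to prefer that action.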

If you want further information about supervised learning, unsupervised learning, and reinforcement learning, we recommend you read our article, “Active learning overcomes the ML training challenges.”

What are the key tasks of machine learning?

Regression: Most regression tasks involve estimating numerical values (continuous variables). Examples include estimating house prices, the cost of goods, or the value of stocks.
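As a rough illustration of a regression task, the sketch below fits a straight line with ordinary least squares and uses it to estimate a price. The floor areas and prices are made-up toy values, and a single-feature linear model is only one of many possible regression techniques.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data: floor area in square meters and price in thousands.
areas = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

slope, intercept = fit_line(areas, prices)
predicted_price = slope * 100 + intercept  # estimate for a 100 m^2 home
```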

Multivariate querying: Multivariate querying is about finding similar objects.

Clustering: The main goal of clustering is to find natural groups (clusters) in the data and a label for each group. Customer segmentation and identifying product features for the product roadmap are two typical examples.
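A customer segmentation like the one mentioned above can be sketched with a tiny one-dimensional k-means. The monthly spend values and the starting centers are assumptions chosen for illustration; k-means is used here as one common clustering technique, not as the only option.

```python
def kmeans_1d(values, centers, iterations=10):
    """Cluster numbers around the given starting centers with k-means."""
    groups = [[] for _ in centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        # Assignment step: put each value in the group of its nearest center.
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Update step: move each center to the mean of its group.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


# Hypothetical monthly spend per customer.
spend = [10, 12, 11, 95, 100, 105]
centers, segments = kmeans_1d(spend, centers=[0.0, 50.0])
```

The two resulting groups could then be named by a person, for example "occasional" and "frequent" buyers.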

Classification: Classification problems involve predicting discrete variables, that is, assigning an input to one of a fixed set of classes. Predicting whether an email is spam or not is one of the most popular examples. Typical use cases can also be found in healthcare, such as determining whether or not a person has a specific condition.

Probability density and mass function estimation: These problems are about finding the likelihood or frequency of items. In probability and statistics, density estimation is the construction of an estimate of an unobservable underlying probability density function from observed data.

Synthesis & sampling: Synthesis and sampling are crucial in deep learning and machine learning. They are utilized to create fresh data from old data or to choose a representative subset of data for additional research. In order to produce a more diverse and representative dataset, synthesis and sampling are frequently employed in tandem.

Anomaly detection: Anomaly detection means finding unusual patterns in data that do not match expected behavior. It is used in many applications, including diagnosing equipment faults from sensor data, identifying fraud in financial data, and spotting hostile activity in network traffic.
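One simple way to flag such unusual values is a z-score check, sketched below. The sensor readings and the threshold of 2.0 standard deviations are assumptions for illustration; real systems often need more robust methods.

```python
from statistics import mean, pstdev


def find_anomalies(readings, threshold=2.0):
    """Return (index, value) pairs lying more than `threshold`
    population standard deviations away from the mean."""
    mu = mean(readings)
    sigma = pstdev(readings)
    if sigma == 0:
        return []  # all readings identical, nothing stands out
    return [(i, x) for i, x in enumerate(readings) if abs(x - mu) / sigma > threshold]


# Hypothetical temperature readings with one faulty spike.
sensor = [20.1, 19.8, 20.3, 20.0, 19.9, 35.0, 20.2, 20.1]
anomalies = find_anomalies(sensor)
```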

Transcription: Transcription is the process of turning speech or text contained in audio, video, or image recordings into written text. It is frequently used in fields such as media, education, and medicine.

Machine translation: Machine translation is the process of translating text from one language to another using machine learning algorithms. It covers many tasks, including document translation, speech translation, and web page translation.

What is a machine learning process flow?

Machine learning process flows define the steps carried out during a particular machine learning implementation.

Understanding a machine learning process flow

This section gives a high-level overview of a typical machine learning process flow. A machine learning project’s general objective is to create a statistical model utilizing gathered data and machine learning techniques. As a result, the three fundamental artifacts of every ML-based product are data, ML models, and code. According to these artifacts, there are three primary stages in the standard machine learning process flow:

- Data Engineering: Data acquisition & data preparation.

- ML Model Engineering: ML model training & serving.

- Code Engineering: Integrating ML model into the final product.

Data engineering

Acquiring and preparing the data for analysis is the first stage in any machine learning process flow. Data is frequently combined from many sources and comes in a variety of formats. Data acquisition is followed by data preparation.

“Data preparation is an iterative and agile process for exploring, combining, cleaning and transforming raw data into curated datasets for data integration, data science, data discovery and analytics/business intelligence (BI) use cases.”

Notably, although it is only an intermediate phase that gets data ready for analysis, preparation is reported to be the most time- and resource-intensive phase. Data preparation is crucial in the data science pipeline because it keeps errors from propagating into the analysis phase, where they could lead to incorrect conclusions.

The Data Engineering pipeline consists of a series of operations on the available data that produce the training and testing datasets for the machine learning algorithms:

- Data gathering: Collecting data using different frameworks and formats, such as Spark, HDFS, and CSV. This stage can also involve generating synthetic data or enriching existing data.

- Validation: Includes data profiling to learn more about the structure and content of the data. This stage produces a set of metadata, such as the maximum, minimum, and average values. Data validation operations are user-defined error detection functions that check the dataset for errors.

- Data cleaning: Reformatting certain attributes and correcting errors in the data, for example by imputing missing values.

- Data labeling: Assigning each data point to a specific category.

- Data splitting: Dividing the data into training, validation, and test datasets for use in the core machine learning stages that produce the ML model.
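The validation and cleaning steps above can be sketched in a few lines. The `ages` column, the valid range of 0 to 120, and the choice of mean imputation are assumptions made for this example only.

```python
from statistics import mean


def profile(column):
    """Data profiling: summary metadata for one numeric column."""
    present = [v for v in column if v is not None]
    return {
        "min": min(present),
        "max": max(present),
        "mean": mean(present),
        "missing": len(column) - len(present),
    }


def validate(column, low, high):
    """User-defined check: indices of present values outside [low, high]."""
    return [i for i, v in enumerate(column) if v is not None and not (low <= v <= high)]


def impute_mean(column):
    """Data cleaning: replace missing values with the column mean."""
    fill = mean(v for v in column if v is not None)
    return [fill if v is None else v for v in column]


# Hypothetical raw column with one missing value and one impossible value.
ages = [34, None, 29, 41, 250, 38]
stats = profile(ages)
bad_rows = validate(ages, low=0, high=120)
cleaned = impute_mean([v for i, v in enumerate(ages) if i not in bad_rows])
```

Profiling exposes the suspicious maximum, validation pinpoints the offending row, and cleaning fills the remaining gap.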

Model engineering

The step of writing and running machine learning algorithms to produce an ML model is the central component of a machine learning process flow. Several processes are part of the Model Engineering pipeline that results in the final model:

- Model training: Applying a machine learning algorithm to the training data to train an ML model. This step also includes feature engineering and hyperparameter tuning.

- Model evaluation: Before the ML model is delivered to the end user in production, the trained model must be validated to ensure it meets the originally stated objectives.

- Model testing: The final “Model Acceptance Test” is carried out using the hold-back test dataset.

- Model packaging: The method of converting the finished machine learning model into a specific format (such as PMML, PFA, or ONNX) so the business application can use it.

Model deployment

Once a machine learning model has been trained, it must be deployed as a component of a business application, such as a desktop or mobile application. ML models need various data points (feature vectors) to generate predictions. Integrating the previously built ML model into the existing application is the last step in the ML workflow. The following actions are performed during this stage:

- Model serving: Making the ML model artifact available in a production environment.

- Model performance monitoring: Observing the ML model’s performance on live, previously unseen data as it makes predictions or recommendations. Of particular interest are signals specific to machine learning, such as deviation of predictions from historical model performance. These signals can serve as triggers for retraining the model.

- Model performance logging: Every inference request produces a log record.
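The monitoring and logging ideas above can be sketched as a small class. `AccuracyMonitor`, its window size, and the allowed accuracy drop of 0.05 are hypothetical choices for illustration, and the sketch assumes that the true label eventually becomes available for each prediction.

```python
from collections import deque


class AccuracyMonitor:
    """Logs inference results and flags when live accuracy drifts
    too far below the accuracy measured before deployment."""

    def __init__(self, baseline_accuracy, window=100, max_drop=0.05):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        self.outcomes = deque(maxlen=window)  # most recent hit/miss results
        self.log = []  # one record per inference request

    def record(self, prediction, actual):
        self.log.append({"prediction": prediction, "actual": actual})
        self.outcomes.append(prediction == actual)

    def live_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        accuracy = self.live_accuracy()
        return accuracy is not None and self.baseline - accuracy > self.max_drop


# Hypothetical traffic: 7 correct and 3 wrong predictions.
monitor = AccuracyMonitor(baseline_accuracy=0.9, window=10)
for predicted, actual in [("spam", "spam")] * 7 + [("spam", "ham")] * 3:
    monitor.record(predicted, actual)
retrain = monitor.needs_retraining()
```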

How many steps are there in a machine learning process flow?

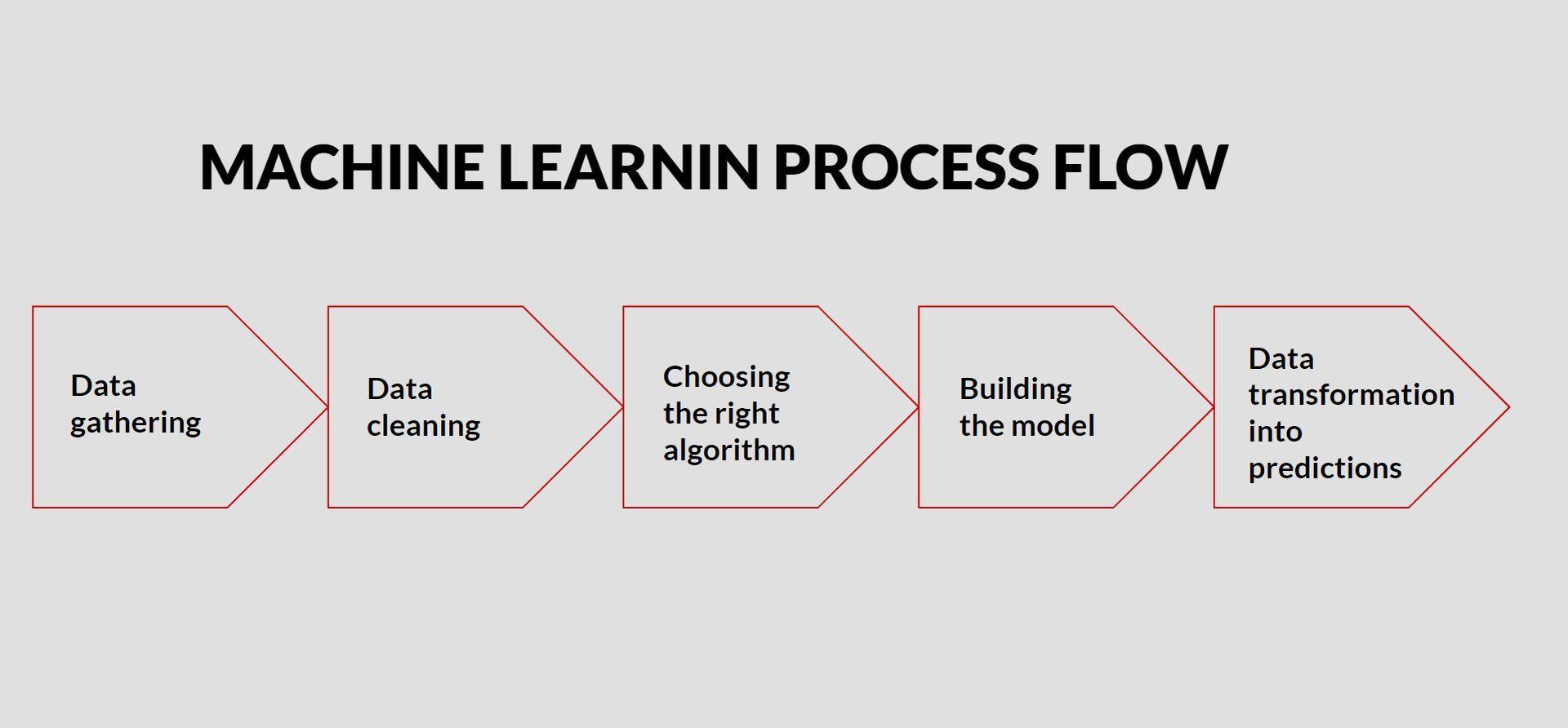

The machine learning process flow varies depending on the project. However, there are usually five fundamental steps.

Gathering machine learning data

Machines learn from the data you give them, so it is crucial to gather trustworthy data that lets your machine learning model identify the right patterns. The accuracy of your model depends on the quality of the data you provide. Inaccurate or out-of-date data will produce irrelevant results or predictions.

Data collection is one of the most crucial phases of the machine learning process. The quality of the data you collect determines your project’s potential usefulness and accuracy.

To gather data, you must choose your sources and combine the information from each source into a single dataset. This could entail obtaining open-source datasets, streaming data from Internet of Things (IoT) sensors, or building a data lake out of various files, logs, and media.

Because the data directly affects your model’s results, be sure to obtain it from a reputable source. Good data is relevant, has few duplicates and missing values, and accurately represents all of the classes and subclasses involved.

Pre-processing data

After gathering your data, you must pre-process it. This is one of the crucial parts of a machine learning process flow. Cleaning, confirming, and converting data into a useful dataset are all parts of pre-processing. This may be a rather simple operation if you gather data from a single source.

However, if you combine data from many sources, you must ensure that data formats are compatible, that the data is equally trustworthy, and that any potential duplicates are eliminated. You can accomplish this by:

- Combining and randomizing all of the data you have. This helps ensure that the data is evenly distributed and that its ordering does not affect the learning process.

- Cleaning the data by removing unnecessary information, missing values, and duplicate values, rows, and columns, and by converting data types where needed. You may also need to restructure the dataset’s rows, columns, and indexes.

- Visualizing the data to understand its structure and the relationships between the variables and classes it contains.

- Dividing the clean data into two sets, one for training and the other for testing. The training set is the set your model learns from. After training, the testing set is used to check your model’s accuracy.
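The combining, cleaning, and randomizing steps above might look like the following sketch. The two sources (`crm_export` and `billing_db`), their column layout, and the fixed random seed are hypothetical and only serve to show mismatched formats being reconciled.

```python
import random


def preprocess(sources, seed=0):
    """Combine sources, unify types, drop incomplete and duplicate rows, shuffle."""
    combined = [row for source in sources for row in source]
    converted = []
    for customer_id, amount in combined:
        if amount in (None, ""):
            continue  # drop rows with a missing amount
        # Convert both fields to one consistent type across sources.
        converted.append((int(customer_id), float(amount)))
    # dict.fromkeys removes exact duplicates while keeping first occurrences.
    unique = list(dict.fromkeys(converted))
    # Shuffle with a fixed seed so the ordering is random but reproducible.
    random.Random(seed).shuffle(unique)
    return unique


# Hypothetical sources: one stores everything as text, the other as numbers.
crm_export = [("1", "10.5"), ("2", "20.0"), ("3", "")]
billing_db = [(2, 20.0), (4, 7.25), (5, None)]
dataset = preprocess([crm_export, billing_db])
```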

Dataset building

In this stage, processed data is divided into three datasets for training, validating, and testing:

- Training set: Used to teach the algorithm how to process data. The model learns its parameters, and therefore how it classifies, from this set.

- Validation set: Used to estimate the model’s accuracy. This dataset is also used to fine-tune the model’s settings.

- Test set: Used to evaluate the model’s performance and accuracy. This set is meant to reveal any flaws or mistraining in the model.
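A three-way split like the one described above can be sketched as follows. The 70/15/15 proportions are a common but arbitrary assumption, and the rows are assumed to have been shuffled already.

```python
def split_dataset(rows, train=0.7, validation=0.15):
    """Split already-shuffled rows into training, validation, and test sets.

    Whatever is left after the training and validation shares becomes the test set.
    """
    n_train = round(len(rows) * train)
    n_val = round(len(rows) * validation)
    training_set = rows[:n_train]
    validation_set = rows[n_train:n_train + n_val]
    test_set = rows[n_train + n_val:]
    return training_set, validation_set, test_set


# Hypothetical dataset of 20 already-shuffled rows.
rows = list(range(20))
training_set, validation_set, test_set = split_dataset(rows)
```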

Training

Once your datasets are ready, you can train your model. You feed the training set to your algorithm so that it can learn the parameters and features needed for classification.

After training, the model can be refined using the validation dataset. This involves adjusting model-specific settings (hyperparameters) until an acceptable accuracy level is reached, which may entail changing or removing variables.
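The sketch below shows the idea of tuning a hyperparameter against the validation set. A one-dimensional k-nearest-neighbours classifier stands in for "the model" here purely as an assumption, with k as the hyperparameter; the data points and labels are invented.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Predict the majority label among the k training points nearest to x."""
    neighbours = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


def accuracy(train, rows, k):
    """Fraction of rows whose label is predicted correctly."""
    return sum(knn_predict(train, x, k) == y for x, y in rows) / len(rows)


def tune_k(train, validation, candidates):
    """Score every candidate k on the validation set and keep the best one."""
    scores = {k: accuracy(train, validation, k) for k in candidates}
    best = max(scores, key=scores.get)  # the first candidate wins ties
    return best, scores


# Hypothetical data; the point (4, "high") sits close to the "low" group.
train = [(1, "low"), (2, "low"), (3, "low"), (4, "high"),
         (7, "high"), (8, "high"), (9, "high")]
validation = [(3.8, "low"), (2.5, "low"), (7.5, "high"), (8.5, "high")]
best_k, scores = tune_k(train, validation, candidates=[1, 3, 5])
```

The test set is deliberately left untouched here so it can still give an unbiased estimate later.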

Evaluation

Once you have found an acceptable set of hyperparameters and optimized model accuracy, you can test your model. Testing uses your test dataset to verify that your model relies on accurate features. Based on the feedback you get, you can go back and retrain the model to improve accuracy, adjust output settings, or deploy the model as needed.
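Evaluation on the test set can be sketched like this. The labels and predictions are invented, and precision and recall are included as additional metrics that are often reported alongside accuracy, which is an assumption beyond what the text requires.

```python
def evaluate(y_true, y_pred, positive="spam"):
    """Compute accuracy, precision, and recall for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical test-set labels and the model's predictions for them.
y_true = ["spam", "spam", "ham", "ham", "spam", "ham", "ham", "ham"]
y_pred = ["spam", "ham", "ham", "ham", "spam", "spam", "ham", "ham"]
report = evaluate(y_true, y_pred)
```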

Best practices for creating an efficient machine learning process flow

When defining the process flow for your machine learning project, you can apply several best practices. Below are a few to start with.

Clarify the project

Models are typically created to replace an existing procedure. It’s crucial to comprehend the current process’ operation, objectives, participants, and success criteria. Knowing these elements helps you determine your model’s responsibilities, any implementation constraints, and the standards it must meet or surpass.

Define carefully what you want to predict, because this determines what data you need to gather and how models should be trained. Make this definition as thorough as possible and quantify the expected results. If your goals are not measurable, it will be difficult to confirm that they have all been achieved.

Analyze the data your present process uses, how it is collected, and how much there is. You should identify the precise data types and points required to make predictions based on those sources.

Find a strategy that works

Machine learning process flows are meant to make your present process more accurate, more efficient, or both. To find a strategy that accomplishes this, you must:

- Take the time to examine how other teams have carried out similar projects before implementing a strategy. You may be able to adopt their techniques or learn from their mistakes, saving time and money.

- Test the strategy, whether you have found an existing one to build on or developed your own. This essentially corresponds to the training and testing phases of model training.

Develop a full-scale solution

Designing a strategy usually ends with a proof of concept. To achieve your objective, you must turn this proof into a working product. Getting from proof of concept to a deployable solution requires the following:

- Testing: A/B testing, for example, lets you compare your model with the current process. The results can confirm or refute that your model is effective and benefits your teams and users.

- An API: Creating an API for your model implementation lets it interact with data sources and services. This accessibility is crucial if you want to offer your model as a machine learning service.

- Documentation: User-friendly documentation should cover the code, the procedures, and how to use the model. If you want to build a marketable product, users must understand how to use the model, how to get its results, and what kind of results to expect.

What is automated machine learning?

In essence, AutoML uses currently available machine learning methods to create new models. Its goal is not to fully automate the model development process. Instead, it aims to minimize the number of interventions needed for successful development on the part of humans.

Developers can start and finish projects with AutoML much more quickly. Additionally, it may facilitate self-correction in generated models, enhancing deep learning and unsupervised machine learning training processes.

How to automate a machine learning process flow?

By automating a machine learning process flow, teams can work more efficiently on some of the repetitive tasks involved in model creation. This is commonly known as AutoML, and a growing number of libraries and platforms support it.

Although it would be wonderful to be able to automate machine learning activities fully, this is not yet feasible. The following can be effectively automated:

- Feature selection: The most pertinent features are chosen by tools from predetermined features.

- Model selection: To find the model best suited to learn from your data, the same dataset is run through several models using default hyperparameters.

- Hyperparameter optimization: Tests combinations of pre-defined parameters and identifies the best combination using techniques including grid search, random search, and Bayesian methods.
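Of the techniques listed for hyperparameter optimization, grid search is the simplest to sketch. In the example below, `toy_score` is a hypothetical stand-in for "train the model with these settings and return its validation score", and the parameter names and values are illustrative assumptions.

```python
import itertools


def grid_search(score_fn, grid):
    """Try every combination of the predefined values and keep the best one."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(learning_rate, depth):
    """Hypothetical validation score that peaks at learning_rate=0.1, depth=4."""
    return -((learning_rate - 0.1) ** 2) - 0.01 * (depth - 4) ** 2


grid = {"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 4, 8]}
best_params, best_score = grid_search(toy_score, grid)
```

Random search follows the same pattern but samples combinations instead of enumerating all of them, which scales better when the grid is large.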

Best frameworks to automate a machine learning process flow

The three frameworks listed below can help you start automating your machine learning process flow:

Tsfresh

tsfresh is an open-source Python package for calculating and extracting features from time series data. The extracted features are compatible with pandas and scikit-learn, so you can use them to train models.

DataRobot

For automated data preparation, feature engineering, model selection, training, testing, and deployment, you can use the DataRobot proprietary platform. It can be employed to locate fresh data sources, implement business rules, or combine and reorganize data.

You can utilize the library of open source and paid models on the DataRobot platform as a foundation for your model implementation. A dashboard with visualizations is also included, which you can use to assess your model and comprehend forecasts.

Featuretools

Featuretools is an open-source framework for automating feature engineering. It uses an algorithm called Deep Feature Synthesis to transform structured temporal and relational datasets. The algorithm aggregates or transforms data into useful features by applying primitives (operations such as sum, mean, or max). The framework builds on a project called Data Science Machine, developed by Max Kanter and Kalyan Veeramachaneni at MIT.
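To give a feel for what aggregation primitives do, here is a hand-rolled sketch that builds per-customer features from a related table of orders. It does not use the Featuretools API; the `PRIMITIVES` table, the function name, and the order data are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical aggregation primitives, each reducing a list of values to one number.
PRIMITIVES = {"sum": sum, "mean": mean, "max": max, "count": len}


def aggregate_features(child_rows, parent_key, value_key):
    """Apply every primitive to the child values belonging to each parent."""
    grouped = defaultdict(list)
    for row in child_rows:
        grouped[row[parent_key]].append(row[value_key])
    return {
        parent: {f"{name}({value_key})": fn(values) for name, fn in PRIMITIVES.items()}
        for parent, values in grouped.items()
    }


# Hypothetical relational data: each order belongs to one customer.
orders = [
    {"customer_id": 1, "amount": 20},
    {"customer_id": 1, "amount": 40},
    {"customer_id": 2, "amount": 15},
]
features = aggregate_features(orders, "customer_id", "amount")
```

Each customer ends up with one column per primitive, which is the kind of feature table a model can then be trained on.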

Which stage of the machine learning workflow includes feature engineering?

The answer is model engineering, specifically the model training step, in which a machine learning algorithm is applied to the training data. Feature engineering and hyperparameter tuning are part of that training activity.

Conclusion

To better understand a machine learning process flow, we walked through its phases and looked at the data workflows behind a machine learning model. Keep in mind that the quality of a machine learning model depends on the data it receives and on the algorithms’ capacity to process it.

Data science has no shortage of methods for carrying out complex tasks. What the field often lacks, though, is the ability to handle uncommon business situations, and this is where machine learning techniques shine.