- The camera system in autonomous vehicles depends on image processing speed.

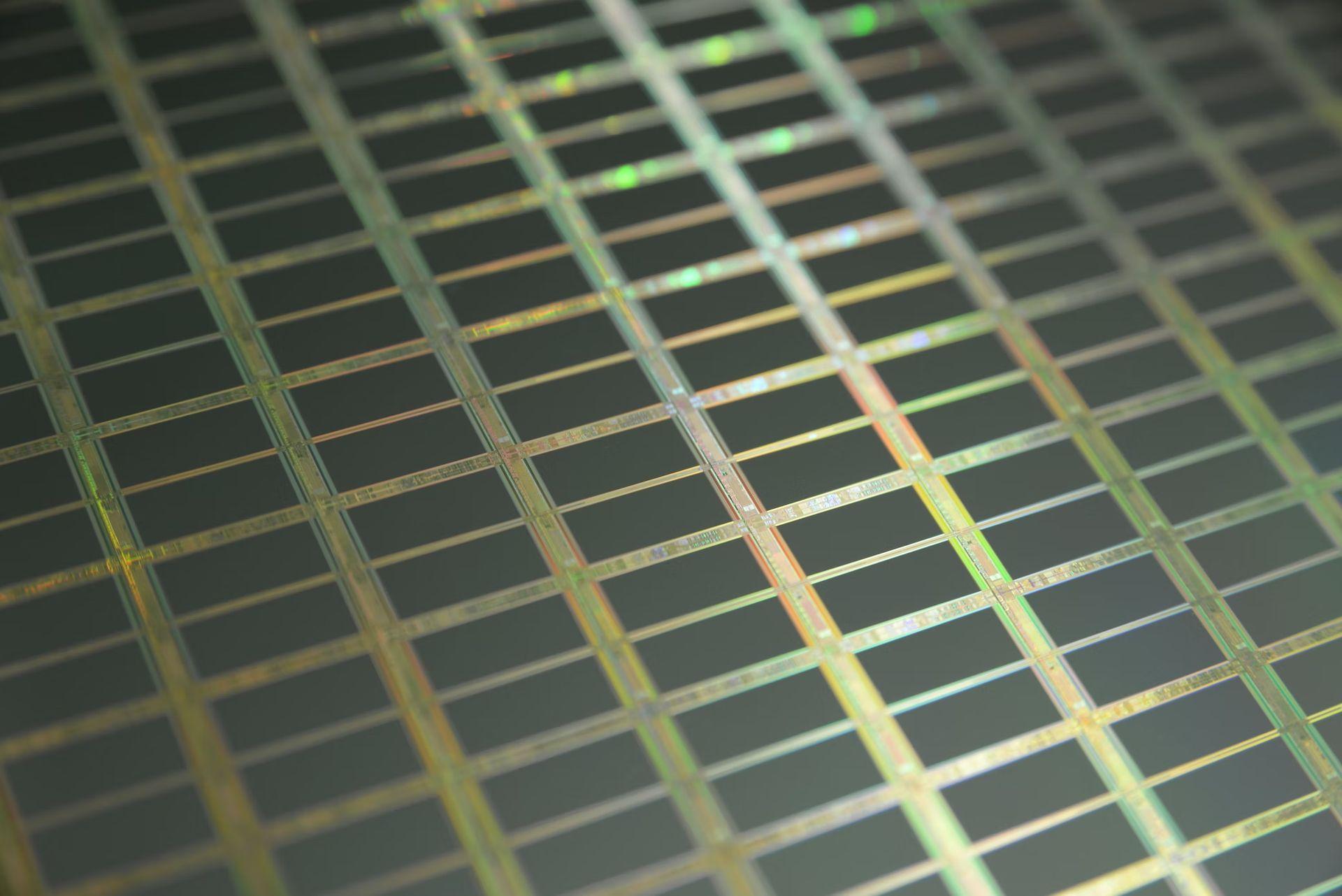

- Researchers have developed the first in-sensor processor that could be integrated into commercial silicon imaging sensor chips, known as complementary metal-oxide-semiconductor (CMOS) image sensors.

- Although commercial image sensor chips also use silicon photodiode arrays, the team’s photodiodes are electrostatically doped, allowing voltages to regulate the sensitivity of individual photodiodes, or pixels, to incoming light.

- The silicon photodiode array can be constructed with different image filters to reduce unnecessary information or noise for various purposes.

- The team’s next objective is to make photodiodes denser and incorporate them into silicon-integrated circuits.

As every driver knows, accidents can happen in an instant. Image processing speed is therefore essential for the camera system in autonomous vehicles. The time the system takes to capture an image and send the data to the CPU for processing may determine whether the vehicle avoids an obstacle or has a serious accident.

Faster image processing is made possible thanks to silicon photodiodes

Visual processing can be sped up with in-sensor image processing, in which the image sensor itself, rather than a separate processor, extracts important features from the raw data. Until recently, in-sensor processing had been demonstrated only with emerging research materials that are currently difficult to integrate into commercial systems.

Recently, researchers at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have created the first in-sensor processor that could be incorporated into commercial silicon imaging sensor chips, also referred to as complementary metal-oxide-semiconductor (CMOS) image sensors, which are used in nearly all commercial devices that need to capture visual data, including smartphones.

Donhee Ham, the Gordon McKay Professor of Electrical Engineering and Applied Physics at SEAS and the paper’s senior author, stated, “Our work can harness the mainstream semiconductor electronics industry to rapidly bring in-sensor computing to a wide variety of real-world applications.”

Ham and his team developed an array of silicon photodiodes. Commercially available image sensor chips also use silicon photodiode arrays, but the team’s photodiodes are electrostatically doped, so the sensitivity of each photodiode, or pixel, to incoming light can be controlled by voltages.

An array of voltage-tunable photodiodes can carry out analog versions of the multiplication and addition operations essential to many image processing pipelines, extracting the relevant visual information as soon as the image is captured.
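The multiply-and-add idea can be illustrated with a small Python sketch. It is a simplified model rather than the team's implementation: it assumes each pixel's photocurrent is its responsivity times the incident light, and that the currents from one patch of pixels simply add up. The function name, the numeric values, and the use of negative responsivities are all assumptions made for illustration.

```python
# Simplified, hypothetical model of one patch of voltage-tunable photodiodes.
# Assumption: photocurrent per pixel = responsivity * incident light, and the
# currents of all pixels in the patch are summed on a shared output line.

def patch_photocurrent(light, responsivity):
    """Return the summed photocurrent of one patch (arbitrary units)."""
    if len(light) != len(responsivity):
        raise ValueError("light and responsivity must have the same length")
    # Multiplication happens inside each pixel; addition happens where
    # the individual currents merge.
    return sum(r * p for r, p in zip(responsivity, light))


# Illustrative values only, not taken from the paper.
light = [1.0, 2.0, 3.0, 4.0]          # incident light per pixel
responsivity = [0.5, -0.5, 1.0, 0.0]  # stands in for voltage-set sensitivity

current = patch_photocurrent(light, responsivity)
print(current)
```

In this model, changing the responsivity list changes which weighted sum the patch computes, which is the role the control voltages play in the description above.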

According to Houk Jang, a postdoctoral scholar at SEAS and the paper’s first author, these dynamic photodiodes “can concurrently filter images as they are captured, allowing for the first stage of vision processing to be moved from the microprocessor to the sensor itself.”

The silicon photodiode array can be programmed with different image filters to remove unneeded information or noise, depending on the application. For example, an autonomous car’s imaging system may require a high-pass filter to track lane markers, while other applications may call for a blurring filter that reduces noise.
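To make the two filter types concrete, here is a minimal software sketch of a high-pass filter and a blurring filter applied to a tiny test image. It runs on an ordinary processor and only mimics the arithmetic; the kernels (a common 3×3 edge-detection kernel and a 3×3 box blur), the function name, and the test image are illustrative assumptions, not the filters used in the paper.

```python
# Software illustration of the two filter types; all names and values here
# are hypothetical and chosen only for demonstration.

def filter_image(image, kernel):
    """Slide `kernel` over `image` (valid region only) and return weighted sums."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += kernel[i][j] * image[y + i][x + j]
            row.append(acc)
        out.append(row)
    return out


# High-pass kernel: weights sum to zero, so flat regions give zero output.
HIGH_PASS = [[-1, -1, -1],
             [-1,  8, -1],
             [-1, -1, -1]]

# Box blur: every pixel in the window contributes equally.
BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]

# Dark region on the left, bright region on the right (a vertical edge).
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

edges = filter_image(image, HIGH_PASS)
blurred = filter_image(image, BOX_BLUR)
print(edges[0])
print(blurred[0])
```

The high-pass output is nonzero only near the boundary between the dark and bright regions, which is why such a filter suits edge-like features such as lane markers, while the box blur replaces the sharp step with a gradual ramp.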

Henry Hinton, a graduate student at SEAS and the paper’s co-first author, foresees “the use of this silicon-based in-sensor processor not only in machine vision applications but also in bio-inspired applications, wherein early information processing allows for the co-location of sensor and compute units, like in the brain.”

The team’s next goal is to make photodiodes denser and incorporate them into silicon-integrated circuits.

“By replacing the standard non-programmable pixels in commercial silicon image sensors with the programmable ones developed here, imaging devices can intelligently trim out unneeded data, thus making energy and bandwidth more efficient to address the demands of the next generation of sensory applications,” explained Jang.

For those who want to read further, the research is published in Nature Electronics.