LaMDA, GPT, and more… Nowadays, everyone is talking about AI models and what they are capable of. So what’s the reason for this hype? The use of AI models is expanding rapidly across all industries. A major selling point of the technology is AI’s capacity to solve difficult problems with minimal human input. Many businesses have successfully adopted AI to boost productivity, streamline operations, and improve the customer service they provide. But do you know what AI models are exactly?

Artificial intelligence (AI) is a broad term that encompasses the ability of computers and machines to perform tasks that normally require human intelligence, such as reasoning, learning, decision-making, and problem-solving. AI can be applied to various domains and industries, such as healthcare, education, finance, entertainment, and more; what it can do in each depends on the AI model behind it.

In this article, we will explain everything you need to know about AI models, such as the best ones, their types, and how to choose them. But first, let’s start with the basics and get a better sense of where we stand in the age of AI.

What is an AI model?

Artificial Intelligence (AI) models are the building blocks of modern machine learning: a learning algorithm trains a model on data, and the trained model is what enables a machine to perform complex tasks. Many of these models are loosely inspired by the cognitive functions of the human brain, allowing them to perceive, reason, learn, and make decisions based on data. Given enough data, a model can draw inferences and make predictions. To put it simply, if an AI model is trained on data about superheroes, you could ask it to predict who would win if Thor and Superman fought.

An AI model is a crucial part of artificial intelligence. To learn how to perform a given activity, such as facial recognition, email spam detection, or product recommendation, an artificial intelligence model requires a dataset. Images, text, music, and numbers are just some of the many forms of data that may be used to train an AI model.

Reminder: Training data is the data used to train an AI model, and there are three common approaches to learning from it:

- Supervised learning: The AI model learns from labeled data, which means that each data point has a known output or target value.

- Unsupervised learning: The AI model learns from unlabeled data, which means that there is no known output or target value for each data point. The AI model tries to find patterns or structures in the data without any guidance.

- Reinforcement learning: The AI model learns from its own actions and feedback from the environment. The AI model tries to maximize a reward or minimize a penalty by exploring different actions and outcomes.
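To make the unsupervised case concrete, here is a minimal k-means clustering sketch. k-means is one common unsupervised technique, chosen here as an illustration rather than named in the text: the data carries no labels, and the model groups the values on its own. The function name `kmeans_1d`, its initialization rule, and the data are hypothetical.

```python
# Illustrative sketch: function name, initialization, and data are hypothetical.
def kmeans_1d(points, k, iterations=10):
    # Start from the k smallest distinct values as the initial cluster centers.
    centers = sorted(set(points))[:k]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of the points assigned to it.
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers

# Unlabeled data: nobody tells the model which group each value belongs to.
data = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
print(kmeans_1d(data, k=2))  # → [1.5, 9.5]
```

The model discovers on its own that the values form a low group and a high group, which is the kind of structure unsupervised learning looks for.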

An AI model learns from the training data by finding patterns and relationships among the data points. For example, an AI model that recognizes faces might learn to identify features such as eyes, nose, mouth, and ears from thousands of images of human faces. The process of finding patterns and relationships from the data is called learning or training.

An AI model can be evaluated on how well it performs the task it was trained for by using another set of data that is different from the training data. This held-out set is called test data; a similar set used to tune the model during development is called validation data.

The test data is used to measure the accuracy, precision, recall, or other metrics of the AI model. For example, an AI model that recognizes faces might be tested on how many faces it can correctly identify from a new set of images.
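As a rough illustration of those metrics, the sketch below computes accuracy, precision, and recall for a binary classifier from its test-set predictions. The labels are made-up values for a hypothetical face-recognition test set, and `evaluate` is an illustrative helper rather than a library function.

```python
# Illustrative helper; the labels below are hypothetical test-set results.
def evaluate(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly flagged
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # missed cases
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# 1 = "this face is the enrolled user", 0 = "someone else".
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 1, 0]
print(evaluate(y_true, y_pred))  # → (0.625, 0.6, 0.75)
```

Precision answers "of the faces the model accepted, how many were really the user?", while recall answers "of the user's real faces, how many did the model accept?".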

An AI model can be deployed or used to perform the task it was trained for on new data that it has not seen before. This set of data is called inference data or production data. The inference data is used to generate outputs or predictions from the AI model. For instance, an AI model that recognizes faces might be used to unlock a smartphone by verifying the face of the user.

Importance of AI models

The importance of data and AI in business is rising rapidly. In order to make sense of the massive amounts of data being produced, businesses increasingly rely on AI models. AI models, when applied to real-world situations, can do what would take too long or be too complex for people, such as:

- Collecting data: When rivals have limited or no access to data, or when data is difficult to obtain, the capacity to collect training data is extremely valuable. Businesses can use data to train AI models and to re-train (improve) those models on an ongoing basis.

- Generating outputs: With a Generative Adversarial Network (GAN), for instance, a model can produce new data that resembles the training data. Generative AI models currently under development can create both artistic and photorealistic images (such as DALL-E 2).

- Understanding massive data sets: In model inference, the model uses the input data to predict the output. To do this, the model is applied to data it has never “seen” before, whether that is new data or real-time sensor data.

- Automating tasks: AI models are incorporated into pipelines for use in the commercial world. Data input, processing, and final presentation are all components of a pipeline, and each can be automated thanks to AI models.

Are you confused? Don’t worry, it’s quite natural! AI is a huge field of research. That’s why researchers sort AI models into types to make sense of them.

AI model types

AI models can be classified into different types based on the complexity or architecture they use. Some of the common types are:

- Linear Regression

- Deep Neural Networks

- Logistic Regression

- Decision Trees AI

- Linear Discriminant Analysis

- Naive Bayes

- Support Vector Machines

- Learning Vector Quantization

- K-nearest Neighbors

- Random Forest

What do they mean? Let’s dig deeper and learn more about them!

Linear Regression

The AI model uses a simple mathematical function that maps the input data to the output data using a linear relationship. For example, an AI model that predicts house prices might use a linear function that takes into account factors such as size, location, and more.
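Here is a minimal sketch of simple linear regression fitted with ordinary least squares. It assumes a single input feature (house size) to keep it short; a real price model would add more features such as location. The data and the `fit_line` name are hypothetical.

```python
# Illustrative sketch: the function name and the data are hypothetical.
def fit_line(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept makes the line pass through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# House size in square meters -> price in thousands (made-up numbers).
sizes = [50, 80, 100, 120]
prices = [150, 240, 300, 360]
slope, intercept = fit_line(sizes, prices)
predicted = slope * 90 + intercept  # price estimate for a 90 m² house
print(slope, intercept, predicted)  # → 3.0 0.0 270.0
```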

Deep Neural Networks

Deep Neural Networks (DNNs) are a common AI/ML model that is essentially an artificial neural network (ANN) with numerous hidden layers between the input and output layers.

Like other ANNs, they are built from interconnected components called artificial neurons and are inspired by the networks of neurons in the human brain. Check out our detailed explanation of how Deep Neural Network models function to gain a deeper understanding of this AI tool.

Many fields make use of DNN models, such as speech recognition, image recognition, and natural language processing (NLP).
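The layered structure can be sketched as a forward pass through a tiny network with one hidden layer of ReLU neurons; a deep network simply stacks more hidden layers. The weights below are hand-picked so the network computes XOR. In practice they would be learned during training, typically with backpropagation. All names are hypothetical.

```python
# Illustrative sketch: layer sizes, weights, and names are hypothetical.
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    # Each neuron computes a weighted sum of all inputs plus a bias, then an optional activation.
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

# Hand-picked (not learned) weights that make this network compute XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def forward(x):
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)  # input layer -> hidden layer
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]  # hidden layer -> output layer

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, forward(x))
```

XOR is a classic example because no single linear function can compute it; the hidden layer is what makes it possible.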

Logistic Regression

In logistic regression, the Y (output) variable is binary (true/false, present/absent), and the model maps the X (input) variables to the probability that Y is true using the logistic (sigmoid) function.
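Here is a minimal sketch of logistic regression trained with plain gradient descent on a single input. It uses made-up data: hours studied (X) versus whether a student passed an exam (Y). The sigmoid function turns the weighted input into a probability between 0 and 1. The names, learning rate, and epoch count are illustrative choices, not tuned values.

```python
import math

# Illustrative sketch: names, data, and hyperparameters are hypothetical.
def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, lr=0.1, epochs=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the average log-loss with respect to the weight and the bias.
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(sigmoid(w * x + b) - y for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]  # X: hours studied
passed = [0, 0, 0, 0, 1, 1, 1, 1]                 # Y: 1 = passed, 0 = failed
w, b = train_logistic(hours, passed)
p_low, p_high = sigmoid(w * 1.0 + b), sigmoid(w * 4.5 + b)
print(round(p_low, 2), round(p_high, 2))  # probability of passing after 1 h and 4.5 h
```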

Decision Trees AI

This AI methodology is not only easy to understand but also quite effective. A decision tree analyzes information from previous decisions, and the resulting trees often follow an elementary if/then structure. For instance: if you pack a sandwich for lunch instead of buying lunch, then you save money.

Decision trees can handle both regression and classification problems. Moreover, early forms of predictive analytics were powered by basic decision trees.
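The way a tree picks an if/then rule can be sketched with a decision stump, which is a tree with a single split. This sketch assumes binary 0/1 labels and one numeric feature. It tries every midpoint threshold and keeps the one with the lowest weighted Gini impurity, a common splitting criterion. A full decision tree would repeat this search recursively on each branch. The data and function names are hypothetical.

```python
# Illustrative sketch: function names and data are hypothetical.
def gini(labels):
    """Gini impurity of binary 0/1 labels: 0.0 when all labels agree."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)  # fraction of labels equal to 1
    return 1.0 - p * p - (1 - p) * (1 - p)

def majority(labels):
    return 1 if 2 * sum(labels) > len(labels) else 0

def best_split(xs, ys):
    """Return (impurity, threshold, left_label, right_label) for the best single split."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2  # candidate split halfway between neighboring values
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        # Weighted impurity of the two branches; lower means a cleaner split.
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if best is None or score < best[0]:
            best = (score, threshold, majority(left), majority(right))
    return best

# Daily temperature (°C) -> went to the beach (1) or not (0).
temps = [10, 14, 18, 22, 26, 30]
went = [0, 0, 0, 1, 1, 1]
score, threshold, left_label, right_label = best_split(temps, went)
print(f"if temperature <= {threshold}: predict {left_label} else: predict {right_label}")
# → if temperature <= 20.0: predict 0 else: predict 1
```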

Linear Discriminant Analysis (LDA)

Linear discriminant analysis is a classification method that looks for the linear combination of input features that best separates two or more classes. It projects the data onto directions that maximize the distance between the class means relative to the spread within each class, and then classifies new data points by which side of the resulting boundary they fall on.

LDA assumes that each class follows a normal distribution with a shared covariance. Besides classification, it is widely used to reduce the number of dimensions in a dataset before another model is applied.
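Here is a minimal sketch of two-class LDA in two dimensions, using Fisher's formulation. It assumes equal class priors, so the decision boundary passes through the midpoint of the two class means. It computes the projection direction w = inverse(S_W) * (m1 - m0), where S_W is the pooled within-class scatter matrix and m0, m1 are the class means. The function names and data are hypothetical, and singular scatter matrices are not handled.

```python
# Illustrative sketch of two-class Fisher LDA in 2-D; names and data are hypothetical.
def mean2(points):
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]

def fit_lda(class0, class1):
    m0, m1 = mean2(class0), mean2(class1)
    # Pooled within-class scatter matrix S_W (2x2), summed over both classes.
    s = [[0.0, 0.0], [0.0, 0.0]]
    for points, m in ((class0, m0), (class1, m1)):
        for p in points:
            d = (p[0] - m[0], p[1] - m[1])
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    # Invert the 2x2 matrix (assumed non-singular) and compute w = S_W^-1 (m1 - m0).
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    diff = (m1[0] - m0[0], m1[1] - m0[1])
    w = (inv[0][0] * diff[0] + inv[0][1] * diff[1], inv[1][0] * diff[0] + inv[1][1] * diff[1])
    # With equal priors, the boundary passes through the midpoint of the two class means.
    mid = ((m0[0] + m1[0]) / 2, (m0[1] + m1[1]) / 2)
    threshold = w[0] * mid[0] + w[1] * mid[1]
    return w, threshold

def predict_lda(w, threshold, point):
    # Project onto w; points beyond the threshold fall on class 1's side.
    return 1 if w[0] * point[0] + w[1] * point[1] > threshold else 0

class0 = [(1, 2), (2, 1), (2, 3), (3, 2)]
class1 = [(6, 7), (7, 6), (7, 8), (8, 7)]
w, threshold = fit_lda(class0, class1)
print(predict_lda(w, threshold, (2, 2)), predict_lda(w, threshold, (7, 7)))  # → 0 1
```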

Naive Bayes

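Naive Bayes is a classification method that assumes the inputs are independent of one another once the class is known. This “naive” assumption rarely holds exactly, but it keeps the model fast and simple, and it works well in practice for tasks such as spam filtering.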
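Here is a minimal multinomial Naive Bayes spam filter. It assumes whitespace-tokenized text and add-one (Laplace) smoothing. Because of the independence assumption, each class's score is simply its log prior plus the sum of per-word log probabilities. The training sentences and function names are hypothetical.

```python
import math
from collections import Counter

# Illustrative sketch: function names and training sentences are hypothetical.
def train_nb(docs, labels):
    priors = {c: labels.count(c) / len(labels) for c in set(labels)}
    word_counts = {c: Counter() for c in priors}
    for doc, c in zip(docs, labels):
        word_counts[c].update(doc.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return priors, word_counts, vocab

def predict_nb(model, doc):
    priors, word_counts, vocab = model
    scores = {}
    for c in priors:
        total = sum(word_counts[c].values())
        score = math.log(priors[c])
        for w in doc.lower().split():
            # Independence assumption: per-word log-probabilities simply add up.
            # Add-one smoothing keeps unseen words from zeroing out the probability.
            score += math.log((word_counts[c][w] + 1) / (total + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

docs = ["win money now", "free money offer", "meeting at noon", "project meeting notes"]
labels = ["spam", "spam", "ham", "ham"]
model = train_nb(docs, labels)
print(predict_nb(model, "free money"), predict_nb(model, "meeting notes"))  # → spam ham
```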

Support Vector Machines

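Support vector machines classify data by finding the partition (a hyperplane) that separates the classes with the widest possible margin, that is, the largest distance to the nearest training points, which are called support vectors.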
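Here is a minimal sketch of a linear SVM trained by stochastic subgradient descent on the regularized hinge loss. This is one common way to approximate the maximum-margin hyperplane; libraries often use other solvers. Labels are assumed to be +1 or -1, and the learning rate, regularization strength `lam`, epoch count, and data are hypothetical illustration values.

```python
# Illustrative sketch: hyperparameters, data, and names are hypothetical.
def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=1000):
    """Fit w, b for the hyperplane w . x + b = 0; labels must be +1 or -1."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(points, labels):
            margin = y * (w[0] * x1 + w[1] * x2 + b)
            if margin < 1:
                # Inside the margin or misclassified: shrink w (regularization) and step toward y * x.
                w = [w[0] - lr * (lam * w[0] - y * x1), w[1] - lr * (lam * w[1] - y * x2)]
                b += lr * y
            else:
                # Safely outside the margin: only the regularization term contributes.
                w = [w[0] * (1 - lr * lam), w[1] * (1 - lr * lam)]
    return w, b

def predict_svm(w, b, point):
    return 1 if w[0] * point[0] + w[1] * point[1] + b >= 0 else -1

points = [(1, 1), (2, 1), (1, 2), (5, 5), (6, 5), (5, 6)]
labels = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(points, labels)
print(predict_svm(w, b, (0, 0)), predict_svm(w, b, (8, 8)))
```

The hinge loss only penalizes points that sit inside the margin, so the final hyperplane is shaped mostly by the points closest to it, the support vectors.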

Learning Vector Quantization (LVQ)

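Learning vector quantization represents the training data with a small set of labeled prototypes. During training, the prototype closest to each data point is moved toward that point if their labels match and away from it if they do not, so the prototypes gradually converge on the region of each class. New data points are then classified by their nearest prototype, much like k-nearest neighbors does when it evaluates the distance between individual data points.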
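Here is a minimal LVQ1 sketch, the basic variant of learning vector quantization. It assumes one hand-placed starting prototype per class and a linearly decaying learning rate. Prototypes move toward training points with the same label and away from points with a different label, and new points are classified by their nearest prototype. The data, starting positions, and function names are hypothetical; `math.dist` requires Python 3.8 or later.

```python
import math

# Illustrative LVQ1 sketch: prototypes, data, and names are hypothetical.
def nearest(prototypes, x):
    # The prototype with the smallest Euclidean distance to x.
    return min(prototypes, key=lambda p: math.dist(p["vector"], x))

def train_lvq(prototypes, data, labels, lr=0.3, epochs=20):
    for epoch in range(epochs):
        rate = lr * (1 - epoch / epochs)  # learning rate shrinks linearly toward 0
        for x, y in zip(data, labels):
            p = nearest(prototypes, x)
            sign = 1 if p["label"] == y else -1  # attract on a match, repel on a mismatch
            p["vector"] = [v + sign * rate * (xi - v) for v, xi in zip(p["vector"], x)]
    return prototypes

def classify(prototypes, x):
    return nearest(prototypes, x)["label"]

prototypes = [{"vector": [3.0, 3.0], "label": "A"}, {"vector": [6.0, 6.0], "label": "B"}]
data = [(1, 1), (1, 2), (2, 1), (7, 7), (7, 8), (8, 7)]
labels = ["A", "A", "A", "B", "B", "B"]
train_lvq(prototypes, data, labels)
print(classify(prototypes, (1.5, 1.5)), classify(prototypes, (7.5, 7.5)))  # → A B
```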

K-nearest Neighbors

For both regression and classification tasks, the K-nearest Neighbors (kNN) model provides a straightforward supervised ML solution. It predicts the output for a new data point from the k most similar (nearest) training points, based on the idea that similar data points tend to lie close together.

A key drawback of this approach is that it becomes increasingly slow as more data is added, because every prediction must compare the new point against all of the stored training data.
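Here is a minimal kNN classifier using Euclidean distance and a majority vote among the k closest training points. The full scan over the stored data in `knn_predict` is exactly why kNN slows down as data grows. The function name, points, and labels are hypothetical; `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter

# Illustrative sketch: function name and data are hypothetical.
def knn_predict(train_points, train_labels, query, k=3):
    # Distance from the query to every stored point: a full scan on each prediction.
    distances = sorted(
        (math.dist(p, query), label) for p, label in zip(train_points, train_labels)
    )
    # Majority vote among the k closest neighbors.
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

points = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
labels = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_predict(points, labels, (2, 2)), knn_predict(points, labels, (5, 6)))  # → red blue
```

For regression, the vote would be replaced by the average of the k neighbors' values.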

Random Forest

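Random Forest is an ensemble learning model that can be applied to both regression and classification tasks. It uses a bagging technique to combine the results of numerous decision trees before making a final prediction.

To reduce overfitting, it builds a ‘forest’ of decision trees, each trained on a separate random subset of the data, and then combines their outputs (averaging for regression, a majority vote for classification) to make more accurate predictions. The random forest model really shines on huge datasets, and it has played a crucial role in the development of modern predictive analytics.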
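Here is a simplified random forest sketch. To keep it short, it uses decision stumps (one-split trees) instead of full trees. It shows three ingredients: bagging, where each stump is trained on a bootstrap sample; random feature selection, where each stump may split on only one randomly chosen feature; and majority voting. Real random forests grow deeper trees and pick random feature subsets at every split. The data, seed, tree count, and function names are hypothetical.

```python
import random
from collections import Counter

# Simplified random forest of decision stumps; names, data, and settings are hypothetical.
def fit_stump(rows, labels, feature):
    """Best single threshold on one feature, scored by misclassification count."""
    majority = Counter(labels).most_common(1)[0][0]
    # Fallback: no real split (everything goes left) and predict the majority label.
    best = (sum(y != majority for y in labels), float("inf"), majority, majority)
    values = sorted(set(row[feature] for row in rows))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for row, y in zip(rows, labels) if row[feature] <= t]
        right = [y for row, y in zip(rows, labels) if row[feature] > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return feature, t, left_label, right_label

def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bagging: a bootstrap sample of the same size, drawn with replacement.
        idx = [rng.randrange(len(rows)) for _ in rows]
        # Random feature selection: this stump may only split on one random feature.
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest

def predict_forest(forest, row):
    # Every stump votes; the most common label wins.
    votes = Counter(left if row[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]

rows = [(1, 2), (2, 1), (2, 3), (3, 2), (7, 8), (8, 7), (8, 9), (9, 8)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(rows, labels)
print(predict_forest(forest, (2, 2)), predict_forest(forest, (8, 8)))
```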

AI models are powerful tools that can enhance human capabilities and enable new possibilities in various domains and industries. However, they also pose challenges and risks, such as ethical issues, bias, privacy, security, explainability, and accountability. Therefore, it is important to understand how they work, how they can be used responsibly, and which ones to use.

Best AI models

It’s important to note that different AI models are used for different tasks, such as text generation, image generation, and more. These are some of the best ones:

- GPT-4

- MT-NLG

- LaMDA

- Chinchilla AI

- LLaMA

- DALL-E 2

- Stable Diffusion

- Midjourney v5

The best AI models are able to process large amounts of data quickly and accurately.

Let’s briefly look at what each of them is for.

GPT-4

GPT-4 is OpenAI’s latest and most advanced artificial intelligence system for natural language processing. It is a deep neural network that can generate coherent and varied text on almost any topic from a prompt or a few keywords. It is one of the best AI models.

Announcing GPT-4, a large multimodal model, with our best-ever results on capabilities and alignment: https://t.co/TwLFssyALF pic.twitter.com/lYWwPjZbSg

— OpenAI (@OpenAI) March 14, 2023

GPT-4 is based on the transformer architecture, which uses self-attention to learn from massive amounts of text data. GPT-4 can perform various tasks such as text summarization, question answering, text generation, translation, and more. The best AI models are able to learn from experience and adapt to changing conditions.
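OpenAI has not published the details of GPT-4’s architecture, so the following is not GPT-4’s code. It is a generic sketch of scaled dot-product self-attention, the core operation of the transformer architecture: softmax(Q Kᵀ / sqrt(d_k)) V. For simplicity it assumes made-up 2-dimensional token embeddings used directly as queries, keys, and values. A real model would first multiply them by learned query, key, and value matrices, and GPT-style models also mask out future tokens, which is omitted here. All names are hypothetical.

```python
import math

# Illustrative sketch of scaled dot-product self-attention; names and data are hypothetical.
def softmax(row):
    m = max(row)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def self_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V, returning the outputs and the attention weights."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v), weights

# Three tokens with 2-dimensional embeddings (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token "attends to" every token in the input, and each row sums to 1.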

We have previously explained how to use GPT-4.

MT-NLG

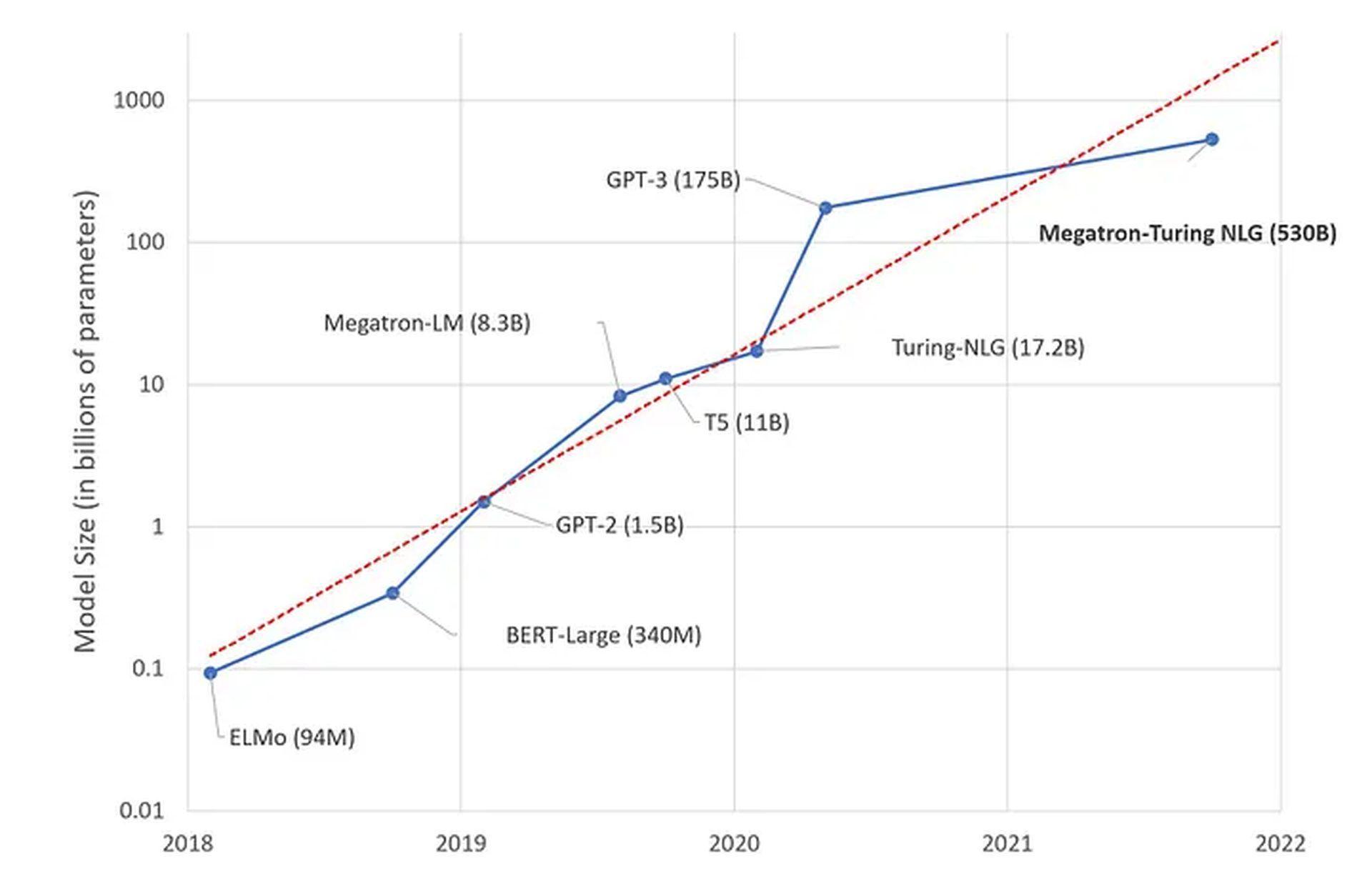

The 530 billion parameters that make up the Megatron-Turing Natural Language Generation model (MT-NLG) make it one of the most advanced monolithic transformer English language models yet built.

Compared with previous state-of-the-art models, this 105-layer, transformer-based MT-NLG performs better in zero-, one-, and few-shot settings. It outperforms other systems on a variety of natural language tasks, including completion prediction, reading comprehension, commonsense reasoning, natural language inference, and word sense disambiguation. The best AI models are able to make predictions with a high degree of accuracy.

LaMDA

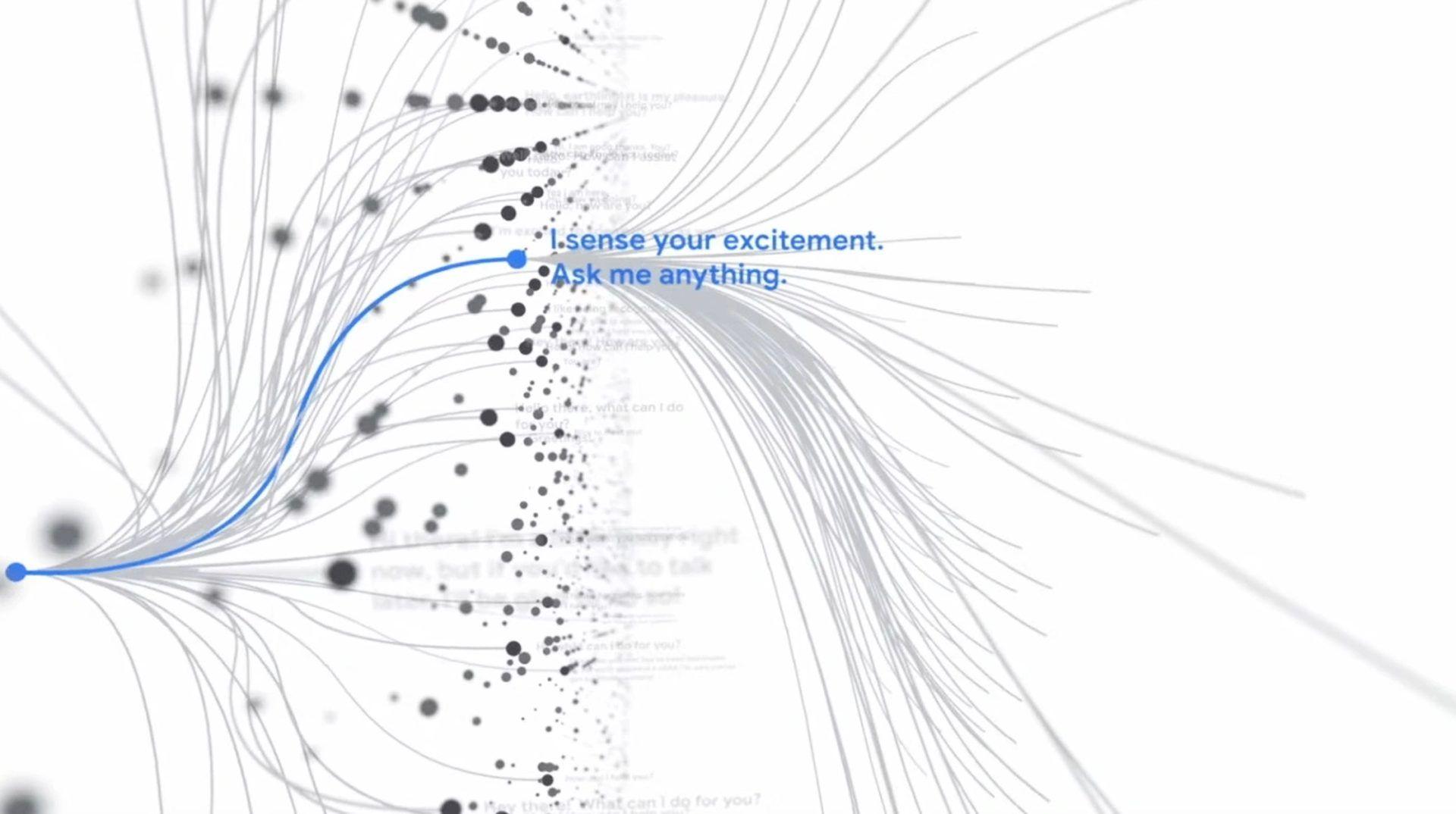

LaMDA is “the language model” that people are afraid of. It became a topic of discussion after a Google engineer, struck by the impression its answers gave, came to believe that LaMDA was conscious. The engineer also hypothesized that LaMDA, like humans, expresses its anxieties through communication. LaMDA powered Bard AI, Google’s would-be “ChatGPT killer”.

First and foremost, it is a statistical method for predicting the next words in a sequence based on the previous ones. What makes LaMDA innovative is that it can carry on a free-flowing dialogue instead of being limited to task-based responses. For a conversation to flow freely from one topic to another, a conversational language model needs to handle concepts such as multimodal user intent, reinforcement learning, and suggestions.
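LaMDA itself is a large transformer network, so the following is only a toy illustration of the underlying idea of predicting the next word from the previous one. It is a bigram model that counts which word most often follows each word in a tiny, made-up corpus. The function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict

# Toy bigram next-word predictor; names and corpus are hypothetical.
def train_bigrams(text):
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1  # how often `nxt` directly follows `prev`
    return counts

def predict_next(counts, word):
    followers = counts.get(word.lower())
    if not followers:
        return None  # the word never appeared with a follower
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"), predict_next(model, "on"))  # → cat the
```

Large language models replace these raw counts with a neural network that looks at the entire preceding context, which is what lets them keep a conversation coherent across topics.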

Chinchilla AI

Chinchilla AI by DeepMind is a popular large language model that outperformed larger competitors on many benchmarks. DeepMind released Chinchilla AI in March 2022. It works much like other large language models such as GPT-3, Jurassic-1, Gopher, and Megatron-Turing NLG. Chinchilla AI’s main selling point, however, is that it was trained for the same compute budget as Gopher while using fewer parameters and more data, and it delivers, on average, 7% more accurate results than Gopher.

For a FLOP budget, previous work over-allocated parameters at the expense of training tokens. Chinchilla and Gopher use the same training compute; yet Chinchilla is trained on 4x more tokens and is 4x smaller making it cheaper to use downstream. https://t.co/RepU03NJ91 2/3 pic.twitter.com/kBAavQ3rTC

— Google DeepMind (@GoogleDeepMind) April 12, 2022

It is one of the best AI models. For detailed information, we previously explained Chinchilla AI.
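To make the tweet’s trade-off concrete, here is a back-of-the-envelope check. It uses the widely used rule of thumb that training compute is roughly C ≈ 6 × N × D FLOPs, where N is the parameter count and D is the number of training tokens. The inputs are the published figures of about 280 billion parameters and 300 billion tokens for Gopher, and 70 billion parameters and 1.4 trillion tokens for Chinchilla. The rule of thumb is approximate, so the two estimates land in the same ballpark rather than matching exactly. The function name is hypothetical.

```python
# Back-of-the-envelope compute estimate; the 6 * N * D rule is an approximation.
def training_flops(params, tokens):
    # Roughly 6 FLOPs per parameter per training token (forward plus backward pass).
    return 6 * params * tokens

gopher = training_flops(280e9, 300e9)
chinchilla = training_flops(70e9, 1.4e12)
print(f"Gopher:     {gopher:.2e} FLOPs")
print(f"Chinchilla: {chinchilla:.2e} FLOPs")
print(f"Parameter ratio: {280e9 / 70e9:.1f}x smaller, token ratio: {1.4e12 / 300e9:.1f}x more")
```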

LLaMA

Meet the latest large language model! The LLaMA model was developed to aid researchers and developers in examining the potential of artificial intelligence in areas such as question answering and document summarization.

A new model developed by Meta’s Fundamental AI Research (FAIR) team was released at a time when both established tech firms and well-funded startups are competing to boast about their artificial intelligence (AI) advancements and incorporate the technology into their commercial offerings.

“LLMs have shown a lot of promise in generating text, having conversations, summarizing written material, and more complicated tasks like solving math theorems or predicting protein structures.”

-Mark Zuckerberg

Companies are investing heavily in developing the best AI models to gain a competitive edge.

DALL-E 2

OpenAI developed the DALL-E 2 machine learning model to generate digital images from written descriptions. OpenAI designed it as a generative tool, one that can create original pieces of art rather than only modifying existing ones. It is one of the best AI models for image generation.

The best AI models are often the result of collaboration between teams of experts from different fields.

For detailed information, we previously explained DALL-E 2.

Stable Diffusion

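Stable Diffusion is an open-source AI art generator released by Stability AI on August 22, 2022. It is written in Python, and rather than a transformer language model, it is a latent diffusion model: a transformer-based text encoder interprets the prompt, and an image-generation network gradually removes noise to form the picture. It runs best on systems with a CUDA-capable (NVIDIA) GPU.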

It is one of the best AI models for image generation. Check out our Stable Diffusion AI guide for detailed information.

Midjourney v5

Midjourney v5 is the latest model of the popular AI art program. Midjourney’s high-quality images and unique artistic flair have made it a popular AI text-to-image generator. The program takes user input via Discord bot commands and then makes an image based on the words entered.

It is one of the best AI models for image generation. Check out our Midjourney guide for detailed information.

How to choose the right AI Model for your business?

Artificial intelligence (AI) is transforming every industry and sector, from healthcare to finance, from education to entertainment. But how do you know which AI model is best suited for your specific business problem? In this blog post, we will provide some guidelines and tips on how to choose the right AI model for your use case.

To choose the right AI model for your business problem, you need to consider the following factors:

- The type and amount of data you have: Different AI models require different types and amounts of data to train and perform well. For example, supervised learning models need a lot of labeled data, which can be expensive and time-consuming to obtain. Unsupervised learning models can work with unlabeled data, but they may not produce meaningful results if the data is noisy or irrelevant. Reinforcement learning models need a lot of trial-and-error interactions with an environment, which can be challenging to simulate or replicate in real life.

- The complexity and specificity of your problem: Different AI models have different levels of complexity and specificity. For example, supervised learning models can be very accurate and precise for well-defined problems, such as image classification or sentiment analysis. Unsupervised learning models can be more flexible and generalizable for complex or ambiguous problems, such as anomaly detection or customer segmentation. Reinforcement learning models can be very adaptive and creative for dynamic or interactive problems, such as game playing or robot navigation.

- The trade-off between speed and accuracy: Different AI models have different trade-offs between speed and accuracy. For example, supervised learning models can be very fast and accurate once they are trained, but they may take a long time and a lot of resources to train. Unsupervised learning models can be very fast and efficient to train, but they may not be very accurate or reliable in some cases. Reinforcement learning models can be very slow and unstable to train, but they may achieve very high levels of performance and optimization in the long run.

As you can see, there is no one-size-fits-all solution when it comes to choosing the right AI model for your business problem. You need to understand your problem domain, your data availability and quality, your performance goals and constraints, and your budget and timeline. You also need to experiment with different AI models and evaluate their results using appropriate metrics and criteria.
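The experiment-and-evaluate step can be sketched as training several candidate models on the same data and keeping the one that scores best on a held-out validation set. This sketch assumes two deliberately simple candidates, a majority-class baseline and a 1-nearest-neighbor classifier, with accuracy as the metric. The models, data, and names are hypothetical; in practice you would pick metrics that match your business goals.

```python
import math
from collections import Counter

# Illustrative model comparison; candidates, data, and names are hypothetical.
def majority_baseline(train_x, train_y):
    label = Counter(train_y).most_common(1)[0][0]
    return lambda x: label  # always predicts the most common training label

def one_nearest_neighbor(train_x, train_y):
    # Predicts the label of the single closest training point.
    return lambda x: min(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], x))[1]

def accuracy(model, xs, ys):
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(ys)

train_x = [(1, 1), (2, 1), (1, 2), (6, 6), (7, 6), (6, 7), (2, 2)]
train_y = [0, 0, 0, 1, 1, 1, 0]
val_x = [(1.5, 1.5), (6.5, 6.5), (7, 7), (0.5, 1)]  # held out from training
val_y = [0, 1, 1, 0]

candidates = {"majority baseline": majority_baseline, "1-nearest neighbor": one_nearest_neighbor}
scores = {name: accuracy(build(train_x, train_y), val_x, val_y) for name, build in candidates.items()}
best = max(scores, key=scores.get)
print(scores, best)  # → {'majority baseline': 0.5, '1-nearest neighbor': 1.0} 1-nearest neighbor
```

Including a trivial baseline is a cheap sanity check: a more complex model is only worth its cost if it clearly beats the baseline on data it has not seen.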

AI 101

Are you new to AI? You can still get on the AI train! We have created a detailed AI glossary of the most commonly used artificial intelligence terms and explained the basics of artificial intelligence, as well as its risks and benefits. Feel free to use them. Learning how to use AI is a game changer!

AI tools we have reviewed

Almost every day, a new tool, model, or feature pops up and changes our lives, like the new OpenAI ChatGPT plugins, and we have already reviewed some of the best ones:

- Text-to-text AI tools

Do you want to learn how to use ChatGPT effectively? We have some tips and tricks for you that don’t require switching to ChatGPT Plus! When you use the AI tool, you may get errors like “ChatGPT is at capacity right now” and “too many requests in 1-hour try again later”. Yes, these errors are really annoying, but don’t worry; we know how to fix them. Is ChatGPT plagiarism free? That is a hard question with no single answer. If you are worried about plagiarism, feel free to use AI plagiarism checkers. You can also check out other AI chatbots and AI essay writers for better results.

- Text-to-image AI tools

While there are still some debates about artificial intelligence-generated images, people are still looking for the best AI art generators. Will AI replace designers? Keep reading and find out.

- Other AI tools

Do you want more tools? Check out the best free AI art generators.