Imagine a speech recognition model that not only understands multiple languages but also seamlessly translates and identifies them. Whisper v3 is the embodiment of this vision. It’s not just a model; it’s a dynamic force that reshapes the boundaries of audio data comprehension.

Transcribing, translating, and identifying the language of spoken words has long been a holy grail of speech technology, and OpenAI has just moved it a step closer.

Whisper v3’s multifaceted audio revolution

Whisper v3 is a highly advanced and versatile speech recognition model developed by OpenAI. It is a part of the Whisper family of models, and it brings significant improvements and capabilities to the table. Let’s dive into the details of Whisper v3:

- General-purpose speech recognition model: Whisper v3, like its predecessors, is a general-purpose speech recognition model. It is designed to transcribe spoken language into text, making it an invaluable tool for a wide range of applications, including transcription services, voice assistants, and more.

- Multitasking capabilities: One of the standout features of Whisper v3 is its multitasking capabilities. It can perform a variety of speech-related tasks, which include:

  - Multilingual speech recognition: Whisper v3 can recognize speech in multiple languages, making it suitable for diverse linguistic contexts.

  - Speech translation: It can not only transcribe speech but also translate speech from other languages into English.

  - Language identification: The model can identify the language being spoken in the provided audio.

  - Voice activity detection: Whisper v3 can determine when speech is present in audio data, making it useful for applications like voice command detection in voice assistants.
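The tasks listed above map onto a handful of calls in the open-source `whisper` Python package. The sketch below is illustrative rather than definitive: the function names `run_tasks` and `most_likely_language` and the audio path are hypothetical, and it assumes the package and ffmpeg are already installed.

```python
from typing import Dict


def most_likely_language(probs: Dict[str, float]) -> str:
    """Return the language code with the highest probability."""
    return max(probs, key=probs.get)


def run_tasks(audio_path: str, model_name: str = "base") -> dict:
    """Transcribe, translate to English, and identify the language of one file."""
    # Imported lazily so the helper above can be used without the package.
    import whisper

    model = whisper.load_model(model_name)

    # Multilingual speech recognition: text in the spoken language.
    transcript = model.transcribe(audio_path)

    # Speech translation: English text, whatever the source language.
    translation = model.transcribe(audio_path, task="translate")

    # Language identification on a 30-second window of the audio.
    audio = whisper.pad_or_trim(whisper.load_audio(audio_path))
    mel = whisper.log_mel_spectrogram(audio, n_mels=model.dims.n_mels).to(model.device)
    _, probs = model.detect_language(mel)

    return {
        "transcript": transcript["text"],
        "english_translation": translation["text"],
        "language": most_likely_language(probs),
    }
```

Calling `run_tasks("interview.mp3")` (a placeholder file name) would return the three results in one dictionary. Loading the model once and reusing it for every task avoids paying the load cost repeatedly.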

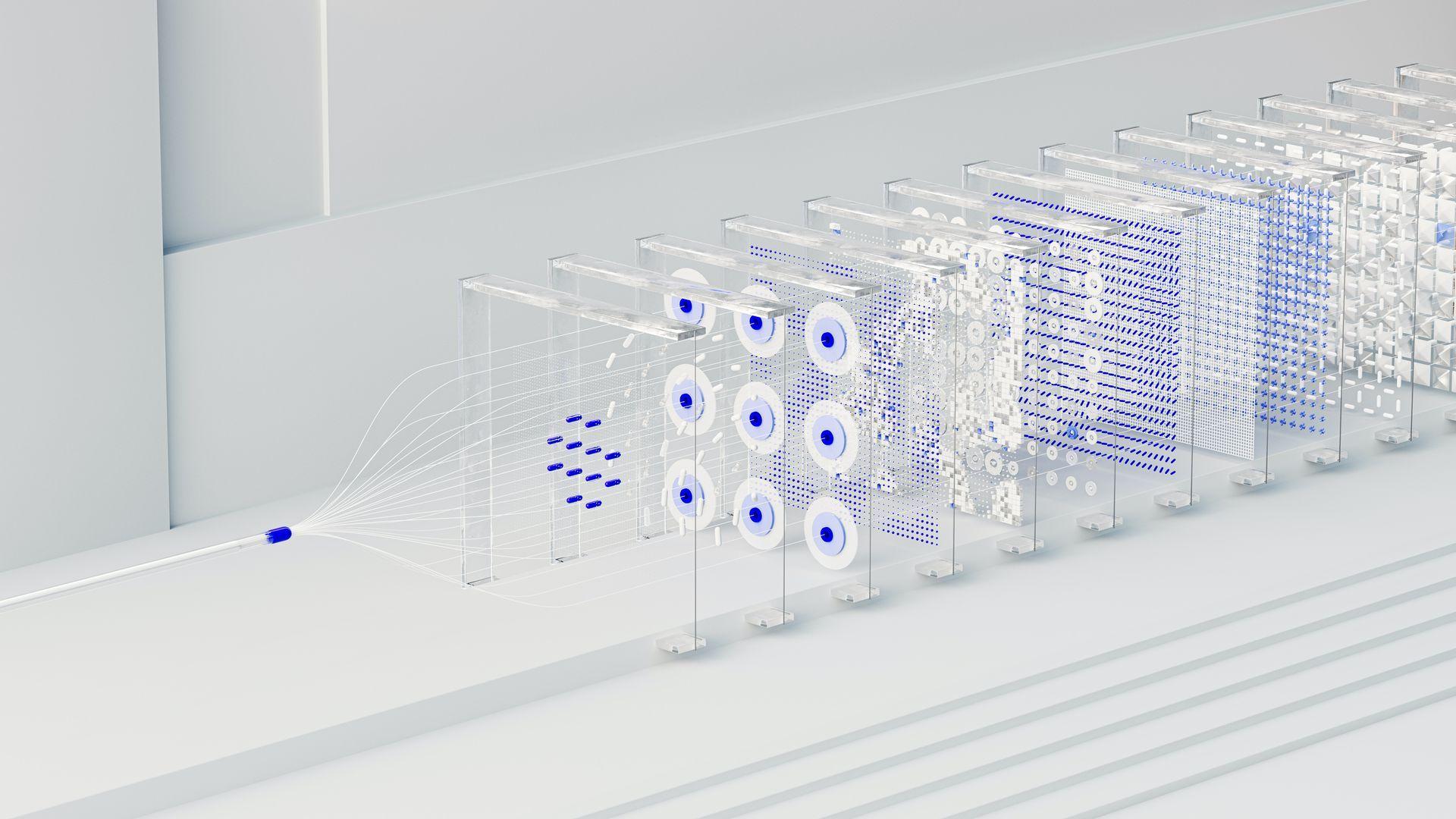

Whisper v3 is built on a Transformer sequence-to-sequence model. The encoder processes the audio, and the decoder predicts a sequence of tokens that jointly represents the requested task and its output. This design lets a single model replace several stages of a traditional speech processing pipeline, simplifying the overall process.

To switch between tasks, Whisper v3 uses special tokens that serve as task specifiers or classification targets. These tokens tell the model which task to perform, for example whether to transcribe or translate.
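To make the idea of task-specifying tokens concrete, here is a minimal sketch of how a decoder prompt could be assembled. The token strings follow the format described for Whisper, but `build_decoder_prompt` is a hypothetical helper written for illustration; the real tokenizer maps these markers to integer ids internally.

```python
from typing import List


def build_decoder_prompt(language: str, task: str = "transcribe",
                         timestamps: bool = False) -> List[str]:
    """Assemble the special tokens that tell the decoder what to do."""
    if task not in ("transcribe", "translate"):
        raise ValueError(f"unknown task: {task!r}")

    tokens = [
        "<|startoftranscript|>",  # marks the beginning of the output
        f"<|{language}|>",        # language tag, also the language-ID target
        f"<|{task}|>",            # task specifier
    ]
    if not timestamps:
        tokens.append("<|notimestamps|>")  # ask for plain text only
    return tokens


print(build_decoder_prompt("fr", task="translate"))
```

Changing a single token switches the same model from transcription to translation, which is why one network can cover several tasks.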


Available models and languages

Whisper is available in five model sizes, four of which also have English-only versions. The sizes trade speed against accuracy. The models, their approximate memory requirements, and their inference speeds relative to the large model are as follows:

- Tiny: 39 million parameters, ~32x faster than the large model, and requires around 1 GB of VRAM.

- Base: 74 million parameters, ~16x faster, and also requires about 1 GB of VRAM.

- Small: 244 million parameters, ~6x faster, and needs around 2 GB of VRAM.

- Medium: 769 million parameters, ~2x faster, and requires about 5 GB of VRAM.

- Large: 1550 million parameters, which serves as the baseline, and needs approximately 10 GB of VRAM.

For English-only applications, the English-only models tend to perform better, particularly tiny.en and base.en. The difference becomes less significant with the small.en and medium.en models.
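The size list above can be turned into a simple selection rule. The following sketch picks the largest model whose approximate VRAM requirement fits a given budget; the figures are copied from the list, while `pick_model` and its parameters are hypothetical names chosen for this example.

```python
# (name, parameters in millions, approximate VRAM in GB, has English-only variant)
MODELS = [
    ("tiny", 39, 1, True),
    ("base", 74, 1, True),
    ("small", 244, 2, True),
    ("medium", 769, 5, True),
    ("large", 1550, 10, False),
]


def pick_model(vram_gb: float, english_only: bool = False) -> str:
    """Return the largest model that fits in the given amount of VRAM."""
    fitting = [m for m in MODELS if m[2] <= vram_gb]
    if not fitting:
        raise ValueError(f"no model fits in {vram_gb} GB of VRAM")

    name, _params, _vram, has_english_variant = fitting[-1]
    # English-only variants carry a ".en" suffix; large has none.
    if english_only and has_english_variant:
        return name + ".en"
    return name


print(pick_model(4))                     # small
print(pick_model(6, english_only=True))  # medium.en
```

The VRAM numbers are approximations, so leaving some headroom is sensible in practice.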

Whisper v3’s accuracy varies significantly with the language being transcribed or translated. It is evaluated with word error rate (WER) and character error rate (CER) on different datasets, where lower values are better. The per-language results published by OpenAI show how well the model handles each language and task.
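For readers unfamiliar with these metrics, both are an edit distance divided by the length of the reference: counted in words for WER and in characters for CER. The sketch below is a plain-Python illustration and not the evaluation code behind any published result; real evaluations usually normalize the text (casing, punctuation) first, which is skipped here.

```python
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Minimum number of substitutions, deletions, and insertions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance over reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: character-level edit distance over reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# One of six reference words is missing from the hypothesis.
print(wer("the cat sat on the mat", "the cat sat on mat"))
```

CER is typically preferred for languages that are not written with spaces between words, where counting word errors is not meaningful.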

How to use Whisper v3

To use Whisper v3 effectively, it’s important to set up the necessary environment. The model was developed using Python 3.9.9 and PyTorch 1.10.1. However, it is expected to be compatible with a range of Python versions, from 3.8 to 3.11, as well as recent PyTorch versions.

Additionally, it relies on several Python packages, including OpenAI’s tiktoken for efficient tokenization. Whisper itself can be installed with pip, where the package is published as openai-whisper. The setup also requires ffmpeg, a command-line tool used for audio processing, which can be installed with the package manager of your operating system.
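Before installing, it can help to confirm that the environment matches the requirements described above. The following preflight check is a small sketch: the helper names are hypothetical, the version bounds are the ones quoted in this article, and the install commands in the comments are the commonly used ones rather than an exhaustive list.

```python
import shutil
import sys
from typing import List, Optional, Tuple

# Typical installation commands (run in a shell, not in Python):
#   pip install -U openai-whisper
#   sudo apt update && sudo apt install ffmpeg   # Ubuntu or Debian
#   brew install ffmpeg                          # macOS with Homebrew

SUPPORTED_MIN = (3, 8)
SUPPORTED_MAX = (3, 11)


def python_supported(version: Optional[Tuple[int, ...]] = None) -> bool:
    """Check that the Python version is within the range quoted above."""
    if version is None:
        version = tuple(sys.version_info)
    return SUPPORTED_MIN <= tuple(version[:2]) <= SUPPORTED_MAX


def ffmpeg_available() -> bool:
    """Check that an ffmpeg executable can be found on the PATH."""
    return shutil.which("ffmpeg") is not None


def preflight() -> List[str]:
    """Return a list of human-readable problems; empty means ready."""
    problems = []
    if not python_supported():
        problems.append("Python version is outside the 3.8 to 3.11 range")
    if not ffmpeg_available():
        problems.append("ffmpeg was not found on the PATH")
    return problems


if __name__ == "__main__":
    for problem in preflight():
        print("warning:", problem)
```

A Python version outside the quoted range is not necessarily broken, so the check reports warnings instead of stopping.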


Conclusion

Whisper v3 is a versatile speech recognition model by OpenAI. It offers multilingual speech recognition, translation, language identification, and voice activity detection. Built on a Transformer model, it simplifies audio processing. Whisper v3 is compatible with various Python versions, has different model sizes, and is accessible via command-line and Python interfaces. Released under the MIT License, it encourages innovation and empowers users to extract knowledge from spoken language, transcending language barriers.

Featured image credit: Andrew Neel/Pexels