Stability AI has released Stable Video Diffusion (SVD), an AI tool that turns still images into short videos. The free research tool, available as an open-weights preview, consists of two image-to-video models and can run locally on machines with Nvidia GPUs. The release is a notable step for AI-assisted video generation, although the quality of its results currently varies.

Today, we are releasing Stable Video Diffusion, our first foundation model for generative video based on the image model Stable Diffusion. Now available in research preview, this state-of-the-art generative AI video model represents a significant step in our journey toward creating models for everyone of every type.

-Stability AI

What is Stable Video Diffusion, or SVD?

Stable Video Diffusion (SVD) is Stability AI's ambitious first step into AI video synthesis. It follows last year's successful launch of Stable Diffusion, an open-weights image synthesis model that ignited the field of open image synthesis and fostered a large community of enthusiasts who have since extended the technology with their own customizations. Stability AI now aims to repeat that success in video synthesis, a technology that is still in its early stages.

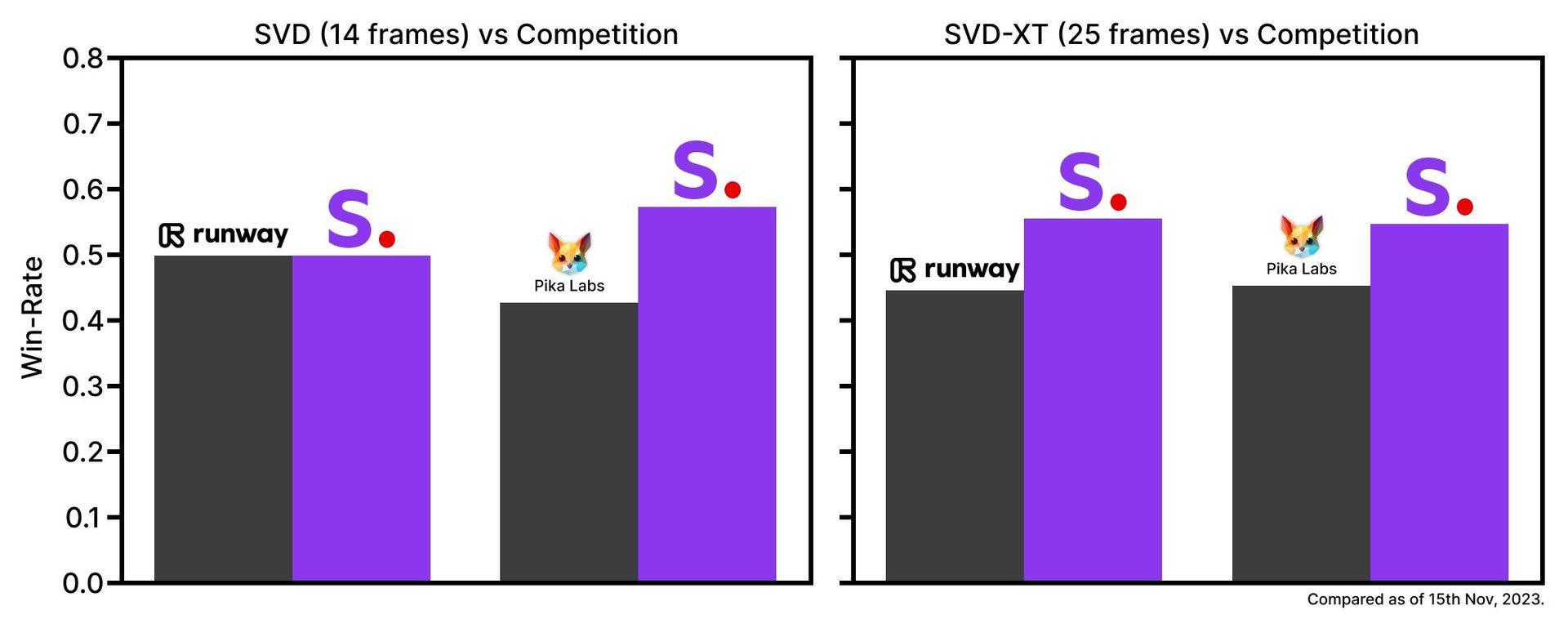

Stable Video Diffusion currently consists of two models. The first, known simply as “SVD,” converts an image into a video of up to 14 frames. The second, “SVD-XT,” extends this to 25 frames. Both support output frame rates from 3 to 30 frames per second and produce short MP4 clips, typically 2 to 4 seconds long, at a resolution of 576×1024.
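Because clip length is just the frame count divided by the playback frame rate, the numbers above can be checked with simple arithmetic. The short sketch below is illustrative only: the frame counts and the 3 to 30 fps range come from this article, while the 6 fps value is an assumption chosen to show how a rate near that figure yields roughly the 2 to 4 second clips described. The `clip_duration` helper is hypothetical and not part of any Stability AI tool.

```python
# Clip duration = frame count / playback frame rate.
# Frame counts (14 for SVD, 25 for SVD-XT) and the 3-30 fps range come from
# the article; the 6 fps value below is an illustrative assumption.

MODELS = {"SVD": 14, "SVD-XT": 25}
MIN_FPS, MAX_FPS = 3, 30


def clip_duration(frames: int, fps: float) -> float:
    """Return the playback length in seconds of `frames` frames shown at `fps`."""
    if not MIN_FPS <= fps <= MAX_FPS:
        raise ValueError(f"fps must be between {MIN_FPS} and {MAX_FPS}")
    return frames / fps


for name, frames in MODELS.items():
    shortest = clip_duration(frames, MAX_FPS)  # fastest playback, shortest clip
    longest = clip_duration(frames, MIN_FPS)   # slowest playback, longest clip
    at_6fps = clip_duration(frames, 6)         # assumed middle-of-the-road rate
    print(f"{name}: {shortest:.2f}s at {MAX_FPS} fps, "
          f"{longest:.2f}s at {MIN_FPS} fps, {at_6fps:.2f}s at 6 fps")
```

At 6 fps this gives about 2.33 seconds for SVD and 4.17 seconds for SVD-XT, while the full 3 to 30 fps range stretches the possible clip lengths well beyond that.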

Key points detailed by Stability AI:

- Adaptable to numerous video applications: SVD can be adapted to a range of video tasks, including multi-view synthesis from a single image after fine-tuning on multi-view datasets. Stability AI envisions a family of models that build on and extend this foundation, with the goal of growing an ecosystem similar to the one that has developed around Stable Diffusion.

- Competitive in performance: Released as two image-to-video models, Stable Video Diffusion generates videos of 14 and 25 frames at adjustable frame rates between 3 and 30 fps. In initial external evaluations, these models outperformed leading closed models in user preference studies, even at this early stage.

Stability AI stresses that Stable Video Diffusion (SVD) is still in its infancy and is intended primarily for research:

While we eagerly update our models with the latest advancements and work to incorporate your feedback, this model is not intended for real-world or commercial applications at this stage. Your insights and feedback on safety and quality are important to refining this model for its eventual release.

The Stable Video Diffusion research paper does not disclose where its training data came from. It says only that the team used “a large video dataset,” which it curated into the Large Video Dataset (LVD). LVD contains approximately 580 million annotated video clips totaling 212 years of content.
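To put the LVD figures in perspective, the quick calculation below estimates the average clip length implied by roughly 580 million clips and 212 years of footage. This is a back-of-the-envelope sketch, not a number reported by Stability AI: it assumes a 365.25-day year and treats both published figures as exact, so the result is only an approximation. The `average_clip_seconds` helper is a hypothetical name used for illustration.

```python
# Back-of-the-envelope check on the LVD figures quoted in the article:
# ~580 million clips totaling ~212 years of footage.
# Assumes a 365.25-day year; both inputs are approximate, so the result is too.

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds
TOTAL_YEARS = 212
NUM_CLIPS = 580_000_000


def average_clip_seconds(total_years: float, num_clips: int) -> float:
    """Average clip length in seconds, given total duration in years and clip count."""
    return total_years * SECONDS_PER_YEAR / num_clips


avg = average_clip_seconds(TOTAL_YEARS, NUM_CLIPS)
print(f"Average clip length: {avg:.1f} seconds")
```

The result works out to roughly 11.5 seconds per clip, which suggests the dataset is made up mostly of short segments rather than full-length videos.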

SVD is not the first AI model to turn images into video, but it stands out for its approach and potential. For those interested in exploring or contributing to SVD, the source code is openly available on GitHub and the model weights on Hugging Face. Another way to experiment with SVD is the Pinokio platform, which simplifies setup by handling installation dependencies and running the model in a dedicated environment.

Stability AI has also opened a waitlist for an upcoming web experience featuring a text-to-video interface. Powered by Stable Video Diffusion, this interface is expected to find uses in sectors such as advertising, education, and entertainment. It offers a glimpse of SVD's practical applications and its potential impact beyond the research community.