Apple has quietly released Ferret, a multimodal large language model (LLM). Unlike text-only models, Ferret combines language understanding with image analysis, which broadens the kinds of questions it can answer.

Published on GitHub without fanfare, Ferret marks a step towards openness for Apple and invites developers and researchers to explore its potential. Scaling Ferret to compete with larger models remains a challenge, however, largely because of infrastructure limitations. Still, the potential impact of Ferret on Apple devices is considerable, promising a new dimension in user interactions and a deeper comprehension of visual content. Want to learn more? We gathered everything you need to know about Apple’s latest move in the AI landscape.

What is Apple Ferret LLM?

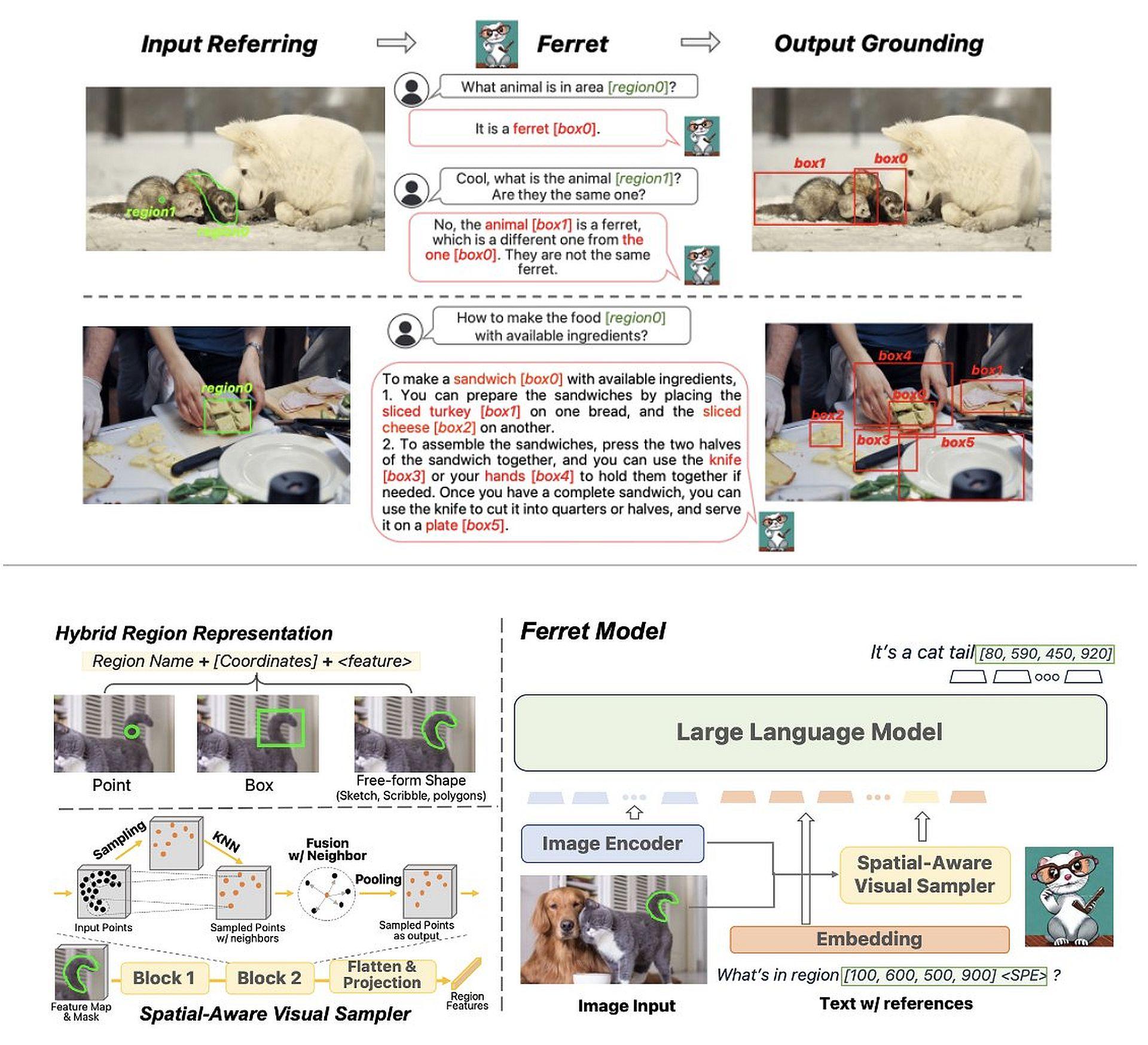

Ferret is an open-source multimodal large language model (LLM) developed by Apple Inc. in collaboration with Columbia University. It stands out for integrating language understanding with image analysis. Released on GitHub, it diverges from traditional language models by incorporating visual elements into its processing.

Here is how the Apple Ferret LLM works:

- Visual integration: Ferret doesn’t limit itself to textual comprehension but analyzes specific regions of images, identifying elements within them. These elements are then used as part of a query, allowing Ferret to respond to prompts that involve both text and images.

- Contextual responses: For instance, when asked to identify an object within an image, Ferret not only recognizes the object but leverages surrounding elements to provide deeper insights or context, going beyond mere object recognition.

Zhe Gan, an Apple AI research scientist, highlighted Ferret’s capability to reference and understand elements within images at various levels of detail. This flexibility allows Ferret to comprehend queries involving complex visual content.
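The region-based querying described above can be illustrated with a small sketch. It is not Ferret’s actual interface: the `Region` type, the `<region>` placeholder, the 0 to 1000 coordinate grid, and the `build_region_prompt` function are all hypothetical names chosen for illustration. The sketch only shows the general idea of turning a selected area of an image into part of a text query.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Region:
    """A rectangular image region in pixel coordinates (hypothetical type)."""
    x1: int
    y1: int
    x2: int
    y2: int

    def normalized(self, width: int, height: int, bins: int = 1000) -> Tuple[int, int, int, int]:
        """Scale the box onto a 0..bins grid so it no longer depends on image size."""
        return (
            round(self.x1 * bins / width),
            round(self.y1 * bins / height),
            round(self.x2 * bins / width),
            round(self.y2 * bins / height),
        )


def build_region_prompt(question: str, region: Region, width: int, height: int) -> str:
    """Replace the '<region>' placeholder in the question with box coordinates.

    The placeholder convention is an assumption made for this sketch.
    """
    if "<region>" not in question:
        raise ValueError("question must contain a '<region>' placeholder")
    x1, y1, x2, y2 = region.normalized(width, height)
    return question.replace("<region>", f"[{x1}, {y1}, {x2}, {y2}]")


if __name__ == "__main__":
    # A box drawn on a 500x400 pixel image becomes part of the text query.
    box = Region(x1=50, y1=100, x2=150, y2=200)
    print(build_region_prompt("What is the object in <region>?", box, 500, 400))
```

Normalizing the coordinates keeps the query independent of image resolution. A real multimodal model would also receive the image itself alongside the text, which this sketch leaves out.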

What sets Ferret’s introduction apart is not only the technology but also Apple’s strategic move towards openness. Departing from its typically guarded nature, Apple chose to release Ferret as an open-source model. This shift towards transparency signals a collaborative approach, inviting contributions and fostering an ecosystem where researchers and developers globally can enhance, refine, and explore the model’s capabilities.

Challenges ahead

Ferret’s emergence heralds a new era in AI, where multimodal understanding becomes the norm rather than the exception. Its capabilities open doors to myriad applications across diverse fields, from enhanced content analysis to innovative human-AI interactions.

However, Apple faces challenges in scaling Ferret due to infrastructure limitations, raising questions about its ability to compete with the companies behind larger models such as GPT-4 in deploying large-scale language models. Addressing this will require strategic decisions, potentially involving partnerships or a further embrace of open-source principles to draw on collective expertise and resources.

For more detailed information about the Apple Ferret LLM, visit its arXiv page.

Apple Ferret LLM’s potential impact on iPhones and other Apple devices

The introduction of Apple’s Ferret LLM could have a significant impact on various Apple products, particularly by enhancing user experiences and functionality in the following ways:

Improved image-based interactions

Integrating Ferret’s image analysis into Siri could enable more sophisticated and contextual interactions. Users might be able to ask questions about images or request actions based on visual content.

Ferret’s capabilities might power advanced visual search functionalities within Apple’s ecosystem. Users could search for items or information within images, leading to a more intuitive and comprehensive search experience.

Augmented user assistance

Ferret’s ability to interpret images and provide contextual information could greatly benefit users with accessibility needs. It could assist in identifying objects or scenes for visually impaired users, enhancing their daily interactions with Apple devices.

Ferret’s integration might enhance the capabilities of Apple’s ARKit, allowing for more sophisticated and interactive augmented reality experiences based on image understanding and contextual responses.

Enriched media and content understanding

Ferret could enhance the organization and search functionalities within the Photos app by recognizing and indexing specific elements within images and videos, enabling smarter categorization and search.

Leveraging Ferret’s image understanding, Apple might offer more personalized content recommendations based on users’ interactions with visual content across its ecosystem.

Developer innovation

Developers might leverage Ferret’s capabilities to create innovative applications across various domains, from education to healthcare, by incorporating advanced image and language understanding into their apps.

However, bringing Ferret’s capabilities to Apple products would depend on various factors, including technological feasibility, user privacy considerations, and the extent of integration into existing Apple software and hardware. Additionally, Apple’s strategic decisions regarding the scalability and deployment of Ferret within its product lineup will determine the actual impact on consumer-facing features.

Featured image credit: Jhon Paul Dela Cruz/Unsplash