The LPU vs GPU debate has intensified since Groq showcased the remarkable capabilities of its Language Processing Unit (LPU), setting new benchmarks in processing speed. This week, Groq’s LPU astounded the tech community by running open-source Large Language Models (LLMs) such as Llama-2 70B, a model with 70 billion parameters, at more than 100 tokens per second.

It also demonstrated its prowess with Mixtral, reaching nearly 500 tokens per second per user. These results hint at a possible shift in computing paradigms, in which LPUs offer a specialized, more efficient alternative to the traditionally dominant GPUs for language-based tasks.
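To put these throughput figures in perspective, the short Python sketch below converts tokens per second into average time per token and into the time needed to stream a complete answer. It uses 100 tokens per second as a round lower bound for Llama-2 70B; the 500-token answer length is a hypothetical example, and the calculation assumes a steady generation rate, ignoring time-to-first-token and network overhead.

```python
def ms_per_token(tokens_per_second: float) -> float:
    """Average time to produce one token, in milliseconds."""
    return 1000.0 / tokens_per_second

def seconds_for_answer(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady rate (ignores time-to-first-token)."""
    return num_tokens / tokens_per_second

# Throughput figures quoted in the text; the 500-token answer is hypothetical
for model, rate in [("Llama-2 70B", 100.0), ("Mixtral", 500.0)]:
    print(f"{model}: {ms_per_token(rate):.1f} ms/token, "
          f"{seconds_for_answer(500, rate):.1f} s for a 500-token answer")
```

At 100 tokens per second each token takes about 10 ms, so a 500-token answer streams in roughly 5 seconds; at 500 tokens per second the same answer takes about 1 second.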

What is an LPU?

So what exactly is an LPU, how does it work, and where did Groq come from (a name that, unfortunately, clashes with Musk’s similarly named Grok)? On its website, Groq introduces its LPUs, or ‘language processing units,’ as “a new type of end-to-end processing unit system that provides the fastest inference for computationally intensive applications with a sequential component to them, such as AI language applications (LLMs).”

Remember the historic 2016 Go match in which AlphaGo defeated world champion Lee Sedol? About a month before that match, AlphaGo lost a practice game. The DeepMind team then moved AlphaGo onto a Tensor Processing Unit (TPU), which boosted its performance enough to win by a substantial margin.

This moment showed how much processing power matters in unlocking the full potential of sophisticated computing. It inspired Jonathan Ross, who had initially spearheaded the TPU project at Google, to found Groq in 2016, which led to the development of the LPU. The LPU is engineered specifically to handle language-based operations quickly. Whereas conventional chips are built to run many independent tasks simultaneously (parallel processing), the LPU is optimized for workloads that must proceed step by step (sequential processing), which makes it highly effective for language comprehension and generation.
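The “sequential component” Groq refers to shows up most clearly in text generation: a language model produces one token at a time, and each new token depends on all the tokens before it. The minimal Python sketch below illustrates that dependency. `toy_model` is a hypothetical stand-in for a real LLM, and the loop describes the data dependency only, not how Groq’s hardware actually executes it; within each step, the underlying matrix math is still highly parallel.

```python
from typing import Callable, List

def generate(prompt: List[int], next_token: Callable[[List[int]], int], steps: int) -> List[int]:
    """Autoregressive decoding: each new token is computed from every token
    before it, so step t cannot start until step t-1 has finished."""
    tokens = list(prompt)
    for _ in range(steps):
        tokens.append(next_token(tokens))  # depends on the whole context so far
    return tokens

def toy_model(context: List[int]) -> int:
    """Hypothetical stand-in for an LLM: next token id = sum of context mod 10."""
    return sum(context) % 10

print(generate([1, 2], toy_model, steps=4))
```

Here `generate([1, 2], toy_model, steps=4)` returns `[1, 2, 3, 6, 2, 4]`: the third generated token cannot be computed until the second one (6) exists, which is why per-token latency, not just raw parallel throughput, determines how fast an answer appears.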

Think of a relay race in which each runner (chip) hands the baton (data) straight to the next, so the race never stalls. The LPU specifically aims to address the two challenges LLMs pose for hardware: computational density and memory bandwidth.

From its inception, Groq took an innovative approach, prioritizing software and compiler innovation before hardware development. This ensured that software would orchestrate the communication between chips, so that they operate in a coordinated, efficient way, much like stations on a well-run production line.
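The relay-race and production-line analogies can be made concrete with a toy pipeline. In the Python sketch below, each hypothetical chip handles one stage with a latency (in clock cycles) that is known ahead of time, so the exact cycle at which every chip starts and finishes each input can be computed before anything runs, much as a compiler-driven design can plan inter-chip communication in advance. The stage latencies and the `static_schedule` helper are illustrative assumptions, not Groq’s compiler or its actual timings.

```python
from typing import Dict, List, Tuple

Schedule = Dict[Tuple[int, int], Tuple[int, int]]

def static_schedule(stage_cycles: List[int], num_inputs: int) -> Schedule:
    """Precompute the (start, end) cycle of every (input, chip) pair in a linear
    pipeline where each chip passes its result to the next one.

    Every latency is known in advance, so the whole timetable is fixed before
    execution: no runtime arbitration is needed to decide who goes when."""
    schedule: Schedule = {}
    for i in range(num_inputs):
        for s, cycles in enumerate(stage_cycles):
            # Input i may enter chip s only after (a) chip s-1 has finished input i
            # (the baton hand-off) and (b) chip s has finished input i-1.
            handoff_ready = schedule[(i, s - 1)][1] if s > 0 else 0
            chip_free = schedule[(i - 1, s)][1] if i > 0 else 0
            start = max(handoff_ready, chip_free)
            schedule[(i, s)] = (start, start + cycles)
    return schedule

# Hypothetical example: three chips, 3 cycles per stage, three inputs
timetable = static_schedule([3, 3, 3], num_inputs=3)
for (i, s), (start, end) in sorted(timetable.items()):
    print(f"input {i} on chip {s}: cycles {start}-{end}")
```

With three chips of 3 cycles each and three inputs, the last input leaves the last chip at cycle 15, and once the pipeline is full each chip starts new work every 3 cycles. Because the timetable is fixed in advance, no chip has to wait at runtime to learn when its data will arrive.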

As a result, the LPU handles language tasks quickly and efficiently, making it well suited to applications that interpret or generate text. This breakthrough has produced a system that surpasses conventional configurations not only in speed but also in cost-effectiveness and energy use. Such advances have significant implications for sectors like finance, government, and technology, where rapid and precise data processing is crucial.

Diving deep into Language Processing Units (LPUs)

Groq has published two papers that offer deeper insight into its architecture:

- one in 2020 titled: “Think Fast: A Tensor Streaming Processor (TSP) for Accelerating Deep Learning Workloads”

- another in 2022 called: “A Software-defined Tensor Streaming Multiprocessor for Large-scale Machine Learning”

It appears the designation “LPU” is a more recent term in Groq’s lexicon, as it doesn’t feature in either document.

However, it’s not time to discard your GPUs just yet. Although LPUs excel at inference (running already-trained models on new data), GPUs still dominate the model training phase. LPUs and GPUs could therefore form a formidable partnership in AI hardware, each specializing and leading in its own domain.

LPU vs. GPU

Let’s compare LPUs and GPUs to understand their distinct advantages and limitations more clearly.

GPUs: The versatile powerhouses

Graphics Processing Units, or GPUs, have moved far beyond their original purpose of rendering video game graphics to become key components of Artificial Intelligence (AI) and Machine Learning (ML) work. Their architecture is built for massive parallelism, allowing thousands of operations to execute simultaneously.

This makes them especially effective for workloads that parallelize well, accelerating everything from complex simulations to deep learning model training.

GPUs are also versatile: beyond AI, they handle a diverse range of workloads, including gaming and video rendering. Their parallelism significantly speeds up both the training and inference phases of ML models.

GPUs do have limitations, however. Their high performance comes at the cost of substantial energy consumption, which makes power efficiency a challenge. And while their general-purpose design is flexible, it does not always deliver the best possible efficiency for specific AI tasks.

LPUs: The language specialists

Language Processing Units represent the cutting edge of AI processor technology, with a design rooted in natural language processing (NLP) tasks. Unlike GPUs, LPUs are optimized for sequential processing, which suits the way language models generate text one token at a time. This specialization gives LPUs superior performance in NLP applications, where they outperform general-purpose processors in tasks such as translation and content generation. Their efficiency with language models could reduce both the time and the energy that NLP tasks require.

The specialization of LPUs is, however, a double-edged sword. While they excel at language processing, their narrower scope limits their versatility across the broader range of AI tasks. Moreover, as an emerging technology, LPUs still lack widespread support and availability, a gap that time and adoption may close.

| Feature | GPUs | LPUs |
| --- | --- | --- |
| Design Purpose | Originally for video game graphics | Specifically for natural language processing tasks |
| Advantages | Versatility, Parallel Processing | Specialization, Efficiency in NLP |
| Limitations | Energy Consumption, General Purpose Design | Limited Application Scope, Emerging Technology |
| Suitable for | AI/ML tasks, gaming, video rendering | NLP tasks (e.g., translation, content generation) |
| Processing Type | Parallel | Sequential |
| Energy Efficiency | Lower due to high-performance tasks | Potentially higher due to optimization for specific tasks |

What about NPUs?

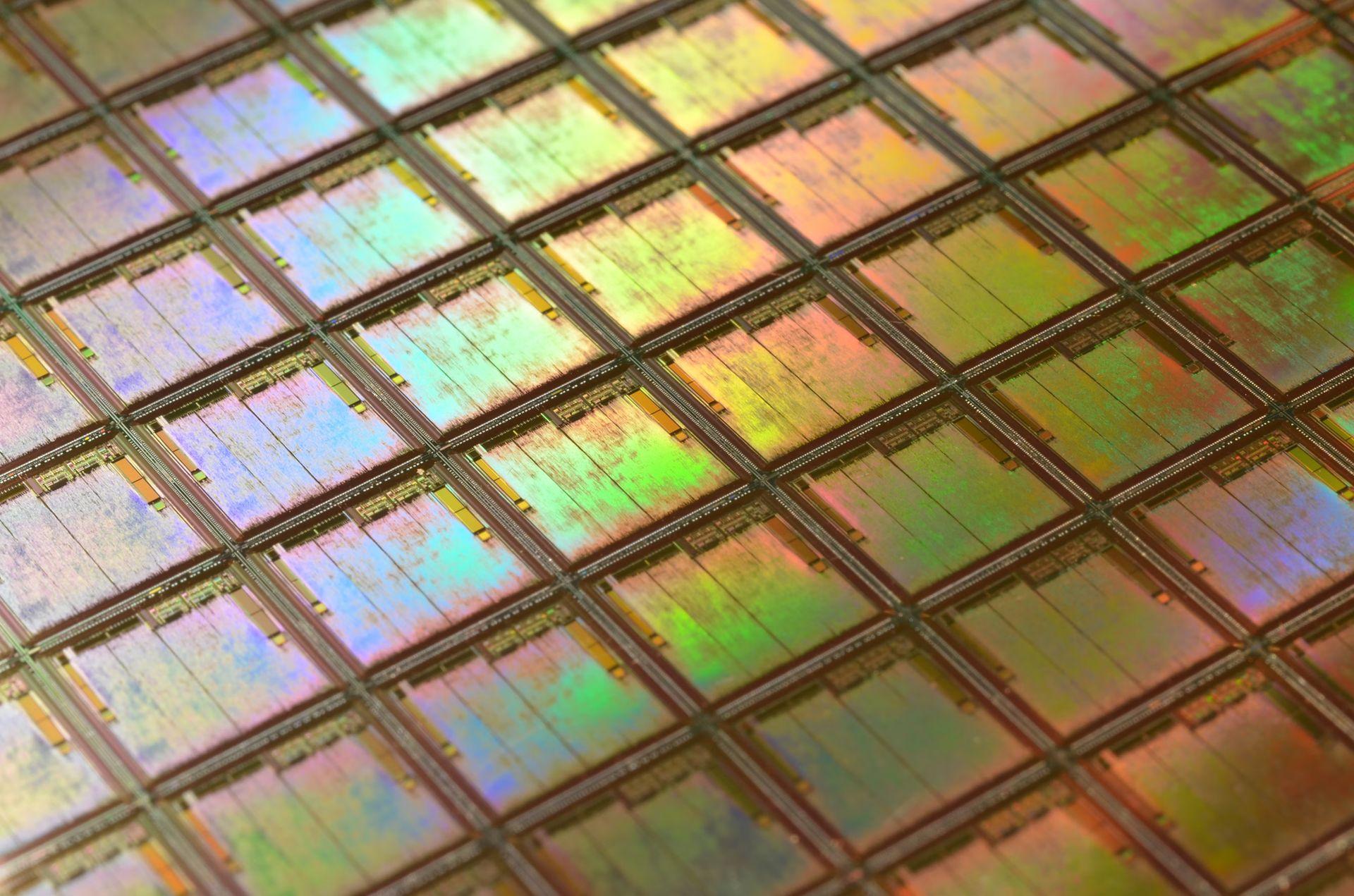

GPUs, despite their versatility and power, come with significant manufacturing challenges and high costs due to the complexity of their design and fabrication process. As AI models grow in sophistication, the limitations of GPUs in terms of energy efficiency and task specialization become more apparent. This has led to the emergence of Neural Processing Units (NPUs), specialized chips designed specifically for neural network computations. Unlike GPUs, which are general-purpose processors optimized for parallel processing, NPUs are architected to handle the dense matrix operations and tensor manipulations that are core to machine learning and AI workloads.


NPUs build on advances in hardware acceleration for deep learning, with dedicated pathways for operations such as matrix multiplication, activation functions, and gradient calculations, which can make them more efficient than GPUs for the training and inference workloads they target. Their architecture minimizes data-transfer bottlenecks by keeping computations local and optimizing memory access patterns, which reduces power consumption while maximizing throughput.
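To illustrate what dedicated matrix-multiplication and activation pathways plus localized computation mean in practice, the Python sketch below computes a dense neural-network layer, `relu(x @ w + b)`, and applies the activation before each result is written out, so the intermediate pre-activation matrix is never stored. This kind of operator fusion is one common way accelerators cut memory traffic; the function name and data are hypothetical, and real NPUs implement this in hardware rather than in Python loops.

```python
from typing import List

Matrix = List[List[float]]

def relu(v: float) -> float:
    return v if v > 0.0 else 0.0

def fused_dense_relu(x: Matrix, w: Matrix, b: List[float]) -> Matrix:
    """Compute relu(x @ w + b), one output element at a time.

    The running sum lives in a local accumulator and the activation is applied
    before the value is written out, so the pre-activation matrix x @ w + b is
    never materialized in memory."""
    rows, inner, cols = len(x), len(w), len(w[0])
    if any(len(row) != inner for row in x) or len(b) != cols:
        raise ValueError("shape mismatch")
    out: Matrix = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = b[j]                      # start from the bias
            for k in range(inner):
                acc += x[i][k] * w[k][j]    # multiply-accumulate
            out[i][j] = relu(acc)           # activation fused into the write-out
    return out

print(fused_dense_relu([[1.0, -2.0]], [[1.0, 0.5], [1.0, -1.0]], [0.0, 0.0]))
```

For `x = [[1.0, -2.0]]`, `w = [[1.0, 0.5], [1.0, -1.0]]`, and `b = [0.0, 0.0]`, the pre-activation values are -1.0 and 2.5, so the layer returns `[[0.0, 2.5]]`.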

NPUs could become a dominant force in AI hardware, replacing GPUs in various specialized applications. Because they are less complex and less costly to produce, NPUs could make high-performance AI systems more accessible, particularly in edge computing environments, where power and space constraints make traditional GPUs impractical.

What about TPUs?

Tensor Processing Units (TPUs) are another specialized class of AI accelerators, developed by Google specifically for deep learning tasks. Unlike GPUs, which are general-purpose and excel at parallel processing across a wide variety of tasks, TPUs are purpose-built to handle the specific matrix operations central to machine learning algorithms, particularly in training and inference for neural networks.

TPUs are built around a dataflow architecture, in which large amounts of data are processed in parallel as they move through the chip. This lets TPUs efficiently handle the computationally intense operations involved in training deep learning models, such as matrix multiplications and convolutions, with specialized hardware for common AI operations like softmax, ReLU, and pooling layers.
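A common way to build this kind of dataflow hardware, and the approach Google described for the matrix unit of its first TPU, is a systolic array: a grid of simple multiply-accumulate cells that pass operands to their neighbors every clock cycle. The Python sketch below simulates a small output-stationary systolic array, in which each cell keeps one element of the result. This is a simplified teaching variant (the original TPU’s array is weight-stationary), and `systolic_matmul` is a hypothetical illustration rather than Google’s design.

```python
from typing import List

Matrix = List[List[float]]

def systolic_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Simulate an output-stationary systolic array computing a @ b.

    Processing element (PE) (i, j) keeps the running sum for c[i][j]. Each clock
    cycle, values of `a` move one PE to the right and values of `b` move one PE
    down, so operands are reused as they flow instead of being re-read from memory.
    Row i of `a` and column j of `b` enter with a skew of i and j cycles, so the
    matching operands a[i][k] and b[k][j] meet at PE (i, j) on the same cycle."""
    m, inner, n = len(a), len(b), len(b[0])
    a_reg = [[0.0] * n for _ in range(m)]   # value each PE passes to the right
    b_reg = [[0.0] * n for _ in range(m)]   # value each PE passes downward
    acc = [[0.0] * n for _ in range(m)]
    for t in range(m + n + inner - 2):      # cycles until the last operands meet
        new_a = [[0.0] * n for _ in range(m)]
        new_b = [[0.0] * n for _ in range(m)]
        for i in range(m):
            for j in range(n):
                if j == 0:                  # left edge: feed skewed row i of a
                    k = t - i
                    new_a[i][j] = a[i][k] if 0 <= k < inner else 0.0
                else:                       # take the value from the left neighbor
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:                  # top edge: feed skewed column j of b
                    k = t - j
                    new_b[i][j] = b[k][j] if 0 <= k < inner else 0.0
                else:                       # take the value from the neighbor above
                    new_b[i][j] = b_reg[i - 1][j]
                acc[i][j] += new_a[i][j] * new_b[i][j]
        a_reg, b_reg = new_a, new_b
    return acc

print(systolic_matmul([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]))
```

Multiplying `[[1, 2], [3, 4]]` by `[[5, 6], [7, 8]]` this way yields the ordinary matrix product `[[19.0, 22.0], [43.0, 50.0]]` after `m + n + inner - 2` clock cycles (here 4). Each operand is fed in once and then reused as it travels across the grid, which is a key reason this design can reach high throughput at low power.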

One of the key advantages of TPUs is their ability to provide higher throughput while consuming less power compared to GPUs. Their design focuses on accelerating tensor computations, which makes them incredibly efficient for large-scale AI workloads. This is particularly important for enterprises like Google that rely on processing vast amounts of data for applications such as search, translation, and image recognition. By offloading these workloads to TPUs, companies can achieve significant reductions in both time and energy consumption.

TPUs have been deployed extensively in Google’s cloud infrastructure, available to customers as part of Google Cloud’s AI offerings. They are particularly well-suited for training large models, such as those used in natural language processing (NLP), image classification, and recommendation systems. However, like LPUs and NPUs, TPUs are specialized processors that shine in specific areas but may not be as versatile for tasks outside their design scope.

Recent academic research sheds light on how TPUs compare with GPUs on deep learning tasks, especially in terms of speed, efficiency, and energy consumption. Because TPUs specialize in high-throughput tensor operations, they can significantly outperform GPUs in specific scenarios, such as training large-scale models and performing inference.

For example, when training the ResNet-50 model on the CIFAR-10 dataset, an NVIDIA Tesla V100 GPU takes approximately 40 minutes for ten epochs, whereas a Google Cloud TPU v3 completes the same task in just 15 minutes.
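The reported times translate into a simple speedup calculation, sketched below in Python using only the figures quoted above (ten epochs, about 40 minutes on a V100 versus 15 minutes on a TPU v3). The helper names are illustrative.

```python
def speedup(baseline_minutes: float, accelerated_minutes: float) -> float:
    """How many times faster the accelerated run is than the baseline run."""
    return baseline_minutes / accelerated_minutes

def minutes_per_epoch(total_minutes: float, epochs: int) -> float:
    """Average wall-clock minutes per training epoch."""
    return total_minutes / epochs

# Figures quoted in the text: ResNet-50 on CIFAR-10, ten epochs
V100_MINUTES, TPU_V3_MINUTES, EPOCHS = 40.0, 15.0, 10

print(f"TPU v3 speedup over V100: {speedup(V100_MINUTES, TPU_V3_MINUTES):.2f}x")
print(f"per epoch: V100 {minutes_per_epoch(V100_MINUTES, EPOCHS):.1f} min, "
      f"TPU v3 {minutes_per_epoch(TPU_V3_MINUTES, EPOCHS):.1f} min")
print(f"time saved: {1 - TPU_V3_MINUTES / V100_MINUTES:.1%}")
```

That works out to roughly a 2.7x speedup (about 4 minutes per epoch versus 1.5), or about 62.5% less wall-clock time for the same training run.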

Will Groq LPU transform the future of AI inference?

The LPU vs GPU debate has been growing. Groq first piqued interest late last year, when its public relations team heralded it as a key player in AI development. Despite that initial curiosity, a conversation with the company’s leadership was delayed by scheduling conflicts.

Interest was reignited by a desire to understand whether the company is another fleeting moment in the AI hype cycle, where publicity seems to drive recognition, or whether its LPUs truly represent a revolutionary step in AI inference. There were also questions about how the company’s relatively small team was coping with its sudden burst of recognition in the tech hardware scene.

A key moment came when a social media post drastically increased interest in the company, generating thousands of inquiries about access to its technology within a single day. The company’s founder shared these details during a video call, describing the overwhelming response and explaining that access was currently offered for free because the company did not yet have a billing system.

The founder, Jonathan Ross, is no novice to Silicon Valley’s startup ecosystem and has championed the company’s technological potential since its founding in 2016. His earlier work leading the TPU project at Google laid the foundation for the new venture. That experience shaped the company’s distinctive approach to hardware development, which focused on the user experience from the outset and directed significant early effort toward software tools before moving on to the physical design of the chip.

This story points to a significant shift toward specialized processors like LPUs, which could usher in a new era of more efficient, targeted AI inference. As the industry continues to evaluate such innovations, the potential for LPUs to redefine computation in AI applications remains a compelling discussion point, one that suggests a transformative future for AI technology.

Image credits: Kerem Gülen/Midjourney