Have you ever wished your still photos could speak or sing? Meet EMO, short for Emote Portrait Alive. Developed by researchers at Alibaba’s Institute for Intelligent Computing, EMO is an artificial intelligence system designed to do just that.

EMO takes a unique approach to animation, bypassing complex 3D models by directly converting audio into video frames. This means your animated videos retain the natural movements and expressions of speech or song, all from a single photo and audio clip.

Alibaba AI: What is Emote Portrait Alive (EMO)?

EMO's primary function is to animate static portrait photos, creating videos in which the subject appears to talk or sing realistically.

What sets EMO apart is its approach to generating these animations. Rather than relying on traditional methods that often struggle to capture the nuances of human expression, EMO directly converts audio waveforms into video frames. This means it doesn’t need intermediate 3D models or facial landmarks to generate animations. Instead, it focuses on capturing subtle facial movements and individual facial styles associated with natural speech.

"Just in 👀 this is the most amazing audio2video I have ever seen. It is called EMO: Emote Portrait Alive pic.twitter.com/3b1AQMzPYu" (Stelfie the Time Traveller, @StelfieTT, February 28, 2024)

EMO is built on a diffusion model, a type of generative model known for producing realistic synthetic imagery. To train the system, researchers used a large dataset of talking head videos drawn from speeches, films, TV shows, and musical performances. This training enables EMO to produce high-quality, expressive videos while preserving the identity of the subject.

In addition to generating conversational videos, EMO can also animate singing portraits. By synchronizing mouth shapes and facial expressions with the vocals, it can create singing videos in different styles and durations.

While EMO presents exciting possibilities for personalized video content creation, it also raises ethical concerns. There is a risk of misuse, such as impersonation or the spread of misinformation, so the technology should be deployed with caution and with appropriate safeguards in place.


How does EMO work?

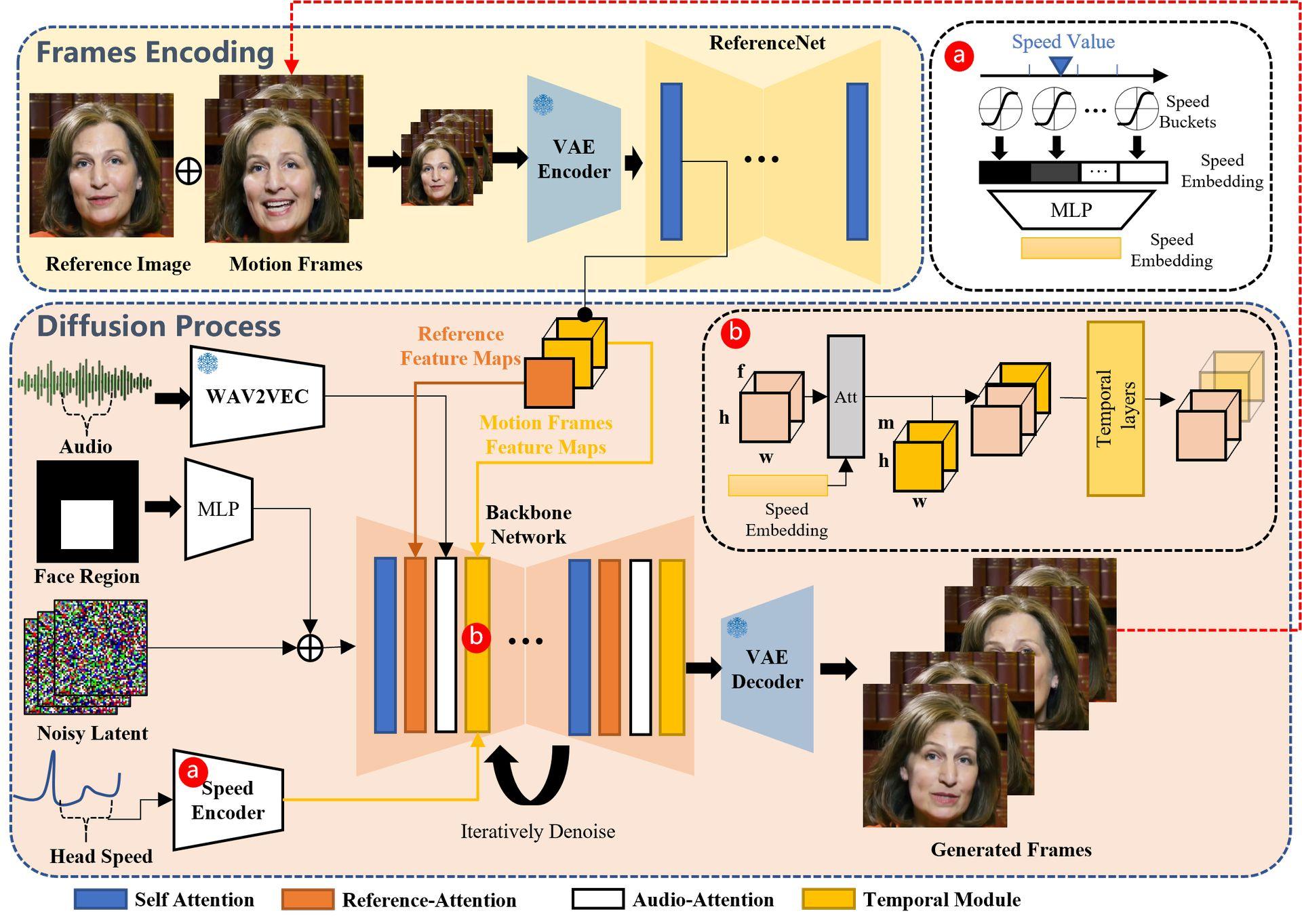

The EMO system operates in two main stages: Frames Encoding and Diffusion Process.

- Frames encoding: Extracts features from reference images and motion frames to establish the foundation for animation.

- Diffusion process: A pretrained audio encoder processes the audio input, and facial region masks are combined with multi-frame noise to guide animation generation. A Backbone Network then denoises the frames, aided by Reference-Attention and Audio-Attention mechanisms, while Temporal Modules adjust motion velocity.
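To make the two stages more concrete, here is a deliberately tiny Python sketch of the data flow described above. It is not Alibaba's implementation: every function name and parameter (`encode_frames`, `encode_audio`, `denoise_step`, `temporal_smooth`, `generate`) is hypothetical, images are stood in for by short lists of numbers, and a simple nudge toward the conditioning signals replaces the learned noise prediction a real diffusion model would use.

```python
import math
import random


def attend(query, keys):
    """Softmax attention in which the keys also serve as the values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [
        sum(w * key[i] for w, key in zip(weights, keys)) / total
        for i in range(len(query))
    ]


def encode_frames(reference_image, motion_frames):
    """Stage 1 (Frames Encoding): toy features are the raw vectors themselves."""
    return [reference_image] + motion_frames


def encode_audio(samples, num_frames, dim):
    """Stand-in for a pretrained audio encoder: one energy vector per frame."""
    window = max(1, len(samples) // num_frames)
    features = []
    for f in range(num_frames):
        chunk = samples[f * window:(f + 1) * window] or [0.0]
        energy = sum(abs(s) for s in chunk) / len(chunk)
        features.append([energy] * dim)
    return features


def denoise_step(frames, ref_feats, audio_feats, face_mask, rate=0.5):
    """One toy denoising step of the backbone (assumption: a nudge, not a U-Net)."""
    updated = []
    for frame, audio in zip(frames, audio_feats):
        identity = attend(frame, ref_feats)  # Reference-Attention: keep identity
        motion = attend(frame, [audio])      # Audio-Attention: follow the audio
        target = [i + m for i, m in zip(identity, motion)]
        # The face mask limits this update to the masked (face) positions.
        updated.append(
            [x + rate * m * (t - x) for x, t, m in zip(frame, target, face_mask)]
        )
    return updated


def temporal_smooth(frames):
    """Toy temporal module: average each frame with its direct neighbours."""
    smoothed = []
    for i in range(len(frames)):
        neighbours = frames[max(0, i - 1):i + 2]
        smoothed.append([sum(col) / len(neighbours) for col in zip(*neighbours)])
    return smoothed


def generate(reference_image, motion_frames, samples, num_frames=4, steps=10, seed=0):
    """Stage 2 (Diffusion Process): start from noise and denoise iteratively."""
    rng = random.Random(seed)
    dim = len(reference_image)
    ref_feats = encode_frames(reference_image, motion_frames)
    audio_feats = encode_audio(samples, num_frames, dim)
    # Hypothetical mask: treat the first half of each vector as the face region.
    face_mask = [1.0] * (dim // 2) + [0.0] * (dim - dim // 2)
    frames = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_frames)]
    for _ in range(steps):
        frames = denoise_step(frames, ref_feats, audio_feats, face_mask)
        frames = temporal_smooth(frames)
    return frames


if __name__ == "__main__":
    portrait = [1.0, 0.5, 0.0, -0.5]
    previous = [[0.9, 0.4, 0.1, -0.4]]
    audio = [0.1, -0.2, 0.3, -0.4, 0.5, -0.6, 0.7, -0.8]
    video = generate(portrait, previous, audio)
    print(len(video), "frames generated")
```

The point of the sketch is the ordering: reference and motion features are prepared once, then every denoising step consults both the reference features and the per-frame audio features before a temporal pass ties neighbouring frames together.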

What can you do with EMO?

EMO offers a versatile tool for creating lifelike animated videos, expanding possibilities for personalized and expressive content creation, such as:

- Singing: Generates vocal avatar videos with expressive faces synced to singing audio inputs.

- Language & Style: Supports diverse languages and portrait styles, capturing tonal variations for dynamic avatar animations.

- Rapid rhythm: Ensures synchronization of character animations with fast-paced rhythms.

- Talking: Animates portraits in response to spoken audio inputs in various languages and styles.

- Cross-actor performance: Portrays characters from movies or other media in multilingual and multicultural contexts.

In summary, EMO, also known as Emote Portrait Alive, is a significant advancement in animation technology. It can turn still pictures into lively videos where subjects appear to talk or sing realistically. EMO achieves this by directly converting audio into video frames, accurately capturing facial expressions and movements. While EMO offers exciting possibilities for creating dynamic visual content, ethical concerns about its potential misuse must be addressed. Nonetheless, EMO presents a valuable tool for bringing still images to life and can potentially transform how we interact with visual media in the future.

For more detailed information, see the EMO research paper.

Featured image credit: EMO: Emote Portrait Alive research