- Cerebras Systems unveils the Wafer Scale Engine 3 (WSE-3), touted as the world’s most advanced AI chip, powering the CS-3 AI supercomputer with a peak performance of 125 petaFLOPS.

- The WSE-3 chip packs four trillion transistors and, according to Cerebras, delivers double the performance of its predecessor at the same power draw and price, which promises faster and more efficient AI training.

- The CS-3 system, with 900,000 AI cores and up to 1.2 Petabytes of external memory, positions itself theoretically among the top 10 supercomputers globally, showcasing unprecedented potential for AI model training and scalability.

AI technology, heralded by some as a trailblazing innovation and criticized by others as a boon for the elite, now has a new asset in its arsenal. Cerebras Systems has lifted the veil on what it claims is the planet’s most advanced AI chip, the Wafer Scale Engine 3 (WSE-3). The chip drives the Cerebras CS-3 AI supercomputer, which the company rates at a peak of 125 petaFLOPS and which can be clustered across up to 2,048 systems.

What does Cerebras’ WSE-3 have to offer?

Key specs detailed in the press release:

- 4 trillion transistors

- 900,000 AI cores

- 125 petaFLOPS of peak AI performance

- 44GB on-chip SRAM

- 5nm TSMC process

- External memory: 1.5TB, 12TB, or 1.2PB

- Trains AI models up to 24 trillion parameters

- Cluster size of up to 2048 CS-3 systems

Before an AI model can generate those captivating videos and images, it has to be trained on colossal amounts of data, a process that consumes as much energy as more than 100 households. This chip, along with the computing systems built around it, promises to make that training phase significantly faster and more efficient.

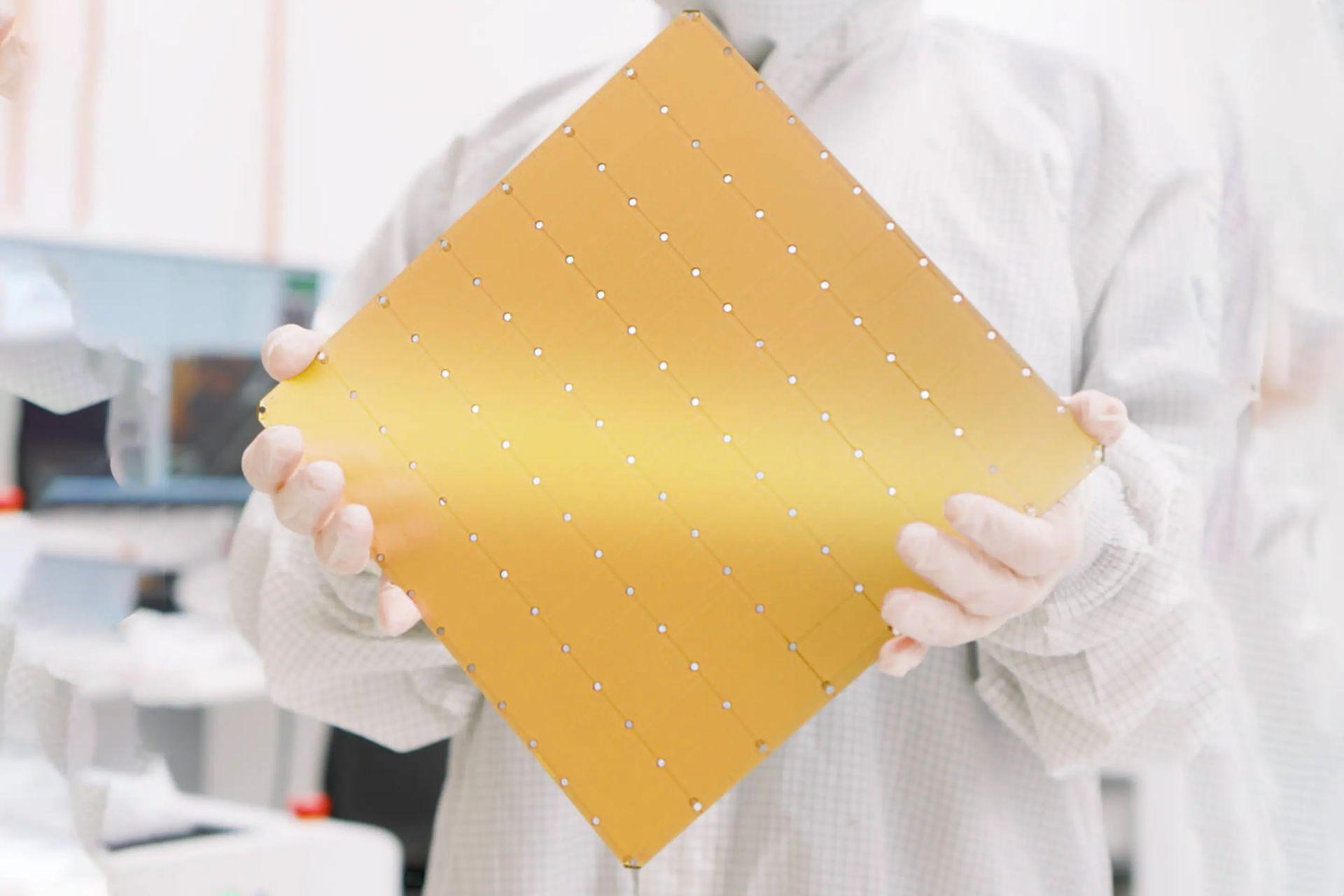

The WSE-3 chip, roughly the size of a board game box, packs four trillion transistors and, according to Cerebras, delivers double the performance of its predecessor, the WSE-2 (the previous record holder for speed), at the same power draw and price. Installed in the CS-3 system, the chip promises the computational power of an entire server room in a unit the size of a mini-fridge.

Cerebras highlights that the CS-3 boasts 900,000 AI cores and 44 GB of on-chip SRAM, capable of hitting a peak AI performance of 125 petaFLOPS. This impressive specification theoretically positions the CS-3 among the elite top 10 supercomputers globally. However, without benchmark testing, the actual performance in real-world applications remains speculative.

Addressing the insatiable data demands of AI, the CS-3 offers external memory configurations ranging from 1.5 TB to an expansive 1.2 Petabytes (PB), equivalent to 1,200 TB. This capacity enables the training of AI models with up to 24 trillion parameters, dwarfing the size of most current AI models, which count their parameters in the billions, with GPT-4 estimated at around 1.8 trillion. Cerebras suggests the CS-3 could train models with one trillion parameters as effortlessly as contemporary GPU-based systems handle models with one billion parameters.
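As a rough sanity check on these figures, the short sketch below divides the largest quoted memory configuration by the largest quoted model size. It assumes decimal (SI) units, which the article does not specify, and the function name is purely illustrative. The article also does not say how that memory is actually used during training, so the result is only a ratio of the two headline numbers.

```python
# Assumption: decimal (SI) units, i.e. 1 TB = 10**12 bytes and 1 PB = 10**15 bytes.
TB = 10**12
PB = 10**15

max_external_memory_bytes = 1200 * TB   # 1.2 PB, the largest configuration quoted
max_parameters = 24 * 10**12            # 24 trillion parameters


def bytes_per_parameter(memory_bytes: int, parameters: int) -> float:
    """Return how many bytes of memory are available per model parameter."""
    return memory_bytes / parameters


# 1.2 PB and 1,200 TB are the same quantity under the decimal-unit assumption.
print(max_external_memory_bytes == 12 * PB // 10)  # True

# Ratio of the two headline figures: bytes of external memory per parameter.
print(bytes_per_parameter(max_external_memory_bytes, max_parameters))  # 50.0
```

Under those assumptions, the top configuration works out to about 50 bytes of external memory for each of the 24 trillion parameters.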

The wafer-scale production process behind the WSE-3 chips allows for the CS-3’s scalable design. It supports clusters of up to 2,048 units, which at 125 petaFLOPS each adds up to a peak of 256 exaFLOPS. On paper, that would dwarf the world’s leading supercomputers, which currently operate just above one exaFLOPS. Cerebras claims this capability could train a Llama 70B model from scratch in a single day.
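The 256 exaFLOPS figure follows directly from the per-system peak and the maximum cluster size. The sketch below reproduces that arithmetic. It assumes perfectly linear scaling across all units, which is a theoretical ceiling rather than a measured result, and the function name is illustrative.

```python
PETA = 10**15
EXA = 10**18


def cluster_peak_flops(units: int, per_unit_petaflops: int) -> int:
    """Theoretical peak of a cluster, assuming perfectly linear scaling."""
    return units * per_unit_petaflops * PETA


# 2,048 CS-3 systems at 125 petaFLOPS each, the figures quoted by Cerebras.
peak = cluster_peak_flops(units=2048, per_unit_petaflops=125)
print(peak / EXA)  # 256.0 (exaFLOPS)
```

Real clusters lose some throughput to communication and synchronization, so sustained performance would be lower than this ceiling.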

Featured image credit: Kerem Gülen/Midjourney