NVIDIA NIM boosts developer productivity by offering a standardized way to embed generative AI into applications.

What is NVIDIA NIM?

NVIDIA NIM (NVIDIA Inference Microservices) is a platform designed to enhance the deployment and integration of generative AI models. It provides a standardized approach for embedding generative AI into various applications, significantly boosting developer productivity and optimizing infrastructure investments.

Features of NVIDIA NIM

Key features and capabilities of NVIDIA NIM include:

- Streamlined deployment: NVIDIA NIM containers come pre-built with essential software such as NVIDIA CUDA, NVIDIA Triton Inference Server, and NVIDIA TensorRT-LLM, enabling efficient GPU-accelerated inference.

- Support for multiple models: The platform supports a wide range of AI models, including Databricks DBRX, Google’s Gemma, Meta Llama 3, Microsoft Phi-3, and Mistral Large, making them accessible as endpoints on ai.nvidia.com.

- Integration with popular platforms: NVIDIA NIM can be integrated with platforms like Hugging Face, allowing developers to easily access and run models using NVIDIA-powered inference endpoints.

- Extensive ecosystem support: It is supported by numerous platform providers (Canonical, Red Hat, Nutanix, VMware) and integrated into major cloud platforms (Amazon Web Services, Google Cloud, Azure, Oracle Cloud Infrastructure).

- Industry adoption: Leading companies across various industries, such as Foxconn, Pegatron, Amdocs, and ServiceNow, are leveraging NVIDIA NIM for applications like AI factories, smart cities, electric vehicles, customer billing, and multimodal AI models.

- Developer access: Members of the NVIDIA Developer Program can access NIM for free for research, development, and testing, facilitating experimentation and innovation in AI applications.

Nearly 200 technology partners, including Cadence, Cloudera, Cohesity, DataStax, NetApp, Scale AI, and Synopsys, are incorporating NVIDIA NIM to speed up generative AI deployments tailored for specific applications like copilots, coding assistants, and digital human avatars. Additionally, Hugging Face is introducing NVIDIA NIM, beginning with Meta Llama 3.

Jensen Huang, NVIDIA’s founder and CEO, stressed the accessibility and significance of NVIDIA NIM, saying, “Every enterprise aims to integrate generative AI into its operations, but not all have a team of dedicated AI researchers.” NVIDIA NIM makes it possible for almost any organization to utilize generative AI.

Businesses can implement AI applications using NVIDIA NIM via the NVIDIA AI Enterprise software platform. Starting next month, NVIDIA Developer Program members will have free access to NVIDIA NIM for research, development, and testing on their chosen infrastructure.

NVIDIA NIM containers are designed to streamline model deployment for GPU-accelerated inference and come equipped with NVIDIA CUDA software, NVIDIA Triton Inference Server, and NVIDIA TensorRT-LLM software. Over 40 models, such as Databricks DBRX, Google’s Gemma, Meta Llama 3, Microsoft Phi-3, and Mistral Large, are accessible as NIM endpoints on ai.nvidia.com.
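The hosted endpoints on ai.nvidia.com are commonly described as exposing an OpenAI-style REST interface. If that holds, you can list the available models with a `/models` route. The minimal sketch below makes several assumptions that are not in the article. The base URL is assumed to be `https://integrate.api.nvidia.com/v1`. The API key is read from an environment variable named `NVIDIA_API_KEY`, a name chosen for illustration. The response is assumed to have the shape `{"data": [{"id": ...}]}`. Verify all three against NVIDIA's current documentation. A self-hosted NIM container would use its own address as the base URL instead.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible base URL for NVIDIA's hosted endpoints (verify in NVIDIA's docs).
DEFAULT_BASE_URL = "https://integrate.api.nvidia.com/v1"


def parse_model_ids(payload: dict) -> list:
    """Extract model IDs from an OpenAI-style list: {"data": [{"id": ...}, ...]}."""
    return sorted(item["id"] for item in payload.get("data", []))


def list_models(base_url: str = DEFAULT_BASE_URL) -> list:
    """Fetch <base_url>/models and return the sorted model IDs.

    Needs network access; the NVIDIA_API_KEY variable name is an illustrative assumption.
    """
    request = urllib.request.Request(
        f"{base_url}/models",
        headers={"Authorization": f"Bearer {os.environ['NVIDIA_API_KEY']}"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_model_ids(json.load(response))
```

Calling `list_models()` with a valid key would print nothing by itself. Iterate over the returned list to see the catalog entries. Keeping the parsing separate from the HTTP call means the parsing can be checked without network access.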

Through the Hugging Face AI platform, developers can easily access NVIDIA NIM microservices for Meta Llama 3 models. This integration allows them to efficiently run Llama 3 NIM using Hugging Face Inference Endpoints powered by NVIDIA GPUs.

Numerous platform providers, including Canonical, Red Hat, Nutanix, and VMware, support NVIDIA NIM on both open-source KServe and enterprise solutions. AI application companies like Hippocratic AI, Glean, Kinetica, and Redis are using NIM to drive generative AI inference. Leading AI tools and MLOps partners, such as Amazon SageMaker, Microsoft Azure AI, Dataiku, and DataRobot, have integrated NIM into their platforms, enabling developers to create and deploy domain-specific generative AI applications with optimized inference.


Global system integrators and service delivery partners, including Accenture, Deloitte, Infosys, LatentView, Quantiphi, SoftServe, TCS, and Wipro, have developed NIM expertise to help enterprises rapidly develop and deploy production AI strategies. Enterprises can run NIM-enabled applications on NVIDIA-Certified Systems from manufacturers such as Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro, as well as on servers from ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, Pegatron, QCT, Wistron, and Wiwynn. NIM microservices are also integrated into major cloud platforms, including Amazon Web Services, Google Cloud, Azure, and Oracle Cloud Infrastructure.

Leading companies are leveraging NIM for diverse applications across industries. Foxconn uses NIM for domain-specific LLMs in AI factories, smart cities, and electric vehicles. Pegatron employs NIM for Project TaME to advance local LLM development for various industries. Amdocs uses NIM for a customer billing LLM, significantly reducing costs and latency while improving accuracy. ServiceNow integrates NIM microservices within its Now AI multimodal model, providing customers with fast and scalable LLM development and deployment.

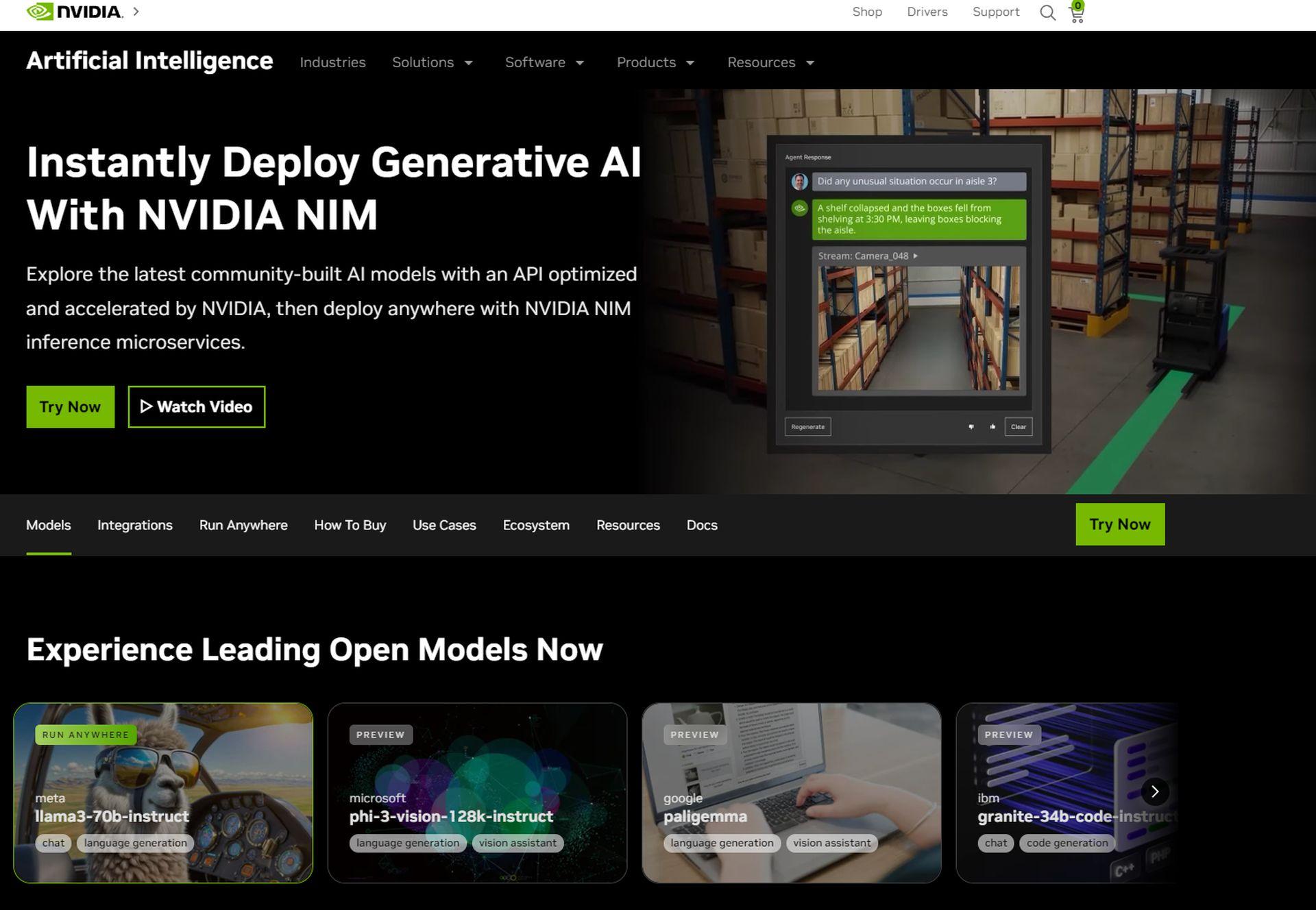

How can you try NVIDIA NIM?

Using NVIDIA NIM to deploy and experiment with generative AI models is straightforward. Here is a step-by-step guide to help you get started:

- Visit the NVIDIA NIM website:

- Open your web browser and navigate to ai.nvidia.com.

- Start exploring:

- On the homepage, you’ll find an option to “Try Now.” Click on this button to begin.

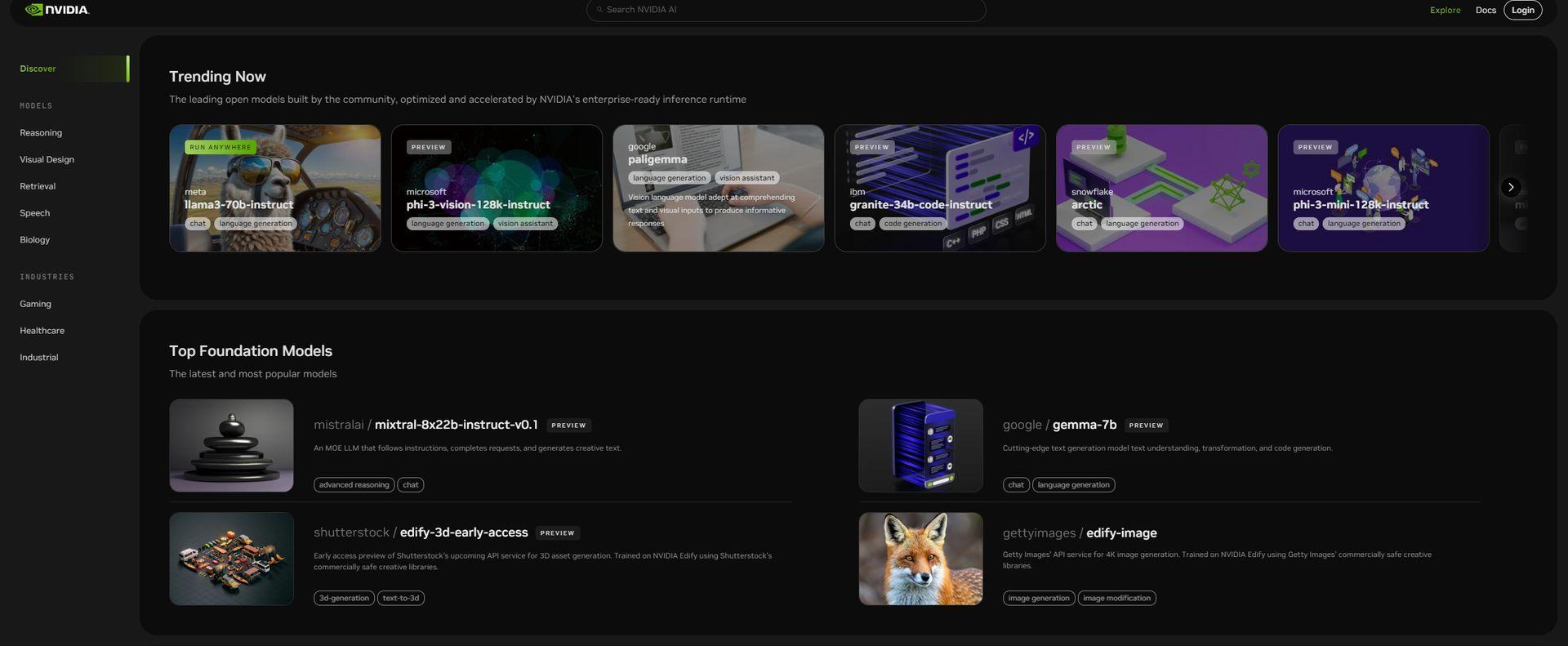

- Select your model:

- You will be directed to a page showcasing various models. For this example, select the “llama3-70b-instruct” model by Meta. This model is designed for language generation tasks.

- Deploy the model:

- Once you select the model, you will see an interface with options to deploy the model. Click on “Run Anywhere” to proceed.

- Use the inference endpoint:

- After deployment, you will have access to the Hugging Face Inference Endpoints powered by NVIDIA GPUs. This allows you to run the model efficiently.

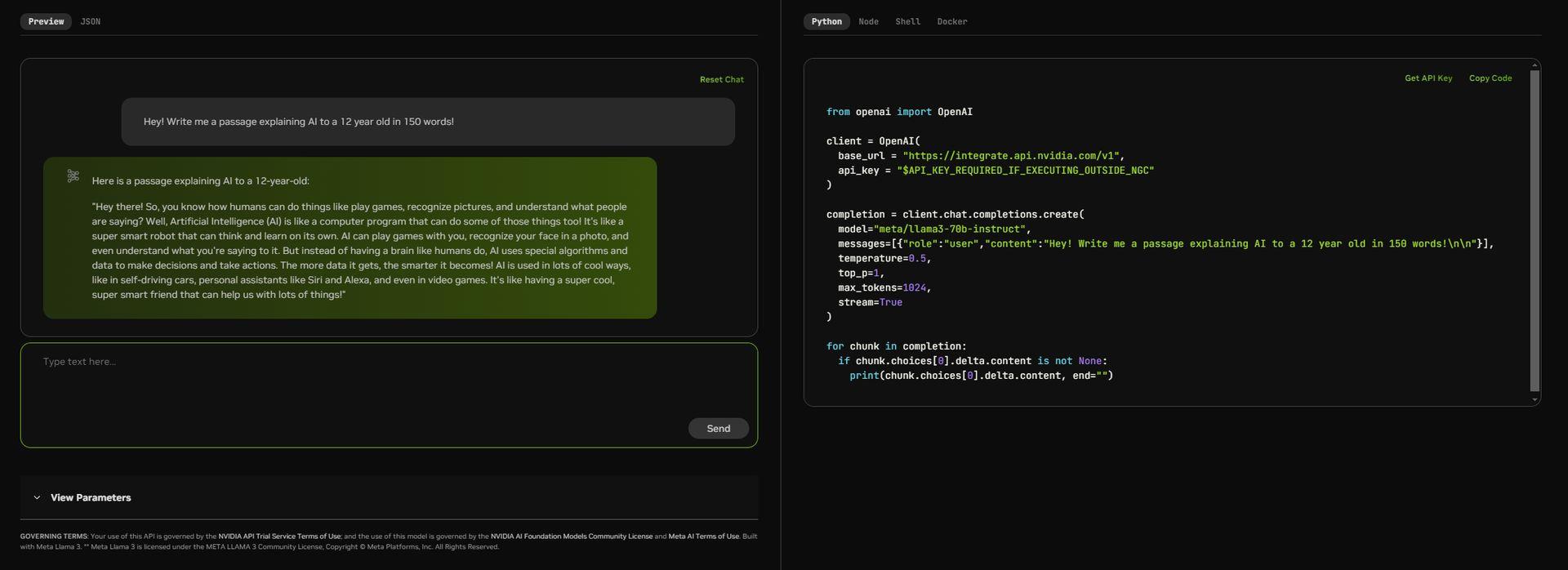

- Enter your prompt:

- In the interface, you will find a text box where you can enter your prompt. For instance, you can type “Hey! Write me a passage explaining AI to a 12-year-old in 150 words!”

- Execute the prompt:

- Click on the “Send” button to execute the prompt. The model will generate a response based on your input.

- View the output:

- The generated response will be displayed in the output section. You can review the text generated by the model and use it as needed.

- Explore additional features:

- NVIDIA NIM also allows you to tweak parameters and view the JSON code for more advanced usage. Explore these options to customize the output further.

- Free access for developers:

- If you’re a developer, you can access NVIDIA NIM microservices for research, development, and testing at no charge by registering through the NVIDIA Developer Program.
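The web steps above, together with the "tweak parameters" and "view the JSON code" options, can also be reproduced programmatically. The minimal sketch below assumes an OpenAI-style chat completions route. It also assumes the following values, none of which come from the article:

- the base URL `https://integrate.api.nvidia.com/v1`;
- the model ID `meta/llama3-70b-instruct`;
- an API key in a `NVIDIA_API_KEY` environment variable, a name chosen for illustration;
- the default values for `temperature`, `top_p`, and `max_tokens`.

Check each against the model's page in the catalog before use.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumed values: confirm the base URL and model ID on the model's catalog page.
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL_ID = "meta/llama3-70b-instruct"


def build_chat_request(prompt: str, temperature: float = 0.5, top_p: float = 1.0,
                       max_tokens: int = 1024) -> dict:
    """Build an OpenAI-style chat completion body.

    The keyword arguments stand in for the sampling parameters the web interface
    lets you tweak; the defaults are illustrative, not NVIDIA's documented defaults.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
        "stream": False,  # ask for one complete JSON response rather than streamed chunks
    }


def send_chat_request(body: dict, api_key: Optional[str] = None) -> dict:
    """POST the body to the chat completions route and return the decoded JSON reply."""
    key = api_key or os.environ["NVIDIA_API_KEY"]  # illustrative environment variable name
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.load(response)
```

For example, `send_chat_request(build_chat_request("Hey! Write me a passage explaining AI to a 12-year-old in 150 words!"))` would submit the same prompt used in the walkthrough. Building the payload separately from sending it lets you inspect or log the JSON before any network call is made.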

Example interaction

Here is an example of using the model:

- Prompt: “Hey! Write me a passage explaining AI to a 12-year-old in 150 words!”

- Response: “Hey there! So, you know how humans can do things like play games, recognize pictures, and understand what people are saying? Well, Artificial Intelligence (AI) is like a computer program that can do some of those things too! It’s like a super smart robot that can think and learn on its own. AI can play games with you, recognize your face in a photo, and even understand what you’re saying to it. But instead of having a brain like humans do, AI uses special algorithms and data to make decisions and take actions. The more data it gets, the smarter it becomes! AI is used in lots of cool ways, like in self-driving cars, personal assistants like Siri and Alexa, and even in video games. It’s like having a super cool, super smart friend that can help us with lots of things!”
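Models do not always follow length instructions such as "in 150 words" exactly, so it can help to check the output programmatically. The minimal sketch below assumes the response uses the OpenAI-style `choices[0].message.content` layout, which is an assumption about the endpoint rather than something the article states. It counts whitespace-separated words, a rough measure in which punctuation stays attached to its word.

```python
def extract_reply(response: dict) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"]["content"]


def word_count(text: str) -> int:
    """Count whitespace-separated words as a rough check against a requested length."""
    return len(text.split())
```

Pairing `word_count(extract_reply(response))` with the requested length gives a quick signal of whether the model followed the instruction. A stricter check would also need a policy for hyphenated words and numbers.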

Featured image credit: Kerem Gülen/Midjourney