ComfyUI Stable Diffusion 3 is a tool for generating high-quality images from text descriptions. It builds on earlier Stable Diffusion releases and refines their capabilities. Whether you’re an artist, a designer, or just curious about AI, it offers a practical way to explore and create visual content.

The evolution of Stable Diffusion has been fascinating to watch. The first version provided a basic framework for turning text into images, and each subsequent version has improved on this, making the process smoother and the results more impressive. ComfyUI Stable Diffusion 3 is the latest in this line, offering a user-friendly interface and powerful features that make it easier than ever to create stunning visuals.

What is ComfyUI Stable Diffusion 3?

ComfyUI Stable Diffusion 3 isn’t just a minor update; it’s a major step forward in AI-generated imagery. It’s integrated into the RunComfy Beta Version, which makes it easy to access for a range of creative projects. With the Stable Diffusion 3 Node, you can use the model without a complicated setup.

To start using ComfyUI Stable Diffusion 3, you’ll need to get an API key from the Stability AI Developer Platform. This key gives you access to both the standard and Turbo versions of the model. The standard version costs 6.5 credits per image, while the Turbo version is more cost-effective at 4 credits per image. Make sure you have enough credits to avoid any interruptions.
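To make the credit arithmetic concrete, here is a minimal sketch that estimates how many images a balance covers at the prices quoted above. The model keys `sd3` and `sd3-turbo` and both function names are illustrative choices, not part of any official SDK, and pricing may change, so treat the numbers as assumptions.

```python
from decimal import Decimal

# Credit costs per image as quoted in this article (assumed current; verify before use).
CREDITS_PER_IMAGE = {
    "sd3": Decimal("6.5"),
    "sd3-turbo": Decimal("4"),
}


def images_affordable(balance, model):
    """Return how many whole images a credit balance covers for the given model."""
    cost = CREDITS_PER_IMAGE[model]
    return int(Decimal(str(balance)) // cost)


def credits_needed(image_count, model):
    """Return the total credits required to generate image_count images."""
    return CREDITS_PER_IMAGE[model] * image_count


if __name__ == "__main__":
    for model in CREDITS_PER_IMAGE:
        print(model, images_affordable(25, model), credits_needed(10, model))
```

With a balance of 25 credits, for example, the standard model covers three images (19.5 credits), while Turbo covers six (24 credits).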

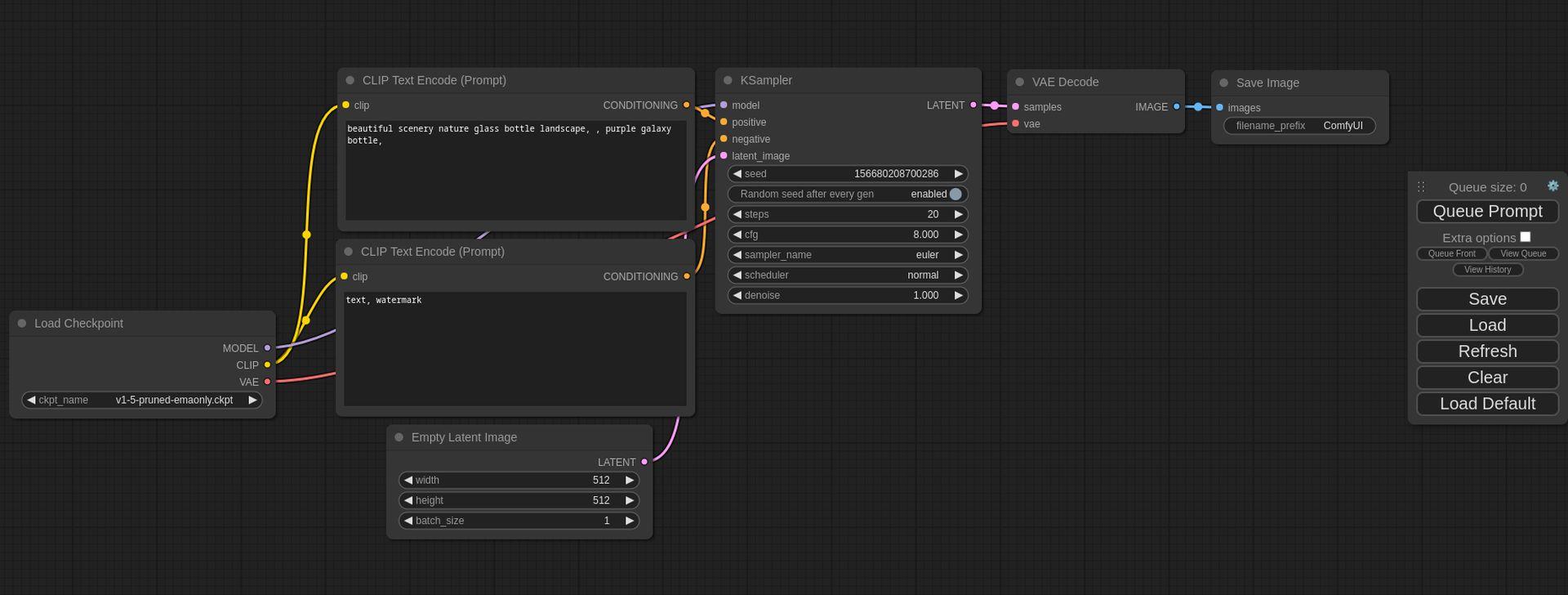

Once you have your API key, integrating the Stable Diffusion 3 Node into your workflow is straightforward. The node comes preloaded in the RunComfy Beta Version, so no manual setup is needed. You can start creating right away with positive and negative prompts, aspect ratio adjustments, and a choice of model. This flexibility makes ComfyUI Stable Diffusion 3 a versatile tool for all kinds of creative and technical projects.

The unique ComfyUI Stable Diffusion 3 workflow

The secret sauce of ComfyUI Stable Diffusion 3 is its Multimodal Diffusion Transformer (MMDiT) architecture, which changes how the model processes and combines text and visual information. Earlier versions used a U-Net that received the text prompt only as conditioning. ComfyUI Stable Diffusion 3 instead uses a transformer with separate sets of weights for text and image representations. Each modality gets specialized handling, which results in more accurate and coherent images.

Here’s a closer look at the key components of the MMDiT architecture:

- Text embedders: ComfyUI Stable Diffusion 3 uses three text embedding models (two CLIP models and T5) to convert text into a format that the AI can understand and process.

- Image encoder: An enhanced autoencoding model converts images to and from a compact latent representation, which is the form the AI works with when generating new visual content.

- Dual transformer: The architecture features two distinct transformers for text and images. These transformers operate independently but are interconnected for attention operations, allowing both modalities to influence each other directly. This setup enhances the coherence between the text input and the image output.

This sophisticated architecture is what makes ComfyUI Stable Diffusion 3 excel at generating detailed and accurate images from text prompts, setting it apart from other models.
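The idea behind the dual transformer can be illustrated with a toy example. The sketch below is not the model's actual implementation: it uses tiny random matrices as stand-ins for learned weights, a single attention head, and hypothetical function names. It only shows the pattern described above, in which each modality is projected with its own weights and attention then runs over the joined text and image sequence.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_weights(dim, rng):
    """Hypothetical stand-in for learned query/key/value projections."""
    def matrix():
        return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(dim)]
    return {"q": matrix(), "k": matrix(), "v": matrix()}


def joint_attention(text_tokens, image_tokens, text_weights, image_weights):
    """Project each modality with its own weights, then attend over both."""
    # Separate weights per modality; list "+" joins the two token sequences.
    q = matmul(text_tokens, text_weights["q"]) + matmul(image_tokens, image_weights["q"])
    k = matmul(text_tokens, text_weights["k"]) + matmul(image_tokens, image_weights["k"])
    v = matmul(text_tokens, text_weights["v"]) + matmul(image_tokens, image_weights["v"])
    scale = math.sqrt(len(q[0]))
    # Every token scores every other token, regardless of modality.
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    attention = [softmax(row) for row in scores]
    mixed = matmul(attention, v)
    # Split the joined sequence back into its text and image parts.
    n_text = len(text_tokens)
    return mixed[:n_text], mixed[n_text:]


rng = random.Random(0)
dim = 4
text_weights = random_weights(dim, rng)
image_weights = random_weights(dim, rng)
text = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(2)]
image = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(3)]
text_out, image_out = joint_attention(text, image, text_weights, image_weights)
print(len(text_out), len(image_out))
```

Because the attention scores span both sequences, changing a text token changes the image outputs as well, which is the sense in which the two modalities influence each other directly.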

How to install ComfyUI Stable Diffusion 3

ComfyUI Stable Diffusion 3 is designed to be straightforward to use, even for beginners. Here’s a step-by-step guide to help you get started:

- Obtain an API Key: First, visit the Stability AI Developer Platform and get your API key. This key is essential for generating images and provides initial credits to start your projects.

- Install RunComfy Beta Version: Ensure you have the RunComfy Beta Version installed. This version includes the Stable Diffusion 3 Node, so no manual setup is needed.

- Integrate the Node: Within the RunComfy Beta Version, you can either use the Stable Diffusion 3 Node directly or integrate it into your existing workflows. Features like positive and negative prompts, aspect ratios, and model options are easily accessible.

- Configure Settings: Adjust settings according to your project needs. Choose the appropriate model (SD3 or SD3 Turbo), set aspect ratios, and use prompts to guide the AI in generating your desired images.

- Generate Images: Once everything is set up, start generating images. Keep an eye on your credit balance to ensure uninterrupted access to the API services.

Following these steps, you can effectively use ComfyUI Stable Diffusion 3 to bring your creative ideas to life.
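For readers who prefer to see the settings as data, the steps above can be sketched as a small validation helper. Everything here is an assumption made for illustration: the class and field names, the `STABILITY_API_KEY` environment variable, the model identifiers, and the list of aspect ratios are not taken from the node itself, so check them against the Stability AI API reference before relying on them. The sketch only builds and validates a settings payload and does not contact any service.

```python
import os
from dataclasses import dataclass

# Assumed values for illustration; confirm against the official API reference.
ALLOWED_MODELS = {"sd3", "sd3-turbo"}
ALLOWED_ASPECT_RATIOS = {"1:1", "16:9", "9:16", "3:2", "2:3", "4:5", "5:4", "21:9", "9:21"}


@dataclass
class SD3Settings:
    """Hypothetical container mirroring the node's main options."""
    positive_prompt: str
    negative_prompt: str = ""
    aspect_ratio: str = "1:1"
    model: str = "sd3"

    def to_payload(self):
        """Validate the settings and return them as a plain dictionary."""
        if not self.positive_prompt.strip():
            raise ValueError("a positive prompt is required")
        if self.model not in ALLOWED_MODELS:
            raise ValueError(f"unknown model: {self.model}")
        if self.aspect_ratio not in ALLOWED_ASPECT_RATIOS:
            raise ValueError(f"unsupported aspect ratio: {self.aspect_ratio}")
        payload = {
            "prompt": self.positive_prompt,
            "aspect_ratio": self.aspect_ratio,
            "model": self.model,
        }
        # Only include the negative prompt when one was actually provided.
        if self.negative_prompt:
            payload["negative_prompt"] = self.negative_prompt
        return payload


def load_api_key(env_var="STABILITY_API_KEY"):
    """Read the API key from an environment variable (the name is an assumption)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"set {env_var} to your Stability AI API key")
    return key


settings = SD3Settings(
    positive_prompt="a lighthouse at dawn, watercolor",
    negative_prompt="blurry, low contrast",
    aspect_ratio="16:9",
    model="sd3-turbo",
)
print(settings.to_payload())
```

Keeping the key in an environment variable rather than in the workflow file avoids sharing it by accident when you export or publish a workflow.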

Featured image credit: Freepik