Stable Diffusion 3 Medium, the latest offering from Stability AI, has recently made its debut, sparking both excitement and controversy within the Stable Diffusion community.

As a text-to-image model, Stable Diffusion 3 Medium aims to transform textual prompts into visually compelling images, yet it has met with mixed reactions, particularly regarding its depiction of human figures.

Although Stability AI describes it as their “most sophisticated image generation model to date” in a blog post, the results we have seen are… Let’s not sugarcoat it: nightmare fuel!

Stable Diffusion 3 Medium’s lineage

Stable Diffusion 3 Medium traces its roots back to a lineage of AI image-synthesis models developed by Stability AI. This iteration builds upon the foundation laid by its predecessors, incorporating advancements in both technology and training methodologies. The model’s name, “Medium,” signifies its position within the broader Stable Diffusion 3 series, suggesting a balance between computational efficiency and generative capabilities.

At its core, Stable Diffusion 3 Medium employs a sophisticated neural network architecture to interpret and translate textual prompts into visual representations. The model’s training data, which comprises a vast collection of images and their corresponding textual descriptions, plays a pivotal role in shaping its ability to generate coherent and contextually relevant images.

Where does SD3 fail?

Stable Diffusion 3 Medium showcases notable strengths in various areas. Its ability to grasp and respond to complex prompts involving spatial relationships, compositional elements, and diverse styles is commendable. The model’s proficiency in generating images with intricate details and vibrant colors is also evident.

However, it has garnered criticism for its struggles with accurately depicting human anatomy, particularly hands and faces, as conveyed by posts on social media from HornyMetalBeing and many others. These shortcomings have raised questions about the model’s training data and the potential impact of filtering mechanisms employed during its development.

Why is SD3 so bad at generating girls lying on the grass? (posted by u/HornyMetalBeing in r/StableDiffusion)

The training data used to train Stable Diffusion 3 Medium encompasses a wide array of visual content, including:

- Photographs

- Artworks

- Illustrations

However, the model’s developers have implemented filtering processes to exclude explicit or sensitive material from this dataset. While these filters aim to ensure the model’s responsible use, they have inadvertently led to the removal of images depicting certain poses or anatomical details, contributing to the model’s difficulties in accurately rendering human figures.

Let’s put Stable Diffusion 3 Medium to the test

You can easily put the model through its paces using online platforms that offer accessible interfaces for interacting with it.

You know the internet: it contains so many people and so many ideas. How bad could an image-generation model prepared according to 2024 standards be?

We tried the free online demo of SD3 on Hugging Face to get our answer.

Here are our prompts and results:

There seems to be no problem with the dog’s anatomy other than missing one eye, but the woman’s hands and legs really look like she had a terrible accident…

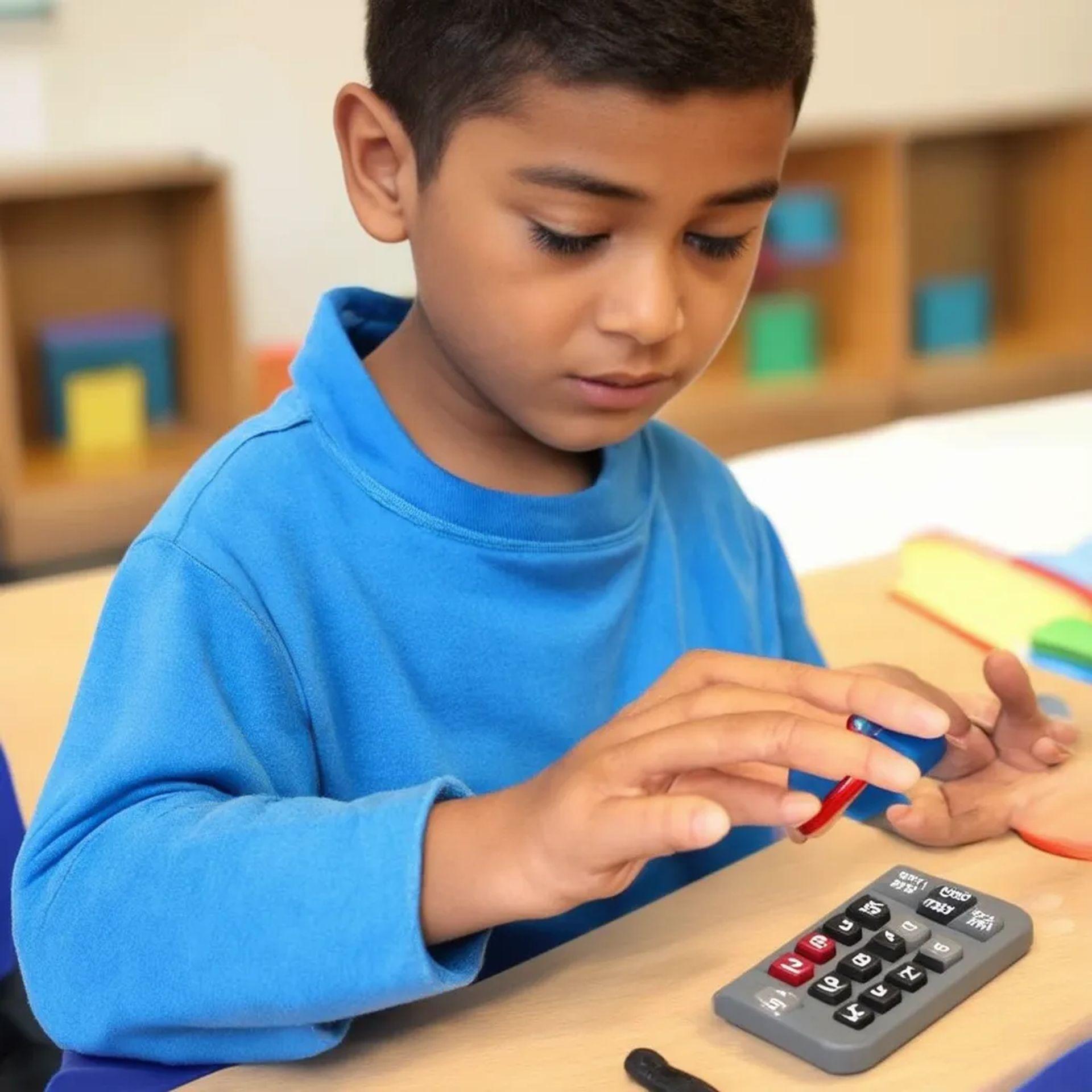

Looks like mathematics not only confused the young boy but also made his fingers very long and melted one of his hands to the table!

Did you know if you have three arms and 12 fingers (maybe more because we cannot see the hand of the third arm), you can administer two intravenous therapies at the same time? At least this time there is no problem with the dog other than being sick…

Never mind, it seems like not even ComfyUI Stable Diffusion 3 can save it…

How to try SD3 online

Stable Diffusion 3 Medium has encountered its share of criticism, and in our experience the complaints are largely justified. If you would like to try SD3 yourself, here is what you need to do:

- Go to the demo: Visit the Stable Diffusion 3 Medium demo on Hugging Face Spaces.

- Enter your prompt: Type a description of the image you want in the text box provided.

- Generate: Click the “Generate” button and wait for the model to create your image.

- Review and refine: Examine the generated image. If it’s not what you expected, adjust your prompt and try again.
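If you would rather script the same enter, generate, review, refine loop than click through the demo, something like the Python sketch below might work. It rests on assumptions that are ours, not Stability AI's: the Hugging Face `diffusers` library (with its `StableDiffusion3Pipeline`), PyTorch, a CUDA GPU, and access to the gated `stabilityai/stable-diffusion-3-medium-diffusers` weights. `GenerationRequest`, `refine`, and `generate` are hypothetical helper names, the example prompt is made up for illustration, and the default step count and guidance scale are just starting values we picked, not settings taken from the demo.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class GenerationRequest:
    """One attempt at an image. Hypothetical helper, not part of any library."""
    prompt: str
    negative_prompt: str = ""
    num_inference_steps: int = 28   # assumed starting value
    guidance_scale: float = 7.0     # assumed starting value
    seed: int = 0                   # fixed seed so retries are comparable


def refine(request: GenerationRequest, extra_detail: str,
           negative: Optional[str] = None) -> GenerationRequest:
    """Return a new request with extra detail appended to the prompt."""
    updated = replace(request, prompt=f"{request.prompt}, {extra_detail}")
    if negative is not None:
        updated = replace(updated, negative_prompt=negative)
    return updated


def generate(request: GenerationRequest,
             model_id: str = "stabilityai/stable-diffusion-3-medium-diffusers"):
    """Run the model locally. Assumes diffusers, torch, and a CUDA GPU."""
    # Imported lazily so the helpers above work without the heavy dependencies.
    import torch
    from diffusers import StableDiffusion3Pipeline

    pipe = StableDiffusion3Pipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")
    generator = torch.Generator(device="cuda").manual_seed(request.seed)
    result = pipe(
        prompt=request.prompt,
        negative_prompt=request.negative_prompt,
        num_inference_steps=request.num_inference_steps,
        guidance_scale=request.guidance_scale,
        generator=generator,
    )
    return result.images[0]  # a PIL image


if __name__ == "__main__":
    # Illustrative prompt only; not one of the prompts used in this article.
    first_try = GenerationRequest(prompt="a woman lying on the grass next to her dog")
    second_try = refine(first_try, "natural hands with five fingers", negative="extra limbs")
    print(second_try.prompt)
    # generate(second_try).save("second_try.png")  # uncomment on a machine with the model
```

Keeping the seed fixed between attempts means any change in the picture comes from the prompt, which makes it easier to tell whether a rewrite actually helped.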

Despite the criticism, it is crucial to acknowledge Stable Diffusion 3 Medium’s potential as a valuable asset. The model’s capacity to comprehend complex prompts and generate visually appealing images across diverse styles remains noteworthy. As the technology matures and undergoes further development, it is poised to contribute significantly to our ever-expanding means of creative expression.

However, for now, we recommend using Midjourney, especially after the introduction of Midjourney model personalization.

Featured image credit: Stability AI