Mistral Large 2 has arrived on the scene, marking a major step forward in language model technology. This new offering from Mistral AI packs an impressive 123 billion parameters and boasts a 128,000-token context window. The release of Mistral Large 2 signals growing competition among top AI companies to develop ever more capable models.

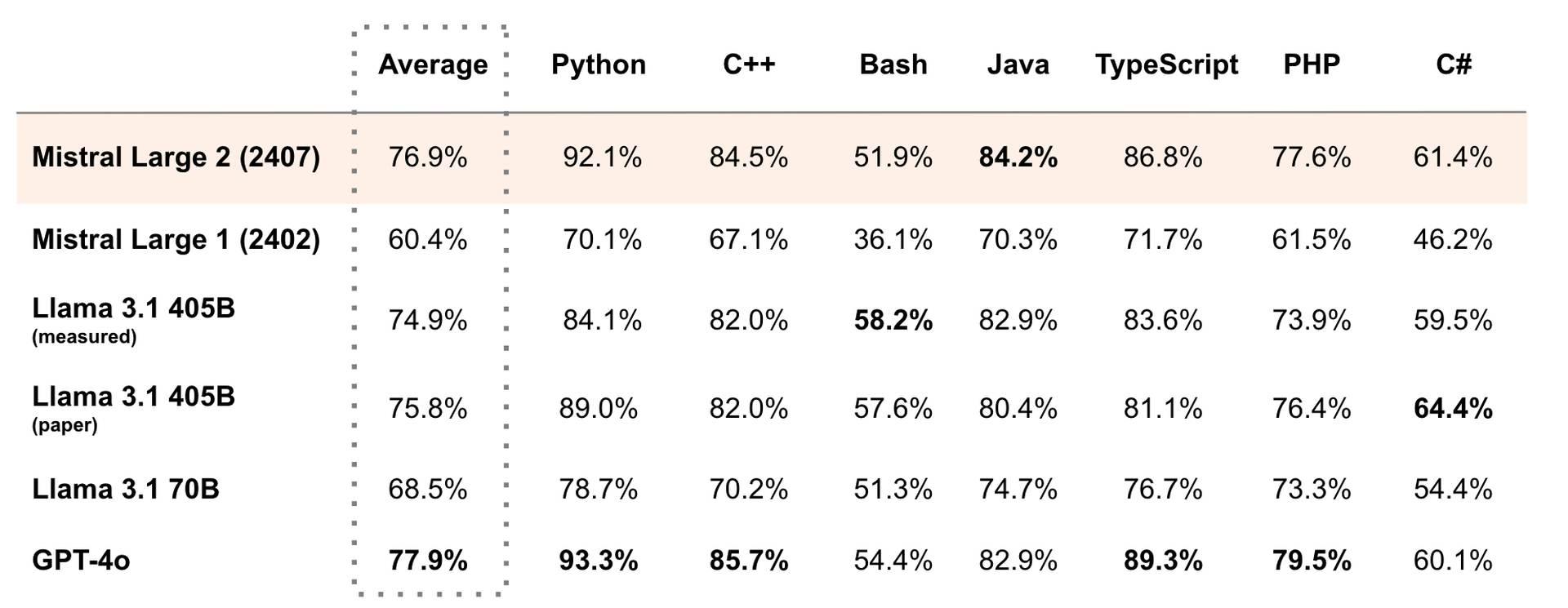

Arriving right after the launch of Meta’s Llama 3.1 405B, Mistral Large 2 shows its mettle across key benchmarks. In coding tasks like HumanEval, it outperforms other recent models while coming close to industry leader GPT-4. For math problems, particularly on the MATH benchmark, Mistral Large 2 ranks just behind GPT-4. The model also flexes its multilingual muscles, surpassing competitors across multiple languages on the Multilingual MMLU test.

Despite its large scale, Mistral AI designed Mistral Large 2 to run efficiently on a single machine. This focus on throughput makes it well-suited for applications requiring the processing of long text inputs.
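To put the single-machine claim in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different numeric precisions. The 123 billion parameter count comes from the article; the precision formats and the 8 x 80 GB node used for comparison are illustrative assumptions, not details confirmed by Mistral AI, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
# Rough weight-memory estimate for a 123B-parameter model.
# Assumption: weights only; KV cache, activations, and runtime overhead are excluded.

PARAMS = 123e9  # parameter count stated in the article

# Bytes per parameter for common numeric formats (illustrative choices).
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def fits_on_node(params: float, bytes_per_param: float,
                 gpus: int = 8, gb_per_gpu: int = 80) -> bool:
    """Check whether the weights alone fit in a node's combined GPU memory.

    The default 8 x 80 GB node is a hypothetical configuration.
    """
    return weight_memory_gb(params, bytes_per_param) <= gpus * gb_per_gpu


if __name__ == "__main__":
    for fmt, size in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, size)
        print(f"{fmt}: {gb:.1f} GB, fits on 8x80 GB node: {fits_on_node(PARAMS, size)}")
```

At 16-bit precision the weights come to roughly 246 GB, which is why a model of this size can plausibly sit on one multi-GPU server while still leaving memory for long inputs.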

A deeper look at Mistral Large 2’s technical specs

Digging into the technical details reveals what makes Mistral Large 2 tick. Its 123 billion parameters give it the capacity to capture nuanced patterns in language and knowledge. The expansive 128,000-token context window allows it to maintain coherence over very long passages of text.

Mistral AI put major effort into honing the model’s coding capabilities. Building on their prior work with code-focused models, they trained Mistral Large 2 extensively on programming languages. The specialized training shows in its strong performance on coding benchmarks, rivaling top models from OpenAI and Anthropic.

The developers also prioritized enhancing Mistral Large 2’s reasoning skills and reducing nonsensical outputs. Careful fine-tuning helped minimize the model’s tendency to generate plausible-sounding but incorrect information. As a result, Mistral Large 2 shows improved accuracy on math problems and other tasks requiring logical reasoning.

Mistral Large 2 speaks many languages

A standout feature of Mistral Large 2 is its multilingual prowess. The model was trained on texts spanning dozens of languages, allowing it to understand and generate high-quality content across linguistic variations.

Key supported languages include:

- French
- German
- Spanish
- Italian
- Portuguese
- Arabic
- Hindi
- Russian
- Chinese
- Japanese
- Korean

The broad language coverage makes Mistral Large 2 a versatile tool for global businesses and multilingual applications. Benchmark tests confirm Mistral Large 2’s multilingual strengths. On the Multilingual MMLU test, it outperformed other recent models across nine different languages. This consistent cross-lingual performance highlights the model’s potential for breaking down language barriers in various domains.

Beyond spoken languages, Mistral Large 2 is fluent in code

In coding tasks, Mistral Large 2 can work with over 80 programming languages. Python, Java, C, C++, JavaScript, and Bash are just a few of the supported options. This linguistic flexibility in both human and computer languages sets Mistral Large 2 apart in the current AI landscape.

Tackling the hallucination problem

A major focus during Mistral Large 2’s development was reducing hallucinations – those plausible but incorrect outputs that plague many language models. Mistral AI trained the model to be more discerning and cautious in its responses. When faced with uncertainty, Mistral Large 2 is designed to acknowledge gaps in its knowledge rather than inventing false information.

The emphasis on accuracy and truthfulness addresses a common criticism of large language models. By striving to minimize hallucinations, Mistral AI aims to make their model more trustworthy and reliable for real-world applications.

The funding is there too

Despite being a relative newcomer, Mistral AI has quickly established itself as a serious player in artificial intelligence. The Paris-based startup recently secured $640 million in Series B funding, reaching a $6 billion valuation. The financial backing, combined with their ability to rapidly develop cutting-edge models, positions Mistral as a growing force in the AI industry.

The missing piece

One area where Mistral Large 2 (and Meta’s recent Llama 3.1) lags behind is multimodal functionality. OpenAI currently leads the pack in developing AI systems that can process both text and images simultaneously. This capability is increasingly in demand, with many startups looking to build multimodal features into their applications.

Accessibility and integration

Mistral Large 2 is now available through major cloud platforms. Developers can also access it directly through Mistral’s own platform, la Plateforme, under the name “mistral-large-2407”, and the model weights are hosted on Hugging Face.
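For developers who want a feel for what calling the model looks like, here is a minimal sketch using only Python’s standard library. The model name is the one quoted above; the endpoint URL, the bearer-token header, the `MISTRAL_API_KEY` environment variable, and the shape of the JSON response are assumptions not taken from this article, so check Mistral’s API documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumption: chat completions endpoint of la Plateforme.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-2407"  # model name quoted in the article


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for a single user message."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the first reply's text.

    Assumes the response carries choices[0].message.content.
    """
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # hypothetical variable name
    if key:
        print(ask("Summarize Mistral Large 2 in one sentence.", key))
    else:
        print("Set MISTRAL_API_KEY to send a real request.")
```

Keeping request construction separate from sending makes it easy to inspect the payload without spending API credits.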

For those wanting to experiment, Mistral offers free testing of the model on their ChatGPT competitor, le Chat. This accessibility across multiple platforms makes it easier for developers and businesses to integrate Mistral Large 2 into their projects and workflows.

However, like many advanced AI models, Mistral Large 2 is not truly open source. While it is more accessible than some competitors, commercial use still requires a paid license. The hardware and engineering effort needed to run a model of this size also put self-hosting out of reach for most users.

As AI models continue to evolve at a breakneck pace, Mistral Large 2 represents another step forward in language model capabilities. Its focus on efficiency, accuracy, and versatility makes it a noteworthy addition to the growing ecosystem of advanced AI tools. While challenges remain, particularly in multimodal processing, Mistral’s rapid progress suggests they’ll remain a company to watch in the ongoing AI race.

Featured image credit: Mistral AI