Meta Segment Anything Model 2 (SAM 2) is making waves with its impressive capabilities. Unlike traditional models that struggle with new types of objects, SAM 2 uses zero-shot learning to identify and segment items it hasn’t specifically been trained on. This means it can easily handle a wide variety of visual content.

Imagine a tool that not only segments objects in a single frame but tracks and maintains object continuity across an entire video sequence, even amidst occlusions and rapid changes. SAM 2 achieves this through its unified architecture and memory mechanism. Here is how.

Meta Segment Anything Model 2 (SAM 2) explained

Meta Segment Anything Model 2 is an advanced computer vision model designed to handle object segmentation across a variety of media types, including images and videos. Unlike traditional segmentation models that may require extensive retraining for new types of objects, SAM 2 is equipped with zero-shot learning capabilities: it generalizes well enough to segment objects it has not explicitly been trained on. This is particularly useful for applications with diverse or evolving visual content where new object categories frequently emerge.

The core of Meta SAM 2’s functionality is its unified architecture, which processes both images and video frames. This architecture incorporates a memory mechanism that helps maintain continuity across video frames, addressing challenges such as occlusion and object movement.

The model’s memory encoder captures information from previous frames, which is stored in a memory bank and accessed by a memory attention module. This setup ensures that the model can accurately track and segment objects over time, even when they are partially obscured or when the scene changes dynamically.
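To make the idea of a memory bank read by an attention module more concrete, here is a minimal sketch in plain Python. It is a toy illustration under stated assumptions, not SAM 2's actual implementation: the `MemoryBank` class, the `memory_attention` function, the bank size, and the two-element feature vectors are all hypothetical. The real model works on learned, high-dimensional feature maps.

```python
import math
from collections import deque


class MemoryBank:
    """Toy FIFO store of per-frame feature vectors (hypothetical, not SAM 2's code)."""

    def __init__(self, max_frames=4):
        # max_frames is an arbitrary illustrative size; the deque drops the
        # oldest entry automatically once the bank is full.
        self.entries = deque(maxlen=max_frames)

    def add(self, frame_features):
        self.entries.append(list(frame_features))


def memory_attention(query, bank):
    """Blend stored memories, weighted by a softmax over dot-product similarity."""
    if not bank.entries:
        return list(query)  # nothing remembered yet: pass the query through
    scale = math.sqrt(len(query))
    scores = [sum(q * m for q, m in zip(query, mem)) / scale for mem in bank.entries]
    peak = max(scores)  # subtract the max before exp() for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [
        sum(w * mem[i] for w, mem in zip(weights, bank.entries))
        for i in range(len(query))
    ]


bank = MemoryBank(max_frames=2)
bank.add([1.0, 0.0])
bank.add([0.0, 1.0])
bank.add([1.0, 1.0])  # bank is full, so the oldest entry [1.0, 0.0] is evicted
context = memory_attention([0.0, 2.0], bank)
```

In this sketch, features from the current frame act as the query, and the blended result carries information from earlier frames. That is the rough intuition for how an object can still be tracked when it is partly hidden in the current frame.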

SAM 2 is also highly interactive, allowing users to provide various types of prompts—such as clicks, bounding boxes, or masks—to guide the segmentation process. This promptable segmentation feature enables users to refine the segmentation results based on their specific needs and to address any ambiguities in object detection. When encountering complex scenes where multiple interpretations are possible, SAM 2 can generate several potential masks and select the most appropriate one based on confidence levels.
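The "several candidate masks, pick the most confident" step can be sketched as follows. This is an illustrative example only: the `CandidateMask` type, the helper names, the tiny 2x2 masks, and the confidence values are all made up for the sketch and do not come from SAM 2 itself.

```python
from dataclasses import dataclass


@dataclass
class CandidateMask:
    label: str         # human-readable name, for illustration only
    mask: list         # 2D grid of 0/1 values (1 = pixel belongs to the object)
    confidence: float  # the model's own quality estimate for this mask


def pick_best_mask(candidates):
    """Return the candidate with the highest confidence score."""
    if not candidates:
        raise ValueError("need at least one candidate mask")
    return max(candidates, key=lambda c: c.confidence)


def mask_area(mask):
    """Count the pixels marked as belonging to the object."""
    return sum(sum(row) for row in mask)


# A single click on a person's shirt is ambiguous: it could mean the whole
# person, the shirt, or a logo on the shirt. All values here are invented.
candidates = [
    CandidateMask("whole person", [[1, 1], [1, 1]], 0.81),
    CandidateMask("shirt only", [[1, 1], [0, 0]], 0.93),
    CandidateMask("shirt logo", [[1, 0], [0, 0]], 0.64),
]
best = pick_best_mask(candidates)
```

If the selected mask is not the one the user wanted, an extra prompt (another click or a box) narrows the interpretation, which is the refinement loop described above.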

The model is trained and evaluated using the SA-V dataset, which is notable for its scale and diversity. This dataset includes around 51,000 real-world video sequences and over 600,000 masklets, providing a comprehensive resource for training and testing segmentation models. The annotations in this dataset are generated interactively with SAM 2 itself, ensuring high accuracy and relevance to real-world scenarios. The dataset covers a broad range of geographical locations and various types of visual content, including scenarios with partial object views and occlusions.

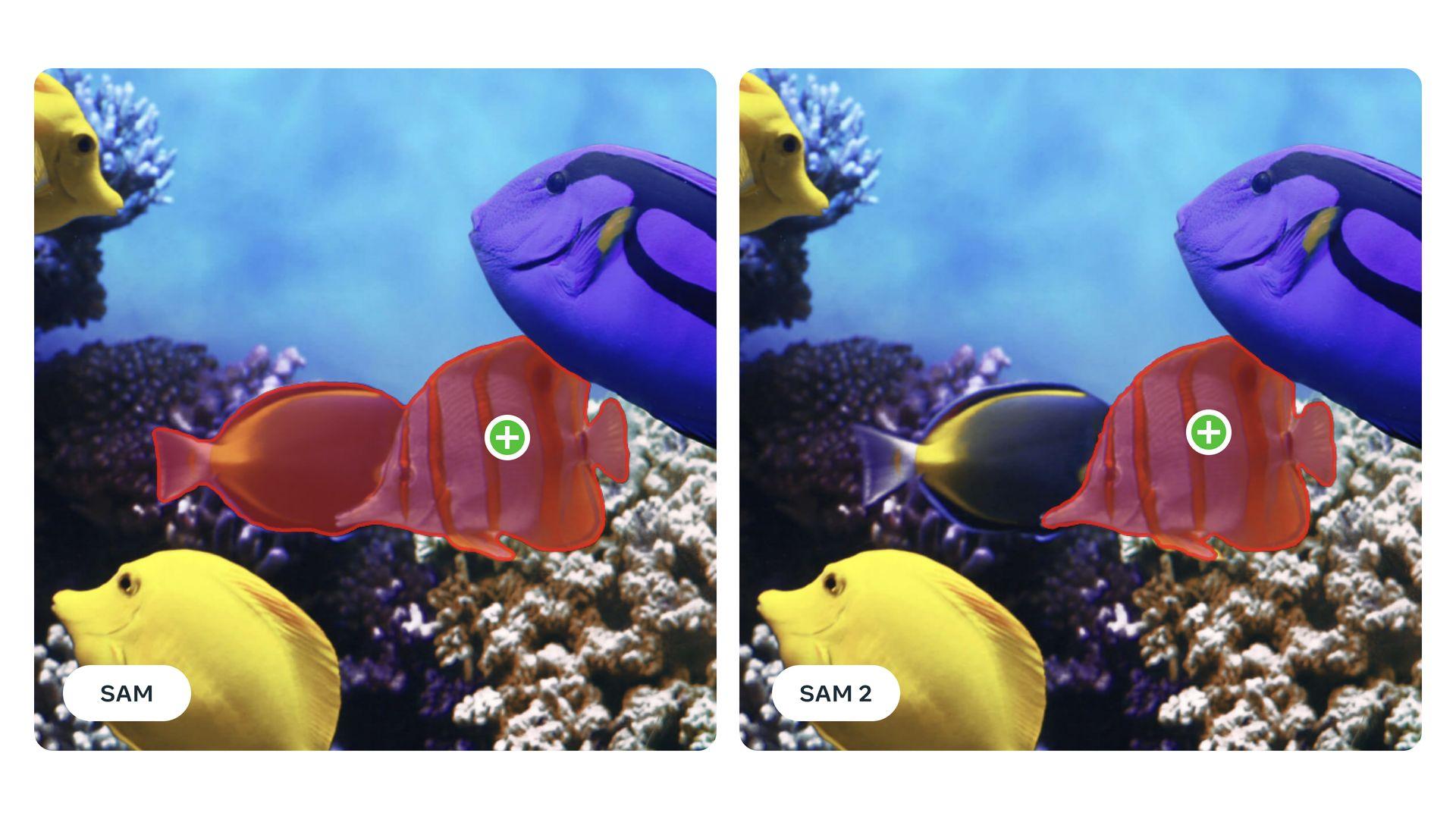

Meta’s Segment Anything Model 2 (SAM 2) represents a major advancement in interactive video segmentation. According to Meta, it outperforms previous approaches across 17 zero-shot video datasets while requiring roughly three times fewer human interactions. On image segmentation, it is more accurate than its predecessor, SAM, and about six times faster. SAM 2 also outperforms prior methods on established video object segmentation benchmarks such as DAVIS and YouTube-VOS, and it processes video at approximately 44 frames per second, which makes it suitable for near-real-time applications. Used as an annotation aid, SAM 2 makes video segmentation annotation 8.4 times faster than manual per-frame annotation with SAM.
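To put the throughput and annotation figures in perspective, the short sketch below turns them into everyday numbers. The 44 frames per second and the 8.4x speed-up are the figures quoted above; the function names and the example workloads are hypothetical. The arithmetic ignores video decoding, I/O, and hardware differences, so treat the results as rough estimates.

```python
FPS = 44                  # approximate throughput quoted in the article
ANNOTATION_SPEEDUP = 8.4  # quoted speed-up over per-frame annotation with SAM


def frame_budget_ms(fps=FPS):
    """Average time available per frame, in milliseconds."""
    return 1000.0 / fps


def seconds_to_process(num_frames, fps=FPS):
    """Rough processing time for a clip with the given number of frames."""
    return num_frames / fps


def assisted_minutes(manual_minutes, speedup=ANNOTATION_SPEEDUP):
    """Estimated annotation time once the quoted speed-up is applied."""
    return manual_minutes / speedup


per_frame = frame_budget_ms()          # roughly 22.7 ms per frame
clip_time = seconds_to_process(440)    # a 440-frame clip takes about 10 s
annotation = assisted_minutes(84.0)    # 84 manual minutes shrink to about 10
```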

SAM 2’s advancements are not only significant for practical applications such as video analysis and annotation but also contribute to the broader field of computer vision research. By making its code and dataset publicly available, Meta encourages further innovation and development in segmentation technology. Future improvements may focus on enhancing the model’s ability to handle long-term occlusions and increasingly complex scenes with multiple moving objects, continuing to push the boundaries of what is possible in object segmentation.

How to use SAM 2

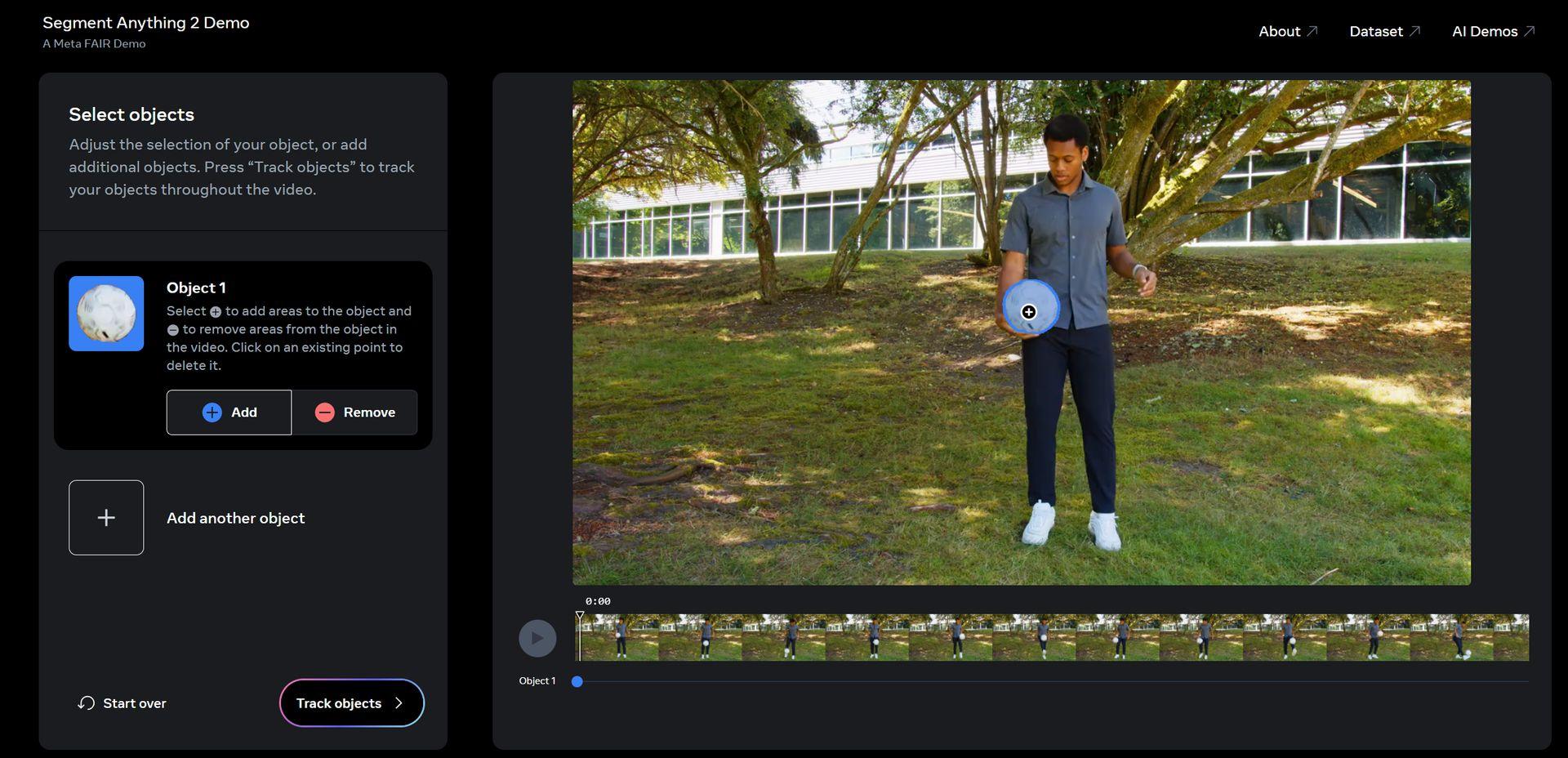

Meta Segment Anything Model 2 (SAM 2) has a web demo. To try it:

- Visit the demo page.

- Accept the warning.

- Click the object or objects you want to follow.

- Hit “Track objects”.

- Check the output. If there are no issues, click “Next”.

- Add effects as you like, or let the AI choose by clicking “Surprise me”.

- Click “Next”.

- Now, you can either get a link to the result or download it. Here is our result:

Meta Segment Anything Model 2 (SAM 2) test pic.twitter.com/d07PGto0eO

— Alan Davis (@AlanDav73775659) July 30, 2024

That’s all!

Featured image credit: Meta