Colossus is a groundbreaking artificial intelligence (AI) training system developed by Elon Musk’s xAI Corp. This supercomputer, described by Musk as the “most powerful AI training system in the world,” is a critical component of xAI’s strategy to lead in the rapidly advancing field of AI.

This weekend, the @xAI team brought our Colossus 100k H100 training cluster online. From start to finish, it was done in 122 days.

Colossus is the most powerful AI training system in the world. Moreover, it will double in size to 200k (50k H200s) in a few months.

Excellent…

— Elon Musk (@elonmusk) September 2, 2024

Nvidia powers Colossus

At the core of Colossus are 100,000 Nvidia H100 graphics cards. These GPUs (graphics processing units) are designed to handle the demanding computational requirements of AI training. Here is why they are so vital:

- Raw processing power: The H100 is Nvidia’s flagship AI processor, designed to accelerate the training and inference of AI models, particularly those based on deep learning and neural networks. According to Nvidia, the H100 can run large language models up to 30 times faster than its predecessor, the A100.

- Transformer engine: A key feature of the H100 is its Transformer Engine, a specialized set of circuits optimized for running AI models based on the Transformer neural network architecture. This architecture is the backbone of some of the most advanced language models, like GPT-4 and Meta’s Llama 3.1 405B. The Transformer Engine enables these GPUs to handle large-scale models more efficiently, making them ideal for training sophisticated AI systems.

The next level: Doubling down with the H200

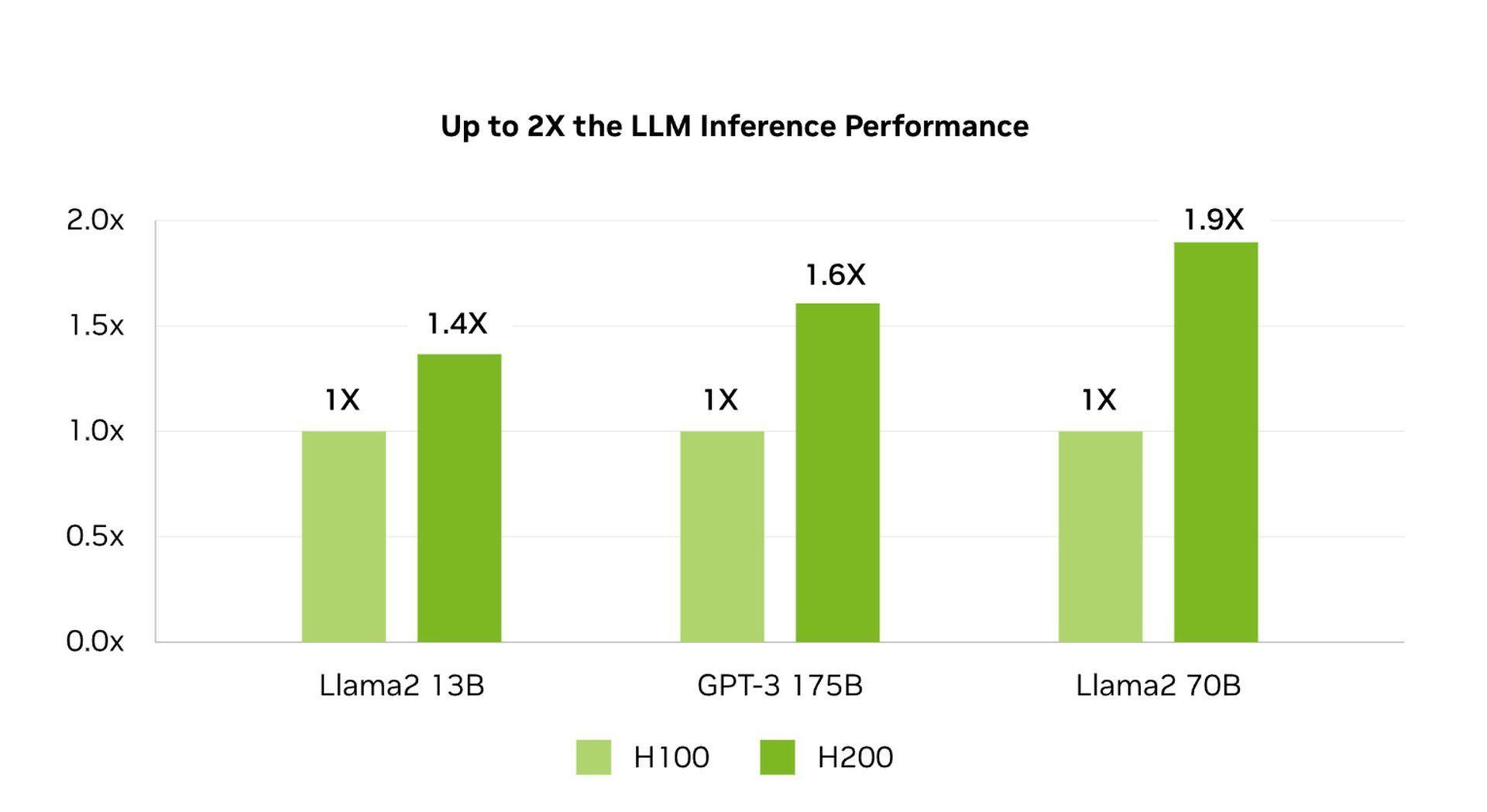

Musk has ambitious plans to further expand Colossus, aiming to double the system’s GPU count to 200,000 in the near future. This expansion will include 50,000 units of Nvidia’s H200, an even more powerful successor to the H100. The H200 offers several significant upgrades:

- HBM3e Memory: The H200 uses High Bandwidth Memory 3e (HBM3e), which is faster than the HBM3 used in the H100. This type of memory enhances the speed at which data is transferred between the memory and the chip’s logic circuits. For AI models, which constantly shuffle vast amounts of data between processing and memory, this speed is crucial.

- Increased Memory Capacity: The H200 raises onboard memory capacity to 141 gigabytes, up from 80 gigabytes on the H100. This allows the GPU to store more of an AI model’s data close to its logic circuits, reducing the need to fetch data from slower storage sources. The result is faster processing times and more efficient model training.
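
To put the memory figures in perspective, here is a rough back-of-the-envelope sketch. The 141 GB figure comes from the article; the 80 GB figure for the H100 and the choice of 16-bit (2-byte) weights are assumptions made for illustration, and the function names are hypothetical. It counts only model weights and ignores activations, optimizer state, and other overhead, so real limits are lower.

```python
# Back-of-the-envelope memory comparison (illustrative only).
H100_MEMORY_GB = 80       # assumption: H100 capacity, not stated in the article
H200_MEMORY_GB = 141      # figure quoted in the article
BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored as 16-bit values


def capacity_ratio(new_gb: float, old_gb: float) -> float:
    """How many times larger the new memory capacity is."""
    return new_gb / old_gb


def max_params_billions(memory_gb: float,
                        bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Upper bound, in billions, on parameters whose weights alone fit in memory.

    Uses decimal gigabytes (1 GB = 1e9 bytes), so the 1e9 factors cancel.
    """
    return memory_gb / bytes_per_param


ratio = capacity_ratio(H200_MEMORY_GB, H100_MEMORY_GB)
print(f"H200 vs. H100 memory capacity: {ratio:.2f}x")
print(f"Weights-only ceiling on one H100: {max_params_billions(H100_MEMORY_GB)}B parameters")
print(f"Weights-only ceiling on one H200: {max_params_billions(H200_MEMORY_GB)}B parameters")
```

Under these assumptions a single H200 holds roughly three quarters more weight data than a single H100, which is part of why fewer trips to slower storage are needed.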

Colossus’ role in AI training

Colossus is specifically designed to train large language models (LLMs), which are the foundation of advanced AI applications.

The sheer number of GPUs in Colossus allows xAI to train AI models at a scale and speed that, by Musk’s account, no other system can match. For example, xAI’s current flagship LLM, Grok-2, was trained on 15,000 GPUs. With 100,000 GPUs now available, xAI can train much larger and more complex models, potentially leading to significant improvements in AI capabilities.
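
The jump in scale is easy to quantify with the GPU counts quoted in this article. The short sketch below only compares hardware counts; the function name is hypothetical, and the ratio should not be read as a measured training speedup, since real-world gains depend on networking, software, and how well a workload parallelizes.

```python
# GPU counts quoted in the article.
GROK2_TRAINING_GPUS = 15_000
COLOSSUS_GPUS = 100_000
PLANNED_GPUS = 200_000


def scale_factor(new_count: int, old_count: int) -> float:
    """Ratio of two GPU counts; a hardware ratio, not a measured speedup."""
    return new_count / old_count


current = scale_factor(COLOSSUS_GPUS, GROK2_TRAINING_GPUS)
planned = scale_factor(PLANNED_GPUS, GROK2_TRAINING_GPUS)
print(f"Colossus today: about {current:.1f}x the GPUs used for Grok-2")
print(f"After the planned expansion: about {planned:.1f}x")
```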

The architecture of the H100 and H200 GPUs is intended to make training both faster and more efficient. Their high memory capacity and rapid data transfer mean that even very large AI models can be trained with fewer memory bottlenecks, which in turn can translate into better-performing models.

What’s next?

Colossus is not just a technical achievement; it’s a strategic asset in xAI’s mission to dominate the AI industry. By building what Musk calls the world’s most powerful AI training system, xAI positions itself as a leader in developing cutting-edge AI models. This system gives xAI a competitive advantage over other AI companies, including OpenAI, with which Musk is currently in a legal dispute.

Moreover, the construction of Colossus reflects Musk’s broader vision for AI. By reallocating resources from Tesla to xAI, including the rerouting of 12,000 H100 GPUs worth over $500 million, Musk demonstrates his commitment to AI as a central focus of his business empire.

Can he succeed? We will have to wait for the answer.

Featured image credit: Eray Eliaçık/Grok