Computer vision is one of the most influential areas of artificial intelligence, changing nearly every aspect of our lives, on par with generative AI. From medical image analysis and autonomous vehicles to security systems, AI-powered computer vision is critical in enhancing safety, efficiency, and healthcare through technologies like object detection, facial recognition, and image classification.

But computer vision isn’t just making waves in specialized fields; it’s also part of the consumer apps we use daily. It enhances camera focus, powers photo editing, recognizes and scans text in real time through a smartphone camera, lets smart home devices such as security cameras detect movement and alert users, estimates poses for fitness tracking apps, identifies food and calories for diet tracking apps, unlocks phones through face identification, and organizes photos into albums by person using face detection and classification. These applications have become integral to the daily experiences of millions of people.

Source: Real Computer Vision by Boris Denisenko on Medium

Most machine learning engineers don’t build their models from scratch to bring these features to life. Instead, they rely on existing open-source models. While this is the most feasible approach, as building a model from scratch is prohibitively expensive, there’s still a lot of work to be done before the model can be used in an app.

First, the open-source model may solve a similar scenario but not the exact one an engineer needs. For example, an ML engineer may need an app that compares different drinks, but the available model is designed to compare food items. Although it performs well with food, it may struggle when applied to drinks.

Second, the real-world conditions in which these models need to run often differ significantly from the environments they were originally designed for. For example, a model might have hundreds of millions of parameters, making it too large and computationally intensive to run on, say, a smartphone. Attempting to run such a model on a device with limited computational resources leads to slow performance, excessive battery drain, or outright failure to run.

Adapting to Real-World Scenarios and Conditions

Sooner or later, most engineers applying machine learning for computer vision in consumer apps face two tasks:

- Adapting an existing open-source model to fit their specific scenario.

- Optimizing the model to run within limited capacities.

Adapting a model isn’t something you can just breeze through. You start with a pre-trained model and tailor it to your specific task. This involves tuning many hyperparameters: the number of layers, the number of neurons in each layer, the learning rate, the batch size, and more. The number of possible combinations can be overwhelming, with potentially millions of configurations to test. This is where hyperparameter optimization (HPO) comes into play. HPO streamlines this process, helping you find a good configuration faster than adjusting each parameter manually, one at a time.

Once you’ve adapted the model to your scenario, the next challenge is getting it to run on a device with limited resources. For instance, you might need to deploy the model on a smartphone with just 6 GB of RAM. In such cases, model compression becomes essential to reduce the model’s size and make it manageable for devices with limited memory and processing power.

Hyperparameter Optimization (HPO) Techniques

Hyperparameter optimization involves finding the set of hyperparameters for your neural network that minimizes error on a specific task. Let’s say you’re training a model to estimate a person’s age from a photo. The error in this context is the deviation of the model’s age estimate from the person’s actual age, measured, for example, in the number of years it is off.
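To make the idea of error concrete, here is a minimal sketch of one common choice for the age example: mean absolute error, the average number of years the estimates are off. The function name and the sample ages are invented for illustration; other error measures would work just as well.

```python
def mean_absolute_error(predicted_ages, actual_ages):
    """Average number of years by which the estimates miss the true age."""
    if len(predicted_ages) != len(actual_ages):
        raise ValueError("inputs must have the same length")
    total = sum(abs(p - a) for p, a in zip(predicted_ages, actual_ages))
    return total / len(actual_ages)


# Hypothetical predictions for four photos (illustrative numbers only).
predicted = [31, 47, 22, 60]
actual = [29, 50, 22, 64]

mae = mean_absolute_error(predicted, actual)
print(mae)  # 2.25 (the misses are 2, 3, 0 and 4 years)
```

HPO then amounts to searching for the configuration that makes this number as small as possible on held-out data.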

Grid Search

Grid search is a brute-force method that finds the best combination by testing every possible set of values from a predefined grid. You start with an existing model and adapt it to your task. Then, you systematically modify hyperparameters, such as the number of neurons or layers, to see how these changes affect the model’s error. Grid search tests each combination to find the one that produces the lowest error. The challenge is that there are numerous parameters you could adjust, each with a broad range of potential values.

While this method is guaranteed to find the best option among the combinations in the grid, it is incredibly time-consuming and often impractical.
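As a rough sketch, grid search fits in a few lines of Python. The `validation_error` function below is a made-up stand-in for the expensive step of training and evaluating a model, and the grid values are assumptions chosen only so the example runs instantly.

```python
import itertools


def validation_error(learning_rate, batch_size, num_layers):
    """Toy stand-in for 'train the model, then measure its error'.

    A real version would fine-tune and evaluate the model; this formula is
    invented for illustration.
    """
    return (
        abs(learning_rate - 0.01) * 100
        + abs(batch_size - 32) / 32
        + abs(num_layers - 4)
    )


# The grid: every value we are willing to try for each hyperparameter.
grid = {
    "learning_rate": [0.1, 0.01, 0.001],
    "batch_size": [16, 32, 64],
    "num_layers": [2, 4, 6],
}

best_config, best_error = None, float("inf")
trials = 0
for values in itertools.product(*grid.values()):
    config = dict(zip(grid.keys(), values))
    error = validation_error(**config)
    trials += 1
    if error < best_error:
        best_config, best_error = config, error

print(trials)       # 27 combinations: 3 * 3 * 3
print(best_config)  # the grid point with the lowest toy error
```

Three values for each of three hyperparameters already means 27 training runs. Adding a fourth hyperparameter with three values would triple that, which is why the approach scales poorly.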

Random Search

Another approach is random search, where you randomly sample a portion of possible combinations instead of testing every combination. This method involves selecting random values for each parameter within a specified range and testing those combinations. While it’s faster than grid search, it doesn’t guarantee the best result. However, it’s likely to find a good, if not optimal, solution. It’s a trade-off between speed and precision.

For instance, if there are 1,000 possible parameter combinations, you could randomly sample and test 100, which would take only one-tenth of the time compared to testing all combinations.
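The 1,000-versus-100 example can be sketched as follows. As before, `validation_error` is an invented placeholder for a real training run, and the value ranges are assumptions picked to give exactly 1,000 combinations.

```python
import itertools
import random


def validation_error(learning_rate, batch_size, num_layers):
    """Toy stand-in for training and evaluating a model (invented formula)."""
    return (
        abs(learning_rate - 0.01) * 100
        + abs(batch_size - 32) / 32
        + abs(num_layers - 4)
    )


learning_rates = [0.001 * k for k in range(1, 11)]  # 10 values
batch_sizes = [8 * k for k in range(1, 11)]         # 10 values
layer_counts = list(range(1, 11))                   # 10 values

# 10 * 10 * 10 = 1,000 possible combinations.
all_configs = list(itertools.product(learning_rates, batch_sizes, layer_counts))

rng = random.Random(0)  # fixed seed so the run is repeatable
sampled = rng.sample(all_configs, 100)  # test only one-tenth of them

best_config = min(sampled, key=lambda c: validation_error(*c))
best_error = validation_error(*best_config)
print(len(all_configs), len(sampled))
print(best_config, best_error)
```

The best sampled configuration is not guaranteed to be the best of all 1,000, which is exactly the trade-off described above.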

HPO Using Optimization Algorithms

Optimization-based hyperparameter tuning methods use different mathematical approaches to efficiently find the best parameter settings. For example, Bayesian optimization uses probabilistic models to guide the search, while TetraOpt, an algorithm developed by the author and team, employs tensor-train optimization to better navigate high-dimensional spaces. These methods are typically more efficient than grid or random search because they aim to minimize the number of evaluations needed to find optimal hyperparameters, focusing on the most promising combinations without testing every possibility.

Such optimization algorithms help find better solutions faster, which is especially valuable when model evaluations are computationally expensive. They aim to deliver the best results with the fewest trials.

ML Model Compression Techniques

Once a model works in theory, running it in real-life conditions is the next challenge. Take, for example, ResNet for facial recognition, YOLO for traffic management and sports analytics, or VGG for style transfer and content moderation. While powerful, these models are often too large for devices with limited resources, such as smartphones or smart cameras.

ML engineers turn to a set of tried-and-true compression techniques to make the models more efficient for such environments. These methods — Quantization, Pruning, Matrix Decomposition, and Knowledge Distillation — are essential for reducing the size and computational demands of AI models while preserving their performance.

Quantization

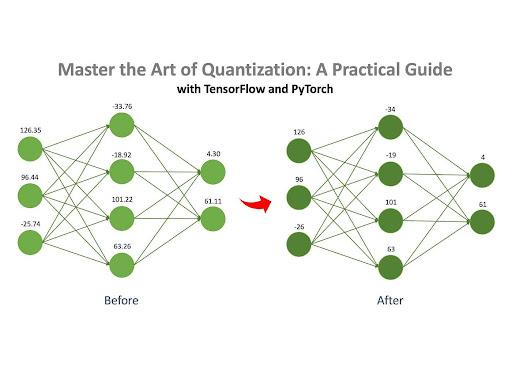

Source: Master the Art of Quantization by Jan Marcel Kezmann on Medium

Quantization is one of the most popular methods for compressing neural networks, primarily because it requires minimal additional computation compared to other techniques.

The core idea is straightforward: a neural network comprises numerous matrices filled with numbers. These numbers can be stored in different formats on a computer, such as floating-point (e.g., 32.15) or integer (e.g., 4). Different formats take up different amounts of memory. For instance, a number in the float32 format (e.g., 3.14) takes up 32 bits of memory, while a number in the int8 format (e.g., 42) only takes 8 bits.

If a model’s numbers are originally stored in float32 format, they can be converted to int8 format. This change significantly reduces the model’s memory footprint: because each int8 value takes a quarter of the space of a float32 value, a model initially occupying 100 MB could be compressed to roughly 25 MB after quantization.
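Here is a minimal sketch of the idea, assuming a simple symmetric scheme in which all weights share one scale factor. Real toolkits offer more elaborate variants; the function names and weight values below are illustrative only.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.4]  # illustrative values, not from a real model
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding means each restored value can differ from the original
# by at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

# Storage: 4 bytes per float32 weight versus 1 byte per int8 weight.
num_weights = 25_000_000
float32_mb = num_weights * 4 / 1_000_000  # 100.0
int8_mb = num_weights * 1 / 1_000_000     # 25.0

print(q)  # [82, -127, 5, 40]
print(float32_mb, int8_mb)
```

The integers are what gets stored; the single `scale` value is kept alongside them so the original magnitudes can be approximately recovered at inference time.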

Pruning

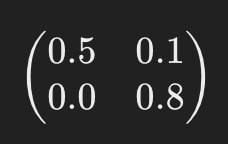

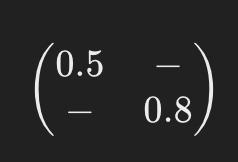

As mentioned earlier, a neural network consists of a set of matrices filled with numbers, known as “weights.” Pruning is the process of removing the “unimportant” weights from these matrices. Eliminating these unnecessary weights leaves the model’s behavior largely unaffected while significantly reducing memory and computational requirements.

For example, imagine one of the neural network’s matrices looks like this:

After pruning, it might look something like this:

The dashes (“-”) indicate where elements were removed during pruning. The pruned model requires fewer computational resources to operate.
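One simple way to decide which weights are “unimportant” is by magnitude: weights close to zero contribute little and are dropped first. The sketch below assumes that criterion and a hand-picked threshold; the matrix values are made up, and removed weights are represented as zeros rather than dashes.

```python
def prune_by_magnitude(matrix, threshold):
    """Set every weight whose absolute value is below `threshold` to zero."""
    return [
        [w if abs(w) >= threshold else 0.0 for w in row]
        for row in matrix
    ]


# Illustrative 3x3 weight matrix (made-up values).
weights = [
    [0.91, -0.02, 0.45],
    [0.01, -0.78, 0.03],
    [-0.60, 0.04, 0.88],
]

pruned = prune_by_magnitude(weights, threshold=0.1)
remaining = sum(1 for row in pruned for w in row if w != 0.0)

for row in pruned:
    print(row)
print(remaining)  # 5 of the 9 weights survive
```

Note that zeroing weights only saves memory and computation when the result is stored and executed in a format that skips the zeros, such as a sparse matrix representation.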

Matrix Decomposition

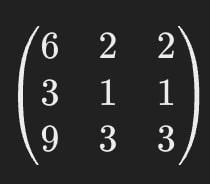

Matrix decomposition is another effective compression method that involves breaking down (or “decomposing”) the large matrices in a neural network into several smaller, simpler matrices.

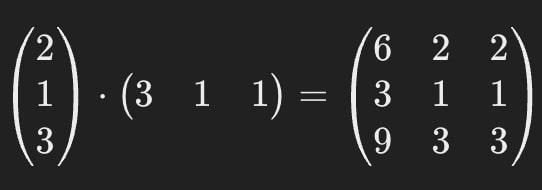

For instance, let’s say one of the matrices in a neural network looks like this:

Matrix decomposition allows us to replace this single large matrix with two smaller ones.

When multiplied together, these smaller matrices give the same result as the original one, ensuring that the model’s behavior remains consistent.

This means we can replace the matrix from the first picture with the matrices from the second.

The original matrix contains 9 parameters, but after decomposition, the matrices together hold only 6, resulting in a ~33% reduction. One of the key advantages of this method is its potential to compress AI models greatly, by several times in some cases.

It’s important to note that matrix decomposition isn’t always perfectly accurate. Sometimes, a small approximation error is introduced during the process, but the efficiency gains often outweigh this minor drawback.
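The 9-versus-6 arithmetic can be checked with a small sketch. The 3x3 matrix below is an invented example, deliberately chosen so that it splits exactly into a 3x1 column and a 1x3 row; the helper names are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def count_params(matrix):
    """Number of stored values in a matrix."""
    return sum(len(row) for row in matrix)


# Illustrative 3x3 matrix chosen to have rank 1, so the split is exact.
original = [
    [4, 5, 6],
    [8, 10, 12],
    [12, 15, 18],
]
column = [[1], [2], [3]]  # 3x1 factor
row = [[4, 5, 6]]         # 1x3 factor

reconstructed = matmul(column, row)
before = count_params(original)                   # 9
after = count_params(column) + count_params(row)  # 6
reduction = 1 - after / before                    # about 0.33

print(reconstructed == original)  # True
print(before, after, round(reduction, 2))
```

Weight matrices in trained networks are rarely this convenient, so in practice the factors are computed with a technique such as truncated singular value decomposition and reproduce the original only approximately.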

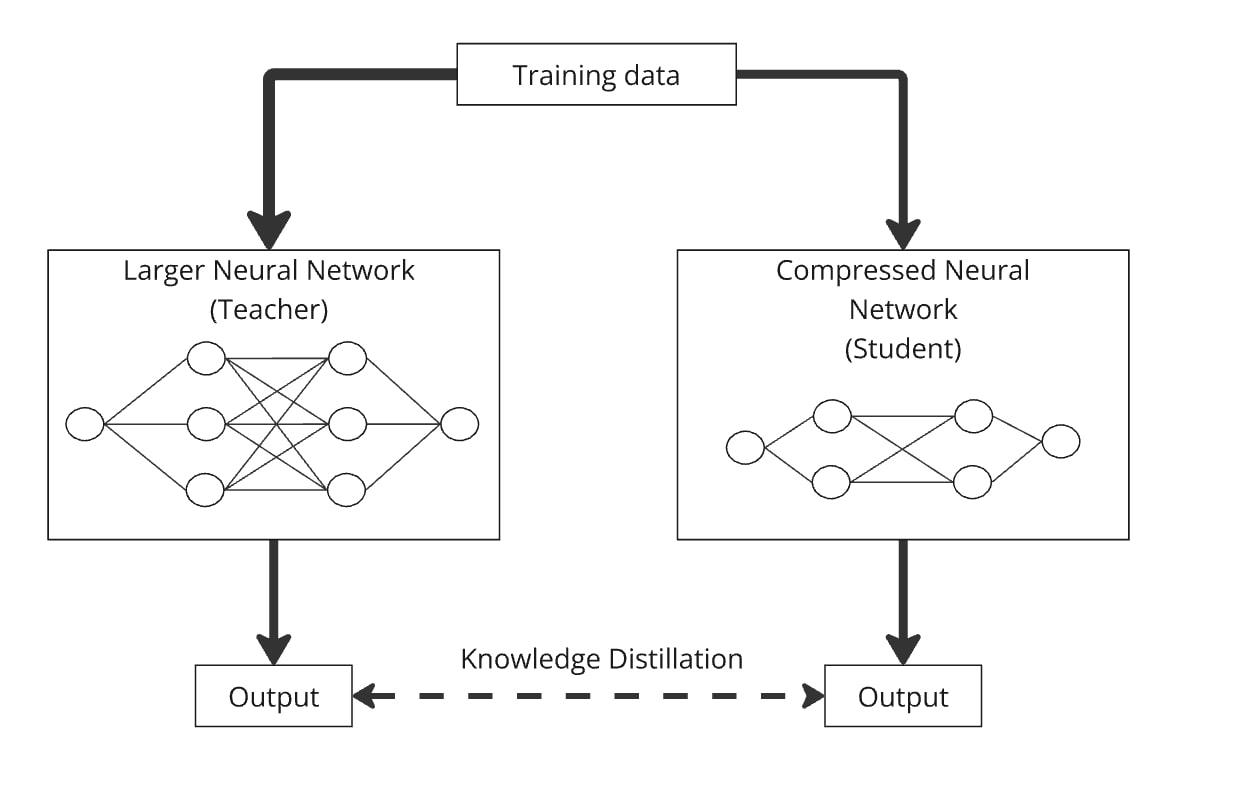

Knowledge Distillation

Knowledge distillation is a technique for building a smaller model, known as the “student model,” by transferring knowledge from a larger, more complex model, called the “teacher model.” The key idea is to train the smaller model alongside the larger one so the student model learns to mimic the behavior of the teacher model.

Here’s how it works: You pass the same data through the large neural network (the teacher) and the compressed one (the student). Both models produce outputs, and the student model is trained to generate outputs as similar as possible to the teacher’s. This way, the compressed model learns to perform similarly to the larger model but with fewer parameters.
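What “as similar as possible” means has to be expressed as a number the student can minimize. The sketch below assumes one common formulation: both models’ raw outputs are turned into probabilities with a temperature-softened softmax, and the gap between them is measured with KL divergence. The article does not prescribe a specific loss, so treat the function names, the temperature value, and the sample outputs as assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature = softer."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence between the teacher's and the student's distributions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return sum(
        t * math.log(t / s) for t, s in zip(p_teacher, p_student) if t > 0
    )


teacher_logits = [4.0, 1.0, 0.5]  # illustrative outputs for one image
far_student = [0.5, 3.0, 1.0]     # disagrees with the teacher
close_student = [3.8, 1.1, 0.6]   # nearly matches the teacher

loss_far = distillation_loss(far_student, teacher_logits)
loss_close = distillation_loss(close_student, teacher_logits)
loss_same = distillation_loss(teacher_logits, teacher_logits)

print(loss_far > loss_close)  # True: closer outputs give a smaller loss
print(loss_same)              # 0.0 when the outputs are identical
```

During training, this loss would be computed over batches of data and used to update only the student’s weights.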

Distillation can be easily combined with quantization, pruning, and matrix decomposition, where the teacher model is the original version and the student is the compressed one. These combinations help refine the accuracy of the compressed model.

In practice, engineers often combine these techniques to maximize the performance of their models when deploying them in real-world scenarios.

AI evolves along two parallel paths. On the one hand, it fuels impressive advancements in areas like healthcare, pushing the limits of what we thought was possible. On the other, adapting AI to real-world conditions is just as crucial, bringing advanced technology into the daily lives of millions, often seamlessly and unnoticed. This duality mirrors the impact of the smartphone revolution, which transformed computing from something disruptive and costly into technology accessible and practical for everyone.

The optimization techniques covered in this article are what engineers use to make AI a tangible part of everyday life. This research is ongoing, with large tech companies (like Meta, Tesla, or Huawei) and research labs investing significant resources in finding new ways to optimize models. However, well-implemented HPO techniques and compression methods are already helping engineers worldwide bring the latest models into everyday scenarios and devices, creating impressive products for millions of people today and pushing the industry forward through their published and open-sourced findings.