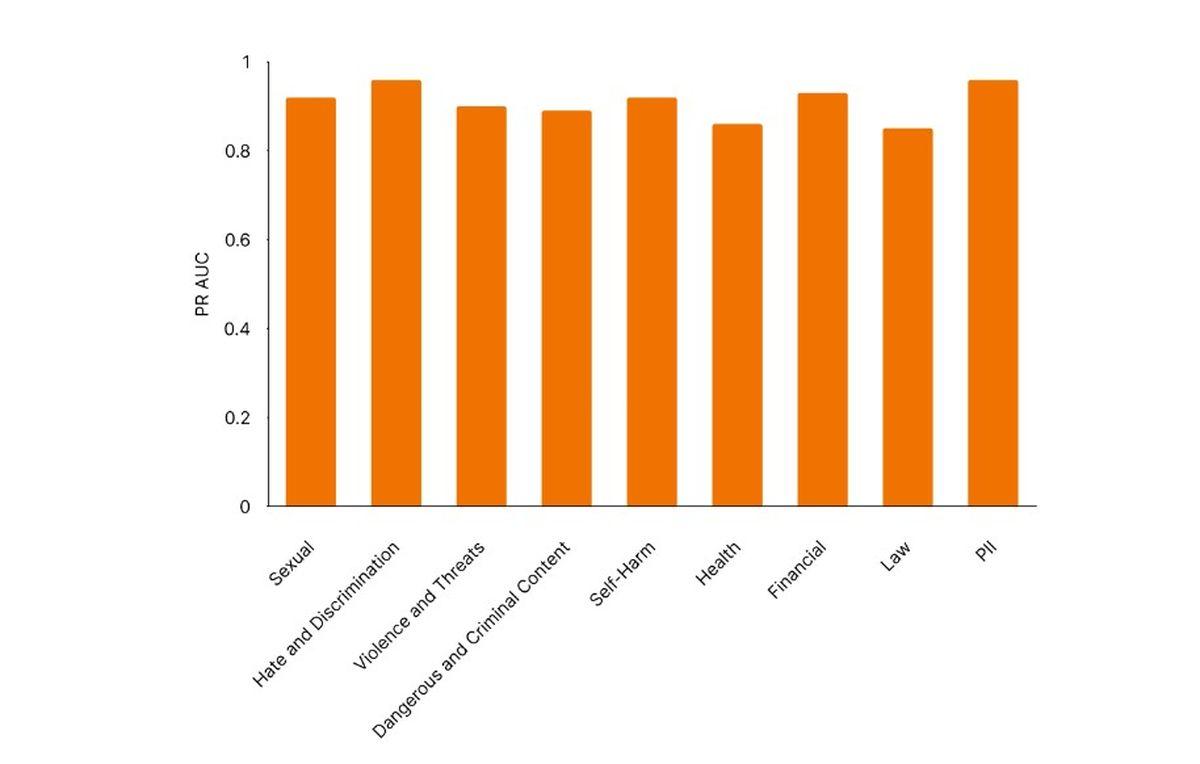

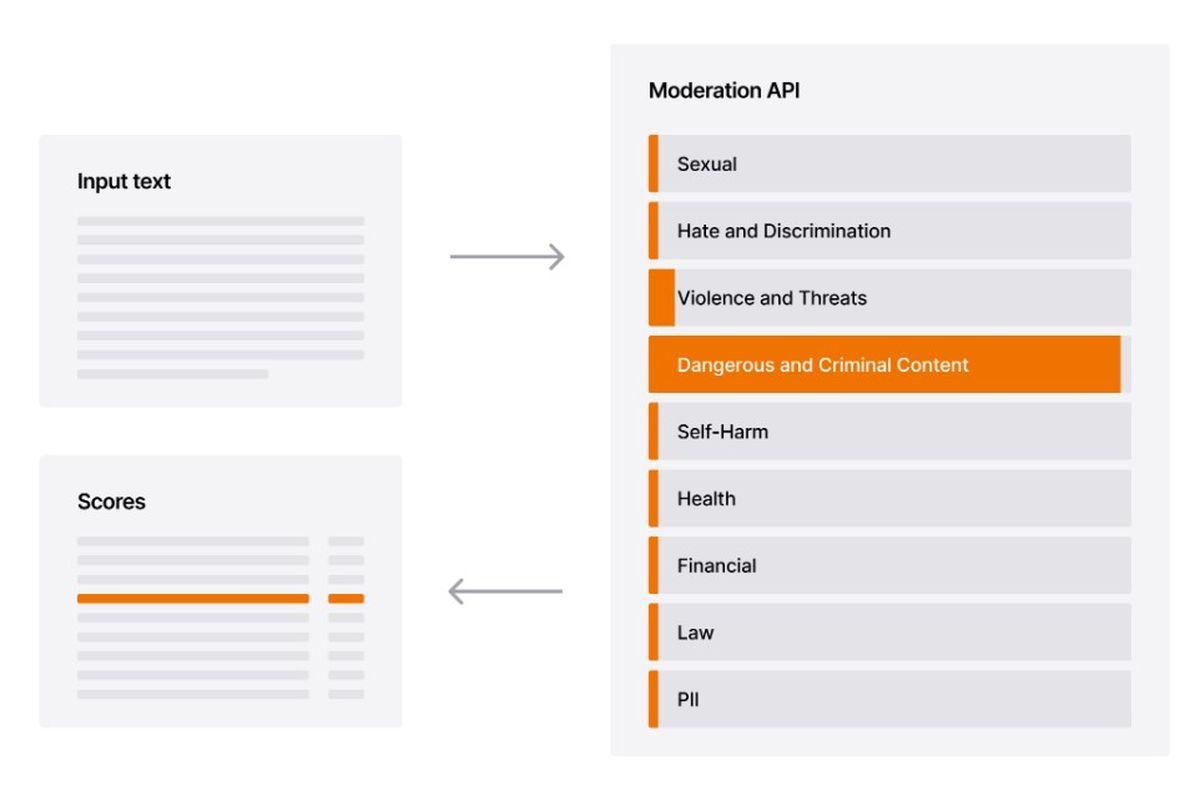

Mistral AI has released a new content moderation API. The API, which already powers Mistral’s Le Chat chatbot, is designed to classify undesirable text against a variety of safety standards and for specific applications. It is built on a fine-tuned language model called Ministral 8B, which can process multiple languages, including English, French, and German, and sorts content into nine categories: sexual content, hate and discrimination, violence and threats, dangerous or criminal activities, self-harm, health, financial, legal, and personally identifiable information (PII).

The moderation API is versatile, with applications for both raw text and conversational messages. “Over the past few months, we’ve seen growing enthusiasm across the industry and research community for new AI-based moderation systems, which can help make moderation more scalable and robust across applications,” Mistral shared in a recent blog post. The company describes its approach as “pragmatic,” aiming to address risks from model-generated harms like unqualified advice and PII leaks by applying nuanced safety guidelines.

Moderation API addresses bias concerns and customization needs

AI-driven content moderation systems hold potential for efficient, scalable content management, but they are not without limitations. Similar AI systems have historically struggled with bias, particularly when handling language styles associated with certain demographics. For example, studies show that language models often disproportionately flag phrases in African American Vernacular English (AAVE) as toxic and mistakenly label posts discussing disabilities as overly negative.

Mistral acknowledges the challenges of building an unbiased moderation tool, saying that its moderation model is highly accurate but still evolving. The company has yet to benchmark its API’s performance against established tools like Jigsaw’s Perspective API or OpenAI’s moderation API. Mistral aims to refine the tool through ongoing collaboration with customers and the research community: “We’re working with our customers to build and share scalable, lightweight, and customizable moderation tooling.”

Batch API reduces processing costs by 25%

Mistral also introduced a batch API designed for high-volume request handling. By processing these requests asynchronously, Mistral claims the batch API can reduce processing costs by 25%. This new feature aligns with similar batch-processing options offered by other tech companies like Anthropic, OpenAI, and Google, which aim to enhance efficiency for customers managing substantial data flows.
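To make the 25% figure concrete, here is a minimal Python sketch of the arithmetic. The function name `batch_cost` and the example spend are hypothetical; only the 25% discount comes from Mistral's claim, and actual pricing may be structured differently.

```python
# Discount claimed by Mistral for asynchronous batch processing.
BATCH_DISCOUNT = 0.25


def batch_cost(standard_cost: float, discount: float = BATCH_DISCOUNT) -> float:
    """Return the cost of a workload after applying the batch discount.

    `standard_cost` is what the same requests would cost if sent
    synchronously (a hypothetical input for this illustration).
    """
    if standard_cost < 0:
        raise ValueError("standard_cost must be non-negative")
    if not 0 <= discount <= 1:
        raise ValueError("discount must be between 0 and 1")
    return standard_cost * (1 - discount)


if __name__ == "__main__":
    # A workload that would cost 1000.0 synchronously (hypothetical figure).
    print(batch_cost(1000.0))  # 750.0
```

The trade-off is latency: asynchronous requests are not answered immediately, so the saving applies to workloads that can wait for results.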

Mistral’s content moderation API aims to be adaptable across a range of use cases and languages. Beyond English, French, and German, the model is trained to handle text in Arabic, Chinese, Italian, Japanese, Korean, Portuguese, Russian, and Spanish, which is meant to let it address undesirable content across different regions and linguistic contexts. The tool offers two endpoints, one for raw text and one for conversational contexts. The company also provides technical documentation and benchmarks for users to gauge the model’s performance.
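The difference between the two endpoints can be pictured as a difference in input shape. The Python sketch below is illustrative only: the field names (`model`, `input`, `role`, `content`) and the model identifier are assumptions rather than details given in this article, so Mistral's documentation remains the reference for the actual schema.

```python
import json

# Hypothetical model identifier; the article does not name one.
MODEL_NAME = "moderation-model-placeholder"


def build_raw_text_payload(texts):
    """Build a request body for the raw-text endpoint.

    Assumed shape: a flat list of strings, each classified independently.
    """
    return {"model": MODEL_NAME, "input": list(texts)}


def build_conversation_payload(messages):
    """Build a request body for the conversational endpoint.

    `messages` is a sequence of (role, content) pairs. Assumed shape:
    a list of role-tagged turns, so the classifier can judge the latest
    message in the context of the exchange that preceded it.
    """
    return {
        "model": MODEL_NAME,
        "input": [{"role": role, "content": content} for role, content in messages],
    }


if __name__ == "__main__":
    raw = build_raw_text_payload(["A standalone forum post."])
    chat = build_conversation_payload(
        [
            ("user", "Can you help me with my tax return?"),
            ("assistant", "Here is some general information."),
        ]
    )
    print(json.dumps(raw, indent=2))
    print(json.dumps(chat, indent=2))
```

Keeping the builders separate mirrors the split described above: a forum post or comment goes in as raw text, while a chatbot exchange keeps its turn structure.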

As Mistral continues to refine its tool, the API offers room for customization, allowing users to adjust parameters to match their own content safety standards.
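One plausible form of that customization is a set of per-category thresholds applied to the classifier's scores. The following sketch assumes the API returns a score between 0 and 1 for each category; the category keys, the `flagged_categories` helper, and the default threshold of 0.5 are all hypothetical and not taken from Mistral's documentation.

```python
# Hypothetical keys for the nine categories listed in the article.
CATEGORIES = (
    "sexual",
    "hate_and_discrimination",
    "violence_and_threats",
    "dangerous_and_criminal_content",
    "self_harm",
    "health",
    "financial",
    "legal",
    "pii",
)

DEFAULT_THRESHOLD = 0.5  # assumed default, for illustration only


def flagged_categories(scores, thresholds=None, default=DEFAULT_THRESHOLD):
    """Return the sorted list of categories whose score meets its threshold.

    `scores` maps category name to a score in [0, 1].
    `thresholds` optionally overrides the default for specific categories.
    """
    thresholds = thresholds or {}
    unknown = set(scores) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    return sorted(
        name
        for name, score in scores.items()
        if score >= thresholds.get(name, default)
    )


if __name__ == "__main__":
    scores = {"health": 0.72, "pii": 0.35, "violence_and_threats": 0.05}

    # Default policy: flag anything scoring 0.5 or higher.
    print(flagged_categories(scores))  # ['health']

    # A medical forum might tolerate health discussion but be strict on PII.
    custom = {"health": 0.9, "pii": 0.2}
    print(flagged_categories(scores, custom))  # ['pii']
```

The same scores produce different decisions under different policies, which is the practical meaning of adjusting parameters to a platform's own safety standards.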

Featured image credit: Mistral