Orion, OpenAI’s next-generation AI model, is hitting performance walls that expose the limits of traditional scaling approaches. According to sources familiar with the matter, Orion is delivering smaller performance gains than its predecessors did, prompting OpenAI to rethink its development strategy.

Early testing reveals plateauing improvements

Initial employee testing indicates that Orion reached GPT-4-level performance after completing only 20% of its training. That sounds impressive, but the early stages of AI training typically yield the most dramatic improvements. The remaining 80% of training is therefore likely to deliver smaller gains, suggesting that Orion may not surpass GPT-4 by a wide margin.
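
To make the diminishing-returns argument concrete, here is a minimal sketch that assumes training loss follows a toy power-law curve. The function name `fraction_of_gain`, the exponent `alpha`, and the step count are illustrative assumptions, not figures from OpenAI, and loss reduction is only a rough stand-in for benchmark performance.

```python
def fraction_of_gain(progress, total_steps=10_000, alpha=0.3):
    """Share of the total loss reduction achieved after `progress` of training.

    Assumes a toy power-law loss curve, loss(t) = t ** -alpha, measured from
    step 1 to `total_steps`. Both the curve shape and alpha are illustrative.
    """
    def loss(step):
        return step ** -alpha

    # Step index reached after the given fraction of training (at least 1).
    checkpoint = max(1, int(progress * total_steps))
    gained_so_far = loss(1) - loss(checkpoint)
    gained_in_total = loss(1) - loss(total_steps)
    return gained_so_far / gained_in_total


for progress in (0.2, 0.5, 1.0):
    share = fraction_of_gain(progress)
    print(f"{progress:.0%} of training -> {share:.1%} of the loss reduction")
```

Under these assumptions, most of the loss reduction lands in the first fifth of the run, which is why an early match with GPT-4 says little about how far the finished model will pull ahead.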

“Some researchers at the company believe Orion isn’t reliably better than its predecessor in handling certain tasks,” reported The Information. “Orion performs better at language tasks but may not outperform previous models at tasks such as coding, according to an OpenAI employee.”

The data scarcity dilemma

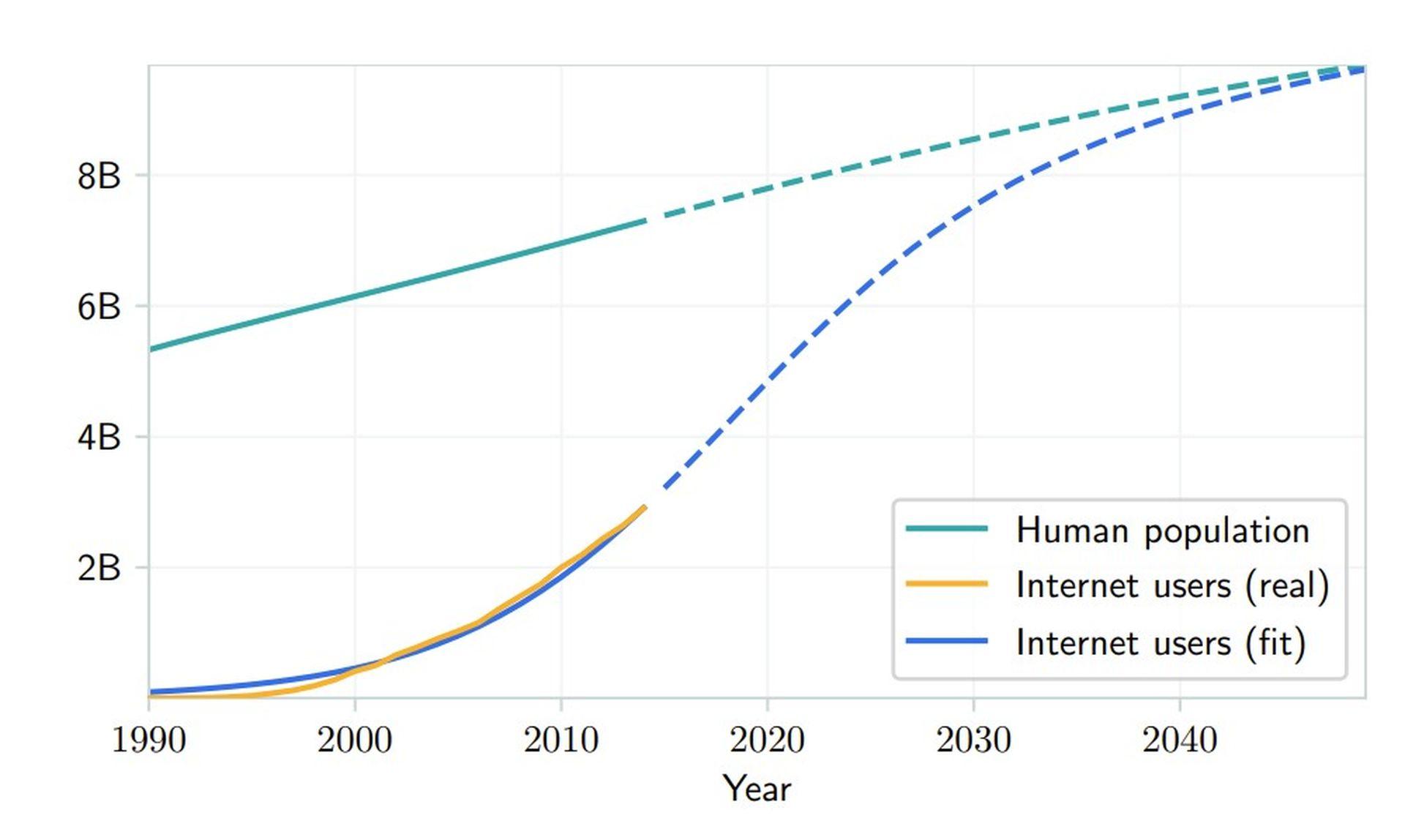

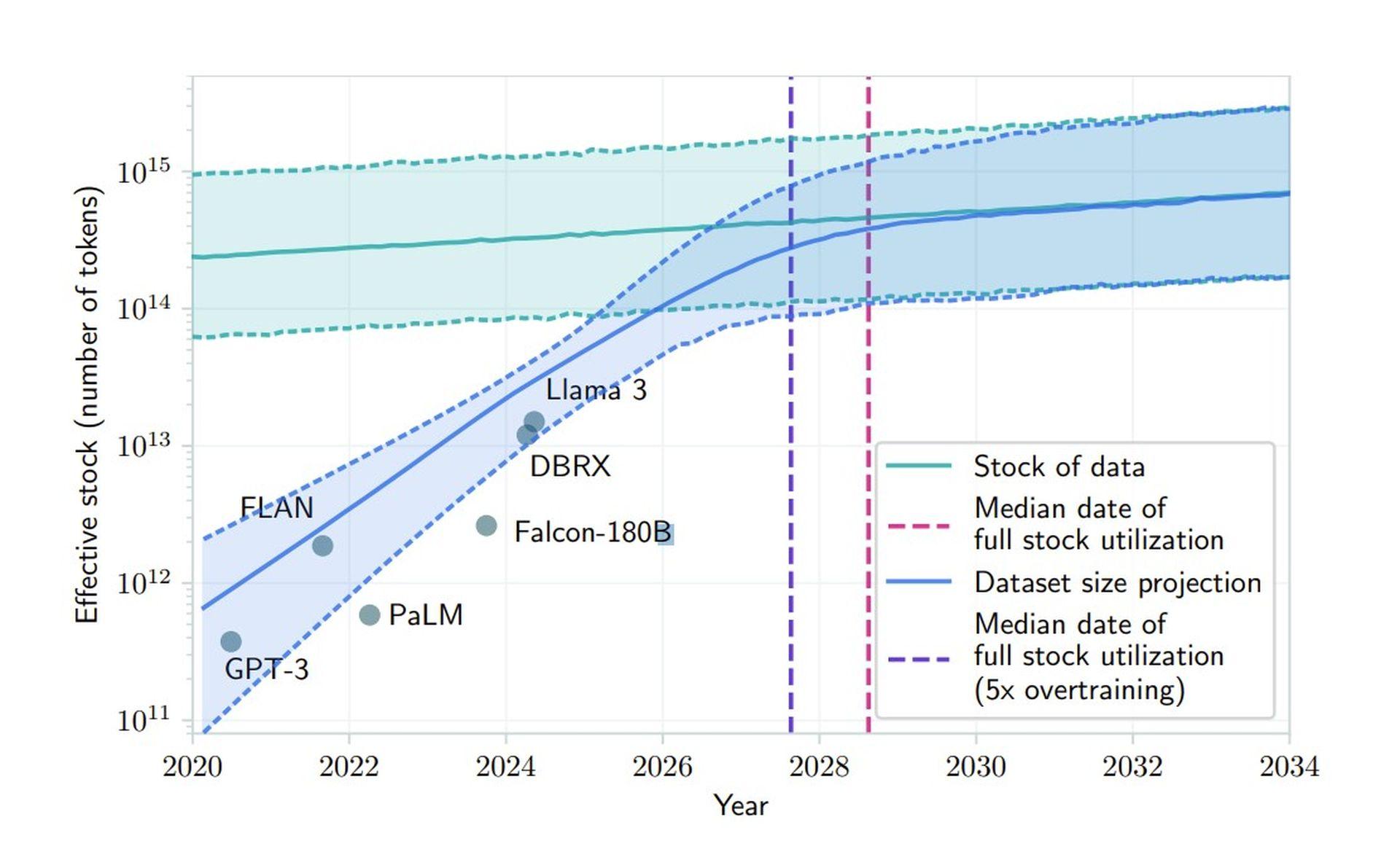

OpenAI’s challenges with Orion highlight a fundamental issue in the AI industry: the diminishing supply of high-quality training data. Research published in June predicts that AI companies will exhaust available public human-generated text data between 2026 and 2032. This scarcity marks a critical inflection point for traditional development approaches, forcing companies like OpenAI to explore alternative methods.

“Our findings indicate that current LLM development trends cannot be sustained through conventional data scaling alone,” the research paper states. This underscores the need for synthetic data generation, transfer learning, and the use of non-public data to enhance model performance.

OpenAI’s dual-track development strategy

To tackle these challenges, OpenAI is restructuring its approach by separating model development into two distinct tracks. The O-Series, codenamed Strawberry, focuses on reasoning capabilities and represents a new direction in model architecture. These models operate with significantly higher computational intensity and are explicitly designed for complex problem-solving tasks.

In parallel, the Orion models—or the GPT series—continue to evolve, concentrating on general language processing and communication tasks. OpenAI’s Chief Product Officer Kevin Weil confirmed this strategy during an AMA, stating, “It’s not either or, it’s both—better base models plus more strawberry scaling/inference time compute.”

Synthetic data: A double-edged sword

To address Orion’s data scarcity, OpenAI is exploring synthetic data generation. However, this solution introduces new complications in maintaining model quality and reliability. Training models on AI-generated content may create feedback loops that amplify subtle imperfections, a compounding effect that becomes increasingly difficult to detect and correct.

Researchers have found that relying heavily on synthetic data can cause models to degrade over time. OpenAI’s Foundations team is developing new filtering mechanisms to maintain data quality, implementing validation techniques to distinguish between high-quality and potentially problematic synthetic content. They’re also exploring hybrid training approaches that combine human and AI-generated content to maximize benefits while minimizing drawbacks.
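
The feedback loop and the hybrid remedy described above can be illustrated with a toy simulation. This is a sketch under loud assumptions: the "model" is just a Gaussian fitted to its training set, the "real" data is a standard normal distribution, and `simulate_generations` and `real_fraction` are hypothetical names. It does not represent OpenAI’s actual pipeline or filtering methods.

```python
import random
import statistics


def simulate_generations(generations, n_samples, real_fraction, seed=0):
    """Return the fitted standard deviation at each generation.

    Toy setup (all assumptions): real data is drawn from a standard normal,
    and each "model" is a Gaussian fitted to the previous training set.
    """
    rng = random.Random(seed)
    n_real = round(n_samples * real_fraction)
    n_synthetic = n_samples - n_real

    # Generation 0 trains on purely human ("real") data.
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    history = []
    for _ in range(generations):
        # "Train" the model: estimate mean and spread from the current data.
        mu = statistics.fmean(data)
        sigma = statistics.stdev(data)
        history.append(sigma)
        # Build the next training set from model output plus fresh real data.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_synthetic)]
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        data = synthetic + real
    return history


pure = simulate_generations(1000, 10, real_fraction=0.0)
hybrid = simulate_generations(1000, 10, real_fraction=0.5)
print(f"synthetic only: final spread {pure[-1]:.3g}")
print(f"half real data: final spread {hybrid[-1]:.3g}")
```

In this toy setting, training only on the previous generation’s output lets small estimation errors compound until the distribution’s spread collapses, while mixing in fresh real samples each round keeps it anchored. Real language models are far more complex, so this shows the mechanism rather than its magnitude.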

OpenAI Orion is still in its early stages, with significant development work ahead. CEO Sam Altman has indicated that it won’t be ready for deployment this year or next. This extended timeline could prove advantageous, allowing researchers to address current limitations and discover new methods for model enhancement.

Facing heightened expectations after a recent $6.6 billion funding round, OpenAI is reworking its development strategy to overcome these challenges. By confronting data scarcity directly, the company hopes Orion will make a substantial impact when it is eventually released.

Featured image credit: Jonathan Kemper/Unsplash