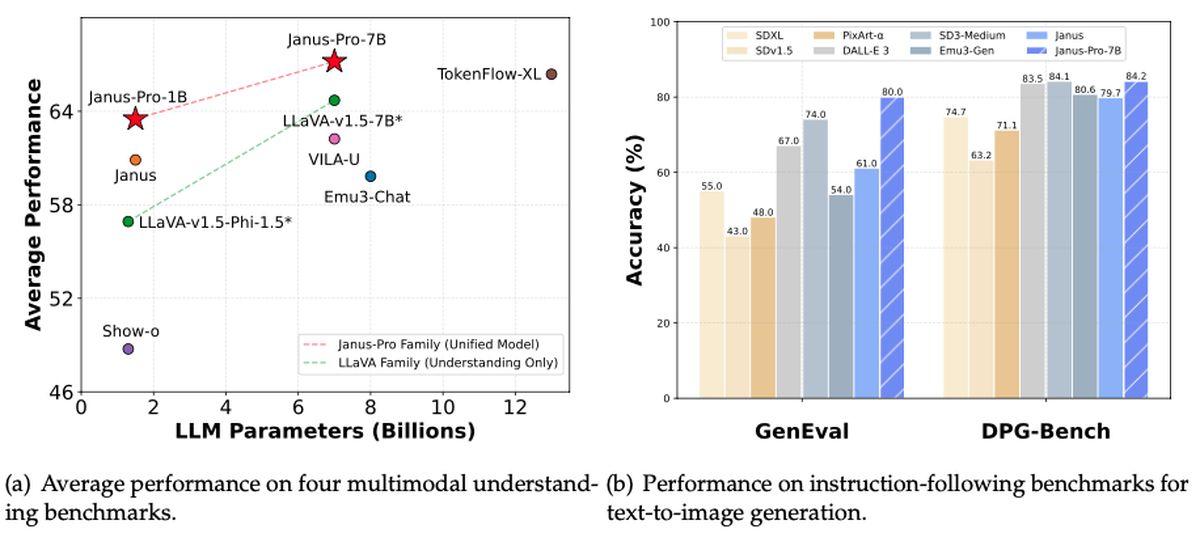

DeepSeek has unveiled yet another major contribution to the open-source AI landscape. This time, it's Janus-Pro-7B: a multimodal powerhouse capable of both understanding and generating images. According to Rowan Cheung, the new model outperforms OpenAI's DALL-E 3 and Stable Diffusion on benchmarks such as GenEval and DPG-Bench, and it is released in the same freely available spirit that made DeepSeek's earlier R1 model a viral sensation. Investors, meanwhile, are scrambling to make sense of the surge in AI breakthroughs, with NVIDIA's stock down more than 17% at midday.

Could Janus-Pro-7B be the next big disruptor in an already turbulent tech market?

What is DeepSeek Janus-Pro-7B?

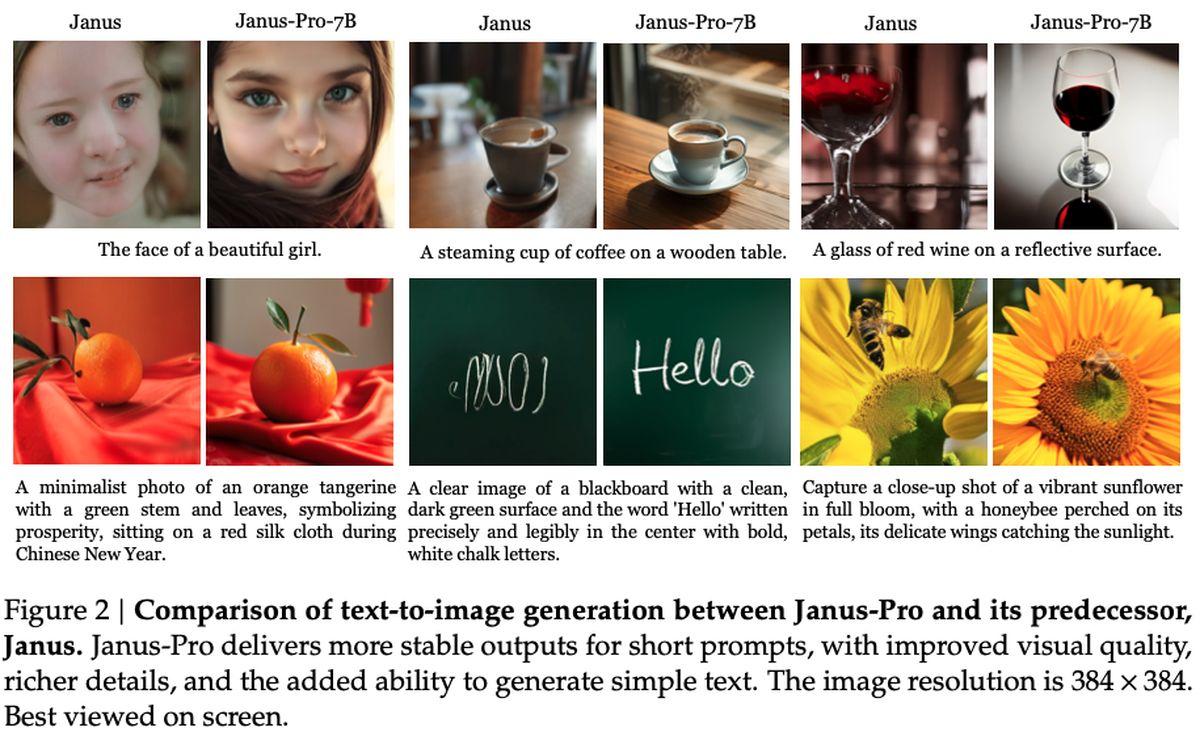

Under the hood, Janus-Pro-7B bridges visual understanding and generation within a single model. Its key design choice is to decouple visual encoding into two separate pathways: a SigLIP-L encoder handles understanding and processes 384 x 384 input images, while a separate image tokenizer handles generation, and both pathways feed one shared transformer. It matches, or even surpasses, many specialized models in the space, an achievement that is especially striking given that it also remains easy to customize and extend.
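To make the 384 x 384 input size concrete, the short sketch below counts how many patch embeddings the understanding encoder produces per image. It assumes the patch-16 SigLIP-L configuration named in DeepSeek's paper and ignores any special tokens the real pipeline may add around the image; `patch_grid` is a hypothetical helper for illustration only.

```python
# Hypothetical helper: count the patch embeddings a ViT-style encoder produces.
# Assumption: SigLIP-L with 16x16-pixel patches on a square 384 x 384 input.

from typing import Tuple


def patch_grid(image_size: int, patch_size: int) -> Tuple[int, int]:
    """Return (patches_per_side, total_patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size  # patches along one edge
    return per_side, per_side * per_side


if __name__ == "__main__":
    per_side, total = patch_grid(image_size=384, patch_size=16)
    print(f"{per_side} x {per_side} grid -> {total} patch embeddings")
```

With these assumptions, each image becomes a 24 x 24 grid, that is 576 patch embeddings, before being handed to the language model.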

Analysts point to DeepSeek’s consistent philosophy: keep it open-source, stay privacy-first, and undercut subscription-based rivals. Janus-Pro-7B seems to deliver on all three fronts, setting high performance marks while preserving the accessibility that drew fans to DeepSeek-R1’s offline capabilities.

In purely factual terms, Janus-Pro-7B's code is released under the permissive MIT License, while use of the model itself is subject to DeepSeek's own model license. The code is published in a GitHub repository that downstream projects can build on, and the image-generation tokenizer uses a 16× downsampling rate. Current indicators suggest that Janus-Pro-7B's arrival may spark fresh competition among AI developers, though only time will tell how this latest free offering will affect the AI landscape.
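What does 16× downsampling buy in practice? The back-of-the-envelope sketch below assumes a square 384 x 384 RGB output and the 16,384-entry codebook described in DeepSeek's paper. It computes the size of the token grid the model must predict and compares the bits needed to index those tokens with the bits in the raw 8-bit pixels. `generation_token_budget` is a hypothetical helper, and the ratio ignores the decoder's own parameters.

```python
import math
from typing import Any, Dict


def generation_token_budget(image_size: int, downsample: int, codebook_size: int) -> Dict[str, Any]:
    """Hypothetical helper: token count and rough compression for a VQ-style tokenizer."""
    if image_size % downsample != 0:
        raise ValueError("image_size must be divisible by downsample")
    side = image_size // downsample             # latent grid side length
    num_tokens = side * side                    # one discrete code per grid cell
    bits_per_token = math.log2(codebook_size)   # bits needed to index one codebook entry
    raw_bits = image_size * image_size * 3 * 8  # 8-bit RGB pixels
    token_bits = num_tokens * bits_per_token
    return {
        "grid": (side, side),
        "num_tokens": num_tokens,
        "bits_per_token": bits_per_token,
        "compression_ratio": raw_bits / token_bits,
    }


if __name__ == "__main__":
    budget = generation_token_budget(image_size=384, downsample=16, codebook_size=16_384)
    print(budget)
```

Under these assumptions the model predicts 576 tokens of 14 bits each, roughly 439 times fewer bits than the raw pixels. This is why generation can be treated as ordinary next-token prediction, with a decoder responsible for turning the tokens back into pixels.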


How does it work?

As detailed in the research paper published by DeepSeek, the understanding pathway uses a SigLIP-Large-Patch16-384 encoder, which splits each 384×384 image into 16×16-pixel patches (a 24×24 grid of 576 patches). This preserves fine-grained details and helps the system interpret images more accurately. On the generation side, Janus-Pro uses a codebook of 16,384 entries to represent images as discrete tokens at a 16× downsampled scale, enabling efficient reconstructions that rival, if not surpass, traditional diffusion models in quality.
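A codebook tokenizer turns continuous image features into discrete token IDs by replacing each latent vector with its nearest codebook entry. The toy sketch below shows that standard vector-quantization step. The 4-entry, 2-D codebook is a hypothetical stand-in for Janus-Pro's 16,384-entry codebook, and the real tokenizer's encoder, decoder, and embedding sizes are not modeled.

```python
from typing import List, Sequence

Vector = Sequence[float]


def nearest_code(vec: Vector, codebook: Sequence[Vector]) -> int:
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    best_idx, best_dist = -1, float("inf")
    for idx, code in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(vec, code))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx


def quantize(latents: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Map each latent vector to a discrete token ID."""
    return [nearest_code(v, codebook) for v in latents]


def dequantize(ids: Sequence[int], codebook: Sequence[Vector]) -> List[List[float]]:
    """Look token IDs back up in the codebook (what a decoder would start from)."""
    return [list(codebook[i]) for i in ids]


if __name__ == "__main__":
    # Hypothetical toy codebook and latents, for illustration only.
    codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
    latents = [(0.1, -0.2), (0.9, 0.2), (0.2, 0.8), (0.7, 0.9)]
    ids = quantize(latents, codebook)
    print(ids)  # -> [0, 1, 2, 3]
    print(dequantize(ids, codebook))
```

Generating an image then amounts to predicting a sequence of such IDs and decoding the looked-up codebook vectors back into pixels.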

Two MLP (multi-layer perceptron) adaptors connect these pathways to the shared language model: one projects the understanding encoder's features into the model's input space, and the other does the same for the generation tokenizer's codes. During training, the model sees a mix of image and text data, allowing it to learn both how to interpret visual scenes and how to produce its own images. Training takes roughly 7 to 14 days on large-scale GPU clusters (for both the 1.5B and 7B parameter versions), with performance tested on benchmarks such as GQA (for visual comprehension) and GenEval and DPG-Bench (for image creation). The result is a versatile framework that excels at multimodal tasks, thanks to its specialized yet cohesive architecture.
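The role of the two adaptors can be illustrated with a toy, dependency-free sketch. Two independent MLPs take inputs of different widths, one from each pathway, and project them to the same width so a single transformer can consume either. The two-layer shape, GELU activation, and all dimensions here are assumptions for illustration. They are not Janus-Pro's actual configuration, and the class is hypothetical.

```python
import math
import random
from typing import List

Vec = List[float]


def gelu(x: float) -> float:
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


class MLPAdaptor:
    """Hypothetical two-layer MLP: in_dim -> hidden_dim -> out_dim, GELU in between."""

    def __init__(self, in_dim: int, hidden_dim: int, out_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)  # deterministic toy weights
        self.w1 = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [[rng.gauss(0.0, 0.02) for _ in range(hidden_dim)] for _ in range(out_dim)]
        self.b2 = [0.0] * out_dim

    @staticmethod
    def _linear(w: List[Vec], b: Vec, x: Vec) -> Vec:
        # y = W x + b, one output value per row of W
        return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]

    def __call__(self, x: Vec) -> Vec:
        hidden = [gelu(v) for v in self._linear(self.w1, self.b1, x)]
        return self._linear(self.w2, self.b2, hidden)


# Toy widths (assumptions); the real encoder and LLM widths are far larger.
LLM_DIM = 8          # shared transformer input width
UND_FEATURE_DIM = 6  # stand-in for the understanding encoder's feature size
GEN_EMBED_DIM = 4    # stand-in for the generation codebook's embedding size

und_adaptor = MLPAdaptor(UND_FEATURE_DIM, 16, LLM_DIM, seed=1)
gen_adaptor = MLPAdaptor(GEN_EMBED_DIM, 16, LLM_DIM, seed=2)

if __name__ == "__main__":
    patch_feature = [0.1] * UND_FEATURE_DIM  # one patch from the understanding path
    code_embedding = [0.5] * GEN_EMBED_DIM   # one code embedding from the generation path
    print(len(und_adaptor(patch_feature)), len(gen_adaptor(code_embedding)))  # -> 8 8
```

Keeping the adaptors separate lets each pathway use whatever representation suits its task while the transformer always sees inputs of one fixed width.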

How to use DeepSeek Janus-Pro-7B?

Getting started with Janus-Pro-7B is as simple as heading to its official GitHub repository, cloning or downloading the code, and installing the necessary dependencies. The repository includes a README that walks you through setting up a Python environment, pulling the model weights, and running sample scripts. Depending on your hardware, you can run in CPU-only mode or use GPU acceleration for much faster performance. Either way, the installation process is straightforward, thanks to well-documented prerequisites and step-by-step instructions.
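If you want your own scripts to choose between CPU and GPU automatically, a small helper like the one below works whether or not PyTorch is installed. It relies only on PyTorch's standard `torch.cuda.is_available()`. The helper names and the dtype preference (bfloat16 on GPU, float32 on CPU) are our own assumptions, not something the repository prescribes, so adapt them to the README's loading code.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch is installed and sees a CUDA GPU, else "cpu"."""
    try:
        import torch  # optional: only needed to probe for a GPU
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def pick_dtype_name(device: str) -> str:
    # Assumption: bfloat16 on GPU to save memory, float32 on CPU for broad support.
    return "bfloat16" if device == "cuda" else "float32"


if __name__ == "__main__":
    device = pick_device()
    print(device, pick_dtype_name(device))
```

Keeping the probe in one place means the rest of a script can simply move the model to whatever `pick_device()` returns.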

Once everything is up and running, you can feed in prompts for text generation or provide image inputs for the model’s unique multimodal capabilities. Sample notebooks in the repo demonstrate how to generate creative outputs, apply advanced image transformations, or explore “visual Q&A” scenarios. For more advanced users, the repository’s modular design means you can tweak the underlying layers, plug in your own datasets, or even stack the model alongside other DeepSeek releases like R1.
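For a "visual Q&A" run, the repository's examples pass the model a short conversation in which the user turn contains an image placeholder followed by the question. The sketch below builds such a structure. The role tags `<|User|>` and `<|Assistant|>` and the `<image_placeholder>` token are modeled on the README's examples as we recall them; treat them as assumptions and check them against your checkout. `build_vqa_conversation` is a hypothetical helper, and the repository's own processor is what would turn this structure into model inputs.

```python
from typing import Any, Dict, List


def build_vqa_conversation(question: str, image_path: str) -> List[Dict[str, Any]]:
    """Hypothetical helper: one user turn with an image, plus an empty assistant turn."""
    # Assumed format; verify role names and the placeholder token against the repo README.
    return [
        {
            "role": "<|User|>",
            "content": f"<image_placeholder>\n{question}",
            "images": [image_path],
        },
        {"role": "<|Assistant|>", "content": ""},  # the model fills in this turn
    ]


if __name__ == "__main__":
    conversation = build_vqa_conversation("What is shown in this picture?", "example.jpg")
    print(conversation)
```

Leaving the assistant turn empty signals where the model should start generating its answer.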