Max Kanaskar is an Associate for Strategy& (formerly Booz & Company). He has extensive experience in IT capability development and data management, and has advised companies on transformational data management programs, including data strategy, management and governance. He’s a regular commentator on big data and technology. His views can be found here.

In the introductory installment of the Big Data Technology Series, we looked at some of the drivers behind recent innovation in the database management and analytic platform market. We also looked at a high-level scheme for classifying the plethora of technologies and solutions currently on the market into four distinct environments. Before we get into the details of this technology landscape, it is worthwhile to take a step back and understand a bit of history. As Winston Churchill once said, “The farther you can look into the past, the farther you can see into the future.” Once we understand the history, we will shift our focus to the characteristics, needs and current technology trends in big data management in a bit more detail. This will set us up to understand the core capabilities and modules of a typical big data platform, and the key vendor offerings in this space.

The story of big data technology evolution has three major threads. First, database management technology has continued to evolve and improve over time, both from a hardware perspective and from a logical modeling perspective. A number of innovations in the big data landscape are innovations in database management platforms, so it is important to understand how databases originated and how they have evolved.

The second thread is the development of business intelligence and analytic platforms, which have their origins in the decision support systems of the past. The concepts of decision support systems and executive information systems originated in management circles, and the technology to fully realize the vision emerged only in the 1990s with the rise of modern data warehousing and business intelligence platforms. Such platforms form an integral part of the big data landscape and have continued to develop, offering analytic capabilities that range from strategic, long-range descriptive analysis to operational, real-time predictive and prescriptive analysis.

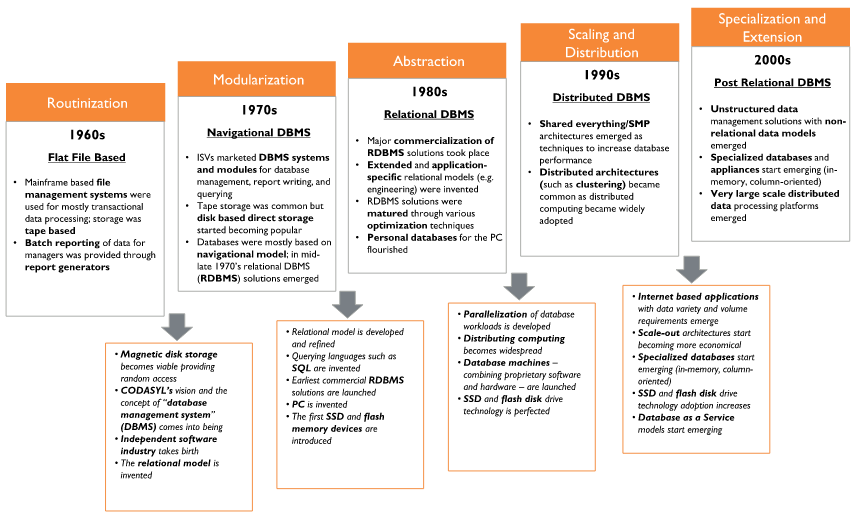

The third thread is the set of technologies and packages for statistical processing, which originated in such fields as agricultural research and the social sciences, and have slowly made their way into commercial business applications. Statistical processing of big data sets is becoming increasingly feasible due to the falling cost of technology, and forms an important trend in the big data evolution story. These three threads are increasingly intertwined, at least from a logical perspective if not from a physical one. We will examine how each of these threads has evolved over time, starting with the database management platform (see the graphic below).

In the 1960s, mainframe computer programs were required to manage batch transactional data processing. Report generators and file processing software were the “database management systems” of the early 1960s, handling batch-mode file manipulation and reading tasks offloaded from the expensive mainframe computers. Data in those days was stored as flat files on slow, magnetic-tape-based systems that provided only serial data access. Report generators and file processing software routinized data manipulation and other tasks; however, application programs still had intimate knowledge of how data was structured at the physical level.

Because of this strong coupling between programming logic and data design, it was extremely cumbersome to change and extend programs. Each program was written to manage its own data, and data sharing across programs or modules was very limited. As the data management needs of programs increased, it became increasingly burdensome to manage and administer the data subsystem. The emergence of random-access magnetic disk drives further complicated the management and administration of data. This multiplying complexity led to the arrival of more sophisticated data subsystems such as General Electric’s IDS and IBM’s IMS around the mid-1960s. The concept of a “database management system” had not yet been defined, although solutions such as IDS and IMS were early embodiments of it.

The concept of the database management system (DBMS) was outlined by CODASYL (an industry consortium) in the late 1960s. It envisioned extending the existing file management and data subsystems into an integrated platform that provided a single corporate information store, a “data base”, in support of Management Information System (MIS) capabilities for online, integrated data creation, manipulation and reporting. CODASYL’s conceptual outline of the DBMS was a major influence on the independent DBMS software industry that evolved in the subsequent decade. That industry developed as a result of two events in the mid-to-late 1960s: IBM’s introduction of System/360, which standardized the operating system software across IBM product lines, and IBM’s decision to unbundle software from its hardware.

CODASYL’s vision of the DBMS was based on the so-called “navigational model”, in which data elements were linked to one another as in a linked list, and programs located data by following those links. As the independent software industry developed through the 1970s, several DBMS solutions based on the CODASYL specifications arrived on the market. CODASYL’s model had its advantages, but it was extremely complex to develop and manage. These drawbacks, and specifically the lack of an effective way to search for data elements, prompted E. F. Codd, an IBM researcher, to develop an alternative data model called the “relational model” in the early 1970s. The relational model represented data in a very different way, and paved the way for higher-level abstraction in data manipulation and querying. While the CODASYL DBMS market was in full bloom in the 1970s, a number of upstart solutions based on the relational model, such as IBM’s System R and Ingres, started appearing in parallel.
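To make the contrast between the two models concrete, here is a minimal Python sketch. It is an illustration only, not how any CODASYL product or System R was implemented: the record classes, the customer/order schema and all function names are hypothetical, and SQLite stands in for a relational engine. In the navigational style the program walks links between records itself; in the relational style it states what it wants and leaves the access path to the engine.

```python
import sqlite3
from dataclasses import dataclass
from typing import List, Optional


# Navigational style (hypothetical records): each record holds an explicit
# link to the next one, and the program finds data by following the links.
@dataclass
class OrderRecord:
    order_id: int
    item: str
    next_order: Optional["OrderRecord"] = None  # link to the next order


@dataclass
class CustomerRecord:
    name: str
    first_order: Optional[OrderRecord] = None  # head of the order chain


def build_navigational_records() -> CustomerRecord:
    second = OrderRecord(11, "disk")
    first = OrderRecord(10, "tape", next_order=second)
    return CustomerRecord("Acme", first_order=first)


def items_navigational(customer: CustomerRecord) -> List[str]:
    """Walk the chain of orders one record at a time."""
    items = []
    current = customer.first_order
    while current is not None:
        items.append(current.item)
        current = current.next_order
    return items


# Relational style: data lives in tables, and a declarative query describes
# the result; the engine decides how to locate the matching rows.
def build_relational_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT)"
    )
    conn.execute("INSERT INTO customers VALUES (1, 'Acme')")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(10, 1, "tape"), (11, 1, "disk")],
    )
    return conn


def items_relational(conn: sqlite3.Connection, customer_name: str) -> List[str]:
    rows = conn.execute(
        "SELECT o.item FROM orders AS o "
        "JOIN customers AS c ON o.customer_id = c.id "
        "WHERE c.name = ? ORDER BY o.id",
        (customer_name,),
    ).fetchall()
    return [row[0] for row in rows]
```

Both functions return the same items for the same customer. The difference is that a change to how orders are chained would break `items_navigational`, whereas the query in `items_relational` only depends on the logical table structure.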

The relational model quickly became popular, and a number of independent relational database vendors appeared on the market in the mid-to-late 1970s, notable among them the future Oracle Corporation. Throughout the 1970s and 1980s, the relational database model was successively developed and refined through advances in query languages, database indexing techniques, storage management, and query optimization and execution management. As the relational model matured, the market witnessed major commercialization of relational database management systems (RDBMS). Thanks to their flexibility and ease of use, RDBMS solutions started challenging the market dominance of navigational DBMS solutions, and had become serious contenders by the mid-to-late 1980s. The 1980s also saw the rise of the PC and personal databases, for which the relational model was perfectly suited.

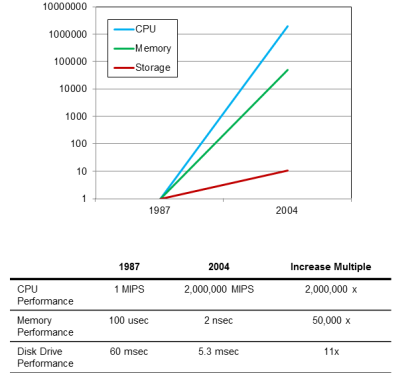

The 1980s was an important period in the history of the database management system for another reason: it marked the beginning of the “performance gap”, a sizeable spread between the speed and processing capacity of the CPU and that of database storage, mainly disk. Moore’s law has driven exponential performance increases in processor chips for decades, giving rise to ever more powerful CPUs. The magnetic disk drive, however, has lagged in performance, so much so that disk is still several orders of magnitude slower than a modern-day CPU (see graphic below).

This performance gap became prominent in the 1980s, and it had a significant impact on the design and architecture of database management platforms in the 1980s and 1990s. To bridge the gap and to maximize the use of expensive CPU resources, database management systems had to develop intricate management and optimization techniques around caching, disk/memory management and data movement. As powerful computers became cheap in the 1990s, distributed computing across commodity machines started becoming popular. The Internet also entered the business mainstream in the 1990s, increasing the data needs of businesses. All of this imposed very high performance requirements on RDBMS platforms and gave rise in the 1990s to various architectures for enhancing RDBMS performance, such as vertical database scaling as part of SMP designs and database clustering. The 1990s also witnessed the rise of alternative data modeling techniques such as the object-oriented data model, which gained some acceptance but not enough adoption to challenge the hegemony of the relational model.

Increasing complexity led to increasing headaches and cost in the implementation and management of the modern database management system. Vendors responded by innovating in product development and configuration, introducing database appliances that allowed customers to buy pre-configured solutions requiring minimal setup. The 2000s witnessed the emergence of new players in the information-based economy, notably Google and Amazon. The data management needs of these new data-driven companies led to the development of so-called “post-relational database models” such as key-value stores and document-oriented databases. New data management architectures involving horizontal scaling (massively parallel processing, or MPP) were invented, along with supporting innovations in the logical data management layer (in the form of Google’s MapReduce). The falling cost of hardware allowed database vendors to bring to market a range of new database appliances, such as those using in-memory computing and flash storage. Finally, cloud computing technologies enabled vendors to bring to market new offerings around Database as a Service.
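For readers unfamiliar with MapReduce, the following is a minimal single-process Python sketch of the programming model, using the customary word-count example. It is an illustration under stated assumptions, not Google’s implementation: the function names are hypothetical, and everything that makes a real system scale (partitioning inputs across machines, shuffling data over the network, handling failures) is deliberately left out.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple


def map_word_count(document: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield (word, 1)


def reduce_word_count(word: str, counts: List[int]) -> int:
    """Reduce step: combine all values emitted for one key."""
    return sum(counts)


def run_map_reduce(
    inputs: Iterable[str],
    mapper: Callable[[str], Iterable[Tuple[str, int]]],
    reducer: Callable[[str, List[int]], int],
) -> Dict[str, int]:
    """Run the map, group-by-key and reduce phases in a single process.

    In a distributed system, each phase would run in parallel on many
    machines; here the phases simply run one after another.
    """
    grouped: Dict[str, List[int]] = defaultdict(list)
    for record in inputs:
        for key, value in mapper(record):
            grouped[key].append(value)  # group-by-key ("shuffle") phase
    return {key: reducer(key, values) for key, values in grouped.items()}
```

Calling `run_map_reduce(["big data", "big databases"], map_word_count, reduce_word_count)` counts each word across both documents. Because the mapper sees one record at a time and the reducer sees one key at a time, the work can in principle be spread horizontally across many machines.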

The database management platform continues to evolve as the cost of technology falls further, and as the business needs around the management of data become more varied and complex. We have not yet seen a replacement for the hugely successful relational model, however. Increasingly, the relational model will be complemented by alternative data management models, as guided by specific needs and opportunities. The database management technology market has evolved from a “one size fits all” state to one with an “assorted mix” of tools and techniques that are best of breed and fit for purpose. We will surely continue to witness yet more interesting developments in this important market.

In the next installment of the Big Data Technologies Series, we will examine the second thread in the story of big data technology evolution: business intelligence and analytic platforms that provide information analysis and information delivery capabilities. We will start with the origins of decision support systems, and see how technology has evolved to provide such capabilities as alerting, dashboarding and analytical reporting.

This article was first posted here

(Image Credit: paul bica)