Since Microsoft began working with deep learning neural networks in 2009, we’ve seen huge improvements in the way algorithms can detect our language and dialogue. IBM have continued to pour money and resources into the development of Watson; Apple have moved the development of Siri in-house, to improve its NLP capabilities; we’ll soon see a version of Skype which can translate the spoken word on the fly.

But Francisco Webber, co-founder of cortical.io, noticed a grey area in the realm of natural language processing: most of the field relies heavily on statistical analysis. “The problem with statistics”, he says, “is that it’s always right in principle but it’s not right in the sense that you can’t use it to create an NLP performance that is even close to what a human’s doing.”

“Normally in science, you use statistics if you don’t understand or don’t know the actual function,” he continues. “Then you observe and create statistics and it lets you make good guesses.”

Webber saw similarities between the state of NLP today and the history of quantum physics. “In the beginning, quantum physics was an extremely statistical science,” he explains. “But since they have found out quarks, and up-spin & down-spin particles, they have become pretty good in predicting how this whole model basically works. I think this is what we have been lacking in NLP, and I think for the main reason for this is because we did not come up with a proper representation of data that would allow us to do this.”

So, Webber embarked on an academic journey to find a proper way of representing and modelling language, one which would take him years. Ultimately, he was drawn to the work of Jeff Hawkins, the Palm Pilot inventor turned neuroscientist; it was this line of inquiry which would turn out to be his “Eureka” moment. At Numenta, Hawkins has been working to understand brain function and to develop algorithms which mimic these processes, such as hierarchical temporal memory, which we reported on back in May, and fixed-sparsity distributed representations.

Sparse distributed representation, as Webber explains, is “the language in which the brain encodes information if it wants to store it. This gave me the theoretical breakthrough in saying ‘Okay if all data that is processed in the brain has to be in the SDR format, what we need to do is convert language into this SDR format’. The fundamental property of SDRs is that they are large binary vectors which are only very sparsely filled and that the set bits, if you want, are distributed over the space that is represented by the vector.”
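To make Webber's description concrete, here is a minimal Python sketch of an SDR as a large, sparsely filled binary vector. The vector length, the number of set bits and the function names are illustrative assumptions, not cortical.io's actual parameters or code; the bits here are also placed at random, whereas in a real semantic fingerprint each position would carry meaning.

```python
import random

# Hypothetical sizes chosen for illustration only.
SDR_SIZE = 16_384    # total number of bit positions in the vector
ACTIVE_BITS = 328    # number of positions set to 1 (about 2% of the vector)


def random_sdr(rng, size=SDR_SIZE, active=ACTIVE_BITS):
    """Return an SDR stored compactly as the set of positions whose bit is 1."""
    return frozenset(rng.sample(range(size), active))


def to_dense(sdr, size=SDR_SIZE):
    """Expand the compact form into the full binary vector of 0s and 1s."""
    bits = [0] * size
    for position in sdr:
        bits[position] = 1
    return bits


def sparsity(sdr, size=SDR_SIZE):
    """Fraction of the vector's positions that are set."""
    return len(sdr) / size


rng = random.Random(42)   # seeded so the example is repeatable
sdr = random_sdr(rng)
dense = to_dense(sdr)
print(f"{len(dense)} positions, {sum(dense)} set, sparsity {sparsity(sdr):.1%}")
```

Storing only the positions of the set bits is a common way to handle vectors this sparse, since the vast majority of entries are zero.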

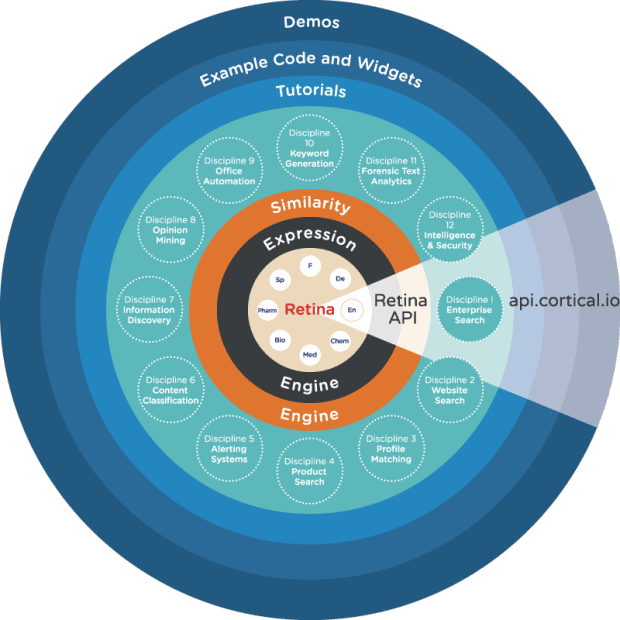

One of the key properties of SDRs is that words with similar meanings have similar SDRs, so the model offers a way of mapping words which semantically resemble each other. Webber and his team set about using sparse distributed representations to create “semantic fingerprints”, two-dimensional vectors which represent 16,000 semantic features of words.

To build these semantic maps, Webber and co. put their algorithm to work unsupervised on Wikipedia. “It turned out,” Webber remarks, “that if you just convert words into these SDRs, there are plenty of things—plenty of problems I would even say—that we have faced typically in NLP that we can now solve more easily, even without using any neural network back end.”

One of the findings was that you can semantically fingerprint documents, as well as words. “By using the rule of union, we can create a semantic fingerprint of a document by adding up all the fingerprints of the constituent word fingerprints. What’s great is that the document fingerprint behaves in the same way as the word fingerprint. So you can also compare two documents on how similar they are semantically by comparing the two fingerprints and by calculating the overlap between the two.”
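The union-and-overlap idea Webber describes can be sketched in a few lines of Python. The tiny hand-made word fingerprints below are invented purely for illustration (real fingerprints would be far larger and learned from text), and the function names are hypothetical rather than part of any cortical.io API.

```python
# Toy word fingerprints: each is the set of positions whose bit is 1.
# These values are made up for the example.
WORD_FINGERPRINTS = {
    "engine":  frozenset({1, 2, 3}),
    "race":    frozenset({2, 3, 4}),
    "driver":  frozenset({3, 4, 5}),
    "circuit": frozenset({4, 5, 6}),
    "recipe":  frozenset({20, 21, 22}),
    "oven":    frozenset({21, 22, 23}),
}


def document_fingerprint(words, word_fps=WORD_FINGERPRINTS):
    """Union the fingerprints of every known word in the document."""
    fingerprint = set()
    for word in words:
        # Words without a fingerprint contribute nothing.
        fingerprint |= word_fps.get(word, frozenset())
    return frozenset(fingerprint)


def overlap(fp_a, fp_b):
    """Number of set bits the two fingerprints have in common."""
    return len(fp_a & fp_b)


doc_a = document_fingerprint(["race", "driver"])
doc_b = document_fingerprint(["engine", "circuit"])
doc_c = document_fingerprint(["recipe", "oven"])

print(overlap(doc_a, doc_b))  # motorsport vs. motorsport: shared bits
print(overlap(doc_a, doc_c))  # motorsport vs. cooking: no shared bits
```

Because a document fingerprint has the same form as a word fingerprint, the same `overlap` function compares words with words, documents with documents, or a word with a document. A plain union grows denser as documents get longer, so a practical system would presumably need some way of keeping the result sparse; that step is left out of this sketch.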

“And there have been even more things that we found out. You can disambiguate terms computationally instead of using a thesaurus or dictionary by simply analyzing the fingerprint and using the similarity function. Recursively you can find all the meanings that are captured within a word. Of course this is based on the training data but as in our case, we have used Wikipedia. We can claim that we have found the more general ambiguities that you can find with words.”
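Webber does not spell out the disambiguation procedure, so the following Python sketch is only one plausible reading of it: find the term most similar to the ambiguous word, record it as a sense, strip that term's bits from the fingerprint, and repeat on whatever is left. The loop is written iteratively rather than recursively, and the toy fingerprints, the `min_overlap` threshold and the function names are all assumptions made for illustration.

```python
def overlap(fp_a, fp_b):
    """Number of set bits the two fingerprints have in common."""
    return len(fp_a & fp_b)


def find_senses(word_fp, vocabulary, min_overlap=2):
    """Peel off one sense at a time until too little of the fingerprint remains."""
    remaining = set(word_fp)
    senses = []
    while remaining:
        best_term, best_score = None, 0
        for term, fp in vocabulary.items():
            if term in senses:
                continue
            score = overlap(remaining, fp)
            if score > best_score:
                best_term, best_score = term, score
        # Stop when nothing in the vocabulary explains the leftover bits well.
        if best_term is None or best_score < min_overlap:
            break
        senses.append(best_term)
        remaining -= vocabulary[best_term]  # remove the bits this sense accounts for
    return senses


# Invented toy data: "apple" mixes bits from a fruit context and a computing context.
vocabulary = {
    "fruit":    frozenset({1, 2, 3, 4, 5}),
    "computer": frozenset({10, 11, 12, 13}),
    "car":      frozenset({1, 20, 21, 22}),
}
apple = frozenset({1, 2, 3, 4, 10, 11, 12})

senses = find_senses(apple, vocabulary)
print(senses)
```

As Webber notes, which senses come out depends entirely on the training data behind the fingerprints; here that data is just the three hand-written vocabulary entries.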

Several intriguing use cases have already arisen. A leading German-English teaching service is using it to tailor learning material for its students; if a student is interested in, say, motorsports, they’ll be supplied with educational texts about Formula One. There’s also interest from companies in the domains of rank analysis and medical documentation analysis.

What’s next for cortical.io? Expanding into different languages. “The algorithm is supposed to work on any language, on any material you provide it as long as it’s sufficient material and as long as it’s evenly spread across the domains you want to cover,” Webber explains. “So we are about to prepare a Spanish, French, German, Dutch and several others if there are enough Wikipedia documents available.”

In the realm of NLP, the work of Hawkins, Webber and cortical.io could represent a dramatic shift away from using statistical analysis to detect patterns, towards fundamentally understanding how we can computationally model language.

This post has been sponsored by cortical.io.

Eileen McNulty-Holmes – Editor

Eileen has five years’ experience in journalism and editing for a range of online publications. She has a degree in English Literature from the University of Exeter, and is particularly interested in big data’s application in humanities. She is a native of Shropshire, United Kingdom.


(Featured image credit: cortical.io)