This year we have seen some interesting use cases in the application of big data analytics in sports. We have already uncovered how it has been used in cricket and baseball, whether we can predict the winner of the World Cup, and how the German football team is using SAP HANA for advanced data analytics. More recently, IBM announced that it will be using cloud technology to enhance user engagement during the Wimbledon Championships in July, while leveraging big data to provide real-time predictions on the winner of a particular match. The question, however, still remains: can big data analytics be used to predict outcomes in an industry as volatile and unpredictable as sports? This time, we’ll look at tennis.

At the 1991 Wimbledon Championships, IBM introduced the first courtside technology to track serve speed. The system consisted of two radar guns, or sensors, placed behind opposite baselines; they recorded the speed of the ball, and the results were relayed to commentators during the match. At the time, this technology was genuinely novel: never before had it been possible to measure the speed of a serve and display it so quickly.

Yet technology has advanced so quickly that what would once have been considered innovative is now regarded as elementary. Today, sensors can track not only the speed of a player's serve but also its direction, the return of serve, the number of rally points, how the point was won or lost, and "how far the other player has to go to retrieve it and where on the racket they make contact to hit it back." While there are only a limited number of shot types possible on a tennis court (down the line, cross court, drop shot, lob, backhand, forehand, and volley), the information these sensors accumulate quickly builds into huge datasets.

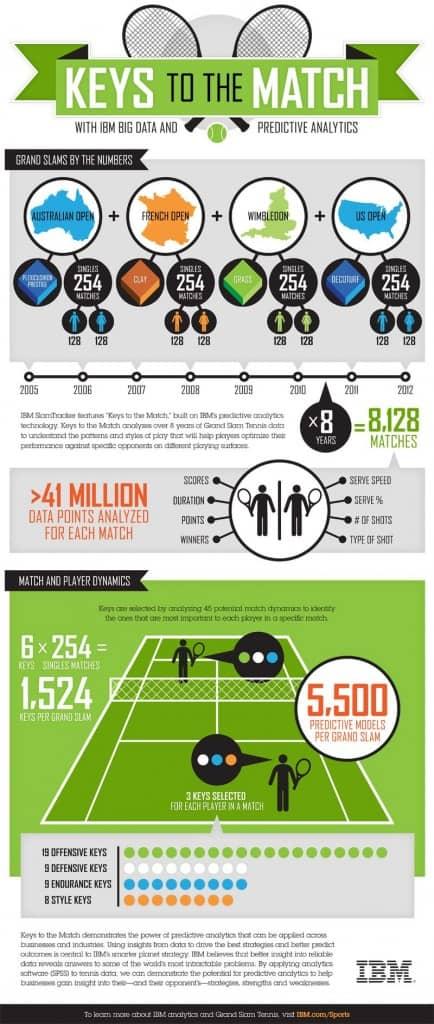

For example, IBM's SlamTracker has used this reserve of data to make real-time predictions about how a player can increase his or her chances of winning a match. It does so with the help of a system called "IBM Keys to the Match," which sorts through 8 years of Grand Slam tennis data (precisely 8,128 matches and 41 billion data points) to analyze each competitor's historical performance in head-to-heads, along with statistics about their particular playing styles. (Novak Djokovic, for example, has a winning percentage that is negatively correlated with his first-serve percentage.) With this insight into the players' prior successes and patterns of play, SlamTracker determines "what the data indicates each player must do to do well in the match," which it can then compare with real-time data (such as the number of aces, or serve speed) to gauge how close each player is to victory.

Nevertheless, IBM's SlamTracker is not without its skeptics. In a 2013 Wall Street Journal blog post, Carl Bialik argued that IBM's "Keys to the Match" were much less predictive than they were purported to be, with the loser of a match achieving "as many keys as the winner, or more" up to one-third of the time. Bialik points out that simpler approaches, such as that of Jeff Sackmann (author of the tennis-data website Tennis Abstract and the blog Heavy Topspin), achieved comparable, if not more accurate, results. Unlike IBM's vast array of over 156 different keys identified across myriad tournaments and players, Sackmann used the same three keys for every player and every match: winning more than 74% of first-serve points, winning more than 52% of second-serve points, and a first-serve percentage above 62%. The results? As Bialik writes, "There have been more matches in which the winner has achieved more Sackmann keys than his opponent, than matches in which the winner has checked off more IBM boxes."
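Sackmann's three keys are simple enough to write down directly. The Python sketch below is a hypothetical illustration, not Sackmann's own code: the `ServeStats` fields, the function names and the sample numbers are assumptions, and "better than" is read as strictly greater than the quoted thresholds. `compare_keys` classifies a finished match the way Bialik's comparison does, by asking whether the winner checked off more keys than the loser.

```python
from dataclasses import dataclass


@dataclass
class ServeStats:
    """One player's serve statistics for a single match, as fractions between 0 and 1."""
    first_serve_in: float           # first-serve percentage
    first_serve_points_won: float   # share of first-serve points won
    second_serve_points_won: float  # share of second-serve points won


# Thresholds quoted in the article; "better than" is assumed to mean strictly greater.
FIRST_SERVE_POINTS_WON_MIN = 0.74
SECOND_SERVE_POINTS_WON_MIN = 0.52
FIRST_SERVE_IN_MIN = 0.62


def sackmann_keys(s: ServeStats) -> int:
    """Count how many of the three fixed keys a player achieved (0 to 3)."""
    return sum([
        s.first_serve_points_won > FIRST_SERVE_POINTS_WON_MIN,
        s.second_serve_points_won > SECOND_SERVE_POINTS_WON_MIN,
        s.first_serve_in > FIRST_SERVE_IN_MIN,
    ])


def compare_keys(winner: ServeStats, loser: ServeStats) -> str:
    """Say whether the match winner achieved more, equal or fewer keys than the loser."""
    w, l = sackmann_keys(winner), sackmann_keys(loser)
    if w > l:
        return "winner_more"
    if w == l:
        return "tie"
    return "loser_more"


def tally(matches):
    """Count outcomes over (winner_stats, loser_stats) pairs from completed matches."""
    counts = {"winner_more": 0, "tie": 0, "loser_more": 0}
    for winner, loser in matches:
        counts[compare_keys(winner, loser)] += 1
    return counts


if __name__ == "__main__":
    # Invented figures for illustration only; not real match data.
    winner = ServeStats(first_serve_in=0.65, first_serve_points_won=0.78, second_serve_points_won=0.55)
    loser = ServeStats(first_serve_in=0.58, first_serve_points_won=0.70, second_serve_points_won=0.48)
    print(compare_keys(winner, loser))  # winner_more
```

Running `tally` over a set of completed matches and comparing `winner_more` with `loser_more` gives the kind of head-to-head count Bialik used; using one fixed rule for every match is what keeps this approach so simple.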

Why the discrepancy? According to Bialik, the problem may simply be that IBM has "overfit its model" by employing too many prediction schemes, a statistically dangerous practice that often produces results that arise by chance rather than from genuine forecasting power. Sackmann also suggests that IBM's keys betray a certain ignorance of the game itself: "many of the IBM keys, such as average first serve speeds below a given number of miles per hour, or set lengths measured in minutes, reek of domain ignorance."
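The overfitting concern can be illustrated with a toy simulation. The sketch below is an assumption-laden illustration, not a model of IBM's system: every hypothetical "key" is pure random noise unrelated to the match outcome, the 156 keys echo the figure quoted above, and the 20-match training sample and 10,000-match test sample are arbitrary choices. Picking whichever key best fits the small sample makes it look predictive in-sample, while on fresh matches its accuracy should hover around 50%, no better than a coin flip.

```python
import random


def accuracy(key, outcomes):
    """Share of matches where the key's True/False value agrees with the outcome."""
    return sum(k == o for k, o in zip(key, outcomes)) / len(outcomes)


def simulate_overfitting(n_keys=156, n_train=20, n_test=10_000, seed=0):
    """Return (in-sample, out-of-sample) accuracy of the best-fitting noise key."""
    rng = random.Random(seed)
    # Outcome per match: True if player A wins. A fair coin, so nothing can predict it.
    train_outcomes = [rng.random() < 0.5 for _ in range(n_train)]
    # Each hypothetical key is a random True/False per match, independent of the outcome.
    train_keys = [[rng.random() < 0.5 for _ in range(n_train)] for _ in range(n_keys)]

    # Model selection on a small sample: keep the key that best "explains" past results.
    best = max(train_keys, key=lambda k: accuracy(k, train_outcomes))
    in_sample = accuracy(best, train_outcomes)

    # New matches: the chosen key is still noise, so its values there are fresh random draws.
    test_outcomes = [rng.random() < 0.5 for _ in range(n_test)]
    test_key = [rng.random() < 0.5 for _ in range(n_test)]
    out_of_sample = accuracy(test_key, test_outcomes)
    return in_sample, out_of_sample


if __name__ == "__main__":
    in_sample, out_of_sample = simulate_overfitting()
    print(f"best key in-sample: {in_sample:.0%}, on new matches: {out_of_sample:.0%}")
```

The gap between the two numbers is the point: with many candidate keys and few matches, some key will fit past results well by chance alone, which is the risk Bialik describes.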

While data analytics in sports is undeniably interesting, its predictive capabilities have yet to be proven. As Bialik and Sackmann note, too much data can often lead to inaccurate predictions (in other words, more data does not necessarily mean more accuracy), and a field like sports is far too nuanced to be reduced to such forecasts, especially by those who may not fully understand its subtleties. Which side of the debate you fall on depends on how much confidence you have in the data, or perhaps in those who are collecting it.

Furhaad worked as a researcher/writer for The Times of London and is a regular contributor to the Huffington Post. He studied philosophy on a dual programme with the University of York (U.K.) and Columbia University (U.S.). He is a native of London, United Kingdom.

(Image Credit: Flickr)